Beyond PHP - it's not (just) about the code

-

Upload

wim-godden -

Category

Technology

-

view

7.596 -

download

3

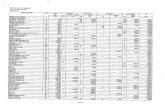

description

Transcript of Beyond PHP - it's not (just) about the code

1. Beyond PHP: It's not (just) about the code
- Wim Godden
- Cu.be Solutions

2. Who am I?
- Wim Godden (@wimgtr)
- Founder of Cu.be Solutions (http://cu.be)
- Open source developer since 1997
- Developer of OpenX
- Zend Certified Engineer
- Zend Framework Certified Engineer
- MySQL Certified Developer
- Speaker at PHP and open source conferences

3. Cu.be Solutions?
- Open source consultancy
- PHP-centered
- High-speed redundant network (BGP, OSPF, VRRP)
- High-scalability development
- Nginx + extensions
- MySQL Cluster
- Projects:
  - mostly IT & telecom companies
  - lots of public-facing apps/sites

4. Who are you?
- Developers?
- Anyone set up a MySQL master-slave?
- Anyone set up a site/app on separate web and database servers? How much traffic is there between them?

5. The topic
- Things we take for granted
- Famous last words: "It should work just fine"
- Works fine today → might fail tomorrow
- Most common mistakes:
  - PHP code
  - PHP ecosystem
- How-to & how-NOT-to

6. It starts with... code!
- First up: the database

7. Database queries: complexity

```sql
SELECT DISTINCT n.nid, n.uid, n.title, n.type, e.event_start, e.event_start AS event_start_orig,
  e.event_end, e.event_end AS event_end_orig, e.timezone, e.has_time, e.has_end_date,
  tz.offset AS offset, tz.offset_dst AS offset_dst, tz.dst_region, tz.is_dst,
  e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND AS event_start_utc,
  e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND AS event_end_utc,
  e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_start_user,
  e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_end_user,
  e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_start_site,
  e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_end_site,
  tz.name AS timezone_name
FROM node n
INNER JOIN event e ON n.nid = e.nid
INNER JOIN event_timezones tz ON tz.timezone = e.timezone
INNER JOIN node_access na ON na.nid = n.nid
LEFT JOIN domain_access da ON n.nid = da.nid
LEFT JOIN node i18n ON n.tnid > 0 AND n.tnid = i18n.tnid AND i18n.language = 'en'
WHERE (na.grant_view >= 1 AND ((na.gid = 0 AND na.realm = 'all')))
  AND ((da.realm = "domain_id" AND da.gid = 4) OR (da.realm = "domain_site" AND da.gid = 0))
  AND (n.language = 'en' OR n.language = '' OR n.language IS NULL OR n.language = 'is' AND i18n.nid IS NULL)
  AND (n.status = 1 AND ((e.event_start >= '2010-01-31 00:00:00' AND …
```

8. … 2 order by qty
- Aggregate index on (status, qty)? No: the range selection stops use of the aggregate index
- Separate index on status and qty

9. Database: indexing
- Indexes make the database faster → let's index everything!
- DON'T:
  - Insert/update/delete → index modification
  - Each query → evaluation of all indexes
- "Relational schema design is based on data, but index design is based on queries" (Bill Karwin, Percona)

10. Databases: detecting problematic queries
- Slow query log: `SET GLOBAL slow_query_log = ON;`
- Queries not using indexes: in my.cnf/my.ini, `log_queries_not_using_indexes`
- General query log: `SET GLOBAL general_log = ON;` → turn it off quickly!
- Percona Toolkit (formerly Maatkit): pt-query-digest

11. Databases: pt-query-digest

```
# Profile
# Rank Query ID           Response time    Calls R/Call  Apdx V/M   Item
# ==== ================== ================ ===== ======= ==== ===== ==========
#    1 0x543FB322AE4330FF 16526.2542 62.0%  1208 13.6806 1.00  0.00 SELECT output_option
#    2 0xE78FEA32E3AA3221     0.8312 10.3%  6412  0.0001 1.00  0.00 SELECT poller_output poller_item
#    3 0x211901BF2E1C351E     0.6811  8.4%  6416  0.0001 1.00  0.00 SELECT poller_time
#    4 0xA766EE8F7AB39063     0.2805  3.5%   149  0.0019 1.00  0.00 SELECT wp_terms wp_term_taxonomy wp_term_relationships
#    5 0xA3EEB63EFBA42E9B     0.1999  2.5%    51  0.0039 1.00  0.00 SELECT UNION wp_pp_daily_summary wp_pp_hourly_summary
#    6 0x94350EA2AB8AAC34     0.1956  2.4%    89  0.0022 1.00  0.01 UPDATE wp_options
# MISC 0xMISC                 0.8137 10.0%  3853  0.0002   NS   0.0
```
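The slow query log and pt-query-digest show what MySQL saw. As a complementary, application-side check (a different technique from the one on the slides), each query call can also be timed in PHP. A minimal sketch, assuming your database layer lets you wrap every query in a callable; `timedQuery()` and its `$thresholdMs` parameter are hypothetical names, and the `usleep()` call only stands in for a real query.

```php
<?php

/**
 * Hypothetical helper, not part of any library: runs $run (your actual
 * query call), measures wall-clock time with hrtime(), and appends an entry
 * to $slowLog when the call takes at least $thresholdMs milliseconds.
 */
function timedQuery(string $label, callable $run, float $thresholdMs, array &$slowLog): mixed
{
    $start = hrtime(true);                       // monotonic clock, in nanoseconds
    try {
        return $run();
    } finally {
        // Runs even if the query throws, so failing queries are timed too.
        $elapsedMs = (hrtime(true) - $start) / 1e6;
        if ($elapsedMs >= $thresholdMs) {
            $slowLog[] = ['label' => $label, 'ms' => round($elapsedMs, 2)];
        }
    }
}

// Stand-in for a real query: sleeps for 20 ms and returns a value.
$slowLog = [];
$total = timedQuery('orders:monthly-report', function () {
    usleep(20_000);
    return 42;
}, 10.0, $slowLog);
```

In a real application the entries would be written to a log file rather than collected in an array.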
12. Databases: pt-query-digest

```
# Query 2: 0.26 QPS, 0.00x concurrency, ID 0x92F3B1B361FB0E5B at byte 14081299
# This item is included in the report because it matches --limit.
# Scores: Apdex = 1.00 [1.0], V/M = 0.00
# Query_time sparkline: | _^ |
# Time range: 2011-12-28 18:42:47 to 19:03:10
# Attribute    pct   total     min     max     avg     95%  stddev  median
# ============ === ======= ======= ======= ======= ======= ======= =======
# Count          1     312
# Exec time     50      4s     5ms    25ms    13ms    20ms     4ms    12ms
# Lock time      3    32ms    43us   163us   103us   131us    19us    98us
# Rows sent     59  62.41k     203     231  204.82  202.40    3.99  202.40
# Rows examine  13  73.63k     238     296  241.67  246.02   10.15  234.30
# Rows affecte   0       0       0       0       0       0       0       0
# Rows read     59  62.41k     203     231  204.82  202.40    3.99  202.40
# Bytes sent    53  24.85M  46.52k  84.36k  81.56k  83.83k   7.31k  79.83k
# Merge passes   0       0       0       0       0       0       0       0
# Tmp tables     0       0       0       0       0       0       0       0
# Tmp disk tbl   0       0       0       0       0       0       0       0
# Tmp tbl size   0       0       0       0       0       0       0       0
# Query size     0  21.63k      71      71      71      71       0      71
# InnoDB:
# IO r bytes     0       0       0       0       0       0       0       0
# IO r ops       0       0       0       0       0       0       0       0
# IO r wait      0       0       0       0       0       0       0       0
# pages distin  40  11.77k      34      44   38.62   38.53    1.87   38.53
# queue wait     0       0       0       0       0       0       0       0
# rec lock wai   0       0       0       0       0       0       0       0
# Boolean:
# Full scan    100% yes,   0% no
# String:
# Databases    wp_blog_one (264/84%), wp_blog_tw (36/11%)... 1 more
# Hosts
# InnoDB trxID 86B40B (1/0%), 86B430 (1/0%), 86B44A (1/0%)... 309 more
# Last errno   0
# Users        wp_blog_one (264/84%), wp_blog_two (36/11%)... 1 more
# Query_time distribution
#   1us
#  10us
# 100us
#   1ms
#  10ms  ################################################################
# 100ms
#    1s
#  10s+
# Tables
#    SHOW TABLE STATUS FROM `wp_blog_one` LIKE 'wp_options'\G
#    SHOW CREATE TABLE `wp_blog_one`.`wp_options`\G
# EXPLAIN /*!50100 PARTITIONS*/
SELECT option_name, option_value FROM wp_options WHERE autoload = 'yes'\G
```

13. Databases: pt-query-digest → Digest UI

14. Databases: next step: EXPLAIN
- `EXPLAIN` → "How will MySQL execute the query?"
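EXPLAIN output can also be checked from PHP, for example in a test suite. A minimal sketch, assuming the rows were fetched as associative arrays keyed by MySQL's EXPLAIN column names (`table`, `type`, `key`, `rows`, `Extra`); `explainWarnings()` is a hypothetical helper that applies the talk's rules of thumb (type `ALL`, no key used, `Using filesort`).

```php
<?php

/**
 * Hypothetical helper (not part of PDO or MySQL): flags EXPLAIN rows that
 * show a full table scan, no usable index, or a filesort. Rows would come
 * from e.g. $pdo->query('EXPLAIN SELECT ...')->fetchAll(PDO::FETCH_ASSOC).
 */
function explainWarnings(array $rows): array
{
    $warnings = [];
    foreach ($rows as $row) {
        $table = (string) ($row['table'] ?? '?');
        $extra = (string) ($row['Extra'] ?? '');

        if (($row['type'] ?? null) === 'ALL') {         // full table scan
            $warnings[] = sprintf('%s: full table scan (%s rows)', $table, $row['rows'] ?? '?');
        }
        if (($row['key'] ?? null) === null) {           // optimizer picked no index
            $warnings[] = "$table: no index used";
        }
        if (stripos($extra, 'Using filesort') !== false) {
            $warnings[] = "$table: sorting without an index (filesort)";
        }
    }
    return $warnings;
}
```

Fed the two EXPLAIN outputs from slide 15, it would flag the `employees` query twice (full scan, no key) and report nothing for the `itdevice`/`devicetype` primary-key lookups.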
15. Databases: next step: EXPLAIN

```
+----+-------------+-----------+------+---------------+------+---------+------+--------+-------------+
| id | select_type | table     | type | possible_keys | key  | key_len | ref  | rows   | Extra       |
+----+-------------+-----------+------+---------------+------+---------+------+--------+-------------+
|  1 | SIMPLE      | employees | ALL  | NULL          | NULL | NULL    | NULL | 299809 | Using where |
+----+-------------+-----------+------+---------------+------+---------+------+--------+-------------+

+----+-------------+------------+-------+-------------------------------+---------+---------+-------+------+-------+
| id | select_type | table      | type  | possible_keys                 | key     | key_len | ref   | rows | Extra |
+----+-------------+------------+-------+-------------------------------+---------+---------+-------+------+-------+
|  1 | SIMPLE      | itdevice   | const | PRIMARY,fk_device_devicetype1 | PRIMARY | 4       | const |    1 |       |
|  1 | SIMPLE      | devicetype | const | PRIMARY                       | PRIMARY | 4       | const |    1 |       |
+----+-------------+------------+-------+-------------------------------+---------+---------+-------+------+-------+
```

16. Databases: next step: EXPLAIN
- `EXPLAIN` → "How will MySQL execute the query?"
- Shows:
  - Indexes available
  - Indexes used (do you see one?)
  - Number of rows scanned
  - Type of lookup: system, const and ref = good; ALL = bad
  - Extra info: "Using index" = good; "Using filesort" = usually bad; "Using where" = bad

17. Databases: when to use / not to use
- Good at:
  - Fetching data
  - Storing data
  - Searching through data
- Bad at:
  - `` select `someField` from `bigTable` where crc32(`field`) = "something" `` → full table scan

18. For / foreach

```php
// 1 query for the customers, then 1 more query for every customer
$customers = CustomerQuery::create()->filterByState('SC')->find();
foreach ($customers as $customer) {
    $contacts = ContactsQuery::create()
        ->filterByCustomerid($customer->getId())
        ->find();
    foreach ($contacts as $contact) {
        doSomestuffWith($contact);
    }
}
```
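The nested loop above runs one contacts query per customer. Besides a join, another common fix is to collect the customer ids first, fetch all contacts with a single `IN (...)` query, and group the rows in PHP. A minimal sketch; the helper names are hypothetical, and the table and column names (`contact`, `customerid`) are borrowed from the join on slide 19.

```php
<?php

/**
 * Hypothetical helper: builds one "IN (?, ?, ...)" query for all customers
 * instead of one query per customer. Returns [sql, params] for a PDO
 * prepared statement, or [null, []] when there is nothing to fetch.
 */
function contactsForCustomersQuery(array $customerIds): array
{
    $ids = array_values(array_unique(array_map('intval', $customerIds)));
    if ($ids === []) {
        return [null, []];
    }
    $placeholders = implode(', ', array_fill(0, count($ids), '?'));
    return ["SELECT * FROM contact WHERE customerid IN ($placeholders)", $ids];
}

/** Groups the flat result rows by customer id; the nested loop then runs in PHP only. */
function groupByCustomer(array $rows): array
{
    $grouped = [];
    foreach ($rows as $row) {
        $grouped[$row['customerid']][] = $row;
    }
    return $grouped;
}

// Usage sketch (assumes an open PDO connection in $pdo):
// [$sql, $params] = contactsForCustomersQuery($customerIds);
// if ($sql !== null) {
//     $stmt = $pdo->prepare($sql);
//     $stmt->execute($params);
//     $contactsByCustomer = groupByCustomer($stmt->fetchAll(PDO::FETCH_ASSOC));
// }
```

This turns 1 + N queries into 2, regardless of the number of customers.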
19. Joins

```php
// One query: the join happens in the database, not in a PHP loop
$contacts = $db->query("select contact.*
                        from customer
                        join contact on contact.customerid = customer.id
                        where customer.state = 'SC'");
while ($contact = $contacts->fetch_assoc()) {
    doSomeStuffWith($contact);
}
```

or the ORM equivalent

20. Better...
- 10001 → 1 query
- Sadly: people still produce code with query loops
- Usually:
  - Growth not anticipated
  - Internal app → public app

21. The origins of this talk
- Customers:
  - Projects we built
  - Projects we didn't build, but got pulled into: fixes, changes, infrastructure migration
- 15 years of "how to cause mayhem with a few lines of code"

22. Client X
- Job search site
- Monitor job views:
  - Daily hits
  - Weekly hits
  - Monthly hits
  - Which user saw which job

23. Client X
- Originally: counted when a user viewed the job details
- Now: counted when a job appears in a search result
- Search for "php" → 50 jobs = 50 jobs to be updated:
  - 50 updates for shown_today
  - 50 updates for shown_week
  - 50 updates for shown_month
  - 50 inserts for shown_user

24. Client X: the code

```php
// Four queries for every job in the search result
foreach ($jobs as $job) {
    $db->query("insert into shown_today(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_week(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_month(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_user(jobId, userId, `when`) values (" . $job['id'] . ", " . $user['id'] . ", now())");
}
```

25. Client X: the graph

26. Client X: the numbers
- 600-1000 updates/sec (peaks up to 1600)
- 400-1000 updates/sec (peaks up to 2600)
- 16-core machine

27. Client X: panic!
- Mail: "MySQL slave is more than 5 minutes behind master"
- We set it up → who did they blame?
- Wait a second!

28. Client X: what's causing those peaks?

29. Client X: possible cause?
- Code changes? According to the developers: none
- Action: turn on the general log, analyze with pt-query-digest → 50+-fold increase in queries
- Developers: "Oops, we did make a change"
- After 3 days: 2.5 days behind
- Every hour: 50 min extra lag
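The general log plus pt-query-digest found the 50-fold jump only after the slave had fallen behind. A different technique, not from the talk and offered only as a sketch: fingerprint every query the application sends during one request and flag fingerprints that repeat many times, which is exactly what a query loop looks like. `fingerprint()` is a rough regex approximation of the normalization pt-query-digest performs, not its actual algorithm, and `QueryLoopDetector` is a hypothetical class you would call from your own database wrapper.

```php
<?php

/**
 * Rough query fingerprint: lower-case, replace 'string' and numeric literals
 * with "?", collapse whitespace. A simplified approximation only.
 */
function fingerprint(string $sql): string
{
    $sql = strtolower(trim($sql));
    $sql = preg_replace("/'(?:[^'\\\\]|\\\\.)*'/", '?', $sql);  // 'string' literals
    $sql = preg_replace('/\b\d+\b/', '?', $sql);               // numeric literals
    return preg_replace('/\s+/', ' ', $sql);                   // whitespace
}

/** Hypothetical per-request detector: counts how often each fingerprint runs. */
final class QueryLoopDetector
{
    /** @var array<string, int> */
    private array $seen = [];

    public function record(string $sql): void
    {
        $fp = fingerprint($sql);
        $this->seen[$fp] = ($this->seen[$fp] ?? 0) + 1;
    }

    /** Fingerprints executed more than $threshold times in this request. */
    public function repeated(int $threshold = 10): array
    {
        return array_filter($this->seen, fn (int $n) => $n > $threshold);
    }
}
```

Logging `repeated()` at the end of each request in a development or staging environment would have shown the `shown_today` insert running once per search result before the change reached production.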
30. Client X: but why is the slave lagging?
- Master: file master-bin-xxxx.log, binlog dump thread
- Slave: slave I/O thread, file master-bin-xxxx.log, slave SQL thread
- By default the slave SQL thread replays all of the master's writes in a single thread, one after another

31. Client X: master

32. Client X: slave

33. Client X: fix?
- Back to the loop from slide 24: four single-row queries per job in the search result

34. Client X: the code change

```php
// One multi-row insert for all jobs instead of one query per job
$todayQuery = "insert into shown_today(jobId, number) values ";
foreach ($jobs as $job) {
    $todayQuery .= "(" . $job['id'] . ", 1),";
}
$todayQuery = substr($todayQuery, 0, -1);   // drop the trailing comma
$todayQuery .= " on duplicate key update number = number + 1";
$db->query($todayQuery);
```

- Careful: max_allowed_packet!
- Result: `insert into shown_today(jobId, number) values (5, 1), (8, 1), (12, 1), (18, 1), ...`

35. Client X: the chosen solution

```php
$db->autocommit(false);   // everything below runs in one transaction
foreach ($jobs as $job) {
    $db->query("insert into shown_today(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_week(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_month(jobId, number) values (" . $job['id'] . ", 1)
                on duplicate key update number = number + 1");
    $db->query("insert into shown_user(jobId, userId, `when`) values (" . $job['id'] . ", " . $user['id'] . ", now())");
}
$db->commit();            // one commit for the whole search result
```

36. Client X: conclusion
- For loops are bad (we already knew that)
- Add master/slave and it gets much worse
- Use transactions: they provide a huge performance increase
- Result: the slave caught up 5 days later
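The multi-row insert from slide 34 and the transaction from slide 35 can be combined, with the ids bound as parameters instead of concatenated into the SQL. A minimal sketch under these assumptions: `buildCounterUpserts()` is a hypothetical helper, the `shown_*` tables have a unique key on `jobId` (which `ON DUPLICATE KEY UPDATE` relies on), and `$maxRows` is the knob that keeps each statement below `max_allowed_packet`.

```php
<?php

/**
 * Hypothetical helper: turns the job ids from one search result into
 * multi-row "INSERT ... ON DUPLICATE KEY UPDATE" statements (MySQL syntax),
 * at most $maxRows rows each. Returns [sql, params] pairs for PDO prepared
 * statements; the ids are bound, not concatenated.
 */
function buildCounterUpserts(string $table, array $jobIds, int $maxRows = 500): array
{
    // Table names cannot be bound as parameters, so only allow plain identifiers.
    if (!preg_match('/^[A-Za-z_][A-Za-z0-9_]*$/', $table)) {
        throw new InvalidArgumentException("Unsafe table name: $table");
    }

    $statements = [];
    foreach (array_chunk(array_map('intval', $jobIds), max(1, $maxRows)) as $chunk) {
        $values = implode(', ', array_fill(0, count($chunk), '(?, 1)'));
        $sql = "INSERT INTO `$table` (jobId, number) VALUES $values"
             . ' ON DUPLICATE KEY UPDATE number = number + 1';
        $statements[] = [$sql, $chunk];
    }
    return $statements;
}
```

With a PDO connection in `$pdo`, one way to use it is to call `$pdo->beginTransaction()`, run `$pdo->prepare($sql)->execute($params)` for every pair returned for `shown_today`, `shown_week` and `shown_month`, and finish with `$pdo->commit()`. The default of 500 rows is an arbitrary starting point, not a tuned value.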
37. Database: network
- Customer Y
- Top 10 site in Belgium
- Growing rapidly
- At peak traffic:
  - Inexplicable latency on the database
  - Load on webservers: minimal
  - Load on database servers: acceptable

38. Client Y: the network

39. Client Y: the network
- 60GB, 700GB, 700GB

40. Client Y: network overload
- Cause: Drupal hooks → retrieving data that was not needed
- Only load data you actually need
- Don't know at the start? → Use lazy loading
- Caching:
  - Same story
  - Memcached/Redis are fast
  - But: the data still needs to cross the network

41. Network trouble: more than just traffic
- Customer Z
- 150,000 visits/day
- News ticker:
  - XML feed from another site (owned by the same customer)
  - Cached for 15 min

42. Customer Z: fetching the feed

```php
if (filectime(APP_DIR . '/tmp/ScrambledSiteName.xml') < time() - 900) {
    unlink(APP_DIR . '/tmp/ScrambledSiteName.xml');
    file_put_contents(APP_DIR . '/tmp/ScrambledSiteName.xml',
        file_get_contents('http://www.scrambledsitename.be/xml/feed.xml'));
}
$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/ScrambledSiteName.xml');
```

What's wrong with this code?

43-44. Customer Z: no feed without the source
- Feed source

45. Customer Z: timeout
- default_socket_timeout: 60 sec by default
- Each visitor: 60 sec wait time
- People keep hitting refresh → more load
- More active connections → more load
- Apache hits its maximum number of connections → entire site down

46. Customer Z: timeout fix

```php
// Give up after 5 seconds instead of default_socket_timeout (60 s)
$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/ScrambledSiteName.xml') < time() - 900) {
    unlink(APP_DIR . '/tmp/ScrambledSiteName.xml');
    file_put_contents(APP_DIR . '/tmp/ScrambledSiteName.xml',
        file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context));
}
$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/ScrambledSiteName.xml');
```

47. Customer Z: don't delete from cache

```php
$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/ScrambledSiteName.xml') < time() - 900) {
    // The cached copy is deleted before we know the new download worked:
    // if the feed source is down, the feed ends up empty
    unlink(APP_DIR . '/tmp/ScrambledSiteName.xml');
    file_put_contents(APP_DIR . '/tmp/ScrambledSiteName.xml',
        file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context));
}
$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/ScrambledSiteName.xml');
```

48. Network resources
- Use timeouts for all: fopen, curl, SOAP
- Data source trusted? → Set up a webservice → let them push updates when their feed changes
  - Less load on the data source
  - No timeout issues
- Add logging → early detection

49. Logging
- Logging = good
- Logging in PHP using fopen → bad idea: locking issues
- Use `file_put_contents($filename, $data, FILE_APPEND)`
- For Firefox: FirePHP (add-on for Firebug)
- Debug logging = bad on production
- Watch your logs!
- Don't log on slow disks → I/O bottlenecks

50. File system: I/O bottlenecks
- Causes:
  - Excessive writes (database updates, logfiles, swapping, ...)
  - Excessive reads (non-indexed database queries, swapping, small filesystem cache, ...)
- How to detect? top, iostat
- See iowait? → Stop worrying about PHP, fix the I/O problem!

51. File system
- Worst of all: NFS
- PHP files → lstat calls
- Templates → same
- Sessions → locking issues → corrupt data → store sessions in a database, Memcached, Redis, ...

52. Much more than code
- DB server, webserver, user, network, XML feed

53. Look beyond PHP!

54-55. Questions?

56. Contact
- Twitter: @wimgtr
- Web: http://techblog.wimgodden.be
- Slides: http://www.slideshare.net/wimg
- Rate my talk: http://joind.in/8186

57. Thanks!
- Please... rate my talk: http://joind.in/8186
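Slides 42 to 47 come down to two rules for the Customer Z feed: give up quickly, and never throw away the cached copy before a new one has arrived. A minimal sketch of both, under stated assumptions: `refreshFeedCache()` is a hypothetical helper, it writes to a temporary file and then uses `rename()` so readers never see a half-written or missing file, and it uses `filemtime()` (last modification) rather than the original `filectime()`.

```php
<?php

/**
 * Hypothetical helper: refreshes a cached copy of a remote feed.
 * - the HTTP request gives up after $timeout seconds instead of waiting
 *   for default_socket_timeout (60 s by default)
 * - the cached copy is only replaced after a successful download, so a dead
 *   feed source leaves the stale copy in place
 * Returns true if the cache was refreshed, false if it was still fresh or
 * the download failed.
 */
function refreshFeedCache(string $url, string $cacheFile, int $maxAge = 900, float $timeout = 5.0): bool
{
    clearstatcache(true, $cacheFile);
    if (is_file($cacheFile) && filemtime($cacheFile) >= time() - $maxAge) {
        return false;                                   // still fresh, nothing to do
    }

    $context = stream_context_create(['http' => ['timeout' => $timeout]]);
    $data = @file_get_contents($url, false, $context);  // false on error or timeout
    if ($data === false || $data === '') {
        error_log("Feed refresh failed for $url, keeping the cached copy");
        return false;
    }

    $tmp = $cacheFile . '.' . getmypid() . '.tmp';
    if (file_put_contents($tmp, $data) === false) {
        return false;
    }
    return rename($tmp, $cacheFile);                    // replaces the old copy in one step on POSIX filesystems
}
```

Calling `refreshFeedCache('http://www.scrambledsitename.be/xml/feed.xml', APP_DIR . '/tmp/ScrambledSiteName.xml')` before `ParseXmlFeed()` would take the place of the `if` block on the slides. Concurrent requests that all see an expired cache can still fetch at the same time; a cron job, or the push-based webservice from slide 48, avoids that.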

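Slide 49's logging advice (append with `file_put_contents()`, keep debug logging off in production) fits in a small helper. A minimal sketch; `appendLog()` and its `$debugEnabled` switch are hypothetical, and the log path should point at a local, reasonably fast disk rather than NFS, per slides 49 to 51.

```php
<?php

/**
 * Hypothetical helper: appends one timestamped line to a log file with
 * file_put_contents() and FILE_APPEND instead of keeping an fopen() handle
 * around. Debug messages are dropped unless explicitly enabled.
 */
function appendLog(string $file, string $level, string $message, bool $debugEnabled = false): bool
{
    if ($level === 'debug' && !$debugEnabled) {
        return false;                                   // nothing written
    }
    $line = sprintf("[%s] %s: %s\n", date('Y-m-d H:i:s'), strtoupper($level), $message);
    return file_put_contents($file, $line, FILE_APPEND) !== false;
}
```

Logging failed feed fetches or timeouts this way gives the early detection that slide 48 asks for.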