Bayesian inference and generative models

Klaas Enno Stephan

Lecture as part of "Methods & Models for fMRI data analysis",

University of Zurich & ETH Zurich, 22 November 2016

With slides from and many thanks to:

Kay Brodersen,

Will Penny,

Sudhir Shankar Raman

Slides with a yellow title were not covered in detail in the

lecture and will not be part of the exam.

Why should I know about Bayesian inference?

Because Bayesian principles are fundamental for

• statistical inference in general

• system identification

• translational neuromodeling ("computational assays")

– computational psychiatry

– computational neurology

• contemporary theories of brain function (the "Bayesian brain")

– predictive coding

– free energy principle

– active inference

Bayes' Theorem

Reverend Thomas Bayes, 1702 - 1761

"Bayes' Theorem describes how an ideally rational person processes information." (Wikipedia)

$p(\theta \mid y) = \frac{p(y \mid \theta)\, p(\theta)}{p(y)}$

posterior = likelihood × prior / evidence

Bayesian inference: an animation

Generative models

• specify a joint probability distribution over all variables (observations and parameters)

• require a likelihood function and a prior:

$p(y, \theta \mid m) = p(y \mid \theta, m)\, p(\theta \mid m) \propto p(\theta \mid y, m)$

• can be used to randomly generate synthetic data (observations) by sampling from the prior

– we can check in advance whether the model can explain certain phenomena at all

• model comparison based on the model evidence:

$p(y \mid m) = \int p(y \mid \theta, m)\, p(\theta \mid m)\, d\theta$
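The two uses of a generative model named above (generating synthetic data by sampling from the prior, and computing the model evidence) can be sketched for a toy model. This is a minimal illustration and not part of the lecture: the Gaussian prior and likelihood, their variances, the observed value `y_obs` and all function names are assumptions chosen so that the Monte Carlo estimate can be checked against a closed-form evidence.

```python
import math
import random

# Hypothetical toy model m: theta ~ N(0, 1), y | theta ~ N(theta, 0.5)
PRIOR_MEAN, PRIOR_VAR = 0.0, 1.0
NOISE_VAR = 0.5

def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def sample_joint(rng):
    """Draw (theta, y) from p(y, theta | m) = p(y | theta, m) p(theta | m)."""
    theta = rng.gauss(PRIOR_MEAN, math.sqrt(PRIOR_VAR))
    y = rng.gauss(theta, math.sqrt(NOISE_VAR))
    return theta, y

def evidence_mc(y, rng, n_samples=50_000):
    """Monte Carlo estimate of p(y | m): average likelihood over prior samples."""
    total = 0.0
    for _ in range(n_samples):
        theta = rng.gauss(PRIOR_MEAN, math.sqrt(PRIOR_VAR))
        total += normal_pdf(y, theta, NOISE_VAR)
    return total / n_samples

rng = random.Random(0)
synthetic_data = [sample_joint(rng)[1] for _ in range(5)]  # synthetic observations
y_obs = 0.8                                                # hypothetical measurement
mc_evidence = evidence_mc(y_obs, rng)
# For this conjugate toy model the evidence is known exactly: y ~ N(0, 1 + 0.5)
exact_evidence = normal_pdf(y_obs, PRIOR_MEAN, PRIOR_VAR + NOISE_VAR)
print(synthetic_data, mc_evidence, exact_evidence)
```

Averaging the likelihood over prior samples is the most direct reading of the evidence integral; it is usually too inefficient for realistic models, which is one motivation for approximations to the model evidence.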

Principles of Bayesian inference

• Formulation of a generative model: likelihood function p(y|θ) and prior distribution p(θ)

• Observation of data: measurement data y

• Model inversion – updating one's beliefs: $p(\theta \mid y) \propto p(y \mid \theta)\, p(\theta)$, yielding maximum a posteriori (MAP) estimates and the model evidence

Priors

Priors can be of different sorts, e.g.

• empirical (previous data)

• empirical (estimated from current data using a hierarchical model → "empirical Bayes")

• uninformed

• principled (e.g., positivity constraints)

• shrinkage

Example of a shrinkage prior

Advantages of generative models

• describe how observed data were generated by hidden mechanisms

• we can check in advance whether a model can explain certain phenomena at all

• force us to think mechanistically and be explicit about pathophysiological theories

• formal framework for differential diagnosis: statistical comparison of competing generative models, each of which provides a different explanation of measured brain activity or clinical symptoms

[Figure: mechanism 1 ... mechanism N, each a candidate explanation of data y]

A generative modelling framework for fMRI & EEG:

Dynamic causal modeling (DCM)

Friston et al. 2003, NeuroImage

State equation: $\frac{dx}{dt} = f(x, u, \theta)$

Observation equation: $y = g(x, \theta)$

Forward model: predicting measured activity (EEG, MEG, fMRI)

Model inversion: estimating neuronal mechanisms

dwMRI

Stephan et al. 2009, NeuroImage

Neuronal state equation

$\dot{x} = \left(A + \sum_j u_j B^{(j)}\right) x + C u$

• endogenous connectivity: $A = \frac{\partial \dot{x}}{\partial x}$

• modulation of connectivity: $B^{(j)} = \frac{\partial}{\partial u_j} \frac{\partial \dot{x}}{\partial x}$

• direct inputs: $C = \frac{\partial \dot{x}}{\partial u}$

[Figure: driving input u1(t) and modulatory input u2(t) act on the neuronal states x1(t), x2(t), x3(t); integrating the neuronal state equation and passing each state x through the hemodynamic model λ yields the BOLD signal y of each region.]
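The bilinear neuronal state equation, dx/dt = (A + Σ_j u_j B^(j)) x + C u, can be integrated numerically to see what the three parameter sets do. The sketch below is only an illustration under stated assumptions: a two-region network, hypothetical values for A, B and C, constant inputs, and a simple forward-Euler scheme (not the integration scheme used in DCM software). The function name `simulate_bilinear` is invented for this example.

```python
def simulate_bilinear(A, B, C, inputs, dt=0.01):
    """Forward-Euler integration of dx/dt = (A + sum_j u_j * B[j]) x + C u."""
    n_regions = len(A)
    x = [0.0] * n_regions
    trajectory = []
    for u in inputs:
        n_inputs = len(u)
        dx = []
        for r in range(n_regions):
            # endogenous connectivity plus input-dependent modulation
            coupling = sum(
                (A[r][c] + sum(u[j] * B[j][r][c] for j in range(n_inputs))) * x[c]
                for c in range(n_regions)
            )
            # direct (driving) effect of the inputs
            driving = sum(C[r][j] * u[j] for j in range(n_inputs))
            dx.append(coupling + driving)
        x = [x[r] + dt * dx[r] for r in range(n_regions)]
        trajectory.append(x)
    return trajectory

# Hypothetical two-region example: region 1 projects to region 2
A = [[-1.0, 0.0], [0.5, -1.0]]            # endogenous connectivity
B = [[[0.0, 0.0], [0.0, 0.0]],            # u1 modulates nothing
     [[0.0, 0.0], [0.8, 0.0]]]            # u2 strengthens the 1 -> 2 connection
C = [[1.0, 0.0], [0.0, 0.0]]              # u1 drives region 1 directly
steps = 1000                              # 10 s at dt = 0.01
baseline = simulate_bilinear(A, B, C, [[1.0, 0.0]] * steps)
modulated = simulate_bilinear(A, B, C, [[1.0, 1.0]] * steps)
print(baseline[-1], modulated[-1])
```

With the modulatory input switched on, region 2 settles at a higher activity level (about 1.3 instead of 0.5 for these values) although its driving input is unchanged, which is the effect the B matrices encode.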

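Hemodynamic model

Neuronal states $x_i(t)$ drive the local hemodynamic states $\nu_i(t)$ and $q_i(t)$.

Vasodilatory signal and flow induction (rCBF):

$\dot{s} = x - \kappa s - \gamma (f - 1)$

$\dot{f} = s$

Balloon model: local hemodynamic state equations for changes in volume ($\nu$) and dHb ($q$):

$\tau \dot{\nu} = f - \nu^{1/\alpha}$

$\tau \dot{q} = f\, E(f, E_0)/E_0 - \nu^{1/\alpha}\, q/\nu$

BOLD signal change equation:

$y = V_0 \left[ k_1 (1 - q) + k_2 \left(1 - \frac{q}{\nu}\right) + k_3 (1 - \nu) \right] + e$

with $k_1 = 4.3\, \vartheta_0 E_0 TE$, $k_2 = \varepsilon r_0 E_0 TE$, $k_3 = 1 - \varepsilon$

The output is the BOLD signal y(t).

Stephan et al. 2015, Neuron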

Nonlinear Dynamic Causal Model for fMRI

$\frac{dx}{dt} = \left(A + \sum_{i=1}^{m} u_i B^{(i)} + \sum_{j=1}^{n} x_j D^{(j)}\right) x + C u$

[Figure: simulated neural population activity and fMRI signal change (%) over time for regions x1, x2 and x3, with inputs u1 and u2.]

Stephan et al. 2008, NeuroImage

Generative models as "computational assays"

[Figure: the generative model $p(y \mid \theta, m)\, p(\theta \mid m)$ is inverted to obtain the posterior $p(\theta \mid y, m)$.]

Differential diagnosis based on generative models of

disease symptoms

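[Figure: an observed SYMPTOM y (behaviour or physiology) is explained by competing HYPOTHETICAL MECHANISMS $m_1, \ldots, m_k, \ldots, m_K$, each with likelihood $p(y \mid \theta, m_k)$ and posterior probability $p(m_k \mid y)$.]

$p(m_k \mid y) = \frac{p(y \mid m_k)\, p(m_k)}{\sum_{k'} p(y \mid m_{k'})\, p(m_{k'})}$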

Stephan et al. 2016, NeuroImage

Translational Neuromodeling

• Computational assays: models of disease mechanisms, $\frac{dx}{dt} = f(x, u, \theta)$

• Application to brain activity and behaviour of individual patients

• Detecting physiological subgroups (based on inferred mechanisms): disease mechanism A, disease mechanism B, disease mechanism C

• Individual treatment prediction

Stephan et al. 2015, Neuron

Perception = inversion of a hierarchical generative model

[Figure: hidden causes x (environmental states, others' mental states, bodily states) generate sensory data y; neuronal states represent the inferred causes.]

forward model: $p(y \mid x, m)\, p(x \mid m)$

perception: $p(x \mid y, m)$

Example: free-energy principle and active inference

• Prediction error = sensations – predictions

• Action: change sensory input

• Perception: change predictions

Maximizing the evidence (of the brain's generative model) = minimizing the surprise about the data (sensory inputs).

Friston et al. 2006, J Physiol Paris

How is the posterior computed =

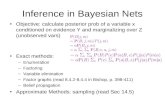

how is a generative model inverted?

Bayesian Inference

Approximate Inference

Variational Bayes

MCMC Sampling

Analytical solutions

How is the posterior computed =

how is a generative model inverted?

• compute the posterior analytically

– requires conjugate priors

– even then often difficult to derive an analytical solution

• variational Bayes (VB)

– often hard work to derive, but fast to compute

– caveat: local optima, potentially inaccurate approximations

• sampling methods (MCMC)

– guaranteed to be accurate in theory (for infinite computation time)

– but may require very long run time in practice

– convergence difficult to prove

Conjugate priors

If the posterior p(θ|y) is in the same family as the prior p(θ), the prior and posterior are called "conjugate distributions", and the prior is called a "conjugate prior" for the likelihood function.

$p(\theta \mid y) = \frac{p(y \mid \theta)\, p(\theta)}{p(y)}$

same form ⇒ analytical expression for posterior

examples (likelihood-prior):

• Normal-Normal

• Normal-inverse Gamma

• Binomial-Beta

• Multinomial-Dirichlet
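As a small worked case of the Binomial-Beta pair: with a Beta(a, b) prior on a success probability and binomial data, the posterior is again a Beta distribution whose parameters are the prior parameters plus the observed counts. The sketch below assumes hypothetical prior parameters and counts; the function name is invented for illustration.

```python
def beta_binomial_update(a, b, successes, failures):
    """Posterior Beta parameters for a Beta(a, b) prior and binomial data."""
    return a + successes, b + failures

# Hypothetical example: Beta(2, 2) prior, 7 successes and 3 failures observed
a_post, b_post = beta_binomial_update(2.0, 2.0, successes=7, failures=3)
posterior_mean = a_post / (a_post + b_post)   # mean of Beta(a, b) is a / (a + b)
print(a_post, b_post, posterior_mean)
```

No integration is needed: the update is pure bookkeeping on the sufficient statistics, which is what makes conjugate priors attractive when they are available.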

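Posterior mean & variance of univariate Gaussians

Likelihood & prior:

$p(y \mid \theta) = N(\theta, \sigma_e^2)$

$p(\theta) = N(\mu_p, \sigma_p^2)$

Posterior: $p(\theta \mid y) = N(\mu, \sigma^2)$

$\frac{1}{\sigma^2} = \frac{1}{\sigma_e^2} + \frac{1}{\sigma_p^2}$

$\mu = \sigma^2 \left( \frac{1}{\sigma_e^2}\, y + \frac{1}{\sigma_p^2}\, \mu_p \right)$

Posterior mean = variance-weighted combination of prior mean and data mean

[Figure: prior, likelihood and posterior densities, with the datum y marked]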

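Same thing – but expressed as precision weighting

Likelihood & prior:

$p(y \mid \theta) = N(\theta, \lambda_e^{-1})$

$p(\theta) = N(\mu_p, \lambda_p^{-1})$

Posterior: $p(\theta \mid y) = N(\mu, \lambda^{-1})$

$\lambda = \lambda_e + \lambda_p$

$\mu = \frac{\lambda_e}{\lambda}\, y + \frac{\lambda_p}{\lambda}\, \mu_p$

Relative precision weighting

[Figure: prior, likelihood and posterior densities, with the datum y marked]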
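The precision-weighted update for a univariate Gaussian prior and likelihood fits in a few lines: the posterior precision is the sum of the two precisions, and the posterior mean weights the datum and the prior mean by their relative precisions. The numbers and the function name below are assumptions for illustration only.

```python
def gaussian_posterior(y, var_e, mu_p, var_p):
    """Posterior mean and variance for likelihood N(theta, var_e) and prior N(mu_p, var_p)."""
    lam_e = 1.0 / var_e            # precision of the likelihood
    lam_p = 1.0 / var_p            # precision of the prior
    lam = lam_e + lam_p            # posterior precision
    mu = (lam_e / lam) * y + (lam_p / lam) * mu_p
    return mu, 1.0 / lam

# Hypothetical example: precise datum (variance 1), vague prior (variance 4)
mu_post, var_post = gaussian_posterior(y=2.0, var_e=1.0, mu_p=0.0, var_p=4.0)
print(mu_post, var_post)
```

Here the datum carries 80% of the total precision, so the posterior mean moves 80% of the way from the prior mean to the datum, and the posterior variance is smaller than both input variances.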

Variational Bayes (VB)

Idea: find an approximate density $q(\theta)$ that is maximally similar to the true posterior $p(\theta \mid y)$.

This is often done by assuming a particular form for $q$ (fixed form VB) and then optimizing its sufficient statistics.

[Figure: within the hypothesis class, the best proxy $q(\theta)$ is the member with the smallest divergence $KL[q \| p]$ from the true posterior $p(\theta \mid y)$.]

Kullback–Leibler (KL) divergence

• non-symmetric measure of the difference between two probability distributions P and Q

• DKL(P‖Q) = a measure of the information lost when Q is used to approximate P: the expected number of extra bits required to code samples from P when using a code optimized for Q, rather than using the true code optimized for P.
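Both properties stated above, the asymmetry and the "extra bits" reading, can be checked numerically for discrete distributions. The two distributions below are arbitrary illustrative choices, and the function name is an assumption.

```python
import math

def kl_divergence_bits(p, q):
    """D_KL(P || Q) in bits for discrete distributions given as probability lists."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

P = [0.5, 0.25, 0.25]
Q = [1 / 3, 1 / 3, 1 / 3]

kl_pq = kl_divergence_bits(P, Q)
kl_qp = kl_divergence_bits(Q, P)

# "Extra bits" interpretation: cross-entropy of P under a code for Q, minus entropy of P
entropy_p = -sum(pi * math.log2(pi) for pi in P)
cross_entropy_pq = -sum(pi * math.log2(qi) for pi, qi in zip(P, Q))
print(kl_pq, kl_qp, cross_entropy_pq - entropy_p)
```

The two directions give different values, and D_KL(P‖Q) equals the difference between the cross-entropy and the entropy of P, which is the expected coding overhead in bits.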

Variational calculus

Standard calculus (Newton, Leibniz, and others)

• functions $f: x \mapsto f(x)$

• derivatives $\frac{df}{dx}$

Example: maximize the likelihood expression $p(y \mid \theta)$ w.r.t. $\theta$

Variational calculus (Euler, Lagrange, and others)

• functionals $F: f \mapsto F(f)$

• derivatives $\frac{dF}{df}$

Example: maximize the entropy $H[p]$ w.r.t. a probability distribution $p(x)$

Leonhard Euler (1707 – 1783), Swiss mathematician, 'Elementa Calculi Variationum'

Variational Bayes

$\ln p(y) = KL[q \| p] + F(q, y)$

• $KL[q \| p]$: divergence ≥ 0 (unknown)

• $F(q, y)$: neg. free energy (easy to evaluate for a given $q$)

$F(q)$ is a functional wrt. the approximate posterior $q(\theta)$.

Maximizing $F(q, y)$ is equivalent to:

• minimizing $KL[q \| p]$

• tightening $F(q, y)$ as a lower bound to the log model evidence

When $F(q, y)$ is maximized, $q(\theta)$ is our best estimate of the posterior.

[Figure: from initialization to convergence, $F(q, y)$ rises towards the fixed value $\ln p(y)$ while $KL[q \| p]$ shrinks.]

Derivation of the (negative) free energy approximation

• See whiteboard!

• (or Appendix to Stephan et al. 2007, NeuroImage 38: 387-401)
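For readers without access to the whiteboard, one standard way to obtain the decomposition ln p(y) = KL[q‖p] + F(q, y) is sketched below. This is a generic textbook derivation, not necessarily the exact steps shown in the lecture; it only uses the product rule p(y, θ) = p(θ|y) p(y) and the fact that q(θ) integrates to one.

```latex
\begin{align}
\ln p(y)
  &= \int q(\theta)\, \ln p(y)\, d\theta \\
  &= \int q(\theta)\, \ln \frac{p(y, \theta)}{p(\theta \mid y)}\, d\theta \\
  &= \int q(\theta)\, \ln \frac{p(y, \theta)}{q(\theta)}\, d\theta
   + \int q(\theta)\, \ln \frac{q(\theta)}{p(\theta \mid y)}\, d\theta \\
  &= F(q, y) + KL\left[ q(\theta) \,\|\, p(\theta \mid y) \right]
\end{align}
```

Because the KL term is non-negative, F(q, y) is a lower bound on ln p(y), and since ln p(y) does not depend on q, raising F necessarily lowers the divergence.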

Mean field assumption

Factorize the approximate posterior $q(\theta)$ into independent partitions:

$q(\theta) = \prod_i q_i(\theta_i)$

where $q_i(\theta_i)$ is the approximate posterior for the $i$-th subset of parameters.

For example, split parameters and hyperparameters into $\theta_1$ and $\theta_2$, with factors $q(\theta_1)$ and $q(\theta_2)$.

Jean Daunizeau, www.fil.ion.ucl.ac.uk/~jdaunize/presentations/Bayes2.pdf

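VB in a nutshell (under mean-field approximation)

Mean field approx. (parameters $\theta$, hyperparameters $\lambda$):

$p(\theta, \lambda \mid y) \approx q(\theta, \lambda) = q(\theta)\, q(\lambda)$

Neg. free-energy approx. to model evidence:

$\ln p(y \mid m) = F + KL[q(\theta, \lambda), p(\theta, \lambda \mid y)]$

$F = \langle \ln p(y \mid \theta, \lambda) \rangle_q - KL[q(\theta, \lambda), p(\theta, \lambda \mid m)]$

Maximise neg. free energy wrt. q = minimise divergence, by maximising variational energies:

$q(\theta) \propto \exp(I_\theta) = \exp\left[ \langle \ln p(y, \theta, \lambda) \rangle_{q(\lambda)} \right]$

$q(\lambda) \propto \exp(I_\lambda) = \exp\left[ \langle \ln p(y, \theta, \lambda) \rangle_{q(\theta)} \right]$

Iterative updating of sufficient statistics of approx. posteriors by gradient ascent.

VB (under mean-field assumption) in more detail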

Model comparison and selection

Given competing hypotheses on structure & functional mechanisms of a system, which model is the best?

For which model m does p(y|m) become maximal?

Which model represents the best balance between model fit and model complexity?

Pitt & Myung (2002) TICS

Model evidence (marginal likelihood):

$p(y \mid m) = \int p(y \mid \theta, m)\, p(\theta \mid m)\, d\theta$

Various approximations, e.g.:

- negative free energy, AIC, BIC

Bayesian model selection (BMS)

The model evidence accounts for both accuracy and complexity of the model:

"If I randomly sampled from my prior and plugged the resulting value into the likelihood function, how close would the predicted data be – on average – to my observed data?"

[Figure: p(y|m) plotted over all possible datasets y]

Ghahramani, 2004

MacKay 1992, Neural Comput.

Penny et al. 2004a, NeuroImage

Model space (hypothesis set) M

Model space M is defined by prior on models.

Usual choice: flat prior over a small set of models:

$p(m) = \begin{cases} 1/|M| & \text{if } m \in M \\ 0 & \text{if } m \notin M \end{cases}$

In this case, the posterior probability of model i is:

$p(m_i \mid y) = \frac{p(y \mid m_i)\, p(m_i)}{\sum_{j=1}^{|M|} p(y \mid m_j)\, p(m_j)} = \frac{p(y \mid m_i)}{\sum_{j=1}^{|M|} p(y \mid m_j)}$

Approximations to the model evidence

Logarithm is a monotonic function: maximizing log model evidence = maximizing model evidence.

Log model evidence = balance between fit and complexity:

$\log p(y \mid m) = accuracy(m) - complexity(m) = \log p(y \mid \theta, m) - complexity(m)$

Akaike Information Criterion:

$AIC = \log p(y \mid \theta, m) - p$

Bayesian Information Criterion:

$BIC = \log p(y \mid \theta, m) - \frac{p}{2} \log N$

where p = no. of parameters and N = no. of data points
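In the form used here, AIC and BIC approximate the log model evidence as the log likelihood at the parameter estimate minus a complexity penalty (p for AIC, (p/2) log N for BIC), so larger values are better. Note that this differs by sign and scale from the "−2 log L + penalty" convention found in many statistics packages. The log likelihood, parameter count and sample size below are hypothetical.

```python
import math

def aic(log_likelihood, n_params):
    """AIC as a log-evidence approximation: accuracy minus number of parameters."""
    return log_likelihood - n_params

def bic(log_likelihood, n_params, n_data):
    """BIC as a log-evidence approximation: accuracy minus (p / 2) * log(N)."""
    return log_likelihood - 0.5 * n_params * math.log(n_data)

# Hypothetical model fit: log likelihood -120 with 5 parameters and 200 data points
aic_value = aic(-120.0, 5)
bic_value = bic(-120.0, 5, 200)
print(aic_value, bic_value)
```

BIC penalises each parameter more heavily than AIC as soon as N exceeds e² (about 7.4 data points), so the two criteria can rank models differently.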

The (negative) free energy approximation F

F is a lower bound on the log model evidence:

$\log p(y \mid m) = F + KL[q(\theta), p(\theta \mid y, m)]$

Like AIC/BIC, F is an accuracy/complexity tradeoff:

$F = \underbrace{\langle \log p(y \mid \theta, m) \rangle_q}_{accuracy} - \underbrace{KL[q(\theta), p(\theta \mid m)]}_{complexity}$

• Log evidence is thus expected log likelihood (wrt. q) plus 2 KL's:

$\log p(y \mid m) = \langle \log p(y \mid \theta, m) \rangle_q - KL[q(\theta), p(\theta \mid m)] + KL[q(\theta), p(\theta \mid y, m)]$

$F = \log p(y \mid m) - KL[q(\theta), p(\theta \mid y, m)] = \langle \log p(y \mid \theta, m) \rangle_q - KL[q(\theta), p(\theta \mid m)]$

The complexity term in F

• In contrast to AIC & BIC, the complexity term of the negative free energy F accounts for parameter interdependencies. Under Gaussian assumptions about the posterior (Laplace approximation):

$KL[q(\theta), p(\theta \mid m)] = \frac{1}{2} \ln |C_\theta| - \frac{1}{2} \ln |C_{\theta|y}| + \frac{1}{2} (\mu_{\theta|y} - \mu_\theta)^T C_\theta^{-1} (\mu_{\theta|y} - \mu_\theta)$

• The complexity term of F is higher

– the more independent the prior parameters (↑ effective DFs)

– the more dependent the posterior parameters

– the more the posterior mean deviates from the prior mean

Bayes factors

To compare two models, we could just compare their log evidences.

But: the log evidence is just some number – not very intuitive!

A more intuitive interpretation of model comparisons is made possible by Bayes factors:

$B_{12} = \frac{p(y \mid m_1)}{p(y \mid m_2)}$ (positive value, $[0; \infty[$)

Kass & Raftery classification:

B12          p(m1|y)    Evidence
1 to 3       50-75%     weak
3 to 20      75-95%     positive
20 to 150    95-99%     strong
≥ 150        ≥ 99%      very strong

Kass & Raftery 1995, J. Am. Stat. Assoc.
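The link between log evidences, Bayes factors and the Kass & Raftery labels can be made concrete with a short helper. The sketch assumes equal prior probabilities for the two models, so that p(m1|y) = B12 / (1 + B12); the log evidence values and function names are hypothetical.

```python
import math

def bayes_factor(log_evidence_1, log_evidence_2):
    """B12 = p(y|m1) / p(y|m2), computed from log evidences."""
    return math.exp(log_evidence_1 - log_evidence_2)

def posterior_prob_m1(b12):
    """p(m1|y) for two models with equal prior probabilities (an assumption)."""
    return b12 / (1.0 + b12)

def kass_raftery_label(b12):
    """Evidence category for m1 following the Kass & Raftery thresholds."""
    if b12 < 1:
        return "favours m2"
    if b12 < 3:
        return "weak"
    if b12 < 20:
        return "positive"
    if b12 < 150:
        return "strong"
    return "very strong"

# Hypothetical log evidences: a difference of 3 in favour of m1
b12 = bayes_factor(-100.0, -103.0)
prob_m1 = posterior_prob_m1(b12)
label = kass_raftery_label(b12)
print(b12, prob_m1, label)
```

A log evidence difference of 3 corresponds to a Bayes factor of about 20 and a posterior model probability of about 95%, which is why a difference of 3 is often used as a threshold for "strong" evidence.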

Fixed effects BMS at group level

Group Bayes factor (GBF) for 1...K subjects:

$GBF_{ij} = \prod_k BF_{ij}^{(k)}$

Average Bayes factor (ABF):

$ABF_{ij} = \sqrt[K]{\prod_k BF_{ij}^{(k)}}$

Problems:

- blind with regard to group heterogeneity

- sensitive to outliers
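The outlier problem of fixed effects BMS is easy to reproduce. In the sketch below, k indexes subjects as in the formulas above; the GBF is the product of subject-wise Bayes factors (a sum in log space) and the ABF is their geometric mean. The log Bayes factors are invented: four subjects mildly favour model j, one subject strongly favours model i.

```python
import math

def group_bayes_factor(log_bfs):
    """GBF_ij: product of subject-wise Bayes factors, computed in log space."""
    return math.exp(sum(log_bfs))

def average_bayes_factor(log_bfs):
    """ABF_ij: K-th root of the GBF, i.e. the geometric mean of the Bayes factors."""
    return math.exp(sum(log_bfs) / len(log_bfs))

# Hypothetical subject-wise log Bayes factors ln BF_ij
log_bfs = [-1.0, -1.0, -1.0, -1.0, 20.0]
gbf = group_bayes_factor(log_bfs)
abf = average_bayes_factor(log_bfs)
n_favouring_j = sum(1 for value in log_bfs if value < 0)
print(gbf, abf, n_favouring_j)
```

The GBF strongly favours model i even though four of the five subjects favour model j, which illustrates why fixed effects BMS is considered blind to group heterogeneity.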

Random effects BMS for heterogeneous groups

• Dirichlet distribution of model probabilities r: $r \sim Dir(r; \alpha)$

• Dirichlet parameters $\alpha$ = "occurrences" of models in the population

• Multinomial distribution of model labels m: $m_n \sim Mult(m; 1, r)$

• Measured data y: $y_n \sim p(y_n \mid m_n)$

• Model inversion by Variational Bayes or MCMC

Stephan et al. 2009, NeuroImage

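Random effects BMS

Stephan et al. 2009, NeuroImage

$p(r \mid \alpha) = Dir(r, \alpha) = \frac{1}{Z(\alpha)} \prod_k r_k^{\alpha_k - 1}$, with $Z(\alpha) = \frac{\prod_k \Gamma(\alpha_k)}{\Gamma\left(\sum_k \alpha_k\right)}$

$p(m_n \mid r) = \prod_k r_k^{m_{nk}}$

$p(y_n \mid m_{nk}) = \int p(y_n \mid \theta)\, p(\theta \mid m_{nk})\, d\theta$

Write down joint probability and take the log:

$p(y, r, m) = p(y \mid m)\, p(m \mid r)\, p(r \mid \alpha_0) = p(r \mid \alpha_0) \prod_n p(y_n \mid m_n)\, p(m_n \mid r)$

$= \frac{1}{Z(\alpha_0)} \left[ \prod_k r_k^{\alpha_{0k} - 1} \right] \prod_n \prod_k \left[ p(y_n \mid m_{nk})\, r_k \right]^{m_{nk}}$

$\ln p(y, r, m) = -\ln Z(\alpha_0) + \sum_k (\alpha_{0k} - 1) \ln r_k + \sum_n \sum_k m_{nk} \left( \log p(y_n \mid m_{nk}) + \ln r_k \right)$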

Mean field approx.: $q(r, m) = q(r)\, q(m)$

Maximise neg. free energy wrt. q = minimise divergence, by maximising variational energies:

$q(r) \propto \exp(I(r))$, with $I(r) = \langle \log p(y, r, m) \rangle_{q(m)}$

$q(m) \propto \exp(I(m))$, with $I(m) = \langle \log p(y, r, m) \rangle_{q(r)}$

Iterative updating of sufficient statistics of approx. posteriors:

$\alpha_0 = [1, \ldots, 1]$, $\alpha = \alpha_0$

Until convergence

$u_{nk} = \exp\left( \ln p(y_n \mid m_{nk}) + \Psi(\alpha_k) - \Psi\left(\sum_k \alpha_k\right) \right)$

$g_{nk} = \frac{u_{nk}}{\sum_k u_{nk}}$

$\beta_k = \sum_n g_{nk}$

$\alpha = \alpha_0 + \beta$

end

$g_{nk} = q(m_{nk} = 1)$: our (normalized) posterior belief that model k generated the data from subject n

$\beta_k = \sum_n g_{nk}$: expected number of subjects whose data we believe were generated by model k
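The iterative scheme for random effects BMS (start from α0 = [1, …, 1], compute u_nk from the log evidences and digamma terms, normalise to g_nk, sum over subjects to β_k, set α = α0 + β, repeat until convergence) can be sketched in plain Python. This is an illustrative reimplementation under stated assumptions, not the SPM routine: the digamma function Ψ is approximated by a recurrence plus asymptotic series to avoid external dependencies, the convergence criterion is a simple threshold on the change in α, and the log evidence matrix is invented.

```python
import math

def digamma(x):
    """Digamma function Psi(x) for x > 0 (recurrence plus asymptotic series)."""
    result = 0.0
    while x < 6.0:                 # Psi(x) = Psi(x + 1) - 1/x
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return result + math.log(x) - 0.5 / x - series

def rfx_bms(log_evidence, tol=1e-8, max_iter=1000):
    """Variational RFX BMS; log_evidence[n][k] = ln p(y_n | m_nk)."""
    n_models = len(log_evidence[0])
    alpha0 = [1.0] * n_models
    alpha = list(alpha0)
    g = []
    for _ in range(max_iter):
        psi_total = digamma(sum(alpha))
        g = []
        for row in log_evidence:
            log_u = [row[k] + digamma(alpha[k]) - psi_total for k in range(n_models)]
            shift = max(log_u)     # subtract the maximum for numerical stability
            u = [math.exp(value - shift) for value in log_u]
            total = sum(u)
            g.append([value / total for value in u])
        beta = [sum(g_n[k] for g_n in g) for k in range(n_models)]
        new_alpha = [a0 + b for a0, b in zip(alpha0, beta)]
        change = max(abs(a - b) for a, b in zip(new_alpha, alpha))
        alpha = new_alpha
        if change < tol:
            break
    return alpha, g

# Hypothetical group: 8 subjects favour model 1, 2 subjects favour model 2
log_evidence = [[-100.0, -103.0]] * 8 + [[-103.0, -100.0]] * 2
alpha, g = rfx_bms(log_evidence)
print(alpha)
```

Because each row of g sums to one, the Dirichlet parameters always sum to the number of subjects plus the number of models, which is a convenient sanity check on any implementation.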

Four equivalent options for reporting model ranking by

random effects BMS

1. Dirichlet parameter estimates $\alpha$

2. expected posterior probability of obtaining the k-th model for any randomly selected subject: $\langle r_k \rangle_q = \frac{\alpha_k}{\alpha_1 + \ldots + \alpha_K}$

3. exceedance probability that a particular model k is more likely than any other model (of the K models tested), given the group data: $\forall k \in \{1 \ldots K\}, \forall j \in \{1 \ldots K \mid j \neq k\}: \varphi_k = p(r_k > r_j \mid y; \alpha)$

4. protected exceedance probability: see below
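Given Dirichlet parameter estimates α, the expected model probabilities follow in closed form, while exceedance probabilities are usually obtained by sampling from the Dirichlet distribution. The sketch below does this with the standard library, using the fact that normalised independent Gamma(α_k, 1) draws are Dirichlet distributed; since only the largest component matters, the normalisation can be skipped. The values α = (11.8, 2.2) are the estimates reported in the hemispheric-interaction example of these slides; the function names, sample size and seed are assumptions.

```python
import random

def expected_model_probabilities(alpha):
    """<r_k> = alpha_k / sum(alpha)."""
    total = sum(alpha)
    return [a / total for a in alpha]

def exceedance_probabilities(alpha, n_samples=100_000, seed=0):
    """Monte Carlo estimate of p(r_k > r_j for all j != k | y; alpha)."""
    rng = random.Random(seed)
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        # Unnormalised Dirichlet sample: independent Gamma(alpha_k, 1) draws
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]

alpha_hat = [11.8, 2.2]
expected_r = expected_model_probabilities(alpha_hat)
exceedance = exceedance_probabilities(alpha_hat)
print(expected_r, exceedance)
```

The expected probability of the first model comes out at about 84.3%, and its exceedance probability is close to one; the two quantities answer different questions (how frequent a model is versus how confident we are that it is the most frequent).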

Example: Hemispheric interactions during vision

[Figure: two DCMs, m1 and m2, with regions MOG, LG and FG, RVF and LVF stimulus inputs, and modulation by LD, LD|RVF and LD|LVF; bar plot of log model evidence differences across subjects.]

Data: Stephan et al. 2003, Science

Models: Stephan et al. 2007, J. Neurosci.

[Figure: posterior density p(r1|y), with p(r1 > 0.5 | y) = 0.997]

$\alpha_1 = 11.8$, $\langle r_1 \rangle = 84.3\%$

$\alpha_2 = 2.2$, $\langle r_2 \rangle = 15.7\%$

$p(r_1 > r_2) = 99.7\%$

Stephan et al. 2009a, NeuroImage

Example: Synaesthesia

• "projectors" experience color externally colocalized with a presented grapheme

• "associators" report an internally evoked association

• across all subjects: no evidence for either model

• but BMS results map precisely onto projectors (bottom-up mechanisms) and associators (top-down)

van Leeuwen et al. 2011, J. Neurosci.

Overfitting at the level of models

• the more models are compared, the higher the risk of overfitting

• solutions:

– regularisation: definition of model space = choosing priors p(m)

– family-level BMS

– Bayesian model averaging (BMA)

posterior model probability:

$p(m \mid y) = \frac{p(y \mid m)\, p(m)}{\sum_m p(y \mid m)\, p(m)}$

BMA:

$p(\theta \mid y) = \sum_m p(\theta \mid y, m)\, p(m \mid y)$

Model space partitioning: comparing model families

• partitioning model space into K subsets or families: $M = \{f_1, \ldots, f_K\}$

• pooling information over all models in these subsets allows one to compute the probability of a model family, given the data: $p(f_k)$

• effectively removes uncertainty about any aspect of model structure, other than the attribute of interest (which defines the partition)

Stephan et al. 2009, NeuroImage

Penny et al. 2010, PLoS Comput. Biol.

Family-level inference: fixed effects

• We wish to have a uniform prior at the family level: $p(f_k) = \frac{1}{K}$

• This is related to the model level via the sum of the priors on models: $p(f_k) = \sum_{m \in f_k} p(m)$

• Hence the uniform prior at the family level is: $\forall m \in f_k: p(m) = \frac{1}{K |f_k|}$

• The probability of each family is then obtained by summing the posterior probabilities of the models it includes: $p(f_k \mid y_{1..N}) = \sum_{m \in f_k} p(m \mid y_{1..N})$

Penny et al. 2010, PLoS Comput. Biol.
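The fixed effects family-level computation amounts to three steps: set model priors p(m) = 1/(K |f_k|) so that families are equally likely a priori, compute posterior model probabilities from the (group) log evidences, and sum them within families. The sketch below assumes a hypothetical partition of four models into two families of unequal size and invented log evidences; all names are illustrative.

```python
import math

def family_model_priors(families):
    """p(m) = 1 / (K * |f_k|) for every model m in family f_k."""
    n_families = len(families)
    return {m: 1.0 / (n_families * len(members))
            for members in families.values() for m in members}

def model_posteriors(log_evidence, priors):
    """p(m | y) from log evidences and model priors (computed stably)."""
    shift = max(log_evidence.values())
    weights = {m: math.exp(log_evidence[m] - shift) * priors[m] for m in log_evidence}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}

def family_posteriors(posteriors, families):
    """p(f_k | y): sum of posterior probabilities of the models in each family."""
    return {name: sum(posteriors[m] for m in members)
            for name, members in families.items()}

# Hypothetical partition and (summed) log evidences
families = {"nonlinear": ["m1", "m2", "m3"], "linear": ["m4"]}
log_evidence = {"m1": -200.0, "m2": -200.0, "m3": -200.0, "m4": -200.0}
priors = family_model_priors(families)
family_post = family_posteriors(model_posteriors(log_evidence, priors), families)
print(priors, family_post)
```

With identical log evidences both families end up at 50%, even though one family contains three models: the family-level prior prevents the larger family from winning merely because it has more members.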

Family-level inference: random effects

• The frequency of a family in the population is given by: $s_k = \sum_{m \in f_k} r_m$

• In RFX-BMS, this follows a Dirichlet distribution, with a uniform prior on the parameters (see above): $p(s) = Dir(\alpha)$

• A uniform prior over family probabilities can be obtained by setting: $\forall m \in f_k: \alpha_{prior}(m) = \frac{1}{|f_k|}$

Stephan et al. 2009, NeuroImage

Penny et al. 2010, PLoS Comput. Biol.

Family-level inference: random effects – a special case

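• When the families are of equal size, one can simply sum the posterior model probabilities within families by exploiting the agglomerative property of the Dirichlet distribution:

$(r_1, r_2, \ldots, r_K) \sim Dir(\alpha_1, \alpha_2, \ldots, \alpha_K)$

$r_1^* = \sum_{k \in N_1} r_k, \quad r_2^* = \sum_{k \in N_2} r_k, \quad \ldots, \quad r_J^* = \sum_{k \in N_J} r_k$

$(r_1^*, r_2^*, \ldots, r_J^*) \sim Dir\left( \alpha_1^* = \sum_{k \in N_1} \alpha_k, \; \alpha_2^* = \sum_{k \in N_2} \alpha_k, \; \ldots, \; \alpha_J^* = \sum_{k \in N_J} \alpha_k \right)$

Stephan et al. 2009, NeuroImage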

Model space partitioning: comparing model families

[Figure: FFX: summed log evidence (rel. to RBML) for the models CBMN, CBMN(ε), CBML, CBML(ε), RBMN, RBMN(ε), RBML and RBML(ε), and log GBF for nonlinear vs. linear models. RFX: Dirichlet parameters alpha per model and per family (nonlinear vs. linear), and posterior density p(r1|y) with p(r1 > 0.5 | y) = 0.986.]

Model space partitioning into nonlinear and linear models:

$\alpha_1^* = \sum_{k=1}^{4} \alpha_k, \quad \alpha_2^* = \sum_{k=5}^{8} \alpha_k$

$\langle r_1 \rangle = 73.5\%$, $\langle r_2 \rangle = 26.5\%$

$p(r_1 > r_2) = 98.6\%$

Stephan et al. 2009, NeuroImage

Mismatch negativity (MMN)

[Figure: ERPs to standards and deviants, with the MMN marked; network of A1, STG and IFG labelled with input, prediction and prediction error.]

Garrido et al. 2009, Clin. Neurophysiol.

• elicited by surprising stimuli (scales with unpredictability)

• reduced in schizophrenic patients

• classical interpretations:

– pre-attentive change detection

– neuronal adaptation

• current theories:

– reflection of (hierarchical) Bayesian inference

• highly relevant for computational assays of glutamatergic and cholinergic transmission:

+ NMDAR

+ ACh (nicotinic & muscarinic)

– 5HT

– DA

[Figure: standards, deviants and MMN under placebo and ketamine]

Schmidt, Diaconescu et al. 2013, Cereb. Cortex

Modelling Trial-by-Trial Changes of the Mismatch Negativity (MMN)

[Figure: ERPs and MMN]

Lieder et al. 2013, PLoS Comput. Biol.

MMN model comparison at multiple levels

• Comparing individual models

• Comparing MMN theories

• Comparing modeling frameworks

Lieder et al. 2013, PLoS Comput. Biol.

Bayesian Model Averaging (BMA)

• abandons dependence of parameter inference on a single model and takes into account model uncertainty

• uses the entire model space considered (or an optimal family of models)

• averages parameter estimates, weighted by posterior model probabilities

• represents a particularly useful alternative

– when none of the models (or model subspaces) considered clearly outperforms all others

– when comparing groups for which the optimal model differs

single-subject BMA:

$p(\theta \mid y) = \sum_m p(\theta \mid y, m)\, p(m \mid y)$

group-level BMA:

$p(\theta_n \mid y_{1..N}) = \sum_m p(\theta_n \mid y_n, m)\, p(m \mid y_{1..N})$

NB: p(m|y1..N) can be obtained by either FFX or RFX BMS

Penny et al. 2010, PLoS Comput. Biol.
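For a single parameter with Gaussian posteriors under each model, the BMA posterior is a mixture of these Gaussians weighted by the posterior model probabilities, so its mean and variance follow from the standard mixture formulas. The sketch below illustrates only these two moments with invented numbers; practical implementations often sample from the mixture instead, and the function name is an assumption.

```python
def bma_mean_and_variance(means, variances, model_probs):
    """Mean and variance of the mixture sum_m p(m|y) * N(mean_m, var_m)."""
    mean = sum(w * m for w, m in zip(model_probs, means))
    second_moment = sum(w * (v + m * m)
                        for w, m, v in zip(model_probs, means, variances))
    return mean, second_moment - mean * mean

# Hypothetical posterior estimates of one connection strength under two models
means = [0.4, 0.1]
variances = [0.01, 0.04]
model_probs = [0.7, 0.3]          # posterior model probabilities p(m|y)
bma_mean, bma_var = bma_mean_and_variance(means, variances, model_probs)
print(bma_mean, bma_var)
```

The averaged variance exceeds the probability-weighted mean of the two within-model variances (0.019 here) because disagreement between the models adds to the overall uncertainty.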

Prefrontal-parietal connectivity during working memory in schizophrenia

• 17 at-risk mental state (ARMS) individuals

• 21 first-episode patients (13 non-treated)

• 20 controls

BMS results for all groups

BMA results: PFC–PPC connectivity

Schmidt et al. 2013, JAMA Psychiatry

Protected exceedance probability:

Using BMA to protect against chance findings

• EPs express our confidence that the posterior probabilities of models are different – under the hypothesis H1 that models differ in probability: $r_k \neq 1/K$

• does not account for the possibility of the "null hypothesis" H0: $r_k = 1/K$

• Bayesian omnibus risk (BOR): the risk of wrongly accepting H1 over H0

• protected EP: Bayesian model averaging over H0 and H1

Rigoux et al. 2014, NeuroImage
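The averaging over H0 and H1 can be written out in a few lines. The formula used below, protected EP_k = EP_k · (1 − BOR) + BOR / K, is the form commonly attributed to Rigoux et al. (2014) and is stated here as an assumption rather than taken from the slide; the BOR itself is treated as a given input and not computed. The exceedance probabilities and the BOR value are hypothetical.

```python
def protected_exceedance(exceedance, bor):
    """Average the EPs (under H1) with the chance level 1/K (under H0), weighted by the BOR."""
    n_models = len(exceedance)
    return [ep * (1.0 - bor) + bor / n_models for ep in exceedance]

# Hypothetical values: two models, exceedance probabilities under H1, and a BOR of 0.2
exceedance = [0.997, 0.003]
protected = protected_exceedance(exceedance, bor=0.2)
print(protected)
```

A BOR of 0 leaves the exceedance probabilities unchanged, whereas a BOR of 1 returns the chance level 1/K for every model, so the protected EP is always the more conservative of the two measures.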

[Decision tree] Starting point: definition of model space. Then: inference on model structure or inference on model parameters?

• inference on model structure: inference on individual models or model space partition?

– model space partition: comparison of model families using FFX or RFX BMS

– individual models: optimal model structure assumed to be identical across subjects? yes: FFX BMS; no: RFX BMS

• inference on model parameters: inference on parameters of an optimal model or parameters of all models?

– parameters of all models: BMA

– parameters of an optimal model: optimal model structure assumed to be identical across subjects? yes: FFX BMS, followed by FFX analysis of parameter estimates (e.g. BPA); no: RFX BMS, followed by RFX analysis of parameter estimates (e.g. t-test, ANOVA)

Stephan et al. 2010, NeuroImage

Further reading

• Penny WD, Stephan KE, Mechelli A, Friston KJ (2004) Comparing dynamic causal models. NeuroImage 22: 1157-1172.

• Penny WD, Stephan KE, Daunizeau J, Joao M, Friston K, Schofield T, Leff AP (2010) Comparing Families of Dynamic Causal Models. PLoS Computational Biology 6: e1000709.

• Penny WD (2012) Comparing dynamic causal models using AIC, BIC and free energy. NeuroImage 59: 319-330.

• Rigoux L, Stephan KE, Friston KJ, Daunizeau J (2014) Bayesian model selection for group studies – revisited. NeuroImage 84: 971-985.

• Stephan KE, Weiskopf N, Drysdale PM, Robinson PA, Friston KJ (2007) Comparing hemodynamic models with DCM. NeuroImage 38: 387-401.

• Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ (2009) Bayesian model selection for group studies. NeuroImage 46: 1004-1017.

• Stephan KE, Penny WD, Moran RJ, den Ouden HEM, Daunizeau J, Friston KJ (2010) Ten simple rules for Dynamic Causal Modelling. NeuroImage 49: 3099-3109.

• Stephan KE, Iglesias S, Heinzle J, Diaconescu AO (2015) Translational Perspectives for Computational Neuroimaging. Neuron 87: 716-732.

• Stephan KE, Schlagenhauf F, Huys QJM, Raman S, Aponte EA, Brodersen KH, Rigoux L, Moran RJ, Daunizeau J, Dolan RJ, Friston KJ, Heinz A (2016) Computational Neuroimaging Strategies for Single Patient Predictions. NeuroImage, in press. DOI: 10.1016/j.neuroimage.2016.06.038

Thank you