Bayes Theorem


Bayes' theorem

A blue neon sign showing the simple statement of Bayes' theorem


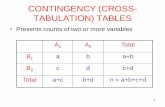

In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule) is a result of importance in the mathematical manipulation of conditional probabilities. Bayes' rule can be derived from more basic axioms of probability, specifically conditional probability.

When applied, the probabilities involved in Bayes' theorem may have any of a number of probability interpretations. In one of these interpretations, the theorem is used directly as part of a particular approach to statistical inference. In particular, with the Bayesian interpretation of probability, the theorem expresses how a subjective degree of belief should rationally change to account for evidence: this is Bayesian inference, which is fundamental to Bayesian statistics. However, Bayes' theorem has applications in a wide range of calculations involving probabilities, not just in Bayesian inference.

Bayes' theorem is named after Thomas Bayes (/ˈbeɪz/; 1701–1761), who first suggested using the theorem to update beliefs. His work was significantly edited and updated by Richard Price before it was posthumously read at the Royal Society. The ideas gained limited exposure until they were independently rediscovered and further developed by Laplace, who first published the modern formulation in his 1812 Théorie analytique des probabilités.

Sir Harold Jeffreys wrote that Bayes' theorem "is to the theory of probability what Pythagoras's theorem is to geometry".

Introductory example

Suppose a man told you he had a nice conversation with someone on the train. Not knowing anything about this conversation, the probability that he was speaking to a woman is 50% (assuming the speaker was as likely to strike up a conversation with a man as with a woman). Now suppose he also told you that his conversational partner had long hair. It is now more likely he was speaking to a woman, since women are more likely to have long hair than men. Bayes' theorem can be used to calculate the probability that the person was a woman.

To see how this is done, let W represent the event that the conversation was held with a woman, and L denote the event that the conversation was held with a long-haired person. It can be assumed that women constitute half the population for this example. So, not knowing anything else, the probability that W occurs is P(W) = 0.5.

Suppose it is also known that 75% of women have long hair, which we denote as P(L|W) = 0.75 (read: the probability of event L given event W is 0.75, meaning that the probability of a person having long hair (event L), given that we already know that the person is a woman (event W), is 75%). Likewise, suppose it is known that 15% of men have long hair, or P(L|M) = 0.15, where M is the complementary event of W, i.e., the event that the conversation was held with a man (assuming that every human is either a man or a woman).

Our goal is to calculate the probability that the conversation was held with a woman, given the fact that the person had long hair, or, in our notation, P(W|L). Using the formula for Bayes' theorem, we have:

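$$P(W|L) = \frac{P(L|W)\,P(W)}{P(L)} = \frac{P(L|W)\,P(W)}{P(L|W)\,P(W) + P(L|M)\,P(M)}$$

where we have used the law of total probability to expand P(L). The numeric answer can be obtained by substituting the above values into this formula (the algebraic multiplication is annotated using "·", the centered dot). This yields

$$P(W|L) = \frac{0.75 \cdot 0.50}{0.75 \cdot 0.50 + 0.15 \cdot 0.50} = \frac{5}{6} \approx 0.83,$$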


i.e., the probability that the conversation was held with a woman, given that the person had long hair, is about 83%. More examples are provided below.

Another way to do this calculation is as follows. Initially, it is equally likely that the conversation is held with a woman as with a man, so the prior odds are 1:1. The respective chances that a man and a woman have long hair are 15% and 75%. It is 5 times more likely that a woman has long hair than that a man has long hair. We say that the likelihood ratio or Bayes factor is 5:1. Bayes' theorem in odds form, also known as Bayes' rule, tells us that the posterior odds that the person was a woman are also 5:1 (the prior odds, 1:1, times the likelihood ratio, 5:1). In a formula:

$$\frac{P(W|L)}{P(M|L)} = \frac{P(W)}{P(M)} \cdot \frac{P(L|W)}{P(L|M)}.$$

Odds of 5:1 correspond to a probability of 5/(5 + 1) ≈ 83%, in agreement with the first calculation.
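As a quick numerical check of the introductory example, the sketch below plugs the stated values into Bayes' theorem. The function name `posterior` and its parameter names are illustrative choices made here, not part of the original text.

```python
def posterior(prior, likelihood, likelihood_alt):
    """P(H | E) for a hypothesis H and its complement, via Bayes' theorem.

    prior          -- P(H)
    likelihood     -- P(E | H)
    likelihood_alt -- P(E | not H)
    """
    # Law of total probability: P(E) = P(E|H) P(H) + P(E|not H) P(not H)
    evidence = likelihood * prior + likelihood_alt * (1.0 - prior)
    return likelihood * prior / evidence


# Values from the example: P(W) = 0.5, P(L|W) = 0.75, P(L|M) = 0.15
p_w_given_l = posterior(prior=0.5, likelihood=0.75, likelihood_alt=0.15)
print(f"P(W|L) = {p_w_given_l:.4f}")  # about 0.8333, i.e. 5/6
```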

Statement and interpretation

Mathematically, Bayes' theorem gives the relationship between the probabilities of A and B, P(A) and P(B), and the conditional probabilities of A given B and B given A, P(A|B) and P(B|A). In its most common form, it is:

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B)}.$$

The meaning of this statement depends on the interpretation of probability ascribed to the terms:

Bayesian interpretation

Main article: Bayesian probability

In the Bayesian (or epistemological) interpretation, probability measures a degree of belief. Bayes' theorem then links the degree of belief in a proposition before and after accounting for evidence. For example, suppose somebody proposes that a biased coin is twice as likely to land heads as tails. Degree of belief in this might initially be 50%. The coin is then flipped a number of times to collect evidence. Belief may rise to 70% if the evidence supports the proposition.

For proposition A and evidence B,

- P(A), the prior, is the initial degree of belief in A.
- P(A|B), the posterior, is the degree of belief having accounted for B.
- the quotient P(B|A)/P(B) represents the support B provides for A.

For more on the application of Bayes' theorem under the Bayesian interpretation of probability, see Bayesian inference.
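The biased-coin illustration can be made concrete with a small sketch. The text does not say what the alternative to the proposition is or which flips were observed, so two assumptions are made here and flagged in the comments: the alternative is a fair coin, and the flip sequence is invented for illustration.

```python
from fractions import Fraction


def update(belief, flip):
    """Updated belief in 'the coin is biased' after one flip ('H' or 'T')."""
    p_heads_biased = Fraction(2, 3)  # proposition A: heads twice as likely as tails
    p_heads_fair = Fraction(1, 2)    # ASSUMPTION: the only alternative is a fair coin
    like_biased = p_heads_biased if flip == "H" else 1 - p_heads_biased
    like_fair = p_heads_fair if flip == "H" else 1 - p_heads_fair
    evidence = like_biased * belief + like_fair * (1 - belief)  # P(B)
    return like_biased * belief / evidence                       # P(A|B)


belief = Fraction(1, 2)        # prior degree of belief: 50%
for flip in "HHTHHHTH":        # ASSUMPTION: hypothetical flips (6 heads, 2 tails)
    belief = update(belief, flip)

print(f"posterior belief: {float(belief):.3f}")  # about 0.714
```

Under these assumptions the belief moves from 50% to roughly 71%, the kind of shift the paragraph describes. Each posterior serves as the prior for the next flip.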


Frequentist interpretation

Illustration of frequentist interpretation with tree diagrams. Bayes' theorem connects conditional probabilities to their inverses.

In the frequentist interpretation, probability measures a proportion of outcomes. For example, suppose an experiment is performed many times. P(A) is the proportion of outcomes with property A, and P(B) that with property B. P(B|A) is the proportion of outcomes with property B out of outcomes with property A, and P(A|B) the proportion of those with A out of those with B.

The role of Bayes' theorem is best visualized with tree diagrams, as in the illustration. The two diagrams partition the same outcomes by A and B in opposite orders, to obtain the inverse probabilities. Bayes' theorem serves as the link between these different partitionings.
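The proportion-counting view can be checked directly on a small set of outcomes. The sketch below uses one roll of a fair die with two properties picked purely for illustration (they do not come from the original text) and confirms that the two orders of partitioning are linked by Bayes' theorem.

```python
from fractions import Fraction

outcomes = list(range(1, 7))      # one roll of a fair die (illustrative choice)


def has_a(n):                     # property A: the roll is even
    return n % 2 == 0


def has_b(n):                     # property B: the roll is at least 3
    return n >= 3


def proportion(pred, population):
    """Fraction of the population that satisfies pred."""
    return Fraction(sum(1 for n in population if pred(n)), len(population))


p_a = proportion(has_a, outcomes)                                   # 1/2
p_b = proportion(has_b, outcomes)                                   # 2/3
p_b_given_a = proportion(has_b, [n for n in outcomes if has_a(n)])  # 2/3
p_a_given_b = proportion(has_a, [n for n in outcomes if has_b(n)])  # 1/2

# Bayes' theorem links the two orders of partitioning.
assert p_a_given_b == p_b_given_a * p_a / p_b
print(p_a_given_b)  # 1/2
```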

Forms

Events

Simple form

For events A and B, provided that P(B) ≠ 0,

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B)}.$$

In many applications, for instance in Bayesian inference, the event B is fixed in the discussion, and we wish to consider the impact of its having been observed on our belief in various possible events A. In such a situation the denominator of the last expression, the probability of the given evidence B, is fixed; what we want to vary is A. Bayes' theorem then shows that the posterior probabilities are proportional to the numerator:

$$P(A|B) \propto P(A) \cdot P(B|A)$$

(proportionality over A for given B). In words: posterior is proportional to prior times likelihood (see Lee, 2012, Chapter 1).

If events A1, A2, …, are mutually exclusive and exhaustive, i.e., one of them is certain to occur but no two can occur together, and we know their probabilities up to proportionality, then we can determine the proportionality constant by using the fact that their probabilities must add up to one. For instance, for a given event A, the event A itself and its complement ¬A are exclusive and exhaustive. Denoting the constant of proportionality by c we have

$$P(A|B) = c \cdot P(A) \cdot P(B|A) \quad \text{and} \quad P(\neg A|B) = c \cdot P(\neg A) \cdot P(B|\neg A).$$

Adding these two formulas, and using P(A|B) + P(¬A|B) = 1, we deduce that

$$c = \frac{1}{P(A) \cdot P(B|A) + P(\neg A) \cdot P(B|\neg A)}.$$


Extended form

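Often, for some partition {Aj} of the event space, the event space is given or conceptualized in terms of P(Aj) and P(B|Aj). It is then useful to compute P(B) using the law of total probability:

$$P(B) = \sum_j P(B|A_j)\,P(A_j), \qquad P(A_i|B) = \frac{P(B|A_i)\,P(A_i)}{\sum_j P(B|A_j)\,P(A_j)}.$$

In the special case where A is a binary variable:

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B|A)\,P(A) + P(B|\neg A)\,P(\neg A)}.$$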
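The extended form translates directly into a few lines of code. The following is a minimal sketch; the function name `posteriors` and the three-way partition used to exercise it are hypothetical and chosen only for illustration.

```python
def posteriors(priors, likelihoods):
    """Return P(A_j | B) for every member A_j of a partition.

    priors      -- list of P(A_j), assumed to sum to 1
    likelihoods -- list of P(B | A_j), in the same order
    """
    joint = [p * l for p, l in zip(priors, likelihoods)]  # P(B | A_j) P(A_j)
    p_b = sum(joint)                                       # law of total probability
    if p_b == 0:
        raise ValueError("P(B) is zero; the posterior is undefined")
    return [j / p_b for j in joint]


# Hypothetical three-way partition, for illustration only
post = posteriors(priors=[0.5, 0.3, 0.2], likelihoods=[0.1, 0.4, 0.7])
print([round(p, 3) for p in post])
```

Dividing by the sum of the joint terms is the same normalization as the constant c in the simple form, so the returned values always add up to one.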

Random variables

Diagram illustrating the meaning of Bayes' theorem as applied to an event space generated by continuous random variables X and Y. Note that there exists an instance of Bayes' theorem for each point in the domain. In practice, these instances might be parametrized by writing the specified probability densities as a function of x and y.

Consider a sample space generated by two random variables X and Y. In principle, Bayes' theorem applies to the events A = {X = x} and B = {Y = y}. However, terms become 0 at points where either variable has finite probability density. To remain useful, Bayes' theorem may be formulated in terms of the relevant densities (see Derivation).

Simple form

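If X is continuous and Y is discrete,

$$f_X(x|Y=y) = \frac{P(Y=y|X=x)\,f_X(x)}{P(Y=y)}.$$

If X is discrete and Y is continuous,

$$P(X=x|Y=y) = \frac{f_Y(y|X=x)\,P(X=x)}{f_Y(y)}.$$

If both X and Y are continuous,

$$f_X(x|Y=y) = \frac{f_Y(y|X=x)\,f_X(x)}{f_Y(y)}.$$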

Extended form

Diagram illustrating how an event space generated by continuous random variables X and Y is often conceptualized.

A continuous event space is often conceptualized in terms of the numerator terms. It is then useful to eliminate the denominator using the law of total probability. For fY(y), this becomes an integral:

$$f_Y(y) = \int_{-\infty}^{\infty} f_Y(y|X=\xi)\,f_X(\xi)\,d\xi.$$
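A numerical sketch of the continuous case follows. The specific densities are assumptions made here for illustration (a standard normal prior for X and a unit-variance normal for Y centred on x); the integral for fY(y) is approximated with the trapezoidal rule over a truncated range.

```python
import math


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def prior(x):                  # f_X(x): ASSUMED standard normal
    return normal_pdf(x, 0.0, 1.0)


def likelihood(y, x):          # f_Y(y | X = x): ASSUMED normal centred on x
    return normal_pdf(y, x, 1.0)


def marginal(y, lo=-10.0, hi=10.0, n=4000):
    """f_Y(y) as the integral of f_Y(y | X = xi) f_X(xi), trapezoidal rule."""
    h = (hi - lo) / n
    total = 0.5 * (likelihood(y, lo) * prior(lo) + likelihood(y, hi) * prior(hi))
    for i in range(1, n):
        xi = lo + i * h
        total += likelihood(y, xi) * prior(xi)
    return total * h


def posterior_density(x, y):
    """f_X(x | Y = y) = f_Y(y | X = x) f_X(x) / f_Y(y)."""
    return likelihood(y, x) * prior(x) / marginal(y)


y_obs = 1.0
print(marginal(y_obs))                # close to the N(0, 2) density at y = 1
print(posterior_density(0.5, y_obs))  # close to 1/sqrt(pi), the N(0.5, 1/2) peak
```

For this particular pair of densities the answer is known in closed form, which makes the numerical result easy to check; for other models the same grid approach applies but the truncation range and step count would need to be chosen to suit.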


Bayes' rule

Main article: Bayes' rule

Bayes' rule is Bayes' theorem in odds form:

$$O(A_1:A_2|B) = \Lambda(A_1:A_2|B) \cdot O(A_1:A_2)$$

where

$$\Lambda(A_1:A_2|B) = \frac{P(B|A_1)}{P(B|A_2)}$$

is called the Bayes factor or likelihood ratio, and the odds between two events is simply the ratio of the probabilities of the two events. Thus

$$O(A_1:A_2) = \frac{P(A_1)}{P(A_2)},$$

$$O(A_1:A_2|B) = \frac{P(A_1|B)}{P(A_2|B)}.$$

So the rule says that the posterior odds are the prior odds times the Bayes factor, or in other words, posterior is proportional to prior times likelihood.
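The odds form is convenient in code because it needs only a multiplication. The sketch below applies it to the long-hair figures from the introductory example; the helper names are illustrative, and exact fractions are used so the result is not blurred by rounding.

```python
from fractions import Fraction


def posterior_odds(prior_odds, p_b_given_a1, p_b_given_a2):
    """Posterior odds of A1 against A2 after observing B."""
    bayes_factor = p_b_given_a1 / p_b_given_a2   # likelihood ratio
    return prior_odds * bayes_factor


def odds_to_probability(odds):
    """Convert odds in favour of A1 (against A2) into P(A1) when A2 = not A1."""
    return odds / (1 + odds)


# Prior odds 1:1, P(L|W) = 0.75, P(L|M) = 0.15
odds = posterior_odds(Fraction(1), Fraction("0.75"), Fraction("0.15"))
prob = odds_to_probability(odds)
print(odds, prob)  # 5 5/6
```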

Derivation

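For events

Bayes' theorem may be derived from the definition of conditional probability:

$$P(A|B) = \frac{P(A \cap B)}{P(B)}, \quad \text{if } P(B) \neq 0,$$

$$P(B|A) = \frac{P(A \cap B)}{P(A)}, \quad \text{if } P(A) \neq 0,$$

$$\Rightarrow P(A \cap B) = P(A|B)\,P(B) = P(B|A)\,P(A),$$

$$\Rightarrow P(A|B) = \frac{P(B|A)\,P(A)}{P(B)}, \quad \text{if } P(B) \neq 0.$$

For random variables

For two continuous random variables X and Y, Bayes' theorem may be analogously derived from the definition of conditional density:

$$f_X(x|Y=y) = \frac{f_{X,Y}(x,y)}{f_Y(y)},$$

$$f_Y(y|X=x) = \frac{f_{X,Y}(x,y)}{f_X(x)},$$

$$\Rightarrow f_X(x|Y=y) = \frac{f_Y(y|X=x)\,f_X(x)}{f_Y(y)}.$$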


Examples

Frequentist example

Tree diagram illustrating frequentist example. R, C, P and P̄ are the events representing rare, common, pattern and no pattern. Percentages in parentheses are calculated. Note that three independent values are given, so it is possible to calculate the inverse tree (see figure above).

An entomologist spots what might be a rare subspecies of beetle, due to the pattern on its back. In the rare subspecies, 98% have the pattern, or P(Pattern|Rare) = 98%. In the common subspecies, 5% have the pattern. The rare subspecies accounts for only 0.1% of the population. How likely is the beetle having the pattern to be rare, or what is P(Rare|Pattern)?

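From the extended form of Bayes' theorem (since any beetle can be only rare or common),

$$P(\text{Rare}|\text{Pattern}) = \frac{P(\text{Pattern}|\text{Rare})\,P(\text{Rare})}{P(\text{Pattern}|\text{Rare})\,P(\text{Rare}) + P(\text{Pattern}|\text{Common})\,P(\text{Common})} = \frac{0.98 \times 0.001}{0.98 \times 0.001 + 0.05 \times 0.999} \approx 1.9\%.$$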
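The same figure can be reached by counting beetles, which mirrors the tree diagram. The sketch below is a natural-frequency check; the population size is an arbitrary choice made here so that every count is a whole number.

```python
# Natural-frequency check of the beetle example.
# The population size is an arbitrary illustrative choice.
population = 100_000

rare = population // 1000                 # 0.1% are rare       -> 100
common = population - rare                # the rest are common -> 99,900
rare_with_pattern = rare * 98 // 100      # 98% of rare         -> 98
common_with_pattern = common * 5 // 100   # 5% of common        -> 4,995

with_pattern = rare_with_pattern + common_with_pattern
p_rare_given_pattern = rare_with_pattern / with_pattern

print(f"{rare_with_pattern} of {with_pattern} patterned beetles are rare "
      f"({p_rare_given_pattern:.1%})")
```

Seen this way, the small posterior is unsurprising: the few patterned rare beetles are swamped by the much larger number of patterned common ones.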

Coin flip example

Concrete example from 5 August 2011 New York Times article [2] by John Allen Paulos (quoted verbatim):

"Assume that you're presented with three coins, two of them fair and the other a counterfeit that always lands heads. If you randomly pick one of the three coins, the probability that it's the counterfeit is 1 in 3. This is the prior probability of the hypothesis that the coin is counterfeit. Now after picking the coin, you flip it three times and observe that it lands heads each time. Seeing this new evidence that your chosen coin has landed heads three times in a row, you want to know the revised posterior probability that it is the counterfeit. The answer to this question, found using Bayes's theorem (calculation mercifully omitted), is 4 in 5. You thus revise your probability estimate of the coin's being counterfeit upward from 1 in 3 to 4 in 5."

The calculation ("mercifully supplied") follows, writing C for the event that the coin is counterfeit, F for the event that it is fair, and HHH for three heads in a row:

$$P(C|HHH) = \frac{P(HHH|C)\,P(C)}{P(HHH|C)\,P(C) + P(HHH|F)\,P(F)} = \frac{1 \times \tfrac{1}{3}}{1 \times \tfrac{1}{3} + \tfrac{1}{8} \times \tfrac{2}{3}} = \frac{4}{5}.$$
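The "4 in 5" figure can be reproduced with exact fractions. In this sketch the function name `p_counterfeit` and its parameter are illustrative; it generalizes the quoted setup to any number of consecutive heads.

```python
from fractions import Fraction


def p_counterfeit(n_heads):
    """P(counterfeit | n_heads heads in a row) for the three-coin setup."""
    prior_counterfeit = Fraction(1, 3)       # one coin in three is counterfeit
    prior_fair = Fraction(2, 3)              # the other two are fair
    like_counterfeit = Fraction(1)           # the counterfeit always lands heads
    like_fair = Fraction(1, 2) ** n_heads    # a fair coin gives heads each time
    evidence = like_counterfeit * prior_counterfeit + like_fair * prior_fair
    return like_counterfeit * prior_counterfeit / evidence


print(p_counterfeit(0))  # 1/3, the prior
print(p_counterfeit(3))  # 4/5, as in the quoted article
```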


Drug testing

Tree diagram illustrating drug testing example. U, Ū, "+" and "−" are the events representing user, non-user, positive result and negative result. Percentages in parentheses are calculated.

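Suppose a drug test is 99% sensitive and 99% specific. That is, the test will produce 99% true positive results for drug users and 99% true negative results for non-drug users. Suppose that 0.5% of people are users of the drug. If a randomly selected individual tests positive, what is the probability he or she is a user?

$$P(\text{User}|+) = \frac{P(+|\text{User})\,P(\text{User})}{P(+|\text{User})\,P(\text{User}) + P(+|\text{Non-user})\,P(\text{Non-user})} = \frac{0.99 \times 0.005}{0.99 \times 0.005 + 0.01 \times 0.995} \approx 33.2\%.$$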

Despite the apparent accuracy of the test, if an individual tests positive, it is more likely that they do not use the drug than that they do.

This surprising result arises because the number of non-users is very large compared to the number of users; thus the number of false positives (0.995% of those tested) outweighs the number of true positives (0.495%). To use concrete numbers, if 1000 individuals are tested, there are expected to be 995 non-users and 5 users. From the 995 non-users, 0.01 × 995 ≈ 10 false positives are expected. From the 5 users, 0.99 × 5 ≈ 5 true positives are expected. Out of 15 positive results, only 5, about 33%, are genuine.

Note: The importance of specificity can be illustrated by showing that even if sensitivity is 100% and specificity is at 99%, the probability of the person being a drug user is about 33%, but if the specificity is changed to 99.5% and the sensitivity is dropped down to 99%, the probability of the person being a drug user rises to about 49.9%. Even at 90% sensitivity and 99.5% specificity the probability of a person being a drug user is 47.5%.
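The sensitivity and specificity comparisons in the note are easy to tabulate. The sketch below assumes the 0.5% prevalence stated in the example; the function name `p_user_given_positive` is an illustrative choice.

```python
def p_user_given_positive(sensitivity, specificity, prevalence):
    """P(user | positive test) from the test's characteristics."""
    true_pos = sensitivity * prevalence                # P(+ | user) P(user)
    false_pos = (1 - specificity) * (1 - prevalence)   # P(+ | non-user) P(non-user)
    return true_pos / (true_pos + false_pos)


prevalence = 0.005  # 0.5% of people are users, as in the example
for sens, spec in [(0.99, 0.99), (1.00, 0.99), (0.99, 0.995), (0.90, 0.995)]:
    p = p_user_given_positive(sens, spec, prevalence)
    print(f"sensitivity {sens:.1%}, specificity {spec:.1%}: P(user | +) = {p:.1%}")
```

Halving the false-positive rate (specificity 99% to 99.5%) moves the posterior far more than any change in sensitivity does, because false positives from the large non-user group dominate the denominator.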

Applications

Bayes' theorem is important in inverse problem theory, where the a posteriori probability density function is obtained from the product of the prior probability density function and the likelihood probability density function. An important application is constructing computational models of oil reservoirs given the observed data.[3]

Although Bayes' theorem is commonly used to determine the probability of an event occurring, it can also be applied to verify someone's credibility as a prognosticator. Many pundits claim to be able to predict the outcome of an event: political elections, trials, the weather and even sporting events. Larry Sabato, founder of Sabato's Crystal Ball, is a perfect example. His website provides free political analysis and election predictions. His success at predictions has even led him to be called "a pundit with an opinion for every reporter's phone call". We even have Punxsutawney Phil, the famous groundhog, who tells us whether we can expect a longer winter or an early spring. Bayes' theorem tells us the difference between who's on a hot streak and who is what they claim to be.

Let's say we live in an area where everyone gambles on the outcome of coin flips. Because of that, there is a big business for predicting coin flips. Suppose that 5% of predictors can actually win in the long run, and 80% of those are winners over a 2-year period. 95% of predictors are pretenders who are just guessing, and 20% of them are winners over a 2-year period (everyone gets lucky once in a while). This means that 82.6% of bettors who are winners over a 2-year period are actually long-term losers who are winning above their real average.
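The 82.6% figure follows from the four percentages given in the paragraph. A minimal sketch of the arithmetic, with variable names chosen here for readability:

```python
p_skilled = 0.05               # predictors who can win in the long run
p_pretender = 0.95             # predictors who are just guessing
p_win_given_skilled = 0.80     # skilled predictors who win over a 2-year period
p_win_given_pretender = 0.20   # pretenders who win over a 2-year period

# Law of total probability: share of all predictors who win over 2 years
p_win = p_win_given_skilled * p_skilled + p_win_given_pretender * p_pretender

# Bayes' theorem: share of 2-year winners who are really pretenders
p_pretender_given_win = p_win_given_pretender * p_pretender / p_win

print(f"{p_pretender_given_win:.1%}")  # 82.6%
```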

History

Bayes' theorem was named after the Reverend Thomas Bayes (1701–61), who studied how to compute a distribution for the probability parameter of a binomial distribution (in modern terminology). His friend Richard Price edited and presented this work in 1763, after Bayes' death, as An Essay towards solving a Problem in the Doctrine of Chances. The French mathematician Pierre-Simon Laplace reproduced and extended Bayes' results in 1774, apparently quite unaware of Bayes' work.[4] Stephen Stigler suggested in 1983 that Bayes' theorem was discovered by Nicholas Saunderson some time before Bayes.[5] However, this interpretation has been disputed.[6]

Martyn Hooper[7] and Sharon McGrayne have argued that Richard Price's contribution was substantial:

"By modern standards, we should refer to the Bayes-Price rule. Price discovered Bayes's work, recognized its importance, corrected it, contributed to the article, and found a use for it. The modern convention of employing Bayes' name alone is unfair but so entrenched that anything else makes little sense."

Notes

[1] http://en.wikipedia.org/w/index.php?title=Template:Bayesian_statistics&action=edit

[2] http://www.nytimes.com/2011/08/07/books/review/the-theory-that-would-not-die-by-sharon-bertsch-mcgrayne-book-review.html

[3] Gharib Shirangi, M., History matching production data and uncertainty assessment with an efficient TSVD parameterization algorithm, Journal of Petroleum Science and Engineering, http://www.sciencedirect.com/science/article/pii/S0920410513003227

[4] Laplace refined Bayes' theorem over a period of decades:

- Laplace announced his independent discovery of Bayes' theorem in: Laplace (1774) "Mémoire sur la probabilité des causes par les événements," Mémoires de l'Académie royale des Sciences de Paris (Savants étrangers), 4 : 621–656. Reprinted in: Laplace, Oeuvres complètes (Paris, France: Gauthier-Villars et fils, 1841), vol. 8, pp. 27–65. Available on-line at: Gallica (http://gallica.bnf.fr/ark:/12148/bpt6k77596b/f32.image). Bayes' theorem appears on p. 29.
- Laplace presented a refinement of Bayes' theorem in: Laplace (read: 1783 / published: 1785) "Mémoire sur les approximations des formules qui sont fonctions de très grands nombres," Mémoires de l'Académie royale des Sciences de Paris, 423–467. Reprinted in: Laplace, Oeuvres complètes (Paris, France: Gauthier-Villars et fils, 1844), vol. 10, pp. 295–338. Available on-line at: Gallica (http://gallica.bnf.fr/ark:/12148/bpt6k775981/f218.image.langEN). Bayes' theorem is stated on page 301.
- See also: Laplace, Essai philosophique sur les probabilités (Paris, France: Mme. Ve. Courcier [Madame veuve (i.e., widow) Courcier], 1814), page 10 (http://books.google.com/books?id=rDUJAAAAIAAJ&pg=PA10#v=onepage&q&f=false). English translation: Pierre Simon, Marquis de Laplace with F. W. Truscott and F. L. Emory, trans., A Philosophical Essay on Probabilities (New York, New York: John Wiley & Sons, 1902), page 15 (http://google.com/books?id=WxoPAAAAIAAJ&pg=PA15#v=onepage&q&f=false).

[5] Stigler, Stephen M. (1983), "Who Discovered Bayes' Theorem?", The American Statistician 37(4):290–296.

[6] Edwards, A. W. F. (1986), "Is the Reference in Hartley (1749) to Bayesian Inference?", The American Statistician 40(2):109–110.

[7] Hooper, Martyn. (2013), "Richard Price, Bayes' theorem, and God", Significance 10(1):36–39.

Further reading

- McGrayne, Sharon Bertsch (2011). The Theory That Would Not Die: How Bayes' Rule Cracked the Enigma Code, Hunted Down Russian Submarines & Emerged Triumphant from Two Centuries of Controversy. Yale University Press. ISBN 978-0-300-18822-6.
- Andrew Gelman, John B. Carlin, Hal S. Stern, and Donald B. Rubin (2003), "Bayesian Data Analysis", Second Edition, CRC Press.
- Charles M. Grinstead and J. Laurie Snell (1997), "Introduction to Probability (2nd edition)", American Mathematical Society (free pdf available (http://www.dartmouth.edu/~chance/teaching_aids/books_articles/probability_book/book.html)).
- Pierre-Simon Laplace. (1774/1986), "Memoir on the Probability of the Causes of Events", Statistical Science 1(3):364–378.
- Peter M. Lee (2012), "Bayesian Statistics: An Introduction", Wiley.
- Rosenthal, Jeffrey S. (2005): "Struck by Lightning: the Curious World of Probabilities". HarperCollins.
- Stephen M. Stigler (1986), "Laplace's 1774 Memoir on Inverse Probability", Statistical Science 1(3):359–363.
- Hazewinkel, Michiel, ed. (2001), "Bayes formula" (http://www.encyclopediaofmath.org/index.php?title=p/b015380), Encyclopedia of Mathematics, Springer, ISBN 978-1-55608-010-4.
- Stone, JV (2013). Chapter 1 of book "Bayes Rule: A Tutorial Introduction" (http://jim-stone.staff.shef.ac.uk/BookBayes2012/BayesRuleBookMain.html), University of Sheffield, Psychology.

External links

- The Theory That Would Not Die by Sharon Bertsch McGrayne (http://www.nytimes.com/2011/08/07/books/review/the-theory-that-would-not-die-by-sharon-bertsch-mcgrayne-book-review.html), New York Times Book Review by John Allen Paulos on 5 August 2011
- Visual explanation of Bayes using trees (https://www.youtube.com/watch?v=Zxm4Xxvzohk) (video)
- Bayes' frequentist interpretation explained visually (http://www.youtube.com/watch?v=D8VZqxcu0I0) (video)
- Earliest Known Uses of Some of the Words of Mathematics (B) (http://jeff560.tripod.com/b.html). Contains origins of "Bayesian", "Bayes' Theorem", "Bayes Estimate/Risk/Solution", "Empirical Bayes", and "Bayes Factor".
- Weisstein, Eric W., "Bayes' Theorem" (http://mathworld.wolfram.com/BayesTheorem.html), MathWorld.
- Bayes' theorem (http://planetmath.org/encyclopedia/BayesTheorem.html) at PlanetMath
- Bayes Theorem and the Folly of Prediction (http://rldinvestments.com/Articles/BayesTheorem.html)
- A tutorial on probability and Bayes' theorem devised for Oxford University psychology students (http://www.celiagreen.com/charlesmccreery/statistics/bayestutorial.pdf)
- An Intuitive Explanation of Bayes' Theorem by Eliezer S. Yudkowsky (http://yudkowsky.net/rational/bayes)


Article Sources and ContributorsBayes' theorem Source: http://en.wikipedia.org/w/index.php?oldid=608810162 Contributors: 3mta3, Abtract, Academic Challenger, AeroIllini, Ajarmst, Akitstika, AlanUS, Aldux, Alexpiet,Alfio, Amosfolarin, Andrewaskew, Andyjsmith, Antaeus Feldspar, Ap, Apelbaum, Arpit.chauhan1, Artegis, Arthur Rubin, AxelBoldt, BD2412, Baccyak4H, Benet Allen, BeteNoir, Bikramac,BillFlis, Billjefferys, Bjcairns, Bkell, Blocter, Brianbjparker, Bryangeneolson, Btyner, Butros, C. A. Russell, Calumny, Ceran, Chalst, Charles Matthews, Charleswallingford, Chewings72,Chinju, ChuckHG, CiaPan, Coffee2theorems, Colin Marquardt, Conradl, Cramadur, Curb Chain, Cwkmail, Cybercobra, DJ1AM, Danachandler, Dandin1, Daniel il, Davegroulx, DavidLevinson,Dcljr, Dcoetzee, Decora, Deflective, Dessources, Docemc, Doradus, Download, Dragice, DragonflySixtyseven, Drallim, DramaticTheory, Dratman, Duncan.france, Duncharris, Duoduoduo,Dysmorodrepanis, ENeville, Ebehn, EdH, El C, Eliasen, Emufarmers, Enkyo2, FSB1614, Facetious, Fcueto, Fentlehan, Forwardmeasure, Fowlslegs, Freefall322, Fresheneesz, G716, GJ, Geni,Gerhardvalentin, Giftlite, Gill110951, Gimboid, Glacialfox, Gnathan87, Gombang, Graham87, Gregbard, Guppyfinsoup, Gurch, Gustavh, Hannes Eder, Happy-melon, Hateweaver, Helgus,Henrygb, Hercule, Heron, Hike395, Htmort, Hurmata, Hushpuckena, InBalance, Iner22, InverseHypercube, J.e, JA(000)Davidson, JCSantos, Ja malcolm, Jameshfisher, Jauhienij, Jean.julius,JeffJor, Jfmarchini, Jmath666, Jmorgan, Jodi.a.schneider, Jogloran, John.St, Jordanolsommer, Josh Griffith, Justin W Smith, JustinHagstrom, Jvstone, Jna runn, Karada, Karen Huyser,Karinhunter, Kaslanidi, Khfan93, Kiefer.Wolfowitz, KoleTang, Kripkenstein, Krtschil, Kupirijo, Kwamikagami, Kzollman, Lambiam, Lamro, Lancevortex, Landroni, Lazarus1907, Lemeza,Lgstarn, Lightmouse, Lindsay658, Llamapez, Lova Falk, Lunae, Lupin, MER-C, Maghnus, MairAW, Makana, Makecat, Manishearth, Marabiloso, Marco Polo, MarkSweep, Marlow4, 
Maroux,Marquez, Martin Hogbin, MartinPoulter, Marvoir, Materialscientist, Mathew5000, Mattbuck, MauriceJFox3, Maximaximax, McGeddon, Mcld, MedicineMan555, Melchoir, Melcombe,Metacomet, Michael Hardy, Miguel, Mmattb, Mo ainm, Monado, MrOllie, Narxysus, NawlinWiki, Nbarth, Neelix, New questions, Newportm, Nijdam, Noah Salzman, Nobar, Ogai, OlegAlexandrov, Ost316, P64, Paul Murray, Paulpeeling, Pcarbonn, Penguin929, Petiatil, PhySusie, Pianoroy, Pichpich, Plynn9, Policron, Primarscources, Pseudomonas, Qniemiec, Quuxplusone,Qwfp, RDBury, Randywombat, Ranger2006, Requestion, Rick Block, Riitoken, Ritchy, RolandR, Rrenner, Rtc, Rumping, SE16, Sailing to Byzantium, Salgueiro, Sam Hocevar, Samwb123,Sanpitch, Savh, Schutz, Shadowjams, Sharp11, Shorespirit, Shotgunlee, Siddhartha Ghai, Sirovsky, Sisson, Smaines, SmartGuy Old, Smsarmad, Snoyes, Sodin, Solomon7968, Sonett72,Spellbinder, Splintax, SqueakBox, Srays, Stephen B Streater, SteveMacIntyre, Stevertigo, Stoni, Subash.chandran007, Sullivan.t.j, Sun Creator, Svyatoslav, Swapnil.iims, TakuyaMurata, Taw,Tcncv, Terra Green, Terrycojones, The Anome, Theme97, Themfromspace, Thunderfish24, Tide rolls, Tietew, Tim bates, TimothyFreeman, Timwi, Toms2866, Tomyumgoong, Trioculite,Trovatore, Urdutext, Urhixidur, User A1, Verne Equinox, Vespertineflora, Vieque, Voomoo, Vsb, Wafulz, Wavelength, Weakishspeller, Weather Man, Wfunction, WikHead, WikiAnoopRao,Wikid77, Wikidilworth, Wile E. Heresiarch, X7q, Xabian40409, Yserarau, Zadignose, Zundark, Zven, Zvika, 633 anonymous edits

Image Sources, Licenses and ContributorsFile:Bayes' Theorem MMB 01.jpg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes'_Theorem_MMB_01.jpg License: Creative Commons Attribution-Sharealike 2.0Contributors: mattbuck (category)File:Bayes icon.svg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes_icon.svg License: Creative Commons Zero Contributors: Mikhail RyazanovFile:Fisher iris versicolor sepalwidth.svg Source: http://en.wikipedia.org/w/index.php?title=File:Fisher_iris_versicolor_sepalwidth.svg License: Creative Commons Attribution-Sharealike 3.0Contributors: en:User:Qwfp (original); Pbroks13 (talk) (redraw)File:Bayes theorem tree diagrams.svg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes_theorem_tree_diagrams.svg License: Creative Commons Zero Contributors: Gnathan87File:Bayes continuous diagram.svg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes_continuous_diagram.svg License: Creative Commons Zero Contributors: User:Gnathan87File:Continuous event space specification.svg Source: http://en.wikipedia.org/w/index.php?title=File:Continuous_event_space_specification.svg License: Creative Commons ZeroContributors: User:Gnathan87File:Bayes theorem simple example tree.svg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes_theorem_simple_example_tree.svg License: Creative Commons Zero Contributors:User:Gnathan87File:Bayes theorem drugs example tree.svg Source: http://en.wikipedia.org/w/index.php?title=File:Bayes_theorem_drugs_example_tree.svg License: Creative Commons Zero Contributors:User:Gnathan87

License

Creative Commons Attribution-Share Alike 3.0
//creativecommons.org/licenses/by-sa/3.0/
