Attribute Extraction and Scoring: A Probabilistic Approach Taesung Lee, Zhongyuan Wang, Haixun Wang,...

-

Upload

solomon-black -

Category

Documents

-

view

218 -

download

0

Transcript of Attribute Extraction and Scoring: A Probabilistic Approach Taesung Lee, Zhongyuan Wang, Haixun Wang,...

Attribute Extraction and Scoring:

A Probabilistic ApproachTaesung Lee, Zhongyuan Wang, Haixun Wang, Seung-won Hwang

Microsoft Research AsiaSpeaker: Bo Xu

Background

• Goal of Creating Knowledge Base• Knowledge base is used to provide this background knowledge to machines• Enable machines to perform certain types of inferences as humans do

• Three backbone of the knowledge base• IsA: a relationship between a sub-concept and a concept

• e.g., an IT company is a company• isInstanceOf: a relationship between an entity and a concept

• e.g., Microsoft is a company• isPropertyOf: a relationship between an attribute and a concept

• e.g., color is a property/attribute of wine

Attributes(isPropertyOf) play a critical role in knowledge inference• Knowledge Inference• Given some attributes, guess which concept they described

• E.g. • “capital city, population”, people may infer that it relates to country• Given “color, body, smell,” people may think of wine

• However, in many cases, the association may not be straightforward, and may require some guesswork even for humans

• However, in order to perform inference, knowing that a concept can be described by a certain set of attributes is not enough. Instead, we must know how important, or how typical each attribute is for the concept• Typically• P(c|a)

• Denotes how typical concept c is, given attribute a• P(a|c)

• Denotes how typical attribute a is, given concept c

Problem Definition

• Find the most likely concept given a set of attributes.

• A: attribute set• c: candidate concept

• Estimate the probability using a naïve Bayes modelGoal

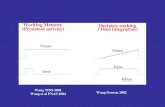

Attribute extraction from the web corpus• Concept-based extraction(CB)

• E.g., “... the acidity of a wine is an essential component of the wine ...”

• Instance-based extraction(IB)

• E.g., “the taste of Bordeaux is ...”

(c, a, n(c, a)) tuples

(i, a, n(i, a)) tuples

Attribute extraction from an external knowledgebase• Extract instance-based attributes from DBpedia entities(KB)• use n(i, a) = 1 to produce (i, a, n(i, a)) tuples

Attribute extraction from the query logs• Extract instance-based attributes from query logs (QB)• we cannot expect concept-based attributes from query logs as people usually

have interest in a more specific instance rather than a general concept

• Two-pass approach• First pass

• Extract candidate instance-attribute pairs (i, a) from the pattern “the a of (the/a/an) ⟨ ⟩i ”⟨ ⟩

• extend the candidate list IU to include the attributes obtained from IB and KB• Second pass

• count the co-occurrence n(i, a) of i and a in the query log for each (I, a) IU to produce ∈the (i, a, n(i, a)) list

Attribute Distribution

e.g., the attribute name is frequently observed from the CB pattern, such as “the name of a state is...”, but people will not mention it with the specific state, as in “the name of Washington is...”.

Computing Naive P(a|i, c) and P(i|c) using Probase• A simplifying assumption• one instance belongs to one concept

P(c|i) = 1 if this concept-instance is observed in Probase and 0 otherwise

However, in reality, the same instance name appears with many concepts• [C1] ambiguous instance related to dissimilar concepts• ‘Washington’ can refer to a president and a state

• [C2] unambiguous instance related to similar concepts• ‘George Washington’ is a president, patriot, and historical figure

Unbiasing P(a|i, c)

• JC(a, c) (join count)• defined as the number of instances in c that has attribute

• join ratio (JR)• representing how likely a is associated with c

Unbiasing P(c|i)

• Probase frequency counts np(c, i)• However, using this equation would not distinguish C2 from C1• sim(c, c′)• comparing the instance sets of the two concepts using Jaccard Similarity

Typicality Score Aggregation

• P(a|c)• One CB list from web documents • Three IB lists from web documents, query log, and knowledge base• no single source can be most reliable for all concepts

• Formulate P(a|c)

• The goal is to learn weights for the given concept

Learn the weights

• Ranking SVM approach• pairwise learning VS. regression • Since training data does not have to quantify absolute typicality score

Pairwise Learning

• Given x1>x2>x3• Binary Classification

• Positive example: x1-x2, x1-x3, x2-x3• Negative example: x2-x1, x3-x1, x3-x2

Attribute Synonym Set

• Leverage the evidence from Wikipedia• Wikipedia Redirects• Wikipedia Internal Links

• we take each connected component as a synonymous attribute cluster• Within each cluster, we set the most frequent attribute as a

representative attribute of the synonymous attributes

Experiment——Evaluation Measures and Baseline• The number of labeled attributes for evaluation is 4846 attributes

from 12 concepts• evaluation measures

• relevance score to the four groups as 1, 2/3, 1/3, and 0, respectively

• absolute value is hard to acquire, approximate this value• consider a set of attributes labeled as the universe