Artificial Intelligence: Neural Networks (Chapter 9)

Uploaded by: augustus-harris

Outline of this Chapter

• Biological Neurons
• Neural networks History
• Artificial Neural Network
• Perceptrons
• Multilayer Neural Network
• Applications of neural networks

Definition: Neural Network

A broad class of models that mimic the functioning of the human brain.

There are various classes of NN models. They differ from each other in:

• Problem types
• Structure of the model
• Model-building algorithm

For this discussion we focus on the feed-forward back-propagation neural network (used for prediction and classification problems).

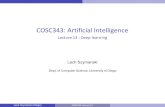

Biological Neurons

• The brain is made up of neurons (nerve cells), which have
– dendrites (inputs)
– a cell body (soma)
– an axon (outputs)
– synapses (connections between cells)

• Synapses can be excitatory (potential-increasing) or inhibitory (potential-decreasing), and may change over time.

• The synapse releases a chemical transmitter; the summed effect of these transmitters can cause a threshold to be reached, causing the neuron to fire (an electrical impulse is sent down the axon).

A bit of biology . . .

The most important functional unit in the human brain is a class of cells called neurons.

• Dendrites – receive information
• Cell body – processes information
• Axon – carries processed information to other neurons
• Synapse – junction between the axon end and the dendrites of other neurons

[Figure: hippocampal neurons. Source: heart.cbl.utoronto.ca/ ~berj/projects.html]

[Schematic: dendrites, cell body, axon, synapse]

An Artificial Neuron

• Receives inputs X1, X2, …, Xp from other neurons or the environment
• Inputs are fed in through connections with ‘weights’
• Total input = weighted sum of inputs from all sources
• A transfer function (activation function) converts the input to output
• Output goes to other neurons or the environment

$$I = w_1 X_1 + w_2 X_2 + w_3 X_3 + \dots + w_p X_p$$

$$V = f(I)$$

[Figure: inputs X1, X2, …, Xp with weights w1, w2, …, wp feed the total input I; the transfer function f produces the output V. By analogy with the biological neuron: dendrites, cell body, axon. Information flows from inputs to output.]
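The weighted-sum-then-transfer computation of a single artificial neuron can be sketched in a few lines of Python. This is only an illustration: the slide leaves the transfer function f unspecified, so the logistic function used here is an assumption, and the input and weight values are hypothetical.

```python
import math


def neuron_output(inputs, weights, transfer):
    """Return V = f(I), where I is the weighted sum of the inputs."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # I = w1*X1 + w2*X2 + ... + wp*Xp
    total_input = sum(w * x for w, x in zip(weights, inputs))
    return transfer(total_input)


def logistic(i):
    """One possible transfer function f (an assumption, not from the slide)."""
    return 1.0 / (1.0 + math.exp(-i))


# Hypothetical inputs and weights, chosen only for illustration.
x = [1.0, 0.5, -1.0]
w = [0.2, 0.4, 0.4]

# Here I = 0.2 + 0.2 - 0.4 = 0, and the logistic function maps 0 to 0.5.
v = neuron_output(x, w, logistic)
print(v)
```

Passing the transfer function as an argument keeps the weighted sum separate from the choice of f, which mirrors the two-stage description above.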

Biological Neurons (cont.)

• When the inputs reach some threshold, an action potential (electrical pulse) is sent along the axon to the outputs.

• The pulse spreads out along the axon, reaching the synapses and releasing transmitters into the bodies of other cells.

• Learning occurs as a result of the synapses’ plasticity: they exhibit long-term changes in connection strength.

• There are about $10^{11}$ neurons and about $10^{14}$ synapses in the human brain (!)

• A neuron may connect to as many as 100,000 other neurons.

Brain structure

• We still do not know exactly how the brain works. For example, we are born with about 100 billion neurons in our brain; many die as we progress through life and are not replaced, yet we continue to learn.

• But we do know certain things about it. Different areas of the brain have different functions:
– Some areas seem to have the same function in all humans (e.g., Broca’s region: speech and language); the overall layout is generally consistent.
– Some areas vary in their function; also, the lower-level structure and function vary greatly.

[Figure: brain regions labelled by function: emotions, reasoning, planning, movement, and parts of speech; senses; hearing, memory, meaning, and language; vision and the ability to recognize objects]

Brain structure (cont.)

• We don’t know how different functions are “assigned” or acquired:
– Partly the result of the physical layout / connection to inputs (sensors) and outputs (effectors)
– Partly the result of experience (learning)

• We really don’t understand how this neural structure (a collection of simple cells) leads to action, consciousness and thought.

• Artificial neural networks are not nearly as complex as the actual brain structure.

Comparing brains with computers

• There are more neurons in the human brain than there are bits in computers.
• The human brain is evolving very slowly, whereas computer memories are growing rapidly.
• There are a lot more neurons than we can reasonably model in modern digital computers, and they all fire in parallel.
• A NN running on a serial computer requires hundreds of cycles to decide whether a single neuron will fire; in a real brain, all neurons do this in a single step. For example, the brain recognizes a face in less than a second, a task that takes a serial computer billions of cycles.
• Neural networks are designed to be massively parallel.
• The brain is effectively a billion times faster at what it does.

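| | Computer | Human Brain |
|---|---|---|
| Computational units | 1 CPU, $10^{5}$ gates | $10^{11}$ neurons |
| Storage units | $10^{9}$ bits RAM, $10^{11}$ bits disk | $10^{11}$ neurons, $10^{14}$ synapses |
| Cycle time | $10^{-8}$ sec | $10^{-3}$ sec |
| Bandwidth | $10^{9}$ bits/sec | $10^{14}$ bits/sec |
| Neuron updates/sec | $10^{5}$ | $10^{14}$ |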

Neural networks History

• McCulloch & Pitts (1943) are generally recognised as the designers of the first neural network.

• Many of their ideas are still used today (e.g., that a neuron has a threshold level and fires once that level is reached is still the fundamental way in which artificial neural networks operate).

• Hebb (1949) developed the first learning rule (on the premise that if two neurons were active at the same time, the strength of the connection between them should be increased).

• During the 50’s and 60’s many researchers, such as Minsky & Papert, worked on the perceptron (NN model).

• 1969 saw the death of neural network research for about 15 years.

• Only in the mid 80’s (Parker and LeCun) was NN research revived.

Artificial Neural Network

• (Artificial) neural networks are made up of nodes/units connected by links. Each unit has
– input edges, each link with a numeric weight
– output edges (with weights)
– an activation level (a function of the inputs)

• The computation is split into 2 components:
1. A linear component, called the input function ($in_i$), computes the weighted sum of the unit’s input values.
2. A non-linear component, called the activation function ($g$), transforms the weighted sum into the final value that serves as the unit’s activation value:

$$a_i = g(in_i) = g\Big(\sum_j W_{j,i}\, a_j\Big)$$

• Some nodes are inputs (perception), some are outputs (action).

Modeling a Neuron

$$in_i = \sum_j W_{j,i}\, a_j$$

Each unit does a local computation based on inputs from its neighbours, computes a new activation level, and sends it along each of its output links.

$a_j$: Activation value of unit j

$W_{j,i}$: Weight on the link from unit j to unit i

$in_i$: Weighted sum of inputs to unit i

$a_i$: Activation value of unit i

$g$: Activation function

Activation Functions

• Different models are obtained by using different mathematical functions for g.
• 3 common choices are:

Step(x) = 1 if x >= 0, else 0

Sign(x) = +1 if x >= 0, else –1

Sigmoid(x) = $1/(1+e^{-x})$

Here e is the base of the natural logarithm. The sigmoid is smooth, which helps when we try to minimize the error by adjusting the weights of the network.

For the step function, 1 represents the firing of a pulse down the axon, and 0 represents no firing.

t (threshold) represents the minimum total weighted input needed to cause the neuron to fire; the definitions above use t = 0.

[Figure: plots of the threshold (step) function and the logistic (sigmoid) function]
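The three activation functions can be written directly from their definitions. The following is a minimal sketch; the optional threshold argument `t` on `step` and the overflow-safe form of `sigmoid` are choices made for this example, not something the slides prescribe.

```python
import math


def step(x, t=0.0):
    """Threshold function: 1 (fire) if x reaches the threshold t, else 0."""
    return 1 if x >= t else 0


def sign(x):
    """Sign function: +1 if x >= 0, else -1."""
    return 1 if x >= 0 else -1


def sigmoid(x):
    """Logistic function 1 / (1 + e^-x), written to avoid overflow in exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe: x < 0, so exp(x) is at most 1
    return z / (1.0 + z)


for value in (-2.0, 0.0, 2.0):
    print(value, step(value), sign(value), sigmoid(value))
```

Step and Sign jump at the threshold, while the sigmoid changes smoothly between 0 and 1, which is what makes gradient-based weight adjustment possible.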

ANN (Artificial Neural Network) – Feed-forward Network

A collection of neurons forms a ‘layer’.

• Input layer – each neuron gets ONLY one input, directly from outside
• Hidden layer – connects the input and output layers
• Output layer – the output of each neuron goes directly to the outside

[Figure: feed-forward network with inputs X1 X2 X3 X4 and outputs y1 y2; information flows from the input layer through the hidden layer to the output layer]

Implementing logical functions

• McCulloch and Pitts: each of the Boolean functions AND, OR, and NOT can be represented by a unit with suitable weights and threshold.

• We can use these units to build a network to compute any Boolean function.

(t = threshold, or the value of the bias weight that determines the threshold needed to cause the neuron to fire)
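As an illustration, the three gates can be built from a single threshold unit. The weights and thresholds below (AND: weights 1, 1 with t = 1.5; OR: weights 1, 1 with t = 0.5; NOT: weight -1 with t = -0.5) are one common textbook choice and are assumed here, since the slide's figure is not available. The function names are hypothetical.

```python
def threshold_unit(inputs, weights, t):
    """Fire (return 1) when the weighted sum of the inputs reaches threshold t."""
    total = sum(w * a for w, a in zip(weights, inputs))
    return 1 if total >= t else 0


def and_gate(a, b):
    return threshold_unit([a, b], [1, 1], t=1.5)   # fires only when both are 1


def or_gate(a, b):
    return threshold_unit([a, b], [1, 1], t=0.5)   # fires when at least one is 1


def not_gate(a):
    return threshold_unit([a], [-1], t=-0.5)       # fires only when the input is 0


def xor_gate(a, b):
    """A small network of units: XOR = (a OR b) AND NOT (a AND b)."""
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

The `xor_gate` function shows the second bullet in action: XOR needs more than one unit, but it can be composed from units that each compute AND, OR, or NOT.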

Network Structure

There are 2 main categories of NN structure:

• Feed-forward/acyclic networks:
– Allow signals to travel one way only, from input to output. There is no feedback (no loops).
– Tend to be straightforward networks that associate inputs with outputs (e.g., pattern recognition).
– Usually arranged in layers: each unit receives input only from units in the preceding layer, with no links between units in the same layer.
– Examples: single-layer perceptrons, multi-layer perceptrons.

• Recurrent/cyclic networks:
– Feed their outputs back into their own inputs.
– Have directed cycles with delays.
– The links can form arbitrary topologies.

• The brain is a recurrent network: activation is fed back to the units that caused it.

Feed-forward example

$$a_5 = g(W_{3,5}\, a_3 + W_{4,5}\, a_4) = g\big(W_{3,5}\, g(W_{1,3}\, a_1 + W_{2,3}\, a_2) + W_{4,5}\, g(W_{1,4}\, a_1 + W_{2,4}\, a_2)\big)$$

By adjusting the weights, we change the function that the network represents: this is how learning occurs in a NN!

[Figure: simple NN with 2 inputs (units 1 and 2), 2 hidden units (3 and 4) and 1 output unit (5). The hidden units have no direct connection to the outside world.]
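The expression for a5 can be evaluated step by step in code. The sketch below assumes g is the sigmoid and uses made-up weight values; only the wiring (inputs 1 and 2, hidden units 3 and 4, output unit 5) comes from the equation.

```python
import math


def g(x):
    """Activation function; the sigmoid is assumed for this example."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(a1, a2, W):
    """Compute a5 for the 2-input, 2-hidden-unit, 1-output network.

    W maps a link (j, i) to the weight on the link from unit j to unit i.
    """
    a3 = g(W[(1, 3)] * a1 + W[(2, 3)] * a2)   # hidden unit 3
    a4 = g(W[(1, 4)] * a1 + W[(2, 4)] * a2)   # hidden unit 4
    a5 = g(W[(3, 5)] * a3 + W[(4, 5)] * a4)   # output unit 5
    return a5


# Hypothetical weights, for illustration only.
W = {(1, 3): 0.5, (2, 3): -0.4, (1, 4): 0.3, (2, 4): 0.8,
     (3, 5): 0.7, (4, 5): -0.6}

print(forward(1.0, 0.0, W))

# Changing a single weight changes the function the network computes.
W_changed = dict(W)
W_changed[(3, 5)] = 2.0
print(forward(1.0, 0.0, W_changed))
```

The same inputs produce a different output once one weight is changed, which is the sense in which adjusting weights changes the function the network represents.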

Perceptron

• A network with all inputs connected directly to the output.

• This is called a single-layer NN (neural network) or a perceptron network.

• It is a simple form of NN used to classify linearly separable patterns (i.e., if there are 2 classes, we can separate them with a line, with each class on a different side of the line).

Perceptron or a Single-layer NN

• A feed-forward NN with no hidden units.

• Output units all operate separately: no shared weights.

• First studied in the 50’s. Other networks were known about, but the perceptron was the only one capable of learning, and thus all research was concentrated in this area.

• A single weight only affects one output, so we can limit our study to a model with a single output unit (figure not reproduced).

• Notation can be simpler, i.e.

$$O = \mathrm{Step}_0\Big(\sum_j W_j I_j\Big)$$
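The slides do not spell out how a perceptron learns, so the sketch below assumes the classic perceptron learning rule, W_j ← W_j + α (T − O) I_j, where T is the target output. The learning rate `alpha`, the constant bias input I_0 = 1, and the AND training set are all assumptions made for this illustration.

```python
def step0(x):
    """Step function with threshold 0."""
    return 1 if x >= 0 else 0


def predict(weights, inputs):
    """O = Step_0(sum_j W_j * I_j); inputs[0] is the constant bias input."""
    return step0(sum(w * i for w, i in zip(weights, inputs)))


def train_perceptron(examples, alpha=1.0, max_epochs=100):
    """Apply the (assumed) perceptron rule until no example is misclassified."""
    weights = [0.0] * len(examples[0][0])
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + alpha * error * i
                           for w, i in zip(weights, inputs)]
        if mistakes == 0:
            break
    return weights


# AND is linearly separable; each input vector is (bias, x1, x2).
and_examples = [([1, 0, 0], 0), ([1, 0, 1], 0), ([1, 1, 0], 0), ([1, 1, 1], 1)]
and_weights = train_perceptron(and_examples)
print([predict(and_weights, inputs) for inputs, _ in and_examples])
```

Because AND is linearly separable, the rule settles on weights that classify all four examples correctly. The same loop run on XOR would reach `max_epochs` without ever getting all four right, since no single line separates its classes.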

Multilayer NN

• Network with 1 or more layers of hidden units
• Layers are usually fully connected
• Number of hidden units is typically chosen by hand

Summary

• Most brains have lots of neurons; each neuron ≈ linear threshold unit (?)
• Perceptrons (one-layer networks) are insufficiently expressive
• Multi-layer networks are sufficiently expressive; they can be trained by gradient descent, i.e., error back-propagation
• Many applications: speech, driving, handwriting, fraud detection, etc.
• The engineering, cognitive modelling, and neural system modelling subfields have largely diverged
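To make "trained by gradient descent, i.e., error back-propagation" concrete, here is a minimal sketch of one training step on a network with 2 inputs, 2 hidden units and 1 output. Several things are assumptions not stated in the slides: the sigmoid activation, the squared-error measure E = ½ (T − a5)², the learning rate, the absence of bias weights, and all numeric values.

```python
import math


def g(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(W, a1, a2):
    """Return the activations (a3, a4, a5); W["35"] is the weight from unit 3 to 5."""
    a3 = g(W["13"] * a1 + W["23"] * a2)
    a4 = g(W["14"] * a1 + W["24"] * a2)
    a5 = g(W["35"] * a3 + W["45"] * a4)
    return a3, a4, a5


def error(W, a1, a2, target):
    """Squared error E = 1/2 * (T - a5)^2 (an assumed error measure)."""
    return 0.5 * (target - forward(W, a1, a2)[2]) ** 2


def gradients(W, a1, a2, target):
    """Back-propagate the output error to get dE/dW for every weight."""
    a3, a4, a5 = forward(W, a1, a2)
    d5 = (a5 - target) * a5 * (1 - a5)      # error signal at the output unit
    d3 = d5 * W["35"] * a3 * (1 - a3)       # propagated back to hidden unit 3
    d4 = d5 * W["45"] * a4 * (1 - a4)       # propagated back to hidden unit 4
    return {"13": d3 * a1, "23": d3 * a2, "14": d4 * a1,
            "24": d4 * a2, "35": d5 * a3, "45": d5 * a4}


def train_step(W, a1, a2, target, rate=0.5):
    """One gradient-descent step: move each weight against its gradient."""
    grad = gradients(W, a1, a2, target)
    return {k: W[k] - rate * grad[k] for k in W}


# Hypothetical starting weights and a single training example.
weights = {"13": 0.5, "23": -0.4, "14": 0.3, "24": 0.8, "35": 0.7, "45": -0.6}
before = error(weights, 1.0, 0.0, 1.0)
after = error(train_step(weights, 1.0, 0.0, 1.0), 1.0, 0.0, 1.0)
print(before, after)
```

A full training run repeats this step over many examples; the sketch stops at one step so that the link between the error, the gradients, and the weight change stays visible.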