AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition,...

Transcript of AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition,...

![Page 1: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/1.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 1

An Efficient Joint Formulation for BayesianFace Verification

Dong Chen, Xudong Cao, David Wipf, Fang Wen, and Jian Sun

Abstract—This paper revisits the classical Bayesian face recognition algorithm from Baback Moghaddam et al. and proposesenhancements tailored to face verification, the problem of predicting whether or not a pair of facial images share the sameidentity. Like a variety of face verification algorithms, the original Bayesian face model only considers the appearance differencebetween two faces rather than the raw images themselves. However, we argue that such a fixed and blind projection mayprematurely reduce the separability between classes. Consequently, we model two facial images jointly with an appropriate priorthat considers intra- and extra-personal variations over the image pairs. This joint formulation is trained using a principled EMalgorithm, while testing involves only efficient closed-formed computations that are suitable for real-time practical deployment.Supporting theoretical analyses investigate computational complexity, scale-invariance properties, and convergence issues. Wealso detail important relationships with existing algorithms, such as probabilistic linear disciminant analysis (PLDA) and metriclearning. Finally, on extensive experimental evaluations, the proposed model is superior to the classical Bayesian face algorithmand many alternative state-of-the-art supervised approaches, achieving the best test accuracy on three challenging datasets,Labeled Face in Wild (LFW), Multi-PIE, and YouTube Faces, all with unparalleled computational efficiency.

Index Terms—Bayesian face recognition, face verification, EM algorithm

F

1 INTRODUCTION

FACE verification and face identification representtwo sub-problems of face recognition. The former

involves verifying whether or not two given facesbelong to the same person, while the latter answersthe question of which identity should be assigned toa probe face set given a gallery of candidates. In thispaper, we focus on the verification problem, whichis somewhat more widely applicable and lays thefoundation for challenging identification problems.

Bayesian face recognition [1] by Baback Moghad-dam et al. represents one successful algorithm thathas been applied to face verification. It formulates theverification task as a binary Bayesian decision prob-lem. Let HI represents the intra-personal hypothesisthat two faces x1 and x2 belong to the same subject,and HE is the extra-personal hypothesis that two facesare from different subjects. Then, the face verificationproblem amounts to classifying the difference ∆ =x1 − x2 as intra-personal variation or extra-personalvariation using the log-likelihood ratio statistic

r(x1, x2) = logP (∆ |HI )

P (∆ |HE ). (1)

This ratio can be considered as a probabilistic measureof similarity between x1 and x2 for the face verifica-tion problem. In [1], the two conditional probabilitiesin (1) are modeled as Gaussians and eigen-analysis isused for model learning and efficient computation.

• D. Chen X. Cao, D. Wipf, F. Wen and J. Sun are with the VisualComputing Group, Microsoft Research, Beijing, China.E-mail: {doch, xudongca, davidwip, fangwen, jiansun}@microsoft.com

Because of the simplicity and competitive per-formance [2] of Bayesian face recognition, furtherprogress has been made along this line of research. Forexample, Wang and Tang [3] propose a unified frame-work for subspace face recognition which decompos-es the face difference into three subspaces: intrinsicdifference, transformation difference, and noise. Byexcluding the transform difference and noise andretaining the intrinsic difference, better performanceis obtained. In [4], a random subspace is introduced tohandle the multi-model and high dimension problem.The appearance difference can be also computed inany feature space such as Gabor feature [5]. Instead ofusing a naive Bayesian classifier, a SVM is trained in[6] to classify the the difference face which is projectedand whitened in an intra-person subspace.

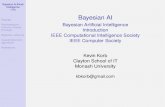

One commonality of all of these Bayesian face meth-ods is that discrimination is based solely on the differ-ence between a pair of face images. As illustrated bya 2D example in Figure 1, modeling the difference canbe viewed as first projecting all 2D points onto a 1Dline (X-Y) and then performing classification in 1D.While such a projection may retain some importantdiscriminative information, it may nonetheless reducethe separability of the classes. Therefore, the power ofBayesian face framework may be limited by discard-ing the discriminative information when we view twoclasses jointly in the original feature space.

In this paper, we propose to directly model the fulljoint distribution of {x1, x2} for the face verificationproblem in a similar Bayesian framework. We beginby introducing an appropriate parametric prior onface representations in Section 2, where each face

![Page 2: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/2.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 2

Y

X

X-Y

O

OClass 1

Class 2

Inseparable

}

Fig. 1: The 2-D data is projected to 1-D by x− y. The two classes,which are separable in the original joint representation, becomeinseparable after the projection. In the context of face verification,“Class1” and “Class2” would refer to the two hypotheses HI andHE .

feature is expressed as the summation of two indepen-dent Gaussian latent variables, one related to identity,and another that captures intra-personal variations.This prior allows us to obtain the joint distributionsof {x1, x2} under either HI or HE , leading to a closed-form expression for a log-likelihood ratio classificationrule analogous to (1).

Model parameters are estimated using the EM al-gorithm in Section 3, while low-rank and invarianceproperties germane to efficient training and testing arerigorously explored in Section 4. In Section 5, we nextscrutinize similarities and differences between theproposed algorithm and probabilistic linear discrimi-nant analysis (PLDA) [7], [8], a widely-used techniquefor face verification that is based on a related proba-bilistic factor analysis model. This is followed by fur-ther analytical comparisons with metric learning [9],[10], [11], [12] and reference-based methods [13], [14],[15], [16] in Section 6. Finally, extensive empirical val-idations are presented in Section 7, where we demon-strate state-of-the-art face verification performance onchallenging datasets: WDRef [17], Labeled Face inWild (LFW) [18], Multi-PIE [19], and YouTube Faces[20]. All proofs are deferred to Section 8. Overall ourprimary high-level contributions are summarized asfollows:

• The derivation and theoretical analysis of arobust, practical face verification system thatis well-suited for large-scale, real-time environ-ments.

• The detailed situating of this algorithm in thecontext of existing methods and theory, pinpoint-ing important distinctions that lead to improve-ments in both accuracy and efficiency. For ex-ample, we rigorously investigate the connectionwith PLDA, highlighting previously unexamineddistinctions in the corresponding update rulesthat can impact performance. We envision that

this analysis may have wider consequences inprobabilistic models with related parameteriza-tions.

• The empirical demonstration of face verificationaccuracy exceeding state-of-the-art algorithms onchallenging benchmark data.

Note that portions of this work appeared in recentconference proceedings [17] and to a lesser extent [21];however, here we include full proofs and algorithmderivations, expanded empirical results, and new the-oretical analyses and comparisons.

2 OUR APPROACH: A JOINT FORMULATION

In this section, we first present a naive joint formula-tion and then introduce our proposed alternative andattendant model learning algorithm.

2.1 A naive formulationA straightforward joint formulation is to directlymodel the joint distribution of {x1, x2} as a Gaus-sian. Thus, we have P (x1, x2|HI) = N(0,ΣI) andP (x1, x2|HE) = N(0,ΣE), where covariance matrixesΣI and ΣE can be estimated from a large canon ofintra-personal pairs and extra-personal pairs respec-tively. The mean of all faces is first subtracted as apreprocessing step. At test time, the log-likelihoodratio between P (x1, x2|HI) and P (x1, x2|HE) is usedas the similarity metric just as in (1). As will beseen in later experiments, such a naive formulationis moderately better than the original Bayesian facealgorithm.

Estimating the covariance matrices ΣI and ΣE di-rectly from the data may be ineffective though be-cause of two factors. First, if face images x1 and x2 arerepresented by d-dimensional features, then the naiveformulation requires the estimation of joint covariancematrices of size 2d×2d. This size could be prohibitive-ly large for robust estimation with limited trainingsamples. Secondly, because training samples may notbe entirely independent, the estimated ΣE may not beblock diagonal, although ideally it should be if x1 andx2 are truly statistically independent samples fromdifferent subjects. To address these issues, we nextintroduce a simple prior on the face representationto form a more robust joint Bayesian formulation.

2.2 A joint formulation with face priorAs assumed by previous models [8], [22], [23], [24], theappearance of a face can be well-approximated by twoadditive factors: identity and intra-personal variation.A face is represented by the sum of two independentGaussian variables

x = µ+ ε, (2)

where x is the observed face feature vector withthe mean of all faces subtracted, µ represents and

![Page 3: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/3.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 3

identity component, and ε is remaining intra-personalvariations that reflect differences in lighting, pose,and expression within a given identity. The latentvariables µ and ε are distributed independently asN (0, Sµ) and N (0, Sε) respectively, where Sµ and Sε

are two unknown covariance matrixes. Together thesedistributions constitute a prior distribution on faces.

Given this prior, the joint distribution of {x1, x2}is Gaussian with zero mean under either HE or HI .Based on the linear form of (2) and the independentassumption between µ and ε, the covariance of twofaces is

cov(xi, xj) = cov(µi, µj) + cov(εi, εj),i, j ∈ {1, 2}.

(3)

Under hypothesis HI , the identity µ1, µ2 of the pairare the same and their intra-person variations ε1,ε2 are independent. Consequently, P (x1, x2|HI) is azero-mean Gaussian with covariance

ΣI =

[Sµ + Sε Sµ

Sµ Sµ + Sε

].

In contrast, assuming HE , both the identities andintra-person variations are independent. Hence, thecovariance matrix of the distribution P (x1, x2|HE) is

ΣE =

[Sµ + Sε 0

0 Sµ + Sε

].

Given these two conditional joint probabilities forHI and HE , the log-likelihood ratio r(x1, x2) can beobtained in closed-form after a series of linear algebramanipulations resulting in

r(x1, x2) = logP (x1, x2|HI)

P (x1, x2|HE)

= xT1 Ax1 + xT

2 Ax2 − 2xT1 Gx2,

(4)

where

A = (Sµ + Sε)−1 − (F +G),

and F and G satisfy[F +G G

G F +G

]=

[Sµ + Sε Sµ

Sµ Sµ + Sε

]−1

. (5)

Note that constant factors have been omitted from (4)for simplicity.

From (4) we have a candidate test statistic for faceverification which only depends on estimating thed × d covariance matrices Sµ and Sε, which are ofsignificantly lower dimension than the requirementsfrom the naive joint formulation. However, we stillrequire a careful learning procedure to ensure reli-able estimates. In the forthcoming sections we willderive an efficient algorithm along with complemen-tary analyses and justifications. Highlights include thefollowing:

1) The matrices A and G in (4) produced by theproposed training pipeline will ultimately be

negative semi-definite and low rank, enablinga highly efficient testing implementation (seeSection 4.2).

2) Both the learning procedure for the matrices Sµ

and Sε, and the resulting log-likelihood ratiometric r, are invariant to any invertible lineartransform of the face features, implying reducedmanual intervention (see Section 4.3).

3) While perhaps deceptively simple upon firstinspective, we carefully examine important char-acteristics that differentiate the proposed JointBayesian model from existing face verificationalgorithms including PLDA, metric learning,and reference-based methods (see Sections 5 and6).

3 PARAMETERS ESTIMATION

As described in the previous section, the unknownparameters that we need to learn are the covariancematrices of identity and intra-person variation Θ ={Sµ, Sε}. In this section, we develop an EM algorithmto estimate the covariances by maximizing the log-likelihood function.

3.1 The log-likelihood function

Due to the independence of each subject, the overalllog-likelihood function is the summation of the log-likelihood function of each individual subject. There-fore our goal will be to solve

minSµ,Sε

−∑

i logP (xi|Sµ, Sε) (6)

s.t. Sµ ≽ 0, Sε ≽ 0

The likelihood term P (xi|Sµ, Sε) for subject i is de-rived based on the following generative process. Anidentity factor µi is first drawn from N(0, Sµ). Subse-quently mi i.i.d. intra-person variations [εi1; ...; εimi

]are drawn from N(0, Sε). The observed mi samplesare then given by the stacked vector xi = [µi +εi1; ...;µi + εim]. In matrix notation we have

xi = Qihi, where Qi =

I I 0 · · · 0I 0 I · · · 0...

......

. . ....

I 0 0 · · · I

, (7)

where the identity and intra-person variations arestacked into a column vector hi = [µi; εi1; · · · ; εimi ],the distribution of which is a Gaussian with a block-diagonal covariance matrix Σhi = diag(Sµ, Sε, ..., Sε).From this generative process, it follows that the like-lihood function of the ith subject is

P (xi|Sµ, Sε) = N(0,Σxi), where Σxi = QiΣhiQTi , (8)

where the covariance matrix Σxi has been constructedwith the unknown parameters Θ = {Sµ, Sε}.

![Page 4: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/4.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 4

Minimizing (6) with P (xi|Sµ, Sε) given by (8) ischallenging both because of the constraints needed toenforce proper covariances and the underlying non-convexity of the problem. Consequently, we developan EM algorithm for this purpose as described next.

3.2 EM algorithmInstead of directly maximizing the log-likelihood us-ing a brute force technique such as gradient descent,the EM algorithm introduces additional hidden or la-tent variables into the likelihood, the values of which,if known, would greatly simplify the optimization[25]. At each iteration, the expected log-likelihoodof both observed and hidden variables is computedwith respect to the conditional distribution of thehidden variables (E-step), and then the parameters areupdated by maximizing the resulting functional (M-step). We now unpack each step in detail.

E-step. For our purposes, we choose the identityand intra-person variations hi = [µi; εi1; · · · ; εim] asthe hidden variables and consider the joint distribu-tion P (xi,hi|Θ). As the observations xi are uniquelydetermined by the hidden variables hi in (7), the jointdistribution can be simplified into P (hi|Θ). Thereforethe expected negative log-likelihood function overhidden and observed variables reduces to

−∑i

EP (hi|xi,Θt) logP (hi|Θt+1), (9)

where the expectation is computed on the conditionaldistribution of P (hi|xi,Θt) at iteration t. Note the pa-rameters Θt in P (hi|xi,Θt) are known and used in theE-step for calculating the expectation. This is unlikethe parameters Θt+1 in the distribution P (hi|Θt+1)which are assumed to be unknown and updated inthe M-step below.

Given that the distribution of the hidden variablesis a Gaussian, we expand the expected log-likelihoodto produce the equivalent∑

i

log |Σhi |+ trace(Σ−1hi

E[hihTi ]), (10)

where E[·] is an abbreviated form for representing theexpectation computed on the conditional distributionP (hi|xi,Θt).

The expectation E[hihTi ] is the second-order mo-

ment of the conditional distribution P (hi|xi,Θt). Asshown in Section 8.1, the conditional distributionP (hi|xi,Θt) is a Gaussian with first- and second-ordermoments given by

E[hi] = ΣhiQTi Σ

−1xi

xi (11)

E[hihTi ] = Σh − ΣhQ

Ti Σ

−1x QiΣh + E[hi]E[hi]

T .(12)

The second-order moments can be simplified furtherusing E[hih

Ti ] ≈ E[hi]E[hi]

T . While the full E-stepwithout this approximation can actually be calculatedusing our model with limited additional computation,

in most practical situations we choose not to includethis extra term for several reasons. First, generalizedEM algorithms (of which this approximation is aspecial case) enjoy similar convergence properties toregular EM and are widely used in machine learn-ing and signal processing [26]. Secondly, we haveobserved empirically that the system performance isessentially identical with or without this additionalcovariance factor, largely because it tends to be negli-gibly small (several orders of magnitude smaller thanE[hi]E[hi]

T , and in certain quantifiable conditionsprovably equal to zero). And finally, removing thiscovariance leads to much more transparent analysisas discussed more in Section 4.2.

M-step. In the M-step, we solve for the unknownparameters Θt+1 by optimizing the expected log-likelihood in (10). As the covariance of the hiddenvariables Σhi

is a block diagonal matrix, the unknownSµ and Sε are effectively decoupled, and (10) can befurther simplified to∑

i

log |Sµ|+ trace(S−1µ E[µiµ

Ti ]) (13)

+∑i

∑j

log |Sε|+ trace(S−1ε E[εijε

Tij ])

where E[µiµTi ] and E[εijε

Tij ] are the block matrices at

the diagonal of E[hihTi ] in (11). We can then derive

the optimal parameters in a closed form by takingderivatives with respective to Sµ and Sε and equatingwith zero, leading to

Sµ = 1/n∑i

E[µiµTi ] (14)

Sε = 1/k∑i

∑j

E[εijεTij ], (15)

where n is the number of subjects, and k =∑

i mi

is the total number of all images of all subjects.The solution is therefore very intuitive: the optimalcovariance matrices are the sample covariances ofthe corresponding expected second-order momentsof the hidden variables. Overall then, the proposedEM algorithm handles the aforementioned difficultiesin optimizing the raw log-likelihood of the observeddata by decoupling the unknown parameters usinghidden data. This ultimately produces straightfor-ward, closed-form updates for the M-step.

3.3 Initialization

The proposed likelihood optimization problem (6) isnon-convex, and hence it is difficult to guarantee apriori that the global solution can be found except inspecial situations. The limiting case of large, equally-distributed samples is one such example.

Lemma 1: If the number of samples per subject mi =m is the same across all subjects, and m → ∞, then

![Page 5: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/5.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 5

the global minimum of (6) is obtained by

Sε =1

mn

∑i

∑j

(xij − xi)(xij − xi)T

Sµ =1

n

∑i

xixTi ,

where xi = 1/m∑

j xij .

The proof is deferred to Section 8.2. The asymptoticclosed-form solution is similar to the M-step of theaforementioned EM algorithm. The sample mean andsample residual can be viewed as the empirical ap-proximation of the true expected hidden identity andintra-person variation. In the extreme case describedby Lemma 1, the sample mean and residual graduallyapproach the true identity and intra-person variation,and the optimal estimation is the sample covariance.

However in practical scenarios it is intractable tocollect infinite samples for each subject. Moreover,it is rare to have an equivalent number of samplesfrom each subject, and inefficient to throw away extrasamples to artificially create such a balanced set. Con-sequently, the proposed EM algorithm, even thoughnon-convex, provides better estimates of the hiddendata as well as the unknown covariances comparedto raw empirical estimates. And we are always free toinitialize with the raw empirical estimates and thenpress for improvements through the EM iterations.Intuitively, the fewer samples per subject, and the moreheterogeneous the distribution of samples between sub-jects, the larger the margin of improvement affordedby the proposed EM algorithm.

Of course ultimately the EM algorithm, and thequality of the solutions it produces, is still potentiallyinitialization dependent. However, we have carefullytested the robustness of Joint Bayesian to a widevariety of initialization schemes for both Sµ and Sε,e.g., different combinations of sample between-class,sample within-class, and randomized covariance ma-trices). In all cases the algorithm converges to a so-lution with the same face verification accuracy, andtherefore local minima do not appear to be a problem.

4 TRAINING AND TESTING CONSIDERA-TIONS

In this section we detail several ways of improvingefficiency such that Joint Bayesian can be scaled tolarge-scale, practical application domains. We alsodiscuss an invariance property which simplifies thefeature selection process.

4.1 Efficient TrainingThe main computational cost of the EM algorithmis from computing (11) in the E-step, which requiresboth the inverse of a large covariance Σxi as well asexpensive matrix multiplications. The complexity of

computing the inverse of Σxi is O(d3m3i ), where d

is the dimension of feature and mi is the number ofsamples of the ith subject. For example, if d = 2, 000and mi = 40, the size of covariance matrix Σxi is80,000. It takes 25GB memory to store such a largematrix and 9 hours to compute its inverse.

Herein, we present an algorithm to reduce thecost by taking the advantage of block-wise structureembedded in Σxi . Given that

Σxi=

Sµ + Sε Sµ · · · Sµ

Sµ Sµ + Sε · · · Sµ

......

. . ....

Sµ Sµ · · · Sµ + Sε

.

it follows that Σ−1xi

has the same block-diagonal struc-ture

Σ−1xi

=

F +G G · · · G

G F +G · · · G...

.... . .

...G G · · · F +G

for some F and G. By plugging the above matricesinto the equation ΣxiΣ

−1xi

= I , we can solve for theunknown matrices giving

F = S−1ε

G = −(miSµ + Sε)−1SµS

−1ε .

During training, we only need to compute and storeF and G. Compared to directly evaluating Σ−1

xi, the

computational complexity is reduced from O(d3m3i )

to O(d3)

and the storage complexity from O(d2m2i ) to

O(d2), where d is the feature dimension. This leads to

substantial reductions, for example, if d = 2, 000 andmi = 40, the running time is reduced from 9 hoursto 0.5 seconds and the storage cost is reduced from25GB to 16MB.

We can also accelerate the required matrix mul-tiplication by taking the advantage of this block-wise structure. The expected hidden variables can beefficiently computed as

E[µi] = Sµ(F +miG)∑j

xij

E[εij ] = SεFxij + SεG∑j

xij .

Here the multiplication of large square matrices (ofsize dmi) have been decomposed into the multipli-cation of the small block matrices (of size d), againleading to significant computational savings. With allof these considerations in mind, the overall trainingpipeline of Joint Bayesian is shown in Table. 1.

4.2 Efficient testingAs described in Section 2.2, the closed-form solutionof the log-likelihood ratio test statistic is,

r(x1, x2) = xT1 Ax1 + xT

2 Ax2 − 2xT1 Gx2. (16)

![Page 6: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/6.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 6

Input

• The training samples {xij}; The number of subjects n; Thenumber of the samples of the ith subject mi; The totalnumber of the samples of all subjects k.

Output

• Joint Bayesian model parameters Θ = {Sµ, Sε}.

Joint Bayesian Learning

1) Initialize Sµ and Sε with the sample covariances.2) Calculate the expectation of the hidden variables of each

subject (E step):

F = S−1ε

G = −(miSµ + Sε)−1SµS

−1ε

E[µi] = Sµ(F +miG)∑j

xij

E[εij ] = SεFxij + SεG∑j

xij

E[µiµTi ] = E[µi]E[µi]

T

E[εijεTij ] = E[εij ]E[εij ]

T

3) Update the model parameter (M step):

Sµ =1

n

∑i

E[µiµTi ]

Sε =1

k

∑i,j

E[εijεTij ]

4) Repeat step 2) and step 3) until convergence (5 iterationsis usually sufficient).

TABLE 1: Efficient training pipeline of Joint Bayesian algorithm.Note the matrix F and G can be pre-computed to speed up thetraining. These matrices only depend on the number of samplesof a given subject, and we can pre-compute all possible F and G(for all unique values of mi) at the beginning of each iteration, andretrieve the right one to use according to the number of samples ofeach subject. This saves considerable computation whenever manyof the subjects share the same number of training instances.

Direct calculation requires computations of orderO(d2), where d is the feature dimension. This canbe prohibitively high in many practical scenarios thatrequire comparing a query image with all of the refer-ence images in a large database. Fortunately, the JointBayesian framework provides a natural mechanismfor accelerating testing calculations considerably. Thisis ultimately possible because estimates of Sµ tend tobe low rank, which then leads to low rank values for Aand G, culminating in potentially orders of magnitudereductions in computational complexity.

We will now justify these claims in detail. To beginwe have the following:

Lemma 2: The matrices A and G satisfy

A = −PAPTA , with rank[PA] ≤ rank[Sµ]

G = −PGPTG , with rank[PG] ≤ rank[Sµ]

for some matrices PA and PG respectively.

The proof of this lemma is included in Section 8.3.Consequently, if Sµ happens to be low rank, then

Input

• Joint Bayesian model parameters Θ = {Sµ, Sε}, and twotesting samples x1 and x2.

Output

• The similarity of two testing samples r(x1, x2).

Pre-computation

1) Calculate the matrices A and G used in the log-likelihoodratio function:

A = (Sµ + Sε)−1 −

[(Sµ + Sε)− Sµ(Sµ + Sε)

−1Sµ]−1

G = −(2Sµ + Sε)−1SµS

−1ε

2) Decompose −A and −G into two low rank matrices PA

and PG ({PA, PG} ∈ Rd×s and s < d) using SVD toproduce:

PAPTA ≈ −A

PGPTG ≈ −G

Computing Log-Likelihood Ratio

1) Project testing samples with PA and PG:

ai = PTA xi

gi = PTGxi

2) Calculate the log-likelihood ratio of two samples to mea-sure similarity:

r(x1, x2) = 2gT1 g2 − aT1 a1 − aT2 a2

TABLE 2: Efficient testing pipeline of Joint Bayesian algorithm.

both A and G can be expressed using convenient low-rank factorizations to reduce the complexity of testing.Specifically, we plug the low-rank factorization intothe log-likelihood ratio statistic (16) and end up withthe modified decision function

r(x1, x2) = 2gT1 g2 − aT1 a1 − aT2 a2, (17)

where ai = PTAxi, and gi = PT

Gxi (for i = 1, 2) are low-dimensional features obtained by linear projection.Define s = rank[Sµ]. Then the complexity of thislinear projection is no more than O(ds), and thecomplexity of the new decision function is at mostO(s). Therefore the overall complexity is O(ds), whichcan be considerably smaller with respect to O(d2).

Importantly, the speedup will be even more signif-icant in the task of face identification and face search,where we need to compute the log-likelihood ratio asa measurement of similarity between an input querysample and all reference samples in the database. Inthis situation, we can off-line pre-compute the low-dimension features for all samples in the referencedatabase. Given a query sample, we only need to on-line compute its low-dimension feature once and thenthe new low-cost decision function (similarity metric)from (17) across the entire reference database. Thiscan lead to multiple orders of magnitude speedup ifthere are many samples in the reference dataset. Forexample, if d = 2000, s = 200, and N = 1, 000, 000,

![Page 7: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/7.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 7

its takes 2 hours for the direct implementation togo through the entire database, but it only takes 7seconds for the efficient testing, over one thousandtimes speedup. A single end-to-end verification teston a pair of images can be done in milliseconds usingstandard hardware. The efficient testing pipeline ofthe Joint Bayesian is shown in Table 2.

But of course these efficiencies hinge on Sµ actuallybeing low rank. Consequently, for this overall lineof reasoning to have merit it is essential that wequantify why this property is likely to hold. For thispurpose we introduce some additional notation. LetX denote the d × k matrix of all image features fromall subjects, with the j-th column xj representing thefeature vector of image j. Also, define Φ to be the n×kmatrix with i-th row given by all zeros except a vectorof mi ones starting at element index ei =

∑i−1r=1 mr+1.

We may then express the relationship between alllatent and observed variables using X = E+MΦ. Wenow have the following:

Lemma 3: With E[hihTi ] = E[hi]E[hi]

T , the iter-ations from Table 1 are guaranteed to reduce (orleave unchanged once a fixed point is reached) theminimization problem

minE,M

n log |Sµ|+ k log |Sε| (18)

s.t. X = E+MΦ,

Sµ = 1nMMT , Sε =

1kEE

T .

The proof can be found in the supplementary mate-rial from [21]. As discussed previously (and supportedby a vast battery of empirical tests), the approximationE[hih

Ti ] ≈ E[hi]E[hi]

T does not significantly affectestimation results. Hence the Joint Bayesian estimatorcan be well-characterized by a linearly constrainedoptimization problem, with log-det penalties on therespective covariances Sµ and Sε. Such a penaltyfunction favors low-rank solutions given that it isa concave, nondecreasing function of the embeddedsingular values [21]. However, even with the exactcomputation for E[hih

Ti ], it can be shown that Join-

t Bayesian still includes a concave, nondecreasingpenalty function on the singular values of Sµ (albeitthe constraint surface is no longer linear), so regard-less of any approximation singular values of Sµ arefavored to be shrunk to zero where possible.

To summarize then, the EM algorithm adopted byJoint Bayesian is likely to produce low-rank estimatesfor Sµ, which subsequently leads to low-rank esti-mates for A and G, which then ultimately facilitatesextremely efficient testing via simple low-dimensionalprojections.

4.3 Invariance to Invertible Linear Transforms

We conclude this section by formalizing how the pro-posed Joint Bayesian training and testing pipelines,

as implemented via Table 2 and Table 1 respectively,display a desirable form of feature invariance.

Lemma 4: Let W denote an arbitrary invertible lin-ear transform of the d-dimensional face features, andlet Wi = diag(W, . . . ,W ) denote a block diagonalmatrix with mi+1 blocks. Then we have the following:

1) Training invariance: If Sµ and Sε represent a glob-al minimum of (6), then WSµW

T and WSεWT

are an optimal solution to

minSµ,Sε

−∑

i logP (Wixi|Sµ, Sε) (19)

s.t. Sµ ∈ H+, Sε ∈ H+.

2) Testing invariance: The likelihood ratio statisticfrom (4) satisfies r(x1, x2) = r(Wx1,Wx2).

The proof of this lemma is included in Section 8.4.This result, which is equally valid with or without theapproximation E[hih

Ti ] = E[hi]E[hi]

T , then impliesthat we need not be concerned about linear transfor-mations of the features, nor with motivating what theoptimal feature representation actually is. It is worthnoting that these desirable invariance properties arequite unlike other sparse or low-rank models thatincorporate, for example, convex penalties such as theℓ1 norm or the nuclear norm. With these penalties aninvertible linear transform would lead to an entirelydifferent decision rule and therefore different classifi-cation results. Hence significant manual involvementmay be required to determine the optimal W .

5 CONNECTIONS WITH PLDAProbabilistic linear discriminant analysis (PLDA) [8] isa widely used technique for face verification and othercomputer vision tasks. Although derived from theperspective of factor analysis models, it shares manycommonalities with the Joint Bayesian frameworkdescribed herein. Consequently, this section outlinessimilarities and differences that practically affect be-havior both in the context of face verification andbeyond.

5.1 Cost Function ComparisonsJoint Bayesian and PLDA training both involve min-imizing the negative log-likelihood of the trainingsamples under Gaussian distributional assumptionsand with independence across subjects. Specifically,we must solve

minΘ∈C

−∑i

logP (xi|Θ), (20)

where as before, xi denotes the stacked vector of alltraining exemplars from subject i, and Θ agglomeratesall model parameters, and C is a potential constraintset. For the Joint Bayesian model, Θ = {Sε, Sµ}, andP (xi|Θ) is given by (8).

![Page 8: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/8.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 8

In contrast, the PLDA model is parameterized asfollows. The j-th sample from subject i is expressedas

xij = Bzi +Rwij + νij , (21)

where

P (zi) = N(0, I), P (wij) = N(0, I), P (νij) = N(0, Sν).

Here B and R are low-rank matrices while Sν isa diagonal covariance. Per these specifications, theinput signal xij is considered to consist of two parts:an identity component Bzi, and intra-personal varia-tions Rwij + νij . Given the above, xij and xi are alsoGaussians given by

P (xij |B,R, Sν) = N(0, BBT +RRT + Sν) (22)

and

P (xi|B,R, Sν) = N(0, QΨΨTQT +Σν). (23)

respectively, where Σν = diag(Sν , . . . , Sν) and Ψ =diag(B,R, . . . , R). While technically Q, Ψ, and Σν

all depend on i, we omit this subscript to simplifynotation.

The likelihood function (23) is closely connectedwith the Joint Bayesian counterpart from (8). In par-ticular, after a few linear algebra manipulations, itis easily shown that when Sµ = BBT and Sε =RRT +Sν , then QΨΨTQT +Σν = QΣhQ

T , and hencethe overall likelihoods and underlying cost functionsbecome exactly equivalent. Consequently, in this re-spect PLDA can be formally viewed as applying amore constrained parameterization to the same JointBayesian cost function,1 and for training purposesboth rely on the EM algorithm. At the testing phasethis relationship is also equivalently maintained. Wewill now examine previously unexplored reasons forwhy the looser parameterization and associated EMalgorithm adopted by Joint Bayesian may be prefer-able for many applications such as face verification.Later in Section 7 we will present complementaryempirical evidence for these claims.

5.2 The Effects of Different ParameterizationsThe PLDA model requires that we choose the dimen-sionality, or number of columns, in B and R. Conse-quently, additional user involvement may be requiredfor optimizing these factors. In contrast, the JointBayesian model implicitly assumes that Sµ and Sε,and therefore equivalently B and R, are of unrestrict-ed and unknown rank. The natural mechanism for fa-voring lower rank estimates, to the extent allowed bythe data as discussed in Section 4.2, then effectively al-lows the Joint Bayesian model to learn the appropriatedimensionality without user tuning. Therefore, unless

1. Note that we are assuming the data have zero-mean after astandard centering operation, so the extra mean factor from [8] canbe omitted here.

we somehow know the true intrinsic dimensionality apriori, the less-constrained parameterization used byJoint Bayesian may be advantageous.

Of course we could always consider running thePLDA algorithm and its associated EM algorithm withB and R initialized to be full rank. In this context,we might expect PLDA and its now equivalent log-likelihood function to inherit the same rank minimiza-tion properties from Section 4.2 as Joint Bayesian lead-ing to similar performance and efficiencies. However,even when B and R are full rank, there remain im-portant differences in the underlying EM algorithmsthat minimize the Joint Bayesian and PLDA objectivesas we will now describe.

First, we note that if R is full rank in the PLDAmodel, it follows that Sν is then formally uniden-tifiable in the strict statistical sense, meaning thatmultiple parameterizations lead to an equivalent like-lihood model and associated intra-personal variationcomponent. Hence we will consider the behavior ofPLDA in the limit as Sν → 0, equivalent to the as-sumption employed by Joint Bayesian. In this regime,Joint Bayesian and PLDA cost functions are essentiallyidentical, with Sµ = BBT and Sε = RRT bothfull rank by assumption at initialization. However,while the cost functions are the same, the partitioninginto hidden data, as required by the associated EMalgorithms, are not. In particular, the hidden dataof PLDA, denoted as yi = [zi;wi1; · · · ;wiJ ] for thei-th subject, is related to that of Joint Bayesian viahi = Ψyi, ∀i. This leads to different upper boundsfor minimizing the negative log-likelihood functionsat each iteration of PLDA.

To see this, we carefully revisit the E and M stepsas derived in [8] for PLDA in the limit as Sν → 0. Therelationship between the latent variables yi and theobservations xi for the i-th subject is defined by

xi = Ayi

A =

B R 0 · · · 0B 0 R · · · 0...

......

. . ....

B 0 0 · · · R

,

and we again omit a subscript i on A here for sim-plicity. For the E-step, the required moments are

E[yi] = AT (AAT )−1xi (24)

E[yiyTi ] = I −AT (AAT )−1A+ E[yi]E[yi]

T , (25)

where the expectation is with respect to P (yi|xi,Θ).These are related to those of Joint Bayesian via

E[hi] = ΨE[yi] and E[yiyTi ] = ΨE[hih

Ti ]Ψ

T , (26)

and equivalent to those in [8] after application of thematrix inversion lemma. For the M-step, PLDA must

![Page 9: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/9.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 9

update the model parameters to find some new B andR that maximize

E

[∑i

logP (yi, xi|B, R)

]≡∑i

E[logP (xi|yi, B, R)

].

(27)This is then equivalent to minimizing∑

i

E[∥xi − Ayi∥

22

]=∑i

trace(xixTi − 2xiE[yi]T AT + AE[yiy

Ti ]A

T )

=∑i

trace(xi − AE[yi])(xi − AE[yi])T )

+ ntrace(A(I −AT (AAT )−1A)AT )

(28)

over A defined analogously to A but using B and R.It is easily shown that both (xi−AE[yi])(xi−AE[yi])

T

and A(I−AT (AAT )−1A)AT are positive semi-definitematrices. Therefore, the value of (28) is greater thanor equal to zero for any A. However, when A = A,

xi −AE[yi] = xi −AAT (AAT )−1xi = 0

andA(I −AT (AAT )−1A)AT = 0,

where the second equality follows from the fact thatI−AT (AAT )−1A is a projection operator to the orthog-onal complement of range[AT ]. Therefore, both thefirst and second term in (28) are minimized by A = A.Moreover, it can be shown that this minimizer isunique when there are a sufficient number of trainingexamples in general position. Hence {B = B, R = R}uniquely optimizes the M-step for all B and R, andthe EM-algorithm stalls upon a single iteration.

Note that there exists a pervasive perception thatthe EM algorithm is guaranteed to converge to a localoptimum of the log-likelihood function, which seemsto contradict the claim here of zero convergence awayfrom any starting point. However, the paradox isresolved once we understand that EM is only actuallyguaranteed to produce a series of iterates satisfying

log p(x|θt) ≤ log p(x|θt+1) (29)

for any general likelihood function p(x|θ). Strict con-vergence to local minima (or stationary points) re-quires further assumptions [27]. In particular, theconditions for Zangwill’s Global Convergence Theo-rem must be satisfied [28]. These conditions require,among other things, that

log p(x|θt) < log p(x|θt+1) (30)

for all θt which are not local minima. This then explainswhy, with Sν → 0, the PLDA algorithm becomesimmediately stuck after a single iteration even thoughthe gradient of the log-likelihood is far from zero.Additionally, even with Sν > 0, if Sν is small theconvergence rate becomes prohibitively slow.

To summarize then, for face verification (as well asmany other applications) it may be valuable to allowthe data to determine the appropriate dimensional-ity of the approximate low-dimensional subspacesrelevant for maximal discrimination, as opposed torequiring heuristic manual selections. This then favorsthe looser parameterization of Joint Bayesian, whichsubsequently requires the Joint Bayesian variant ofEM to better ensure convergence to meaningful so-lutions. Note that this previously unexamined dis-tinction between parameterizations and attendant EMalgorithms, and the convergence issues that result,likely has wide-ranging implications in numerousother applications of Bayesian-inspired factor analysismodels.

6 RELATIONSHIPS WITH OTHER WORKS

In this section, we discuss the connections betweenour joint Bayesian formulation and two other types ofleading supervised methods which are widely used inface recognition.

6.1 Connection with metric learningMetric learning [9], [10], [11], [12], [29] applied to facerecognition has recently attracted significant attention.The goal of metric learning is to find a new metricunder which two classes are more separable. Oneimportant example involves learning a Mahalanobisdistance

(x1 − x2)TM (x1 − x2) , (31)

where M is a positive definite matrix. However, this s-trategy shares the same drawback of the conventionalBayesian faces. Both first project the joint representa-tion to a lower dimension using the transform [I,−I].As already discussed, this transformation may reducethe separability and degrade the accuracy.

In contrast, the proposed joint formulation metricfrom (4) faces no analogous restriction. To clarify thispicture, we reformulate (4) as

(x1 − x2)TA (x1 − x2) + 2x1

T (A−G)x2. (32)

Comparing (31) and (32), we observe that the joint for-mulation provides additional freedom for construct-ing the discriminant surface. Consequently, the newmetric could be viewed as a more general distancewhich better preserves the separability.

Ultimately, there are two components in the pro-posed metric from (4): the cross inner term xT

1 Gx2

and two norm terms x1TAx1 and x2

TAx2. To in-vestigate the differing roles these terms may occupy,we performed a preliminary experiment under fiveconditions with changing conditions for A and G:a) use the original A and G as estimated by JointBayesian; b) set A → 0; c) set G → 0 ; d) setA → G; e) set G → A. The experimental design andresulting classification percentages are shown in Table

![Page 10: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/10.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 10

Experiments AccuracyA and G 87.5%A → 0 84.93%G → 0 55.63%A → G 83.73%G → A 84.85%

TABLE 3: Roles of A and G in the log-likelihood ratio metric. Bothtraining and testing are conducted on LFW data following the unre-stricted protocol. SIFT features are used followed by dimensionalityreduction to d = 200 via PCA. Readers can refer to Section 7 formore detailed information and testing of Joint Bayesian.

3. Rigorous empirical validation of Joint Bayesian isdeferred to Section 7.

Not surprisingly, most of discriminative informa-tion lies with the inner product term x1

TGx2; howev-er, the norms x1

TAx1 and x2TAx2 also play significant

roles. They serve as image-specific adjustments to thedecision boundary.

In summary, a variety of different algorithmshave been designed for learning discriminative Ma-halanobis distances, but relatively few investigatemore general forms such as that produced by Join-t Bayesian. Other recent research explores a usefulmetric based on cosine similarity by discriminativelearning [30]. As future work we plan to considerlearning a log-likelihood ratio metric in a similardiscriminative fashion.

6.2 Connection with Reference Based Methods

Reference-based methods [13], [14], [15], [16] gauge aface by its similarities to a set of reference faces. Forexample, in [13], each reference is represented by aSVM classifier which is trained from multiple imagesof the same person, and the SVM score is used as thesimilarity.

From a Bayesian viewpoint, if we model eachreference as a Gaussian with mean µi and a com-mon covariance, then the similarity from a facex to each reference is the conditional likelihoodP (x |µi ). Given n references, x can be representedas [P (x |µ1 ) , ..., P (x |µn )]. With this reference-basedrepresentation, we can define the similarity betweentwo faces {x1, x2} as the log-likelihood ratio

log

(1n

∑ni=1 P (x1|µi)P (x2|µi)(

1n

∑ni=1 P (x1|µi)

) (1n

∑ni=1 P (x2|µi)

)) . (33)

If we consider that the references are infinite andindependently sampled from a distribution P (µ), theabove equation can be rewritten as

log

( ∫P (x1|µ)P (x2|µ)P (µ)dµ∫

P (x1|µ)P (µ)dµ∫P (x2|µ)P (µ)dµ

). (34)

Now furthermore assume that

P (µ) = N (0, Sµ) (35)P (x|µ) = N (µ, Sε)

Then we have∫P (x|µ)P (µ)dµ = P (x) = N (0, Sµ + Sε)∫

P (x1|µ)P (x2|µ)P (µ)dµ = P (x1, x2) = N(0,Σ)

Σ =

(Sµ + Sε Sµ

Sµ Sµ + Sε

). (36)

It is now obvious from (36) that the numerator of (34)is equal to logP (x1, x2|HI) and the denominator of(34) is equal to logP (x1, x2|HE). Therefore the twometrics are equivalent, and Joint Bayesian can beconsidered as a kind of probabilistic reference-basedmethod, with infinite Gaussian references.

7 EXPERIMENTS

In this section, we compare our Joint Bayesian ap-proach with conventional Bayesian faces and othercompetitive supervised methods on the four bench-marks LFW [18], WDRef [17], Multi-PIE [19], andYouTube Faces [20] datasets. We use LBP features [31]and high-dimensional LBP features [32] in all experi-ments.LBP feature. We first normalize the image to 100×100pixels using an affine transformation calculated basedon 5 landmarks (two eyes, noise and two mouth tips).Then the image is divided into 10×10 non-overlappedcells, and each cell within the image is mapped toa vector using LBP descriptors. All descriptors areconcatenated to form the final feature.High-dimensional LBP feature. We first rectify im-ages using a similarity transformation based on fivelandmarks (two eyes, nose, and mouth corners). Thenwe extract patches centered around 27 landmarks in5 scales. The side lengths of the image in each scaleare 300, 212, 150, 106, and 75. The patch size is fixedto 40×40 in all scales. We divide each patch into 4×4non-overlapped cells. Each cell is then mapped to avector using an LBP descriptor. Next all descriptorsare concatenated to form the final feature.

The feature dimensions of LBP and high-dimensional LBP are 5,900 and 127,440 respectively.We apply PCA to reduce the dimensionality ofthe raw feature to a feasible range for subsequentsupervised learning.

7.1 Comparison with other Bayesian face meth-odsIn the first experiment, we compare conventionalBayesian face recognition [1], Wang and Tang’s u-nified subspace work [3], the naive formulation dis-cussed in Section 2.1, and our Joint Bayesian algorith-m. The first two methods are based on the differencebetween a given face pair while the last two model thefull joint distribution. All algorithms are tested on twodatasets: LFW and WDRef. When testing with LFW,

![Page 11: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/11.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 11

600 900 1200 1500 1800 2100 2400 2700 30000.76

0.78

0.8

0.82

0.84

0.86

0.88

0.9

Identity Number

Acc

urac

y

Classic Bayesian [1]Unified Subspace [3]Naive FormulationJoint Bayesian

600 900 1200 1500 1800 2100 2400 27000.72

0.74

0.76

0.78

0.8

0.82

0.84

0.86

Identity Number

Acc

urac

y

Classic Bayesian [1]Unified Subspace [3]Naive FormulationJoint Bayesian

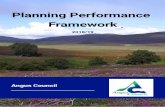

Fig. 2: Comparison with other Bayesian face related works. The joint Bayesian method is consistently better other a wide range of differenttraining data sizes and on two databases: LFW(left) and WDRef(right).

all identities in WDRef are used for the training. Wevary the number of identities n in the training datafrom 600 to 3000 to examine performance with respectto training data size. When testing with WDRef, wesplit the data into two mutually exclusive parts: 300different subjects are used for testing, the others arefor training. Similar to the standard LFW protocol,the test images are divided into 10 cross-validationsets and each set contains 300 intra-personal andextra-personal pairs. We use LBP features and re-duce the feature dimension by PCA to the algorithm-dependent dimension that performed best (d = 2000for joint Bayesian and unified subspace, d = 400 forBayesian faces and naive formulation methods).

As shown in Fig. 2, by enforcing an appropri-ate prior on the face representation, our proposedjoint Bayesian method performs substantially betteron various training data sizes. The unified subspacealgorithm is the next best by taking advantage ofsubspace selection over face differences, i.e. retain-ing the identity component and excluding the intra-person variation component and noise. We also notethat when the training data size is small, the naive for-mulation displays the worst performance, the reasonbeing that it must estimate more parameters in higherdimension using only sample averages. However, astraining data increases, the performance of conven-tional Bayesian and unified subspace method (whichboth rely only on the difference of face pair) graduallybegin to saturate. In contrast, the performance of thenaive joint formulation keeps increasing as the train-ing data increases. Its performance surpasses that ofthe conventional Bayesian method and is approachingthat of the unified subspace method. The trend of thejoint Bayesian method shares the same pattern as thenaive joint formulation but at a higher discriminationlevel demonstrating its overall efficacy.

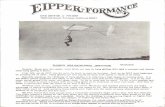

7.2 Comparison with LDA and PLDAIn this experiment, we compare Joint Bayesian withtwo subspace learning methods, LDA [22] and PL-

DA [8]. We use the same experimental setting asdescribed in section 7.1. LDA is based on our ownimplementation and PLDA is from the authors’ im-plementation [8]. In the experiments, the original LBPfeatures are reduced to the best dimension by PCA foreach method (2000 for all methods).

We also study the verification accuracy while vary-ing the sub-space dimensions. For PLDA, the sub-space dimensions are determined by the user-definedcolumn number of the matrices F and G in (21).For Joint Bayesian, the sub-space dimension is de-termined automatically by initializing the algorithmwith full-rank covariance matrices. However, we canartificially truncate the singular values of PA and PG

in (17) for the purpose of comparing with PLDA andLDA. Of course such truncation has no effect oncewe reach the range of zero-valued singular valueslearned by the Joint Bayesian with its preference forlow-rank representations. However, it is importantto emphasize that PLDA and LDA have a distinctadvantage by explicitly learning a new subspace foreach dimension. In contrast, for Joint Bayesian wehave learned only a single model with mostly zerovalued (or nearly so) singular values in the learnedPA and PG, which then automatically determines theintrinsic dimensionality.

As shown in Fig. 3, if the sub-space dimensionalityis too high or too low, the results of both LDAand PLDA will degrade. In the case of PLDA, thisdemonstrates that when the subspace dimensionalityis too low, the model is underfitting, and when itis too high, the EM algorithm is no longer well-posed for reasons described in Section 5. LDA alsodisplays a similar sensitivity and fails to fully exploithigher dimensional features. On the other hand, withJoint Bayesian once the dimensionality is sufficientlyhigh such that we are no longer artificially truncatingsignificant singular values, the performance remainsconstant. Overall, Joint Bayesian is independent ofany such manual tuning in a pracitcal setting andoperates naturally to learn appropriate discriminative

![Page 12: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/12.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 12

100 300 500 700 900 1100 13000.83

0.84

0.85

0.86

0.87

0.88

0.89

0.9

Sub−space Dimension

Acc

urac

y

LDA [22]PLDA [8]Joint Bayesian

100 300 500 700 900 1100 13000.77

0.78

0.79

0.8

0.81

0.82

0.83

0.84

0.85

Sub−space Dimension

Acc

urac

y

LDA [22]PLDA [8]Joint Bayesian

Fig. 3: Comparison with LDA and PLDA on LFW(left) and WDRef(right).

Algorithms LBP High-dim LBPBayesian Face [1] 82.65% 89.97%

LDA [22] 84.20% 91.37%PLDA [8] 84.67% 92.38%

Joint Bayesian 86.47% 92.95%

TABLE 4: Comparison over Multi-PIE dataset. Proposed methodachieves the best result with both LBP and High-dim LBP features.

subspaces. This property makes it very convenient fordeployment.

7.3 Comparison on Multi-PIE datasetWe now further evaluate the generalization abilityof our method. All algorithms are evaluated on theMulti-PIE dataset and trained on WDRef. Unlike theprevious LFW and WDRef datasets, Multi-PIE datais collected in a controlled laboratory environmentand displays considerable differences from image datacollected from the Internet such as LFW and WDRef.

We follow the settings in [32] and [33] which aresimilar to the LFW protocol. We randomly select 49test identities. Each identity contains 7 different posecategories and 4 illumination conditions. The posecategories range from -60% to +60% and the four illu-mination conditions are no-flash, left-flash, right-flashand left-right-flash. Then we randomly select 3000intra-personal and 3000 extra-personal pairs that areplaced in 10 folders for cross-validation evaluation. Asshown in Table 4, we achieve 92.95% which is abovethe other supervised learning methods.

7.4 Comparison on YouTube Faces datasetIn this section, we validate the proposed JointBayesian method on the task of video-level verifica-tion. Joint Bayesian measures the similarity of twoimages. To extend Joint Bayesian to measuring thesimilarity of two videos, we use the maximum sim-ilarity of all image pairs between the two videos asthe video-level similarity.

The YouTube Faces dataset [20] is a common bench-mark for evaluating video-level face verification. It

contains 3,425 videos of 1,595 different people. Onaverage, a face track from a video clip consists of181.3 frames of faces. We report comparative resultsunder two settings: a) the Youtube dataset is split fortraining and testing consistent with previous works[20][34][35]; b) the WDRef dataset is used for trainingwhile the Youtube dataset is reserved for testing.

When training on the YouTube dataset, we followthe unrestricted protocol in [20]. We divide 5000 videopairs into 10 splits, with each split containing 250intra-personal pairs and 250 inter-personal pairs. Thesubject identity labels are accessible during training.We randomly selected 10 frames from each trainingvideo to construct the training set. On average thereare around 30k frames of 1,400 persons for training ineach round. When training on the WDRef dataset, wefollow the same setting as described in section 7.1.

During testing, given a pair of videos, we extracthigh-dimensional LBP features for all frames of thetwo videos and compute the pairwise similaritiesbetween the frames of the two videos. Finally, wekeep the maximum similarity. It is worth mention-ing that by applying the efficient testing methods inSection 4.2, all image-level similarities can be com-puted in around one millisecond, indicating that ourmethod is applicable to real-time video-level verifica-tion on both PC and mobile devices.

Table 5 shows the results of our method alongwith LDA and PLDA under the aforementioned twosettings using our high-dimensional LBP features forconsistency, as well as the results of recent state-of-the-art algorithms. Joint Bayesian achieves 84.40%when training on Youtube, and 87.12% when trainingon WDref, surpassing all other methods. Moreover,we emphasize that although PLDA performs secondbest, this requires both tuning of the subspace di-mension via cross-validation and an extremely largetraining cost for each trial dimension without thetailored analytical simplifications described in Sec-tion 4. Considering the simplicity of the extensionfrom image-level similarity to video-level similarity,these results provide additional compelling evidence

![Page 13: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/13.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 13

0 0.1 0.2 0.3 0.40.6

0.7

0.8

0.9

1

false positive rate

true

pos

itive

rat

e

LDML+MkNN [11]LBP Multishot [14]Multishot combined [14]LBP PLDA [8]Combine PLDA [8]Sub−SML [37]Fisher Vector Faces [36]Joint Bayesian (High−dim LBP)

0 0.1 0.2 0.3 0.40.6

0.7

0.8

0.9

1

false positive rate

true

pos

itive

rat

e

Attribute and simile classifiers [13]Associate−Predict [15]face.com r2011b [39]CMD+SLBP [40]Tom−vs−Pete [38]Joint Bayesian (High−dim LBP)

Fig. 4: Comparison with state-of-the-art methods on LFW. Without outside training data(left) and with outside training data(right).Note that Joint Bayesian automatically determines the subspace dimension and here we artificially truncate singular values to producelower-dimensional models merely for purposes of comparison.

Algorithms AccuracyMBGS+LBP [20] 76.40%

STFRD+PMML [34] 79.48%APEM (fusion) [35] 79.06%

LDA (Train on Youtube) [22] 82.20%PLDA (Train on Youtube) [8] 83.60%

Joint Bayesian (Train on Youtube) 84.40%LDA (Train on WDRef) [22] 85.06%PLDA (Train on WDRef) [8] 86.54%

Joint Bayesian (Train on WDRef) 87.12%

TABLE 5: Performance comparison on YouTube Faces Dataset.Joint Bayesian significantly outperforms existing state-of-the-artalgorithms, as well as LDA and PLDA carefully tuned with high-dimensional LBP features.

for the efficacy of the proposed Joint Bayesian method.

7.5 Comparison with LFW state-of-the-art

This section presents the best performance of JointBayesian on the LFW dataset, along with existingstate-of-the-art methods for comparison, under twosettings: supervised learning without and with out-side training data.Without outside training data. In order to fairlycompare with other approaches published on the LFWwebsite [8], [11], [14], [36], [37], we follow the LFWunrestricted protocol, using only LFW for training.With outside training data. Our purposes here aretwofold: 1) verify the generalization ability from onedataset to another dataset; 2) see what improvementis possible using limited outside training data. Wefollow the standard restricted protocol in LFW us-ing the high-dimensional LBP features and comparewith other top-performing algorithms that use outsidetraining data [13], [15], [38], [39], [40].

We achieve 93.18% under the LFW unrestrictedprotocol (known identity information) without out-side training data. At the time of submission, thisrepresented the top performing algorithm; however,very recently two new hand-crafted features lead

to a bit higher performance(see for example [42]).Although feature development is not our focus here,it is of course likely that our Joint Bayesian pipelinecould equally benefit from such features as well. Notethat without labeled outside training data, DNN-based features are not competitive under this protocol.Using WDRef as outside training data, we achieve95.17%. As shown in Fig. 4, Joint Bayesian achievesstate-of-the-art performance under both settings. Ad-ditionally, we are able to push the accuracy evenfurther to 96.33% (approaching human performance)if transfer learning [21] is applied to alleviate thedistributional differences between the outside trainingdata (WDRef) and the testing data (LFW). At the timeof original submission, this represented the highestpublished result on the challenging LFW data satisfy-ing all the requirements for the unrestricted protocol,and yet this accuracy is nonetheless still achievablewith an extremely fast and scalable algorithm.

Since the time of our original submission, a farmore complex set of face verification features havebeen derived using a deep learning architecture [41].When these features are then substituted into ourJoint Bayesian framework, performance can be fur-ther improved to 99.15%, which to our knowledgerepresents the first method to break the 99% barrierand essentially saturate the LFW benchmark underthe unrestricted protocol. This is further compellingevidence that Joint Bayesian represents a useful gener-ic tool for incorporation in face verification pipelinesirrespective of the specific features that are used.Moreover, it especially speaks to the continued rel-evance of this methodology even in the emerging eraof deep learning.

8 PROOFS AND DERIVATIONS

8.1 Conditional distribution P (h|x,Θ)

We omit the subject-indicator subscript i here forconvenience, and then introduce an auxiliary variable

![Page 14: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/14.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 14

z defined as

z =

[hx

]=

[IQ

]h.

The distribution of h is N(0,Σh), hence any lineartransformation such as z is also Gaussian, in this casegiven by

P (z|Θ) = N(0,Σz)

Σz =

[Σh ΣhQ

T

QΣh QΣhQT

].

Given the joint Gaussian distribution, any conditionaldistribution is likewise a Gaussian and can be easilyderived using standard identites producing

P (h|x,Θt) = N(µ,Σ)

where

µ = ΣhQT (QΣhQ

T )−1x

Σ = Σh − ΣhQTQΣhQ

TQΣh.

From the mean and covariance, we can easily derivethe first- and second- order moments of the condition-al distribution in Equation (11).

8.2 Proof of Lemma 1

If each subject has the same number of samples, thenthe log-likelihood function

−∑i

logP (xi|Sµ, Sε)

can be simplified to

n(m− 1) log |Sε|+ n log |mSµ + Sε|

+∑i

∑j

trace(Sε(xij − xi)(xij − xi)T )

+∑i

trace(m(mSµ + Sε)xixTi ),

(37)

where n is the number of subjects and m is thenumber of samples per subject, and xi is the samplemean of the ith subject. This result follows using theidentity |Σx| = |mSµ + Sε||Sε|m−1. We can then ob-tain an unconstrained closed-form solution by takingderivatives of (37) with respect to Sµ and Sε andequating to zero which gives

Sε =1

n

1

m− 1

∑i

∑j

(xij − xi)(xij − xi)T

Sµ =1

n

∑i

xixTi − 1

mSε.

As an unconstrained optimal solution, it is clearthat Sµ will not necessarily satisfy the positive semi-definite requirement of a covariance matrix. However,

in the limit as m → ∞, the term 1mSε will converge

to zero. The limiting solutions

Sε =1

mn

∑i

∑j

(xij − xi)(xij − xi)T

Sµ =1

n

∑i

xixTi

now satisfy the necessary positive semi-definite con-straint and are also symmetric as required. As theseunconstrained solutions now satisfy the constraintsand are globally optimal, they must then now rep-resent globally optimal constrained solutions.

8.3 Proof of Lemma 2

From Equation (5) and the standard formulae for theinverse of a block partitioned matrix, it is straightfor-ward to show that

F +G =(U − SµU

−1Sµ)−1)−1

(38)

where U = Sµ + Sε. It follows then that

A = U−1 − (U − SµU−1Sµ)

−1.

We may then apply the Woodbury matrix inversionidentity and arrive at

A = −U−1Sµ(U − SµU−1Sµ)

−1SµU−1. (39)

From the Shur Complement Lemma, U −SµU−1Sµ ≽

0. Consequently, the matrix A is a negative semi-definite symmetric matrix and hence can be expressedas A = −PAP

TA for some matrix PA.

For the matrix G, also from (5), it follows that[F +G G

G F +G

] [Sµ + Sε Sµ

Sµ Sµ + Sε

]= I, (40)

which can easily be solved to find that

G = −(2Sµ + Sε)−1SµS

−1ε . (41)

We then define V such that Sµ = V V T , which is al-ways possible since Sµ is a covariance. Again, we usethe Woodbury matrix inversion identity to reexpressG as

G = −S−1ε V (I + 2V TS−1

ε V )−1V TS−1ε (42)

from which it is immediately apparent that G is anegative semi-definite symmetric matrix, and henceG = −PGP

TG for some PG.

Finally, regarding matrix rank, in (39) and (41) thematrices A and G include Sµ in product form. Giventhat rank[XY ] ≤ rank[Y ], it immediately follows that

rank[A] = rank[PA] ≤ rank[Sµ]

rank[G] = rank[PG] ≤ rank[Sµ].

![Page 15: AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE ... · formance [2] of Bayesian face recognition, further progress has been made along this line of research. For example, Wang and](https://reader033.fdocuments.in/reader033/viewer/2022060308/5f0a227e7e708231d42a2e49/html5/thumbnails/15.jpg)

0162-8828 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for moreinformation.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI10.1109/TPAMI.2016.2533383, IEEE Transactions on Pattern Analysis and Machine Intelligence

AN EFFICIENT JOINT FORMULATION FOR BAYESIAN FACE VERIFICATION 15

8.4 Proof of Lemma 4We first consider Equation (18) and let E∗ and M∗denote an optimal solution. With an invertible lineartransform the constraint becomes WX = E + MΦ orequivalently X = W−1E + W−1MΦ. Defining E =W−1E and M = W−1M, (18) becomes

minE,M

n log |Sµ|+ k log |Sε|

s.t. X = E+ MΦ,

Sµ = 1nWMMTWT , Sε =

1kW EETWT .

However, since log |AB| = log |A|+ log |B| for squarematrices A and B, this is equivalent to solving

minE,M

n log∣∣∣MMT

∣∣∣+ k log∣∣∣EET

∣∣∣s.t. X = E+ MΦ,

Sµ = 1nWMMTWT , Sε =

1kW EETWT ,

which is obviously minimized when E = E∗ andM = M∗, directly leading to the stated result. Withsome additional effort, a similar result can be shownwhen we do not adopt the assumption E[hih

Ti ] =

E[hi]E[hi]T ; however, we omit the details here for

brevity.Regarding the second invariance, by plugging the

new optimal covariances into (5), we arrive at

A = (WT )−1AW−1

G = (WT )−1GW−1

By plugging the above equations into the log-likelihood ratio test, we get

r (x1, x2) = xT1 Ax1 + xT

2 Ax2 − 2xT1 Gx2

= xT1 Ax1 + xT

2 Ax2 − 2xT1 Gx2

= r (x1, x2)

9 CONCLUSION