[ACM Press the third ACM conference - New York, New York, USA (2009.10.23-2009.10.25)] Proceedings...


Generating Transparent, Steerable Recommendations from Textual Descriptions of Items

Stephen Green
Sun Microsystems Labs
Burlington, [email protected]

Paul Lamere
The Echo Nest
Somerville, MA

Jeffrey Alexander
Sun Microsystems Labs
Burlington, [email protected]

François Maillet
Dept of Computer Science
Université de Montréal
[email protected]

ABSTRACT

We propose a recommendation technique that works by collecting text descriptions of items and using this textual aura to compute the similarity between items using techniques drawn from information retrieval. We show how this representation can be used to explain the similarities between items using terms from the textual aura and further how it can be used to steer the recommender. We describe a system that demonstrates these techniques and we’ll detail some preliminary experiments aimed at evaluating the quality of the recommendations and the effectiveness of the explanations of item similarity.

Categories and Subject Descriptors

H.3.3 [Information Search And Retrieval]: Information Filtering

General Terms

Algorithms, Measurement, Performance

Keywords

Steerable recommender, Explainable recommender

1. INTRODUCTION

One of the problems faced by current recommender systems is explaining why a particular item was recommended for a particular user. Tintarev and Masthoff [5] provide a good overview of why it is desirable to provide explanations in a recommender system. Among other things, they point out that good explanations can help inspire trust in a recommender, increase user satisfaction, and make it easier for users to find what they want.

If users are unsatisfied with the recommendations generated by a particular system, often their only way to change how recommendations are generated in the future is to provide thumbs-up or thumbs-down ratings to the system. Unfortunately, it is not usually apparent to the user how these gestures will affect future recommendations.

Copyright 2009 Sun Microsystems. RecSys’09, October 23–25, 2009, New York, New York, USA. ACM 978-1-60558-435-5/09/10.

Finally, many current recommender systems simply present a static list of recommended items in response to a user viewing a particular item. Our aim is to move towards exploratory interfaces that use recommendation techniques to help users find new items that they might like and that make the exploration of the space part of the user experience.

In this paper, we’ll describe a system that builds a textual aura for items and then uses that aura to recommend similar items using textual similarity metrics taken from text information retrieval. In addition to providing a useful similarity metric, the textual aura can be used to explain to users why particular items were recommended based on the relationships between the textual auras of similar items. The aura can also be used to steer the recommender, allowing users to explore how changes in the textual aura create different sets of recommended items.

2. RELATED WORK

Tintarev and Masthoff’s [5] survey of explanations in recommender systems provides an excellent description of the aims of explanations and what makes a good explanation. We will adopt their terminology wherever possible. Zanardi and Capra [8] exploit tags and recommender system techniques to provide a social ranking algorithm for tagged items. Their approach uses straight tag frequency, rather than more well-accepted term weighting measures.

Vig et al. [6] use social tags to generate descriptions of the recommendations generated by a collaborative filtering recommender. Although they explicitly forego “keyword-style” approaches to generating recommendations, we believe that it is worthwhile to try techniques from information retrieval that are known to provide good results.

Wetzker et al. [7] use Probabilistic Latent Semantic Analysis (PLSA) to combine social tags and attention data in a hybrid recommender. While PLSA is a standard information retrieval technique, the dimensionality reduction that it generates leads to a representation for items that is difficult to use for explanations.

3. THE TEXTUAL AURAThe key aspect of our approach is to use an item represen-

tation that consists of text about the item. For some itemtypes like books or blog posts, this could include the actualtext of the item, but for most items, the representation will

281

![Page 2: [ACM Press the third ACM conference - New York, New York, USA (2009.10.23-2009.10.25)] Proceedings of the third ACM conference on Recommender systems - RecSys '09 - Generating transparent,](https://reader036.fdocuments.in/reader036/viewer/2022072116/5750a2cb1a28abcf0c9dd6f0/html5/thumbnails/2.jpg)

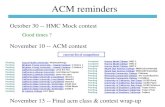

Figure 1: The textual aura for Jimi Hendrix

mainly consist of text crawled from the Web. In our rep-resentation, each item in the system is considered to be adocument. The “text” of the document is simply the con-glomeration of whatever text we can find that pertains tothe item. Because we may collect text about the item from anumber of sources, we divide an item’s document into fields,where each field contains text from a particular source.

The representation we use for an item is a variant of the standard vector space representation used in document retrieval systems. Rather than using a single vector to represent a document, the system keeps track of separate vectors for each of the fields that make up the document. This allows us to calculate document similarity on any single field or any combination of fields.
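The fielded representation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the paper does not say which term weighting or which field-combination rule the system uses, so the TF-IDF weights, the averaging of per-field cosine similarities, and the names `tfidf_vectors`, `cosine`, `similarity`, and `artist_a`/`artist_b` are all hypothetical, and the term lists are toy data.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Turn {item: [term, ...]} into unit-length {item: {term: weight}} vectors."""
    n_docs = len(docs)
    # Document frequency: number of items whose text contains each term.
    df = Counter(term for terms in docs.values() for term in set(terms))
    vectors = {}
    for item, terms in docs.items():
        tf = Counter(terms)
        # Assumed weighting: term frequency times a smoothed inverse document frequency.
        vec = {t: count * math.log(1.0 + n_docs / df[t]) for t, count in tf.items()}
        norm = math.sqrt(sum(w * w for w in vec.values())) or 1.0
        vectors[item] = {t: w / norm for t, w in vec.items()}
    return vectors


def cosine(u, v):
    """Dot product of two unit-length sparse vectors."""
    return sum(w * v.get(t, 0.0) for t, w in u.items())


def similarity(a, b, fields, field_vectors):
    """Assumed combination rule: average the per-field cosine similarities."""
    sims = [cosine(field_vectors[f][a], field_vectors[f][b]) for f in fields]
    return sum(sims) / len(sims)


# Toy data: one set of term lists per field (that is, per text source).
field_docs = {
    "tags": {
        "hendrix": ["guitar", "blues", "psychedelic", "guitar"],
        "artist_a": ["guitar", "blues"],
        "artist_b": ["pop", "dance"],
    },
    "wiki": {
        "hendrix": ["american", "guitarist", "rock"],
        "artist_a": ["english", "guitarist", "rock"],
        "artist_b": ["american", "singer", "pop"],
    },
}
field_vectors = {f: tfidf_vectors(docs) for f, docs in field_docs.items()}

tags_only = similarity("hendrix", "artist_b", ["tags"], field_vectors)
both_fields = similarity("hendrix", "artist_b", ["tags", "wiki"], field_vectors)
print(tags_only, both_fields)
```

Keeping one vector per field means the same two items can look unrelated on one source (no shared tags) and weakly related on another (a shared word in the encyclopedia text), which is the flexibility the paragraph above describes.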

Once one is treating items as documents in this fashion, it becomes easy to do other things like treat individual social tags as documents whose words are the items to which the social tag has been applied. Similarly, a user can be modeled as a document whose words are the social tags that the user has applied to items, allowing us to compute user-user and user-item similarity.
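As a rough illustration of this inversion, the sketch below builds tag documents and a user document from toy tagging data. The function names `tag_documents` and `user_document`, the input shapes, and the counts are assumptions made for the example, not a description of the authors' implementation.

```python
from collections import Counter, defaultdict


def tag_documents(item_tags):
    """Invert {item: {tag: count}} into {tag: Counter({item: count})}."""
    docs = defaultdict(Counter)
    for item, tags in item_tags.items():
        for tag, count in tags.items():
            # The "words" of a tag's document are the items it was applied to.
            docs[tag][item] += count
    return dict(docs)


def user_document(taggings):
    """Build a user's document from the (item, tag) pairs the user has applied."""
    return Counter(tag for _item, tag in taggings)


item_tags = {
    "hendrix": {"guitar": 12, "blues": 5},
    "artist_a": {"guitar": 3, "pop": 7},
}
tag_docs = tag_documents(item_tags)
user_doc = user_document(
    [("hendrix", "guitar"), ("artist_a", "guitar"), ("artist_a", "pop")]
)
print(tag_docs["guitar"])
print(user_doc)
```

Because tag documents and user documents are again bags of weighted terms, the same vector space similarity used for items applies to them unchanged.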

An advantage of a vector space representation is that there are well-known techniques for dealing with a number of phenomena that are typically encountered when dealing with tags. For example, the issues of tag quality and tag redundancy encountered by Vig et al. [6] are usually handled quite well using the standard vector space model for document retrieval. Another advantage is that there are also well-known techniques for building and scaling systems that use such a representation.

Our current work has focused on recommending musical artists. The data sources that we use to represent a musical artist include social tags that have been applied to the artist at Last.fm [1] and the Wikipedia entry for the artist. Figure 1 shows, as a tag cloud, a portion of the textual aura for Jimi Hendrix derived from the tags applied to him at Last.fm. Unlike typical tag clouds, where the size of a tag in the cloud is proportional to the frequency of occurrence of the tag, here the size of a tag is proportional to its term weight in the vector space representation for the artist.

By tying the size of a tag in the cloud to the term weight, we are giving the user an indication of what kind of artists we will find when we look for similar artists. In this case, we will tend to find a guitar virtuoso who plays blues-influenced psychedelic rock, as these are the largest tags and therefore the tags with the most influence over the similarity calculation.

3.1 Generating Recommendations

We can use the textual aura in a number of recommendation scenarios. We can find similar artists based on a seed artist’s aura, or we can find artists that a user might like based on tags that they have applied or based on the combined aura of their favorite artists. We allow users to save tag clouds and use them to generate recommendations over time.

Since the implementations of these recommendation scenarios are based on similar techniques, we’ll illustrate the general approach by explaining the specific case of finding artists that are similar to a seed artist. First, we retrieve the document vector for the seed item. This may be a vector based on a single field of the item’s document or a composite vector that incorporates information for a number of fields.

In any case, for each of the terms that occurs in the vector for the seed item, we retrieve the list of items that have that term in their textual aura. As each list is processed, we accumulate a per-item score that measures the similarity to the seed item. This gives us the set of items that have a non-zero similarity to the seed item. Once this set of similar items has been built, we can select the top n most-similar items. This selection may be done in the presence of item filters that can be used to impose restrictions on the set of items that will ultimately be recommended to a user.

For example, we can derive a popularity metric from the listener counts provided by Last.fm and use this metric to show a user artists that everyone else is listening to or that hardly anyone else is listening to.
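The retrieval procedure described above (walk the postings list of each seed term, accumulate a per-item score, then pick the top n subject to filters) can be sketched as follows. This is a minimal sketch, assuming the term weights are already computed; the names `build_inverted_index` and `find_similar`, the weights, the listener counts, and the popularity threshold are all made up for illustration.

```python
import heapq
from collections import defaultdict


def build_inverted_index(vectors):
    """Map each term to the list of (item, weight) pairs whose aura contains it."""
    index = defaultdict(list)
    for item, vec in vectors.items():
        for term, weight in vec.items():
            index[term].append((item, weight))
    return index


def find_similar(seed, vectors, index, n=10, item_filter=None):
    """Accumulate per-item scores over the seed's terms, then keep the top n."""
    scores = defaultdict(float)
    for term, seed_weight in vectors[seed].items():
        for item, weight in index.get(term, ()):
            if item != seed:
                scores[item] += seed_weight * weight
    # Only items sharing at least one term with the seed ever receive a score.
    candidates = (
        (item, score)
        for item, score in scores.items()
        if item_filter is None or item_filter(item)
    )
    return heapq.nlargest(n, candidates, key=lambda pair: pair[1])


# Toy, hand-written term weights (hypothetical values).
vectors = {
    "hendrix": {"guitar": 0.8, "blues": 0.5, "psychedelic": 0.3},
    "artist_a": {"guitar": 0.7, "blues": 0.7},
    "artist_b": {"blues": 0.9, "guitar": 0.4},
    "artist_c": {"pop": 1.0},
}
index = build_inverted_index(vectors)

# Hypothetical listener counts used as a popularity filter.
listeners = {"artist_a": 2_000_000, "artist_b": 50_000, "artist_c": 900_000}

top = find_similar("hendrix", vectors, index, n=2)
obscure = find_similar(
    "hendrix", vectors, index, n=2,
    item_filter=lambda item: listeners[item] < 100_000,
)
print([name for name, _ in top])
print([name for name, _ in obscure])
```

Applying the filter before the top-n selection, rather than after it, keeps the result list full when many of the highest-scoring items are filtered out.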

3.2 Explanations and Transparency

Given the auras for two items, it is straightforward to determine how each of the terms in the textual aura for a seed item contributes to the similarity between the seed item and a recommended item. For items A and B, and the vectors used to compute their similarity, we can build three new tag clouds that describe both how the items are related and how they differ.

We can build an overlap tag cloud from the terms that occur in the vectors for both items. The tags in this cloud will be sized according to the proportion of the similarity between A and B contributed by the term. This explanation of the similarity between two items is directly related to the mechanism by which the similarity was computed. We can also build two difference clouds that show the terms in A and B that are not in the set of overlapping terms. The difference cloud can be used to indicate to a user how a recommended item differs from the seed item, which may lead them into an attempt to steer the recommendation towards a particular aspect.
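The three clouds can be derived directly from the two vectors. The sketch below assumes the similarity is a dot product of term weights, so that each shared term's contribution is the product of its two weights; the function name `explain` and the example weights are hypothetical.

```python
def explain(vec_a, vec_b):
    """Return (overlap cloud, difference cloud for A, difference cloud for B)."""
    shared = vec_a.keys() & vec_b.keys()
    # Each shared term contributes the product of its two weights to the score.
    contributions = {t: vec_a[t] * vec_b[t] for t in shared}
    total = sum(contributions.values())
    # Size each overlap tag by its share of the total similarity.
    overlap = {t: c / total for t, c in contributions.items()} if total else {}
    only_a = {t: w for t, w in vec_a.items() if t not in shared}
    only_b = {t: w for t, w in vec_b.items() if t not in shared}
    return overlap, only_a, only_b


seed = {"guitar": 0.8, "blues": 0.5, "psychedelic": 0.3}
recommended = {"guitar": 0.7, "blues": 0.7, "british": 0.2}

overlap, only_seed, only_recommended = explain(seed, recommended)
print(overlap)
print(only_seed, only_recommended)
```

Because the overlap sizes are the same per-term products that are summed to produce the score, the explanation cannot drift away from the computation it explains.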

3.3 Steerable Recommendations

In addition to providing a straightforward way of generating explanations of the recommendations made, our approach offers an equally straightforward way of steering the recommendations.

We allow the user to directly manipulate the document vector associated with an item and use their manipulations to interactively generate new sets of recommended items on the fly. If the vector for an item is presented as a tag cloud, then the user can manipulate this representation by dragging tags so that the tags increase or decrease in size. As with any other recommendation, we can provide an explanation like the ones described above that details why a particular item was recommended given a particular cloud.

The user can also make particular tags sticky or negative. A sticky tag is one that must occur in the textual aura of a recommended artist. A sticky tag will still contribute some portion of the similarity score, since it appears in both the seed item and in the recommended item. A negative tag is one that must not occur in the textual aura of a recommended artist. Negative tags are used as a filter to remove artists whose aura contains the tag from the results of the similarity computation.
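A small sketch of steering with sticky and negative tags follows. It assumes a user-edited cloud is just a dictionary of term weights and that the score is a dot product; the function name `steer`, the `exclude` parameter, the artist names, and all weights are invented for the example.

```python
def steer(cloud, vectors, sticky=(), negative=(), exclude=(), n=10):
    """Rank items against a user-edited cloud, honoring sticky and negative tags."""
    sticky, negative, exclude = set(sticky), set(negative), set(exclude)
    results = []
    for item, vec in vectors.items():
        if item in exclude:
            continue
        if not sticky.issubset(vec):      # every sticky tag must be present
            continue
        if not negative.isdisjoint(vec):  # no negative tag may be present
            continue
        # Sticky tags that are in the cloud still contribute to the score.
        score = sum(w * vec.get(t, 0.0) for t, w in cloud.items())
        if score > 0:
            results.append((item, score))
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results[:n]


vectors = {
    "seed_artist": {"pop": 0.9, "dance": 0.4},
    "artist_a": {"pop": 0.7, "dance": 0.5, "indie": 0.3},
    "artist_b": {"pop": 0.8, "dance": 0.3},
    "artist_c": {"indie": 0.9, "noise": 0.4},
}

# Start from the seed's cloud and drag an "indie" tag into it.
cloud = dict(vectors["seed_artist"])
cloud["indie"] = 0.5

plain = steer(cloud, vectors, exclude=["seed_artist"])
with_sticky = steer(cloud, vectors, sticky=["indie"], exclude=["seed_artist"])
with_both = steer(
    cloud, vectors, sticky=["indie"], negative=["noise"], exclude=["seed_artist"]
)
print([name for name, _ in plain])
print([name for name, _ in with_sticky])
print([name for name, _ in with_both])
```

In this sketch, resizing a tag changes the ranking smoothly, while sticky and negative tags act as hard constraints on which items may appear at all.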

The overall effect of the steerability provided to the users is that they can begin to answer questions like “Can I find an artist like Britney Spears but with an indie vibe?” or “Can I find an artist like The Beatles, but who is recording in this decade?”

3.4 The Music Explaura

As part of the AURA project in Sun Labs, we have developed the Music Explaura [2], an interface for finding new musical artists that incorporates all of the techniques described above. Users can start out by searching for a known artist, by searching for an artist with a particular tag in their aura, or by searching for a tag and seeing similar tags.

The aim is to provide as much context as possible for the user. In addition to the tag cloud for the artist (based on Last.fm tags by default) and the top 15 most-similar artists to the seed artist, we display a portion of the artist’s biography from Wikipedia, videos crawled from YouTube, photos crawled from Flickr, albums crawled from Amazon, and events crawled from Upcoming.

4. EVALUATING RECOMMENDATIONS

To get a better understanding of how well the aura-based recommendations perform, we conducted a web-based user survey that allowed us to compare the user reactions to recommendations generated by a number of different recommenders. We compared two of our research algorithms, our aura-based recommender and a more traditional collaborative filtering recommender, to nine commercial recommenders. The aura-based system used a data set [4] consisting of about 7 million tags (with 100,000 unique tags) that had been applied to 21,000 artists. The CF-based system generated item-item recommendations based on the listening habits of 12,000 Last.fm listeners. The nine commercial recommenders evaluated consist of seven CF-based recommenders, one expert-based recommender and one hybrid (combining CF with content-based recommendation). We also included the recommendations of five professional critics from the music review site Pitchfork [3].

To evaluate the recommenders we chose the simple recommendation task of finding artists that are similar to a single seed artist. This was the only recommendation scenario that was supported by all recommenders in the survey. We chose five seed artists: The Beatles, Emerson Lake and Palmer, Deerhoof, Miles Davis and Arcade Fire. For each recommender in the study, we retrieved the top eight most similar artists. We then conducted a Web-based survey to rank the quality of each recommended artist. The survey asked each participant to indicate how well a given recommended artist answers the question “If you like the seed artist you might like X.” Participants could answer “Excellent”, “Good”, “Don’t Know”, “Fair” or “Poor”. Two hundred individuals participated in the survey, contributing a total of over ten thousand recommendation rankings. We used the survey rankings to calculate three scores for each recommender:

Average Rating: The average score for all recommendations based on the point assignment of 5, 1, 0, -1 and -5 respectively for each Excellent, Good, Don’t Know, Fair and Poor rating. This score provides a ranking of the overall quality of the recommendations.

Relative Precision: The average score for all recommendations based on the point assignment of 1, 1, 0, -1 and -25 respectively for each Excellent, Good, Don’t Know, Fair and Poor rating. This score provides a ranking for a recommender’s ability to reject poor recommendations.

Novelty: The fraction of recommendations that are unique to a recommender. For instance, a value of 0.31 indicates that 31 percent of recommendations were unique to the particular recommender.

| System | Average Rating | Relative Precision | Novelty |
|---|---|---|---|
| Sun aura | 4.02 | 0.49 | 0.31 |
| CF-10M | 3.68 | -0.02 | 0.24 |
| CF-2M | 3.50 | -0.16 | 0.28 |
| Sun-CF-12K | 3.48 | -0.38 | 0.26 |
| CF-1M | 3.26 | -0.47 | 0.23 |
| CF-100K | 2.59 | -1.01 | 0.32 |
| Expert | 2.06 | -1.17 | 0.58 |
| Music Critic | 2.00 | -1.26 | 0.49 |
| CF-1M | 1.82 | -1.18 | 0.38 |
| Hyb-1M | 0.89 | -3.31 | 0.57 |
| CF-10K | 0.82 | -4.32 | 0.47 |
| CF-10K | -2.39 | -13.18 | 0.48 |

Table 1: Survey Results. CF-X represents a collaborative filtering-based system with an estimated number of users X.
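The three scores defined above can be sketched as follows. This follows the definitions as stated and nothing more: the exact aggregation used to produce the published numbers is not given in the text, and treating a recommendation as a (seed artist, recommended artist) pair, like the names `mean_score`, `novelty`, `rec_1`, and `rec_2` and the sample ratings, is an assumption for illustration.

```python
AVERAGE_POINTS = {"Excellent": 5, "Good": 1, "Don't Know": 0, "Fair": -1, "Poor": -5}
PRECISION_POINTS = {"Excellent": 1, "Good": 1, "Don't Know": 0, "Fair": -1, "Poor": -25}


def mean_score(ratings, points):
    """Average the points assigned to a list of survey answers."""
    return sum(points[r] for r in ratings) / len(ratings)


def novelty(recommendations, name):
    """Fraction of one recommender's recommendations that no other recommender made."""
    mine = recommendations[name]
    others = set().union(
        *(recs for other, recs in recommendations.items() if other != name)
    )
    return len(mine - others) / len(mine)


# Hypothetical survey answers for one recommender.
ratings = ["Excellent", "Good", "Good", "Fair", "Poor"]
average_rating = mean_score(ratings, AVERAGE_POINTS)
relative_precision = mean_score(ratings, PRECISION_POINTS)

# Hypothetical recommendations, stored as (seed artist, recommended artist) pairs.
recommendations = {
    "rec_1": {
        ("The Beatles", "artist_a"),
        ("The Beatles", "artist_b"),
        ("Miles Davis", "artist_c"),
    },
    "rec_2": {("The Beatles", "artist_a"), ("Miles Davis", "artist_d")},
}
print(average_rating, relative_precision)
print(novelty(recommendations, "rec_1"), novelty(recommendations, "rec_2"))
```

The heavy -25 penalty means a single Poor answer outweighs many Good ones, which is why relative precision separates recommenders by how rarely they make bad suggestions.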

Table 1 shows the results of the survey. Some observations about the results: CF-based systems with larger numbers of users tend to have higher average ratings and relative precision. Somewhat surprisingly, human-based recommenders (Expert and Music Critic) do not rate as well as larger CF-based recommenders, but do tend to give much more novel recommendations. Poorly rated recommenders tend to have a higher novelty score. The aura-based recommender provides good average rating and relative precision results while still providing somewhat novel recommendations.

It is important not to draw too many conclusions from this exploratory evaluation. The recommendation task was a simple one using popular artists, the number of participants was relatively small and the participants were self-selected. Nevertheless, the survey does confirm that the aura-based approach to recommendation is a viable approach yielding results that are competitive with current commercial systems.


4.1 The Music Explaura

In order to gain preliminary insight into the effectiveness of our approach to transparent, steerable recommendations, we conducted a small-scale qualitative usability evaluation using The Music Explaura, contrasting it against other music recommendation sites.

We asked users to evaluate The Explaura’s recommendations with respect to the familiarity and accuracy of the recommendations, their satisfaction with the recommendations, how novel the recommended artists were, the transparency and trustworthiness of the recommender, and the steerability of the recommendations.

Ten participants were recruited from the student population of Bentley University and from musician forums on craigslist. The participants were all web-savvy, regular Internet users.

The Explaura interface presents a number of interaction paradigms very different from familiar interaction conventions, creating a pronounced learning curve for new users. Users’ ratings of different sites’ recommendations appeared to largely correspond to how much they agreed with the recommendation of artists that were familiar to them. Included in this assessment of the quality of the recommendations was often an assessment of rank.

Ten of ten users agreed, though not always immediately (some required prompting to investigate the similarity tag clouds), that they understood why Explaura recommended the items it did, and that the list of recommendations made sense to them.

It was clear that the value of the tag clouds as an explanation is highly vulnerable to any perception of inaccuracy or redundancy in the tag cloud. Also, sparse tag clouds produce more confusion than understanding. Almost none of the participants immediately grasped the meaning of the tag clouds. The general first impression was one of confusion. Furthermore, no one expected to be able to manipulate the cloud.

Despite these problems, the users continually expressed the desire to limit and redefine the scope of an exploration. When the concept was presented, virtually all users expressed surprise, interest, and pleasure at the idea that they could do something with the results.

5. FUTURE WORK AND CONCLUSIONS

The current system uses the traditional bag-of-words model for the tags. While this has provided some worthwhile results, it seems clear that we should be clustering terms like canada and canadian so that their influence can be combined when generating recommendations and explanations. At the very least, combining such terms should lead to less confusion for users.

We’re interested in generating more language-like descriptions of the similarities and differences between items. It seems like it should be possible to use the term weightings along with language resources like WordNet to generate Pandora-like descriptions of the similarity tag clouds, which may make them more approachable for new users.

Our ultimate aim is to provide hybrid recommendations that include the influence of the textual aura as well as that of collaborative filtering approaches. An obvious problem here is how to decide which approach should have more influence for any given user or item.

The textual aura provides a simple representation for items that can produce novel recommendations while providing a clear path to a Web-scale recommender system. The initial evaluation of the quality of the recommendations provided by an aura-based recommender provides strong evidence that the technique has merit, if we can solve some of the user interface problems.

Our initial usability study for the Music Explaura showed that the tag cloud representation for the artist can be confusing at first viewing. While the users expressed a real desire to explore the recommendation space via interaction with the system, the current execution needs further usability testing and interface design refinement to enhance user acceptance of the model.

Acknowledgments

Thanks to Professor Terry Skelton of Bentley University.

6. ADDITIONAL AUTHORS

Susanna Kirk (Bentley University, [email protected]), Jessica Holt (Bentley University, [email protected]), Jackie Bourque (Bentley University, [email protected]), Xiao-Wen Mak (Bentley University, [email protected])

7. REFERENCES

[1] Last.fm. http://last.fm.

[2] The Music Explaura. http://music.tastekeeper.com.

[3] Pitchfork magazine. http://kenai.com/projects/aura.

[4] P. Lamere. Last.fm artist tags 2007 data set. http://tinyurl.com/6ry8ph.

[5] N. Tintarev and J. Masthoff. A survey of explanations in recommender systems. In Data Engineering Workshop, 2007 IEEE 23rd International Conference on, pages 801–810, April 2007.

[6] J. Vig, S. Sen, and J. Riedl. Tagsplanations: explaining recommendations using tags. In IUI ’09: Proceedings of the 13th international conference on Intelligent user interfaces, pages 47–56, New York, NY, USA, 2008. ACM.

[7] R. Wetzker, W. Umbrath, and A. Said. A hybrid approach to item recommendation in folksonomies. In ESAIR ’09: Proceedings of the WSDM ’09 Workshop on Exploiting Semantic Annotations in Information Retrieval, pages 25–29, New York, NY, USA, 2009. ACM.

[8] V. Zanardi and L. Capra. Social ranking: uncovering relevant content using tag-based recommender systems. In RecSys ’08: Proceedings of the 2008 ACM conference on Recommender systems, pages 51–58, New York, NY, USA, 2008. ACM.
