
ABC-Boost: Adaptive Base Class Boost for Multi-class Classification

Ping Li, Department of Statistical Science, Cornell University, Ithaca, NY 14853 USA

Abstract

We propose abc-boost (adaptive base class boost) for multi-class classification and present abc-mart, an implementation of abc-boost, based on the multinomial logit model. The key idea is that, at each boosting iteration, we adaptively and greedily choose a base class. Our experiments on public datasets demonstrate the improvement of abc-mart over the original mart algorithm.

1. Introduction

Classification is a basic task in machine learning. A training data set $\{y_i, \mathbf{x}_i\}_{i=1}^N$ consists of $N$ feature vectors (samples) $\mathbf{x}_i$ and $N$ class labels $y_i$. Here $y_i \in \{0, 1, 2, ..., K-1\}$ and $K$ is the number of classes. The task is to predict the class labels. Among many classification algorithms, boosting has become very popular (Schapire, 1990; Freund, 1995; Freund & Schapire, 1997; Bartlett et al., 1998; Schapire & Singer, 1999; Friedman et al., 2000; Friedman, 2001).

This study focuses on multi-class classification (i.e., $K \geq 3$). The multinomial logit model has been used for solving multi-class classification problems. Using this model, we first learn the class probabilities:

$$p_{i,k} = \Pr(y_i = k \mid \mathbf{x}_i) = \frac{e^{F_{i,k}(\mathbf{x}_i)}}{\sum_{s=0}^{K-1} e^{F_{i,s}(\mathbf{x}_i)}}, \tag{1}$$

and then predict each class label according to

$$\hat{y}_i = \arg\max_{k} \; p_{i,k}. \tag{2}$$
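Equations (1) and (2) can be illustrated with a few lines of Python. This is only a sketch: the function names and the example scores are hypothetical, and subtracting the maximum score before exponentiating is a standard numerical safeguard that is not part of the paper.

```python
import math

def class_probabilities(scores):
    """Equation (1): p_k = exp(F_k) / sum_s exp(F_s) for one sample."""
    shift = max(scores)  # numerical safeguard (assumption); does not change p
    exps = [math.exp(f - shift) for f in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_label(scores):
    """Equation (2): the predicted label is the class with the largest p_k."""
    probs = class_probabilities(scores)
    return max(range(len(probs)), key=lambda k: probs[k])

# Hypothetical scores F_{i,0}, F_{i,1}, F_{i,2} for a single sample with K = 3.
scores = [0.5, 2.0, -1.0]
probs = class_probabilities(scores)
label = predict_label(scores)
```

Here `label` is the index of the largest score, since the exponential is monotone.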

A classification error occurs if $\hat{y}_i \neq y_i$. In (1), $F_{i,k} = F_{i,k}(\mathbf{x}_i)$ is a function to be learned from the data. Boosting algorithms (Friedman et al., 2000; Friedman, 2001) have been developed to fit the multinomial logit model. Several search engine ranking algorithms used mart (multiple additive regression trees) (Friedman, 2001) as the underlying learning procedure (Cossock & Zhang, 2006; Zheng et al., 2007; Li et al., 2008).

Appearing in Proceedings of the 26th International Conference on Machine Learning, Montreal, Canada, 2009. Copyright 2009 by the author(s)/owner(s).

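Note that in (1), the values of $p_{i,k}$ are not affected by adding a constant $C$ to each $F_{i,k}$, because

$$\frac{e^{F_{i,k}+C}}{\sum_{s=0}^{K-1} e^{F_{i,s}+C}} = \frac{e^{C} e^{F_{i,k}}}{e^{C} \sum_{s=0}^{K-1} e^{F_{i,s}}} = \frac{e^{F_{i,k}}}{\sum_{s=0}^{K-1} e^{F_{i,s}}} = p_{i,k}.$$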

Therefore, for identifiability, one should impose a constraint on $F_{i,k}$. One popular choice is to assume $\sum_{k=0}^{K-1} F_{i,k} = \text{const}$, which is equivalent to $\sum_{k=0}^{K-1} F_{i,k} = 0$, i.e., the sum-to-zero constraint.
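The invariance to a constant shift, and the way the sum-to-zero constraint picks one representative, can be checked numerically. The sketch below uses hypothetical helper names (`softmax`, `center`) and arbitrary example values; centering by the mean is one simple way to satisfy the constraint.

```python
import math

def softmax(scores):
    """Class probabilities of the multinomial logit model, equation (1)."""
    shift = max(scores)
    exps = [math.exp(f - shift) for f in scores]
    total = sum(exps)
    return [e / total for e in exps]

def center(scores):
    """Subtract the mean so that the scores satisfy the sum-to-zero constraint."""
    mean = sum(scores) / len(scores)
    return [f - mean for f in scores]

scores = [0.5, 2.0, -1.0]            # hypothetical F_{i,k}
shifted = [f + 3.7 for f in scores]  # add an arbitrary constant C = 3.7
centered = center(scores)

p_original = softmax(scores)
p_shifted = softmax(shifted)
p_centered = softmax(centered)
```

All three probability vectors agree up to floating-point error, while only `centered` sums to zero.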

This study proposes abc-boost (adaptive base class boost), based on the following two key ideas:

1. Popular loss functions for multi-class classification usually need to impose a constraint such that only the values for K − 1 classes are necessary (Friedman et al., 2000; Friedman, 2001; Zhang, 2004; Lee et al., 2004; Tewari & Bartlett, 2007; Zou et al., 2008). Therefore, we can choose a base class and derive algorithms only for K − 1 classes.

2. At each boosting step, we can adaptively choose the base class to achieve the best performance.

We present a concrete implementation named abc-mart, which combines abc-boost with mart. Our extensive experiments will demonstrate the improvements of our new algorithm.

2. Review Mart & Gradient Boosting

Mart is the marriage of regression trees and functional gradient boosting (Friedman, 2001; Mason et al., 2000). Given a training dataset $\{y_i, \mathbf{x}_i\}_{i=1}^N$ and a loss function $L$, Friedman (2001) adopted a “greedy stagewise” approach to build an additive function $F^{(M)}$,

$$F^{(M)}(\mathbf{x}) = \sum_{m=1}^{M} \rho_m h(\mathbf{x}; \mathbf{a}_m), \tag{3}$$

such that, at each stage $m$, $m = 1$ to $M$,

$$\{\rho_m, \mathbf{a}_m\} = \arg\min_{\rho, \mathbf{a}} \sum_{i=1}^{N} L\left(y_i, F^{(m-1)} + \rho h(\mathbf{x}_i; \mathbf{a})\right). \tag{4}$$


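Here $h(\mathbf{x}; \mathbf{a})$ is the “weak” learner. Instead of directly solving the difficult problem (4), Friedman (2001) approximately conducted steepest descent in function space, by solving a least-squares problem (Line 4 in Alg. 1)

$$\mathbf{a}_m = \arg\min_{\mathbf{a}, \rho} \sum_{i=1}^{N} \left[-g_m(\mathbf{x}_i) - \rho h(\mathbf{x}_i; \mathbf{a})\right]^2,$$

where

$$-g_m(\mathbf{x}_i) = -\left[\frac{\partial L(y_i, F(\mathbf{x}_i))}{\partial F(\mathbf{x}_i)}\right]_{F(\mathbf{x}) = F^{(m-1)}(\mathbf{x})}$$

is the steepest descent direction in the $N$-dimensional data space at $F^{(m-1)}(\mathbf{x})$. For the other coefficient $\rho_m$, a line search is performed (Line 5 in Alg. 1):

$$\rho_m = \arg\min_{\rho} \sum_{i=1}^{N} L\left(y_i, F^{(m-1)}(\mathbf{x}_i) + \rho h(\mathbf{x}_i; \mathbf{a}_m)\right).$$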

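Algorithm 1 Gradient boosting (Friedman, 2001).

1: $F_{\mathbf{x}} = \arg\min_{\rho} \sum_{i=1}^{N} L(y_i, \rho)$
2: For $m = 1$ to $M$ Do
3:   $\tilde{y}_i = -\left[\frac{\partial L(y_i, F(\mathbf{x}_i))}{\partial F(\mathbf{x}_i)}\right]_{F(\mathbf{x}) = F^{(m-1)}(\mathbf{x})}$
4:   $\mathbf{a}_m = \arg\min_{\mathbf{a}, \rho} \sum_{i=1}^{N} \left[\tilde{y}_i - \rho h(\mathbf{x}_i; \mathbf{a})\right]^2$
5:   $\rho_m = \arg\min_{\rho} \sum_{i=1}^{N} L\left(y_i, F^{(m-1)}(\mathbf{x}_i) + \rho h(\mathbf{x}_i; \mathbf{a}_m)\right)$
6:   $F_{\mathbf{x}} = F_{\mathbf{x}} + \rho_m h(\mathbf{x}; \mathbf{a}_m)$
7: End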
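The steps of Alg. 1 can be made concrete with a deliberately small sketch. It makes several assumptions that are not in the paper: squared-error loss $(y - F)^2/2$, one-dimensional inputs, two-leaf regression stumps as the weak learner, and a shrinkage factor borrowed from Alg. 2. For this loss and a least-squares stump, the line search of Line 5 gives $\rho_m = 1$, so it does not appear explicitly. All function names are hypothetical.

```python
def fit_stump(xs, targets):
    """Least-squares two-leaf stump on 1-D inputs: (threshold, left_mean, right_mean)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2.0
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]

def stump_predict(stump, x):
    t, lm, rm = stump
    return lm if x <= t else rm

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def gradient_boost(xs, ys, n_rounds=50, shrinkage=0.1):
    f0 = sum(ys) / len(ys)            # Line 1: best constant for squared error
    preds = [f0] * len(xs)
    stumps = []
    for _ in range(n_rounds):         # Line 2
        # Line 3: the negative gradient of (y - F)^2 / 2 is the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)          # Line 4: least-squares fit
        # Lines 5-6: rho = 1 for this loss; shrinkage is an added assumption.
        preds = [p + shrinkage * stump_predict(stump, x) for p, x in zip(preds, xs)]
        stumps.append(stump)
    return f0, stumps, preds

# Hypothetical toy data with a step between x = 3 and x = 4.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [0.1, 0.3, 0.2, 0.4, 1.9, 2.1, 2.0, 2.2]
f0, stumps, preds = gradient_boost(xs, ys)
initial_mse = mse(ys, [f0] * len(ys))
final_mse = mse(ys, preds)
```

On this toy data the training error after boosting is lower than that of the constant initial model.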

Mart adopted the multinomial logit model (1) and the corresponding negative multinomial log-likelihood loss

$$L = \sum_{i=1}^{N} L_i, \qquad L_i = -\sum_{k=0}^{K-1} r_{i,k} \log p_{i,k}, \tag{5}$$

where $r_{i,k} = 1$ if $y_i = k$ and $r_{i,k} = 0$ otherwise.

As provided in (Friedman et al., 2000):

$$\frac{\partial L_i}{\partial F_{i,k}} = -(r_{i,k} - p_{i,k}), \tag{6}$$

$$\frac{\partial^2 L_i}{\partial F_{i,k}^2} = p_{i,k}(1 - p_{i,k}). \tag{7}$$
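Equations (6) and (7) treat the $K$ scores as free variables (no constraint). A quick finite-difference check, with hypothetical function names and arbitrary example scores, can make this concrete:

```python
import math

def softmax(scores):
    shift = max(scores)
    exps = [math.exp(f - shift) for f in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sample_loss(scores, label):
    """L_i in equation (5): minus the log-probability of the true class."""
    return -math.log(softmax(scores)[label])

def analytic_derivatives(scores, label):
    """First and second derivatives from equations (6) and (7)."""
    p = softmax(scores)
    grad = [-((1.0 if k == label else 0.0) - p[k]) for k in range(len(p))]
    hess = [p[k] * (1.0 - p[k]) for k in range(len(p))]
    return grad, hess

def numeric_gradient(scores, label, k, eps=1e-6):
    """Central finite difference of L_i with respect to F_{i,k}."""
    up, down = list(scores), list(scores)
    up[k] += eps
    down[k] -= eps
    return (sample_loss(up, label) - sample_loss(down, label)) / (2.0 * eps)

scores = [0.2, -0.4, 1.3]   # hypothetical F_{i,0..2}
label = 2                   # hypothetical true class y_i
grad, hess = analytic_derivatives(scores, label)
numeric = [numeric_gradient(scores, label, k) for k in range(len(scores))]
```

The entries of `grad` and `numeric` agree to several decimal places.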

Alg. 2 describes mart for multi-class classification. At each stage, the algorithm solves the mean square problem (Line 4 in Alg. 1) by regression trees, and implements Line 5 in Alg. 1 by a one-step Newton update within each terminal node of the trees. Mart builds $K$ regression trees at each boosting step. It is clear that the constraint $\sum_{k=0}^{K-1} F_{i,k} = 0$ need not hold.

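Algorithm 2 Mart (Friedman, 2001, Alg. 6)

0: $r_{i,k} = 1$ if $y_i = k$, and $r_{i,k} = 0$ otherwise.
1: $F_{i,k} = 0$, $k = 0$ to $K-1$, $i = 1$ to $N$
2: For $m = 1$ to $M$ Do
3:   For $k = 0$ to $K-1$ Do
4:     $p_{i,k} = \exp(F_{i,k}) / \sum_{s=0}^{K-1} \exp(F_{i,s})$
5:     $\{R_{j,k,m}\}_{j=1}^{J}$ = $J$-terminal node regression tree from $\{r_{i,k} - p_{i,k}, \mathbf{x}_i\}_{i=1}^{N}$
6:     $\beta_{j,k,m} = \frac{K-1}{K} \frac{\sum_{\mathbf{x}_i \in R_{j,k,m}} (r_{i,k} - p_{i,k})}{\sum_{\mathbf{x}_i \in R_{j,k,m}} (1 - p_{i,k}) p_{i,k}}$
7:     $F_{i,k} = F_{i,k} + \nu \sum_{j=1}^{J} \beta_{j,k,m} 1_{\mathbf{x}_i \in R_{j,k,m}}$
8:   End
9: End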
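Line 6 of Alg. 2 is the one-step Newton update inside a terminal node. A minimal sketch of that single computation follows; the function name, the zero-denominator guard, and the example values are assumptions made for illustration.

```python
def mart_leaf_value(residuals, probs, num_classes):
    """Line 6 of Alg. 2 for one terminal node.

    residuals: r_{i,k} - p_{i,k} for the samples that fall in the node
    probs:     p_{i,k} for the same samples
    """
    numerator = sum(residuals)
    denominator = sum(p * (1.0 - p) for p in probs)
    if denominator <= 0.0:
        # Guard (assumption): no curvature information, so make no update.
        return 0.0
    return (num_classes - 1) / num_classes * numerator / denominator

# Hypothetical node with two samples and K = 3:
# sample 1 belongs to class k (r = 1) with p = 0.5, sample 2 does not (r = 0) with p = 0.2.
probs = [0.5, 0.2]
residuals = [1.0 - 0.5, 0.0 - 0.2]
beta = mart_leaf_value(residuals, probs, num_classes=3)
```

In Line 7 this value would be multiplied by the shrinkage ν and added to $F_{i,k}$ for every sample in the node.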

Alg. 2 has three main parameters. The number of terminal nodes, J, determines the capacity of the weak learner. Friedman (2001) suggested J = 6. Friedman et al. (2000) and Zou et al. (2008) commented that J > 10 is very unlikely to be needed. The shrinkage, ν, should be large enough to make sufficient progress at each step and small enough to avoid over-fitting. Friedman (2001) suggested ν ≤ 0.1. The number of iterations, M, is largely determined by the affordable computing time.

3. Abc-boost and Abc-mart

Abc-mart implements abc-boost. Corresponding to the two key ideas of abc-boost in Sec. 1, we need to: (A) re-derive the derivatives of (5) under the sum-to-zero constraint; (B) design a strategy to adaptively select the base class at each boosting iteration.

3.1. Derivatives of the Multinomial Logit Model with a Base Class

Without loss of generality, we assume class 0 is the base. Lemma 1 provides the derivatives of the class probabilities $p_{i,k}$ under the logit model (1).

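Lemma 1

$$\frac{\partial p_{i,k}}{\partial F_{i,k}} = p_{i,k}(1 + p_{i,0} - p_{i,k}), \quad k \neq 0,$$

$$\frac{\partial p_{i,k}}{\partial F_{i,s}} = p_{i,k}(p_{i,0} - p_{i,s}), \quad k \neq s \neq 0,$$

$$\frac{\partial p_{i,0}}{\partial F_{i,k}} = p_{i,0}(-1 + p_{i,0} - p_{i,k}), \quad k \neq 0.$$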

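Proof: Note that $F_{i,0} = -\sum_{k=1}^{K-1} F_{i,k}$. Hence

$$p_{i,k} = \frac{e^{F_{i,k}}}{\sum_{s=0}^{K-1} e^{F_{i,s}}} = \frac{e^{F_{i,k}}}{\sum_{s=1}^{K-1} e^{F_{i,s}} + e^{\sum_{s=1}^{K-1} -F_{i,s}}},$$

$$\frac{\partial p_{i,k}}{\partial F_{i,k}} = \frac{e^{F_{i,k}}}{\sum_{s=0}^{K-1} e^{F_{i,s}}} - \frac{e^{F_{i,k}}\left(e^{F_{i,k}} - e^{F_{i,0}}\right)}{\left(\sum_{s=0}^{K-1} e^{F_{i,s}}\right)^2} = p_{i,k}(1 + p_{i,0} - p_{i,k}).$$

The other derivatives can be obtained similarly. $\square$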

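Lemma 2 provides the derivatives of the loss (5).

Lemma 2 For $k \neq 0$,

$$\frac{\partial L_i}{\partial F_{i,k}} = (r_{i,0} - p_{i,0}) - (r_{i,k} - p_{i,k}),$$

$$\frac{\partial^2 L_i}{\partial F_{i,k}^2} = p_{i,0}(1 - p_{i,0}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,0} p_{i,k}.$$

Proof:

$$L_i = -\sum_{s=1, s \neq k}^{K-1} r_{i,s} \log p_{i,s} - r_{i,k} \log p_{i,k} - r_{i,0} \log p_{i,0}.$$

Its first derivative is

$$\begin{aligned}
\frac{\partial L_i}{\partial F_{i,k}} &= -\sum_{s=1, s \neq k}^{K-1} \frac{r_{i,s}}{p_{i,s}} \frac{\partial p_{i,s}}{\partial F_{i,k}} - \frac{r_{i,k}}{p_{i,k}} \frac{\partial p_{i,k}}{\partial F_{i,k}} - \frac{r_{i,0}}{p_{i,0}} \frac{\partial p_{i,0}}{\partial F_{i,k}} \\
&= \sum_{s=1, s \neq k}^{K-1} -r_{i,s}(p_{i,0} - p_{i,k}) - r_{i,k}(1 + p_{i,0} - p_{i,k}) - r_{i,0}(-1 + p_{i,0} - p_{i,k}) \\
&= -\sum_{s=0}^{K-1} r_{i,s}(p_{i,0} - p_{i,k}) + r_{i,0} - r_{i,k} \\
&= (r_{i,0} - p_{i,0}) - (r_{i,k} - p_{i,k}).
\end{aligned}$$

And the second derivative is

$$\begin{aligned}
\frac{\partial^2 L_i}{\partial F_{i,k}^2} &= -\frac{\partial p_{i,0}}{\partial F_{i,k}} + \frac{\partial p_{i,k}}{\partial F_{i,k}} \\
&= -p_{i,0}(-1 + p_{i,0} - p_{i,k}) + p_{i,k}(1 + p_{i,0} - p_{i,k}) \\
&= p_{i,0}(1 - p_{i,0}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,0} p_{i,k}. \qquad \square
\end{aligned}$$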
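Lemma 2 can be sanity-checked numerically by treating $F_{i,1}, ..., F_{i,K-1}$ as free variables and setting $F_{i,0}$ from the sum-to-zero constraint. The sketch below assumes class 0 is the base, as in the lemma; the function names, step size, and example values are illustrative choices only.

```python
import math

def probs_with_base(free_scores):
    """free_scores = [F_1, ..., F_{K-1}]; class 0 is the base, F_0 = -sum(free_scores)."""
    scores = [-sum(free_scores)] + list(free_scores)
    shift = max(scores)
    exps = [math.exp(f - shift) for f in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sample_loss(free_scores, label):
    return -math.log(probs_with_base(free_scores)[label])

def lemma2_derivatives(free_scores, label, k):
    """Closed-form first and second derivatives of L_i with respect to F_k, k != 0."""
    p = probs_with_base(free_scores)
    r = [1.0 if s == label else 0.0 for s in range(len(p))]
    first = (r[0] - p[0]) - (r[k] - p[k])
    second = p[0] * (1.0 - p[0]) + p[k] * (1.0 - p[k]) + 2.0 * p[0] * p[k]
    return first, second

def finite_differences(free_scores, label, k, eps=1e-4):
    """Central differences in F_k; F_0 moves implicitly through the constraint."""
    def loss_at(delta):
        moved = list(free_scores)
        moved[k - 1] += delta
        return sample_loss(moved, label)
    first = (loss_at(eps) - loss_at(-eps)) / (2.0 * eps)
    second = (loss_at(eps) - 2.0 * loss_at(0.0) + loss_at(-eps)) / eps ** 2
    return first, second

free_scores = [0.3, -0.7, 1.1]   # hypothetical F_1, F_2, F_3, so K = 4
label = 2                        # hypothetical true class
results = []
for k in (1, 2, 3):
    results.append((lemma2_derivatives(free_scores, label, k),
                    finite_differences(free_scores, label, k)))
```

Each pair in `results` holds the closed-form and the finite-difference values, which agree closely.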

3.2. The Exhaustive Strategy for the Base

We adopt a greedy strategy. At each training iteration, we try each class as the base and choose the one that achieves the smallest training loss (5) as the final base class for the current iteration. Alg. 3 describes abc-mart using this strategy.

3.3. More Insights in Mart and Abc-mart

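First of all, one can verify that abc-mart recovers mart when $K = 2$. For example, consider $K = 2$, $r_{i,0} = 1$, $r_{i,1} = 0$; then $\frac{\partial L_i}{\partial F_{i,1}} = 2 p_{i,1}$ and $\frac{\partial^2 L_i}{\partial F_{i,1}^2} = 4 p_{i,0} p_{i,1}$. Thus, the factor $\frac{K-1}{K} = \frac{1}{2}$ that appears in Alg. 2 is recovered.

When $K \geq 3$, it is interesting that mart used the averaged first derivatives. The following equality

$$\sum_{b \neq k} \left\{-(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k})\right\} = K(r_{i,k} - p_{i,k})$$

holds because

$$\begin{aligned}
\sum_{b \neq k} \left\{-(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k})\right\}
&= -\sum_{b \neq k} r_{i,b} + \sum_{b \neq k} p_{i,b} + (K-1)(r_{i,k} - p_{i,k}) \\
&= -1 + r_{i,k} + 1 - p_{i,k} + (K-1)(r_{i,k} - p_{i,k}) \\
&= K(r_{i,k} - p_{i,k}).
\end{aligned}$$

Algorithm 3 Abc-mart using the exhaustive search strategy. The vector B stores the base class numbers.

0: $r_{i,k} = 1$ if $y_i = k$, $r_{i,k} = 0$ otherwise.
1: $F_{i,k} = 0$, $p_{i,k} = \frac{1}{K}$, $k = 0$ to $K-1$, $i = 1$ to $N$
2: For $m = 1$ to $M$ Do
3:   For $b = 0$ to $K-1$ Do
4:     For $k = 0$ to $K-1$, $k \neq b$, Do
5:       $\{R_{j,k,m}\}_{j=1}^{J}$ = $J$-terminal node regression tree from $\{-(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k}), \mathbf{x}_i\}_{i=1}^{N}$
6:       $\beta_{j,k,m} = \frac{\sum_{\mathbf{x}_i \in R_{j,k,m}} -(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k})}{\sum_{\mathbf{x}_i \in R_{j,k,m}} p_{i,b}(1 - p_{i,b}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,b} p_{i,k}}$
7:       $G_{i,k,b} = F_{i,k} + \nu \sum_{j=1}^{J} \beta_{j,k,m} 1_{\mathbf{x}_i \in R_{j,k,m}}$
8:     End
9:     $G_{i,b,b} = -\sum_{k \neq b} G_{i,k,b}$
10:    $q_{i,k} = \exp(G_{i,k,b}) / \sum_{s=0}^{K-1} \exp(G_{i,s,b})$
11:    $L^{(b)} = -\sum_{i=1}^{N} \sum_{k=0}^{K-1} r_{i,k} \log(q_{i,k})$
12:  End
13:  $B(m) = \arg\min_{b} L^{(b)}$
14:  $F_{i,k} = G_{i,k,B(m)}$
15:  $p_{i,k} = \exp(F_{i,k}) / \sum_{s=0}^{K-1} \exp(F_{i,s})$
16: End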
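To show how the pieces of Alg. 3 fit together, here is a compact sketch of the exhaustive base-class search. It is not the author's implementation: it assumes one-dimensional inputs and uses two-leaf stumps in place of $J$-terminal node regression trees, and every function name and the toy dataset are hypothetical.

```python
import math

def softmax(row):
    shift = max(row)
    exps = [math.exp(f - shift) for f in row]
    total = sum(exps)
    return [e / total for e in exps]

def fit_stump(xs, targets):
    """Two-leaf least-squares split on 1-D inputs; returns the two leaves as index lists."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2.0
        left = [i for i, x in enumerate(xs) if x <= t]
        right = [i for i, x in enumerate(xs) if x > t]
        sse = 0.0
        for leaf in (left, right):
            mean = sum(targets[i] for i in leaf) / len(leaf)
            sse += sum((targets[i] - mean) ** 2 for i in leaf)
        if best is None or sse < best[0]:
            best = (sse, left, right)
    return best[1], best[2]

def abc_mart_step(xs, ys, F, K, nu):
    """One boosting iteration (Lines 3-14 of Alg. 3): returns (loss, base class, new F)."""
    n = len(xs)
    P = [softmax(row) for row in F]
    best = None
    for b in range(K):                       # try every class as the base
        G = [list(row) for row in F]
        for k in range(K):
            if k == b:
                continue
            g = [-((ys[i] == b) - P[i][b]) + ((ys[i] == k) - P[i][k]) for i in range(n)]
            h = [P[i][b] * (1 - P[i][b]) + P[i][k] * (1 - P[i][k]) + 2 * P[i][b] * P[i][k]
                 for i in range(n)]
            for leaf in fit_stump(xs, g):    # Lines 5-7, with a stump as the tree
                den = sum(h[i] for i in leaf)
                beta = sum(g[i] for i in leaf) / den if den > 0 else 0.0
                for i in leaf:
                    G[i][k] += nu * beta
        for i in range(n):                   # Line 9: base class from the constraint
            G[i][b] = -sum(G[i][k] for k in range(K) if k != b)
        loss = -sum(math.log(softmax(G[i])[ys[i]]) for i in range(n))  # Lines 10-11
        if best is None or loss < best[0]:
            best = (loss, b, G)              # Line 13: keep the base with the smallest loss
    return best

# Hypothetical toy problem: three classes laid out along one feature.
xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 1, 1, 2, 2, 2]
K = 3
F = [[0.0] * K for _ in xs]
initial_loss = len(xs) * math.log(K)         # loss (5) when every p_{i,k} = 1/K
bases = []
for m in range(20):
    loss, b, F = abc_mart_step(xs, ys, F, K, nu=0.1)
    bases.append(b)
```

The list `bases` plays the role of the vector B in Alg. 3. Because the base-class score is always set from the other classes, each row of `F` sums to zero after every iteration.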

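We can also show that, for the second derivatives,

$$\sum_{b \neq k} \left\{(1 - p_{i,b}) p_{i,b} + (1 - p_{i,k}) p_{i,k} + 2 p_{i,b} p_{i,k}\right\} \geq (K+2)(1 - p_{i,k}) p_{i,k},$$

with equality holding when $K = 2$, because

$$\begin{aligned}
\sum_{b \neq k} \left\{(1 - p_{i,b}) p_{i,b} + (1 - p_{i,k}) p_{i,k} + 2 p_{i,b} p_{i,k}\right\}
&= (K+1)(1 - p_{i,k}) p_{i,k} + \sum_{b \neq k} p_{i,b} - \sum_{b \neq k} p_{i,b}^2 \\
&\geq (K+1)(1 - p_{i,k}) p_{i,k} + \sum_{b \neq k} p_{i,b} - \Big(\sum_{b \neq k} p_{i,b}\Big)^2 \\
&= (K+1)(1 - p_{i,k}) p_{i,k} + (1 - p_{i,k}) p_{i,k} \\
&= (K+2)(1 - p_{i,k}) p_{i,k}.
\end{aligned}$$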
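Both the equality for the summed first derivatives and the inequality for the summed second derivatives are easy to confirm on a concrete probability vector. The values of `p` and `r` below are arbitrary illustrative choices, and the function names are hypothetical.

```python
def summed_first_derivative(p, r, k):
    """Sum over all bases b != k of -(r_b - p_b) + (r_k - p_k)."""
    return sum(-(r[b] - p[b]) + (r[k] - p[k]) for b in range(len(p)) if b != k)

def summed_second_derivative(p, k):
    """Sum over all bases b != k of p_b(1 - p_b) + p_k(1 - p_k) + 2 p_b p_k."""
    return sum(p[b] * (1 - p[b]) + p[k] * (1 - p[k]) + 2 * p[b] * p[k]
               for b in range(len(p)) if b != k)

p = [0.1, 0.2, 0.3, 0.4]   # hypothetical class probabilities, K = 4
r = [0, 0, 1, 0]           # the true class is 2
K = len(p)

checks = []
for k in range(K):
    first_ok = abs(summed_first_derivative(p, r, k) - K * (r[k] - p[k])) < 1e-12
    second_ok = summed_second_derivative(p, k) >= (K + 2) * p[k] * (1 - p[k]) - 1e-12
    checks.append((first_ok, second_ok))
```

Every entry of `checks` is `(True, True)` for this example.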

The factor $\frac{K-1}{K}$ in Alg. 2 may be reasonably replaced by $\frac{K}{K+2}$ (both equal $\frac{1}{2}$ when $K = 2$), or smaller. In a sense, to make the comparisons more fair, we should have replaced the shrinkage factor $\nu$ in Alg. 3 by $\nu'$,

$$\nu' \geq \nu \, \frac{K-1}{K} \, \frac{K+2}{K} = \nu \, \frac{K^2 + K - 2}{K^2} \geq \nu.$$

Thus, one should keep in mind that the shrinkage used in mart is effectively larger than the shrinkage used in abc-mart.
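As a worked example of the bound on $\nu'$, the ratio $\frac{(K-1)(K+2)}{K^2}$ can be evaluated for the numbers of classes that appear in the experiments (K = 7, 10, 26). The function name is hypothetical; the arithmetic follows directly from the inequality above.

```python
def shrinkage_ratio(num_classes):
    """Lower bound on nu' / nu: (K - 1)(K + 2) / K^2."""
    k = num_classes
    return (k - 1) * (k + 2) / (k * k)

# K = 2 is the binary case; K = 7, 10, 26 match the datasets in Table 1.
ratios = {k: shrinkage_ratio(k) for k in (2, 7, 10, 26)}
```

The ratio equals 1 for K = 2 and stays modestly above 1 for the multi-class datasets, for example 108/100 for K = 10.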

4. Evaluations

Our experiments were conducted on several public datasets (Table 1), including one large dataset and several small or very small datasets. Even smaller datasets will be too sensitive to the implementation (or tuning) of weak learners.

Table 1. Whenever possible, we used the standard (default) training and test sets. For Covertype, we randomly split the original dataset into halves. For Letter, the default test set consisted of the last 4000 samples. For Letter2k (Letter4k), we took the last 2000 (4000) samples of Letter for training and the remaining 18000 (16000) for test.

dataset      K    # training   # test    # features
Covertype    7    290506       290506    54
Letter       26   16000        4000      16
Letter2k     26   2000         18000     16
Letter4k     26   4000         16000     16
Pendigits    10   7494         3498      16
Zipcode      10   7291         2007      256
Optdigits    10   3823         1797      64
Isolet       26   6218         1559      617

Ideally, we hope that abc-mart will improve mart (or be as good as mart), for every reasonable combination of tree size J and shrinkage ν.

Except for Covertype and Isolet, we experimented with every combination of ν ∈ {0.04, 0.06, 0.08, 0.1} and J ∈ {4, 6, 8, 10, 12, 14, 16, 18, 20}. Except for Covertype, we let the number of boosting steps M = 10000. However, the experiments usually terminated earlier because the machine accuracy was reached.

For the Covertype dataset, since it is fairly large, we only experimented with J = 6, 10, 20 and ν = 0.1. We limited M = 5000, which is probably already too large a model for real applications, especially applications that are sensitive to the test time.

4.1. Summary of Experiment Results

We define $R_{err}$, the “relative improvement of test mis-classification errors”, as

$$R_{err} = \frac{\text{error of mart} - \text{error of abc-mart}}{\text{error of mart}}. \tag{8}$$
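As a worked instance of (8), the snippet below computes the relative improvement as a percentage from two error counts. The function name is hypothetical; the counts are the Covertype and Letter entries reported in Table 2.

```python
def relative_improvement(mart_errors, abc_mart_errors):
    """R_err from equation (8), expressed as a percentage."""
    return 100.0 * (mart_errors - abc_mart_errors) / mart_errors

covertype = relative_improvement(11439, 10375)
letter = relative_improvement(129, 99)
```

Rounded to one decimal place, these give the 9.3 and 23.3 listed in Table 2.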

Since we experimented with a series of parameters, J, ν, and M, we report, in Table 2, the overall “best” (i.e., smallest) mis-classification errors. Later, we will also report the more detailed mis-classification errors for every combination of J and ν, in Sec. 4.2 to 4.9. We believe this is a fair (side-by-side) comparison.

Table 2. Summary of test mis-classification errors.

Dataset      mart     abc-mart   Rerr (%)   P-value
Covertype    11439    10375      9.3        0
Letter       129      99         23.3       0.02
Letter2k     2439     2180       10.6       0
Letter4k     1352     1126       16.7       0
Pendigits    124      100        19.4       0.05
Zipcode      111      100        9.9        0.22
Optdigits    55       43         21.8       0.11
Isolet       80       64         20.0       0.09

In Table 2, we report the numbers of mis-classification errors mainly for the convenience of future comparisons with this work. The reported P-values are based on the error rate, for testing whether abc-mart has statistically lower error rates than mart. We should mention that testing the statistical significance of the difference of two small probabilities (error rates) requires particularly strong evidence.
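The paper does not state which test produced the P-values. Purely as an illustration, the sketch below assumes a one-sided two-proportion z-test with a pooled variance estimate; this assumption gives values close to the Letter and Zipcode rows of Table 2, but that agreement should not be read as confirmation of the author's procedure. The function name is hypothetical.

```python
import math

def one_sided_p_value(errors_a, errors_b, n_test):
    """P-value for 'classifier b has a lower error rate than classifier a'.

    Assumption: pooled two-proportion z-test, both classifiers evaluated
    on test sets of the same size n_test.
    """
    rate_a = errors_a / n_test
    rate_b = errors_b / n_test
    pooled = (errors_a + errors_b) / (2.0 * n_test)
    std_err = math.sqrt(pooled * (1.0 - pooled) * 2.0 / n_test)
    z = (rate_a - rate_b) / std_err
    # Upper-tail probability of a standard normal variable.
    return 0.5 * math.erfc(z / math.sqrt(2.0))

p_letter = one_sided_p_value(129, 99, 4000)     # Letter: mart vs abc-mart
p_zipcode = one_sided_p_value(111, 100, 2007)   # Zipcode: mart vs abc-mart
```

The example also shows why small error counts need a large gap to reach significance: the Zipcode difference of 11 errors out of 2007 test samples yields a much larger P-value than the Letter difference of 30 out of 4000.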

4.2. Experiments on the Covertype Dataset

Table 3 summarizes the smallest test mis-classification errors along with the relative improvements ($R_{err}$). For each J and ν, the smallest test errors, separately for abc-mart and mart, are the lowest points in the curves in Figure 2, which are almost always the last points on the curves, for this dataset.

Table 3. Covertype. We report the test mis-classification errors of mart and abc-mart, together with the results from Friedman’s MART program (in [ ]). The relative improvements ($R_{err}$, %) of abc-mart are included in ( ).

ν      M      J     mart             abc-mart
0.1    1000   6     40072 [39775]    34758 (13.3)
0.1    1000   10    29471 [29196]    23577 (20.0)
0.1    1000   20    19451 [19438]    15190 (21.9)
0.1    2000   6     31541 [31526]    26126 (17.2)
0.1    2000   10    21739 [21698]    17479 (19.6)
0.1    2000   20    14554 [14665]    11995 (17.6)
0.1    3000   6     26972 [26742]    22111 (18.0)
0.1    3000   10    18430 [18444]    14984 (18.7)
0.1    3000   20    12888 [12893]    10996 (14.7)
0.1    5000   6     22511 [22335]    18445 (18.1)
0.1    5000   10    15449 [15429]    13018 (15.7)
0.1    5000   20    11439 [11524]    10375 (9.3)

The results on Covertype are reported differently from other datasets. Covertype is fairly large. Building a very large model (e.g., M = 5000 boosting steps) would be expensive. Testing a very large model at run-time can be costly or infeasible for certain applications. Therefore, it is often important to examine the performance of the algorithm at much earlier boosting iterations. Table 3 shows that abc-mart may improve mart as much as $R_{err} \approx 20\%$, as opposed to the reported $R_{err} = 9.3\%$ in Table 2.

Figure 1 indicates that abc-mart reduces the training loss (5) considerably and consistently faster than mart. Figure 2 demonstrates that abc-mart exhibits considerably and consistently smaller test mis-classification errors than mart.

Figure 1. Covertype. The training loss, i.e., (5). [Plots omitted. Panels: J = 10, ν = 0.1 and J = 20, ν = 0.1; x-axis: iterations; y-axis: training loss (log scale); legend label: abc.]

Figure 2. Covertype. The test mis-classification errors. [Plots omitted. Panels: J = 10, ν = 0.1 and J = 20, ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

4.3. Experiments on the Letter Dataset

We trained until the loss (5) reached machine accuracy, up to M = 10000, to exhaust the capacity of the learner so that we could provide a reliable comparison.

Table 4 summarizes the smallest test mis-classification errors along with the relative improvements. For abc-mart, the smallest error usually occurred very close to the last iteration, as reflected in Figure 3, which again demonstrates that abc-mart exhibits considerably and consistently smaller test errors than mart.

One observation is that test errors are fairly stable across J and ν, unless J and ν are small (machine accuracy was not reached in these cases).

4.4. Experiments on the Letter2k Dataset

Table 5 and Figure 4 present the test errors, illustrating the considerable and consistent improvement of abc-mart over mart on this dataset.

Table 4. Letter. We report the test mis-classification errors of mart and abc-mart, together with Friedman’s MART program results (in [ ]). The relative improvements ($R_{err}$, %) of abc-mart are included in ( ).

mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     174 [178]     177 [176]     177 [177]     172 [177]
J = 6     163 [153]     157 [160]     159 [156]     159 [162]
J = 8     155 [151]     148 [152]     155 [148]     144 [151]
J = 10    145 [141]     145 [148]     136 [144]     142 [136]
J = 12    143 [142]     147 [143]     139 [145]     141 [145]
J = 14    141 [151]     144 [150]     145 [144]     152 [142]
J = 16    143 [148]     145 [146]     139 [145]     141 [137]
J = 18    132 [132]     133 [137]     129 [135]     138 [134]
J = 20    129 [143]     140 [135]     134 [139]     136 [143]

abc-mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     152 (12.6)    147 (16.9)    142 (19.8)    137 (20.3)
J = 6     127 (22.1)    126 (19.7)    118 (25.8)    119 (25.2)
J = 8     122 (21.3)    112 (24.3)    108 (30.3)    103 (28.5)
J = 10    126 (13.1)    115 (20.7)    106 (22.1)    100 (29.6)
J = 12    117 (18.2)    114 (22.4)    107 (23.0)    104 (26.2)
J = 14    112 (20.6)    113 (21.5)    106 (26.9)    108 (28.9)
J = 16    111 (22.4)    112 (22.8)    106 (23.7)    99 (29.8)
J = 18    113 (14.4)    110 (17.3)    108 (16.3)    104 (24.6)
J = 20    100 (22.5)    104 (25.7)    100 (25.4)    102 (25.0)

Figure 3. Letter. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

Table 5. Letter2k. The test mis-classification errors.

mart
          ν = 0.04       ν = 0.06       ν = 0.08       ν = 0.1
J = 4     2694 [2750]    2698 [2728]    2684 [2706]    2689 [2733]
J = 6     2683 [2720]    2664 [2688]    2640 [2716]    2629 [2688]
J = 8     2569 [2577]    2603 [2579]    2563 [2603]    2571 [2559]
J = 10    2534 [2545]    2516 [2546]    2504 [2539]    2491 [2514]
J = 12    2503 [2474]    2516 [2465]    2473 [2492]    2492 [2455]
J = 14    2488 [2432]    2467 [2482]    2460 [2451]    2460 [2454]
J = 16    2503 [2499]    2501 [2494]    2496 [2437]    2500 [2424]
J = 18    2494 [2464]    2497 [2482]    2472 [2489]    2439 [2476]
J = 20    2499 [2507]    2512 [2523]    2504 [2460]    2482 [2505]

abc-mart
          ν = 0.04       ν = 0.06       ν = 0.08       ν = 0.1
J = 4     2476 (8.1)     2458 (8.9)     2406 (10.4)    2407 (10.5)
J = 6     2355 (12.2)    2319 (12.9)    2309 (12.5)    2314 (12.0)
J = 8     2277 (11.4)    2281 (12.4)    2253 (12.1)    2241 (12.8)
J = 10    2236 (11.8)    2204 (12.4)    2190 (12.5)    2184 (12.3)
J = 12    2199 (12.1)    2210 (12.2)    2193 (11.3)    2200 (11.7)
J = 14    2202 (11.5)    2218 (10.1)    2198 (10.7)    2180 (11.4)
J = 16    2215 (11.5)    2216 (11.4)    2228 (10.7)    2202 (11.9)
J = 18    2216 (11.1)    2208 (11.6)    2205 (10.8)    2213 (9.3)
J = 20    2199 (12.0)    2195 (12.6)    2183 (12.8)    2213 (10.8)


Figure 4. Letter2k. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

Figure 4 indicates that we train more iterations for abc-mart than mart, for some cases. In our experiments, we terminated training mart whenever the training loss (5) reached $10^{-14}$ because we found out that, for most datasets and parameter settings, mart had difficulty reaching smaller training loss. In comparison, abc-mart usually had no problem reducing the training loss (5) down to $10^{-16}$ or smaller; and hence we terminated training abc-mart at $10^{-16}$. For the Letter2k dataset, our choice of the termination conditions may cause mart to terminate earlier than abc-mart in some cases. Also, as explained in Sec. 3.3, the fact that mart effectively uses larger shrinkage than abc-mart may be partially responsible for the phenomenon in Figure 4.

4.5. Experiments on the Letter4k Dataset

Table 6. Letter4k. The test mis-classification errors.

mart
          ν = 0.04       ν = 0.06       ν = 0.08       ν = 0.1
J = 4     1681 [1664]    1660 [1684]    1671 [1664]    1655 [1672]
J = 6     1618 [1584]    1584 [1584]    1588 [1596]    1577 [1588]
J = 8     1531 [1508]    1522 [1492]    1516 [1492]    1521 [1548]
J = 10    1499 [1500]    1463 [1480]    1479 [1480]    1470 [1464]
J = 12    1420 [1456]    1434 [1416]    1409 [1428]    1437 [1424]
J = 14    1410 [1412]    1388 [1392]    1377 [1400]    1396 [1380]
J = 16    1395 [1428]    1402 [1392]    1396 [1404]    1387 [1376]
J = 18    1376 [1396]    1375 [1392]    1357 [1400]    1352 [1364]
J = 20    1386 [1384]    1397 [1416]    1371 [1388]    1370 [1388]

abc-mart
          ν = 0.04       ν = 0.06       ν = 0.08       ν = 0.1
J = 4     1407 (16.3)    1372 (17.3)    1348 (19.3)    1318 (20.4)
J = 6     1292 (20.1)    1285 (18.9)    1261 (20.6)    1234 (21.8)
J = 8     1259 (17.8)    1246 (18.1)    1191 (21.4)    1183 (22.2)
J = 10    1228 (18.1)    1201 (17.9)    1181 (20.1)    1182 (19.6)
J = 12    1213 (14.6)    1178 (17.9)    1170 (17.0)    1162 (19.1)
J = 14    1181 (16.2)    1154 (16.9)    1148 (16.6)    1158 (17.0)
J = 16    1167 (16.3)    1153 (17.8)    1154 (17.3)    1142 (17.7)
J = 18    1164 (15.4)    1136 (17.4)    1126 (17.0)    1149 (15.0)
J = 20    1149 (17.1)    1127 (19.3)    1126 (17.9)    1142 (16.4)

4.6. Experiments on the Pendigits Dataset

Figure 5. Letter4k. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

Table 7. Pendigits. The test mis-classification errors.

mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     144 [145]     145 [146]     145 [144]     143 [142]
J = 6     135 [139]     135 [140]     143 [137]     135 [138]
J = 8     133 [133]     130 [132]     129 [133]     128 [134]
J = 10    132 [132]     129 [128]     127 [128]     130 [132]
J = 12    136 [134]     134 [134]     135 [140]     133 [134]
J = 14    129 [126]     131 [131]     130 [133]     133 [131]
J = 16    129 [127]     130 [129]     133 [132]     134 [126]
J = 18    132 [129]     130 [129]     126 [128]     133 [131]
J = 20    130 [129]     125 [125]     126 [130]     130 [128]

abc-mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     109 (24.3)    106 (26.9)    106 (26.9)    107 (25.2)
J = 6     109 (19.3)    105 (22.2)    104 (27.3)    102 (24.4)
J = 8     105 (20.5)    101 (22.3)    104 (19.4)    104 (18.8)
J = 10    102 (17.7)    102 (20.9)    102 (19.7)    100 (23.1)
J = 12    101 (25.7)    103 (23.1)    103 (23.7)    105 (21.1)
J = 14    105 (18.6)    102 (22.1)    102 (21.5)    102 (23.3)
J = 16    109 (15.5)    107 (17.7)    106 (20.3)    106 (20.9)
J = 18    110 (16.7)    106 (18.5)    105 (16.7)    105 (21.1)
J = 20    109 (16.2)    105 (16.0)    107 (15.1)    105 (19.2)

Figure 6. Pendigits. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]


4.7. Experiments on the Zipcode Dataset

Table 8. Zipcode. The test mis-classification errors.

mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     130 [132]     125 [128]     129 [132]     127 [128]
J = 6     123 [126]     124 [128]     123 [127]     126 [124]
J = 8     120 [119]     122 [123]     122 [120]     123 [115]
J = 10    118 [117]     118 [119]     120 [115]     118 [117]
J = 12    117 [118]     116 [118]     117 [116]     118 [113]
J = 14    118 [115]     120 [116]     119 [114]     118 [114]
J = 16    119 [121]     111 [113]     116 [114]     115 [114]
J = 18    113 [120]     114 [116]     114 [116]     114 [113]
J = 20    114 [111]     112 [110]     115 [110]     111 [115]

abc-mart
          ν = 0.04      ν = 0.06      ν = 0.08      ν = 0.1
J = 4     120 (7.7)     113 (9.5)     116 (10.1)    109 (14.2)
J = 6     110 (10.6)    112 (9.7)     109 (11.4)    104 (17.5)
J = 8     106 (11.7)    102 (16.4)    103 (15.5)    103 (16.3)
J = 10    103 (12.7)    104 (11.9)    106 (11.7)    105 (11.0)
J = 12    103 (12.0)    101 (12.9)    101 (13.7)    104 (11.9)
J = 14    103 (12.7)    106 (11.7)    103 (13.4)    104 (11.9)
J = 16    106 (10.9)    102 (8.1)     100 (13.8)    104 (9.6)
J = 18    102 (9.7)     100 (12.3)    101 (11.4)    101 (11.4)
J = 20    104 (8.8)     103 (8.0)     105 (8.7)     105 (5.4)

Figure 7. Zipcode. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

4.8. Experiments on the Optdigits Dataset

This dataset is one of the two largest datasets (Optdigits and Pendigits) used in a recent paper on boosting (Zou et al., 2008), which proposed the multi-class gentleboost and ADABOOST.ML.

For Pendigits, Zou et al. (2008) reported 3.69%, 4.09%, and 5.86% error rates, for gentleboost, ADABOOST.ML, and ADABOOST.MH (Schapire & Singer, 2000), respectively. For Optdigits, they reported 5.01%, 5.40%, and 5.18% for those three algorithms, respectively.

Table 9. Optdigits. The test mis-classification errors.

mart
          ν = 0.04     ν = 0.06     ν = 0.08     ν = 0.1
J = 4     58 [61]      57 [58]      57 [57]      59 [58]
J = 6     58 [57]      57 [54]      59 [59]      57 [56]
J = 8     61 [62]      60 [58]      57 [59]      60 [56]
J = 10    60 [62]      55 [59]      57 [57]      60 [60]
J = 12    57 [60]      58 [59]      56 [60]      60 [59]
J = 14    57 [57]      58 [61]      58 [59]      55 [57]
J = 16    60 [60]      58 [59]      59 [58]      57 [58]
J = 18    60 [59]      59 [60]      59 [58]      59 [57]
J = 20    58 [60]      61 [62]      58 [60]      59 [60]

abc-mart
          ν = 0.04     ν = 0.06     ν = 0.08     ν = 0.1
J = 4     48 (17.2)    45 (21.1)    46 (19.3)    43 (27.1)
J = 6     43 (25.9)    47 (17.5)    43 (27.1)    43 (24.6)
J = 8     46 (24.6)    45 (25.0)    46 (19.3)    47 (21.6)
J = 10    48 (20.0)    47 (14.5)    47 (17.5)    50 (16.7)
J = 12    47 (17.5)    48 (17.2)    47 (16.1)    47 (21.7)
J = 14    50 (12.3)    50 (13.8)    49 (15.5)    47 (14.6)
J = 16    51 (15.0)    48 (17.2)    46 (22.0)    49 (14.0)
J = 18    49 (18.3)    48 (18.6)    49 (20.0)    50 (15.3)
J = 20    51 (12.1)    48 (21.3)    50 (13.8)    47 (20.3)

Figure 8. Optdigits. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

4.9. Experiments on the Isolet Dataset

This dataset is high-dimensional with 617 features, for which tree algorithms become less efficient. We have only conducted experiments for one shrinkage, i.e., ν = 0.1.

Table 10. Isolet. The test mis-classification errors.

          mart (ν = 0.1)    abc-mart (ν = 0.1)
J = 4     80 [86]           64 (20.0)
J = 6     84 [86]           67 (20.2)
J = 8     84 [88]           72 (14.3)
J = 10    82 [83]           74 (9.8)
J = 12    91 [90]           74 (18.7)
J = 14    95 [94]           74 (22.1)
J = 16    94 [92]           78 (17.0)
J = 18    86 [91]           78 (9.3)
J = 20    87 [94]           78 (10.3)


Figure 9. Isolet. The test mis-classification errors. [Plots omitted. Panels: J = 6, 10, 16, 20, each with ν = 0.1; x-axis: iterations; y-axis: test misclassifications; legend label: abc.]

Experiments on the Isolet dataset help demonstrate that using weak learners with larger trees does not necessarily lead to better test results.

5. Conclusion

We present the concept of abc-boost and its concrete implementation named abc-mart, for multi-class classification (with K ≥ 3 classes). The two key components of abc-boost are: (A) By enforcing the (commonly used) constraint on the loss function, we can derive boosting algorithms for only K − 1 classes using a base class; (B) We adaptively (and greedily) choose the base class at each boosting step, to achieve the best performance. Our experiments on one large dataset and several small datasets demonstrate that abc-mart could potentially improve Friedman’s mart algorithm.

Since we use public datasets, comparisons with other boosting algorithms are available through prior publications, e.g., (Allwein et al., 2000; Holmes et al., 2002; Zou et al., 2008). We have provided extensive experiment details (no “black-box” weak learners) and hopefully our results will also be useful as the baseline for future development in multi-class classification.

Acknowledgement

The author thanks the reviewers and area chair for their constructive comments. The author is grateful for discussions with and suggestions from Trevor Hastie, Jerome Friedman, Tong Zhang, Phil Long, Cun-hui Zhang, Giles Hooker, Rich Caruana, and Thomas Dietterich.

Ping Li is partially supported by grant DMS-0808864 from the National Science Foundation and the “Beyond Search - Semantic Computing and Internet Economics” Microsoft 2007 Award as an unrestricted gift.

References

Allwein, E. L., Schapire, R. E., & Singer, Y. (2000). Reducing multiclass to binary: A unifying approach for margin classifiers. JMLR, 1, 113–141.

Bartlett, P., Freund, Y., Lee, W. S., & Schapire, R. E. (1998). Boosting the margin: a new explanation for the effectiveness of voting methods. The Annals of Statistics, 26, 1651–1686.

Cossock, D., & Zhang, T. (2006). Subset ranking using regression. COLT (pp. 605–619).

Freund, Y. (1995). Boosting a weak learning algorithm by majority. Inf. Comput., 121, 256–285.

Freund, Y., & Schapire, R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci., 55, 119–139.

Friedman, J. H. (2001). Greedy function approximation: A gradient boosting machine. The Annals of Statistics, 29, 1189–1232.

Friedman, J. H., Hastie, T. J., & Tibshirani, R. (2000). Additive logistic regression: a statistical view of boosting. The Annals of Statistics, 28, 337–407.

Holmes, G., Pfahringer, B., Kirkby, R., Frank, E., & Hall, M. (2002). Multiclass alternating decision trees. ECML (pp. 161–172). London, UK.

Lee, Y., Lin, Y., & Wahba, G. (2004). Multicategory support vector machines: Theory and application to the classification of microarray data and satellite radiance data. JASA, 99, 67–81.

Li, P., Burges, C. J., & Wu, Q. (2008). Mcrank: Learning to rank using classification and gradient boosting. NIPS.

Mason, L., Baxter, J., Bartlett, P., & Frean, M. (2000). Boosting algorithms as gradient descent. NIPS.

Schapire, R. (1990). The strength of weak learnability. Machine Learning, 5, 197–227.

Schapire, R. E., & Singer, Y. (1999). Improved boosting algorithms using confidence-rated predictions. Machine Learning, 37, 297–336.

Schapire, R. E., & Singer, Y. (2000). Boostexter: A boosting-based system for text categorization. Machine Learning, 39, 135–168.

Tewari, A., & Bartlett, P. L. (2007). On the consistency of multiclass classification methods. JMLR, 8, 1007–1025.

Zhang, T. (2004). Statistical analysis of some multi-category large margin classification methods. JMLR, 5, 1225–1251.

Zheng, Z., Chen, K., Sun, G., & Zha, H. (2007). A regression framework for learning ranking functions using relative relevance judgments. SIGIR (pp. 287–294).

Zou, H., Zhu, J., & Hastie, T. (2008). New multicategory boosting algorithms based on multicategory fisher-consistent losses. The Annals of Applied Statistics, 2, 1290–1306.

-

Robust LogitBoost and Adaptive Base Class (ABC) LogitBoost

Ping Li

Department of Statistical Science, Faculty of Computing and Information Science, Cornell University, Ithaca, NY 14853

Abstract

Logitboost is an influential boosting algorithm for classification. In this paper, we develop robust logitboost to provide an explicit formulation of tree-split criterion for building weak learners (regression trees) for logitboost. This formulation leads to a numerically stable implementation of logitboost. We then propose abc-logitboost for multi-class classification, by combining robust logitboost with the prior work of abc-boost. Previously, abc-boost was implemented as abc-mart using the mart algorithm.

Our extensive experiments on multi-class classification compare four algorithms: mart, abc-mart, (robust) logitboost, and abc-logitboost, and demonstrate the superiority of abc-logitboost. Comparisons with other learning methods including SVM and deep learning are also available through prior publications.

1 Introduction

Boosting [14, 5, 6, 1, 15, 8, 13, 7, 4] has been successful in machine learning and industry practice. This study revisits logitboost [8], focusing on multi-class classification.

We denote a training dataset by $\{y_i, \mathbf{x}_i\}_{i=1}^N$, where $N$ is the number of feature vectors (samples), $\mathbf{x}_i$ is the $i$th feature vector, and $y_i \in \{0, 1, 2, ..., K-1\}$ is the $i$th class label, where $K \geq 3$ in multi-class classification.

Both logitboost [8] and mart (multiple additive regression trees) [7] can be viewed as generalizations of classical logistic regression, which models the class probabilities $p_{i,k}$ as

$$p_{i,k} = \Pr(y_i = k \mid \mathbf{x}_i) = \frac{e^{F_{i,k}(\mathbf{x}_i)}}{\sum_{s=0}^{K-1} e^{F_{i,s}(\mathbf{x}_i)}}. \tag{1}$$

While logistic regression simply assumes $F_{i,k}(\mathbf{x}_i) = \beta_k^T \mathbf{x}_i$, logitboost and mart adopt the flexible “additive model,” which is a function of $M$ terms:

$$F^{(M)}(\mathbf{x}) = \sum_{m=1}^{M} \rho_m h(\mathbf{x}; \mathbf{a}_m), \tag{2}$$

where $h(\mathbf{x}; \mathbf{a}_m)$, the base (weak) learner, is typically a regression tree. The parameters, $\rho_m$ and $\mathbf{a}_m$, are learned from the data by maximum likelihood, which is equivalent to minimizing the negative log-likelihood loss

$$L = \sum_{i=1}^{N} L_i, \qquad L_i = -\sum_{k=0}^{K-1} r_{i,k} \log p_{i,k}, \tag{3}$$

where $r_{i,k} = 1$ if $y_i = k$ and $r_{i,k} = 0$ otherwise.

For identifiability, the sum-to-zero constraint, $\sum_{k=0}^{K-1} F_{i,k} = 0$, is usually adopted [8, 7, 17, 11, 16, 19, 18].
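As a minimal sketch of Eqs. (1) and (3), the snippet below computes the class probabilities and the negative log-likelihood loss for a tiny hand-made example. The function names `class_probabilities` and `neg_log_likelihood` and the toy values are illustrative assumptions, not part of the paper; subtracting the row maximum before exponentiating is a common numerical safeguard assumed here, and it cancels in the ratio of Eq. (1).

```python
import math

def class_probabilities(F_row):
    """Eq. (1): softmax of one sample's function values F_{i,0..K-1}."""
    m = max(F_row)  # assumed safeguard; the shift cancels in the ratio
    exps = [math.exp(f - m) for f in F_row]
    total = sum(exps)
    return [e / total for e in exps]

def neg_log_likelihood(F, y):
    """Eq. (3): since r_{i,k} is 1 only for k = y_i, L_i = -log p_{i,y_i}."""
    return -sum(math.log(class_probabilities(F_row)[label])
                for F_row, label in zip(F, y))

# Toy data (hypothetical): two samples, K = 3 classes, rows sum to zero.
F = [[0.0, 0.0, 0.0], [1.0, -0.5, -0.5]]
y = [2, 0]
p_first = class_probabilities(F[0])
loss = neg_log_likelihood(F, y)
```

With all function values at zero, every class receives probability $1/K$, which is the initialization used by the algorithms in this paper.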

1.1 Logitboost

As described in Alg. 1, [8] builds the additive model (2) by a greedy stage-wise procedure, using a second-order (diagonal) approximation, which requires knowing the first two derivatives of the loss function (3) with respect to the function values $F_{i,k}$. [8] obtained:

$$\frac{\partial L_i}{\partial F_{i,k}} = -(r_{i,k} - p_{i,k}), \qquad \frac{\partial^2 L_i}{\partial F_{i,k}^2} = p_{i,k}(1 - p_{i,k}). \tag{4}$$

While [8] assumed the sum-to-zero constraint, they showed (4) by conditioning on a “base class” and noticed that the resultant derivatives were independent of the choice of the base.
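The derivatives in (4) can be sanity-checked numerically. The sketch below compares them with central finite differences of the per-sample loss, treating each $F_{i,k}$ as a free coordinate. The helper names, the step size `h`, and the test point are assumptions chosen for illustration.

```python
import math

def softmax(F):
    m = max(F)
    e = [math.exp(f - m) for f in F]
    s = sum(e)
    return [v / s for v in e]

def loss(F, label):
    """Per-sample loss L_i = -log p_{i,label}."""
    return -math.log(softmax(F)[label])

def analytic_derivatives(F, label, k):
    """First and second derivatives from Eq. (4)."""
    p = softmax(F)
    r = 1.0 if label == k else 0.0
    return -(r - p[k]), p[k] * (1.0 - p[k])

def numeric_derivatives(F, label, k, h=1e-4):
    """Central finite differences in coordinate k only."""
    up = list(F); up[k] += h
    dn = list(F); dn[k] -= h
    l0, lu, ld = loss(F, label), loss(up, label), loss(dn, label)
    return (lu - ld) / (2 * h), (lu - 2 * l0 + ld) / (h * h)

F, label = [0.3, -1.2, 0.9], 2  # hypothetical function values and class label
checks = [(analytic_derivatives(F, label, k), numeric_derivatives(F, label, k))
          for k in range(len(F))]
```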

Algorithm 1 Logitboost [8, Alg. 6]. $\nu$ is the shrinkage.

0: $r_{i,k} = 1$ if $y_i = k$, $r_{i,k} = 0$ otherwise.

1: $F_{i,k} = 0$, $p_{i,k} = \frac{1}{K}$, $k = 0$ to $K-1$, $i = 1$ to $N$

2: For $m = 1$ to $M$ Do

3: For $k = 0$ to $K-1$ Do

4: Compute $w_{i,k} = p_{i,k}(1 - p_{i,k})$.

5: Compute $z_{i,k} = \frac{r_{i,k} - p_{i,k}}{p_{i,k}(1 - p_{i,k})}$.

6: Fit the function $f_{i,k}$ by a weighted least-square of $z_{i,k}$ to $\mathbf{x}_i$ with weights $w_{i,k}$.

7: $F_{i,k} = F_{i,k} + \nu \frac{K-1}{K}\left(f_{i,k} - \frac{1}{K}\sum_{k=0}^{K-1} f_{i,k}\right)$

8: End

9: $p_{i,k} = \exp(F_{i,k}) / \sum_{s=0}^{K-1} \exp(F_{i,s})$

10: End

At each stage, logitboost fits an individual regression function separately for each class. This diagonal approximation appears to be a must if the base learner is implemented using regression trees. For industry applications, using trees as the weak learner appears to be the standard practice.

-

1.2 Adaptive Base Class Boost

[12] derived the derivatives of (3) under the sum-to-zero constraint. Without loss of generality, we can assume that class 0 is the base class. For any $k \neq 0$,

$$\frac{\partial L_i}{\partial F_{i,k}} = (r_{i,0} - p_{i,0}) - (r_{i,k} - p_{i,k}), \tag{5}$$

$$\frac{\partial^2 L_i}{\partial F_{i,k}^2} = p_{i,0}(1 - p_{i,0}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,0} p_{i,k}. \tag{6}$$

The base class must be identified at each boosting iteration during training. [12] suggested an exhaustive procedure to adaptively find the best base class to minimize the training loss (3) at each iteration. [12] combined the idea of abc-boost with mart to develop abc-mart, which achieved good performance in multi-class classification.
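To illustrate how (5) and (6) arise, the sketch below perturbs $F_{i,k}$ while moving the base-class value $F_{i,0}$ in the opposite direction, so that the sum-to-zero constraint is preserved, and compares finite differences of the loss with the closed forms. All names and the test point are hypothetical and chosen only for this check.

```python
import math

def softmax(F):
    m = max(F)
    e = [math.exp(f - m) for f in F]
    s = sum(e)
    return [v / s for v in e]

def loss(F, label):
    return -math.log(softmax(F)[label])

def abc_derivatives(F, label, k, base=0):
    """Eqs. (5) and (6) for class k with the given base class."""
    p = softmax(F)
    r = [1.0 if label == j else 0.0 for j in range(len(F))]
    grad = (r[base] - p[base]) - (r[k] - p[k])
    hess = p[base] * (1 - p[base]) + p[k] * (1 - p[k]) + 2 * p[base] * p[k]
    return grad, hess

def constrained_numeric(F, label, k, base=0, h=1e-4):
    """Finite differences along the direction that keeps sum_k F_k = 0."""
    def shifted(delta):
        G = list(F)
        G[k] += delta
        G[base] -= delta  # the base class absorbs the change
        return loss(G, label)
    f0, fu, fd = shifted(0.0), shifted(h), shifted(-h)
    return (fu - fd) / (2 * h), (fu - 2 * f0 + fd) / (h * h)

F, label = [-0.4, 1.0, -0.6], 1  # sums to zero; values are made up
pairs = [(abc_derivatives(F, label, k), constrained_numeric(F, label, k))
         for k in (1, 2)]
```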

It was believed that logitboost could be numerically unstable [8, 7, 9, 3]. In this paper, we provide an explicit formulation for tree construction to demonstrate that logitboost is actually stable. We name this construction robust logitboost. We then combine the idea of robust logitboost with abc-boost to develop abc-logitboost for multi-class classification, which often considerably improves abc-mart.

2 Robust Logitboost

In practice, a tree is the default weak learner. The next subsection presents the tree-split criterion of robust logitboost.

2.1 Tree-Split Criterion Using 2nd-order Information

Consider $N$ weights $w_i$ and $N$ response values $z_i$, $i = 1$ to $N$, which are assumed to be ordered according to the sorted order of the corresponding feature values. The tree-split procedure is to find the index $s$, $1 \leq s < N$, such that the weighted square error (SE) is reduced the most if split at $s$. That is, we seek the $s$ to maximize

$$\begin{aligned}
Gain(s) &= SE_T - (SE_L + SE_R) \\
&= \sum_{i=1}^{N} (z_i - \bar{z})^2 w_i - \left[\sum_{i=1}^{s} (z_i - \bar{z}_L)^2 w_i + \sum_{i=s+1}^{N} (z_i - \bar{z}_R)^2 w_i\right],
\end{aligned}$$

where

$$\bar{z} = \frac{\sum_{i=1}^{N} z_i w_i}{\sum_{i=1}^{N} w_i}, \qquad \bar{z}_L = \frac{\sum_{i=1}^{s} z_i w_i}{\sum_{i=1}^{s} w_i}, \qquad \bar{z}_R = \frac{\sum_{i=s+1}^{N} z_i w_i}{\sum_{i=s+1}^{N} w_i}.$$

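We can simplify the expression for $Gain(s)$ to be:

$$\begin{aligned}
Gain(s) &= \sum_{i=1}^{N} (z_i^2 + \bar{z}^2 - 2\bar{z} z_i) w_i - \sum_{i=1}^{s} (z_i^2 + \bar{z}_L^2 - 2\bar{z}_L z_i) w_i - \sum_{i=s+1}^{N} (z_i^2 + \bar{z}_R^2 - 2\bar{z}_R z_i) w_i \\
&= \sum_{i=1}^{N} (\bar{z}^2 - 2\bar{z} z_i) w_i - \sum_{i=1}^{s} (\bar{z}_L^2 - 2\bar{z}_L z_i) w_i - \sum_{i=s+1}^{N} (\bar{z}_R^2 - 2\bar{z}_R z_i) w_i \\
&= \left[\bar{z}^2 \sum_{i=1}^{N} w_i - 2\bar{z} \sum_{i=1}^{N} z_i w_i\right] - \left[\bar{z}_L^2 \sum_{i=1}^{s} w_i - 2\bar{z}_L \sum_{i=1}^{s} z_i w_i\right] - \left[\bar{z}_R^2 \sum_{i=s+1}^{N} w_i - 2\bar{z}_R \sum_{i=s+1}^{N} z_i w_i\right] \\
&= \left[-\bar{z} \sum_{i=1}^{N} z_i w_i\right] - \left[-\bar{z}_L \sum_{i=1}^{s} z_i w_i\right] - \left[-\bar{z}_R \sum_{i=s+1}^{N} z_i w_i\right] \\
&= \frac{\left[\sum_{i=1}^{s} z_i w_i\right]^2}{\sum_{i=1}^{s} w_i} + \frac{\left[\sum_{i=s+1}^{N} z_i w_i\right]^2}{\sum_{i=s+1}^{N} w_i} - \frac{\left[\sum_{i=1}^{N} z_i w_i\right]^2}{\sum_{i=1}^{N} w_i}.
\end{aligned}$$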

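Plugging in $w_i = p_{i,k}(1 - p_{i,k})$ and $z_i = \frac{r_{i,k} - p_{i,k}}{p_{i,k}(1 - p_{i,k})}$ yields

$$Gain(s) = \frac{\left[\sum_{i=1}^{s} (r_{i,k} - p_{i,k})\right]^2}{\sum_{i=1}^{s} p_{i,k}(1 - p_{i,k})} + \frac{\left[\sum_{i=s+1}^{N} (r_{i,k} - p_{i,k})\right]^2}{\sum_{i=s+1}^{N} p_{i,k}(1 - p_{i,k})} - \frac{\left[\sum_{i=1}^{N} (r_{i,k} - p_{i,k})\right]^2}{\sum_{i=1}^{N} p_{i,k}(1 - p_{i,k})}. \tag{7}$$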

There are at least two ways to see why the criterion given by (7) is numerically stable. First of all, the computations involve $\sum p_{i,k}(1 - p_{i,k})$ as a group. It is much less likely that $p_{i,k}(1 - p_{i,k}) \approx 0$ for all $i$'s in the region. Secondly, if indeed $p_{i,k}(1 - p_{i,k}) \to 0$ for all $i$'s in this region, it means the model is fitted perfectly, i.e., $p_{i,k} \to r_{i,k}$. In other words, (e.g.,) $\left[\sum_{i=1}^{N} (r_{i,k} - p_{i,k})\right]^2$ in (7) also approaches zero at the square rate.
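The closed form (7) can be checked against the definition $SE_T - (SE_L + SE_R)$. The following sketch, with made-up labels and probabilities, evaluates the gain for every split position using running sums of $r_{i,k} - p_{i,k}$ and $p_{i,k}(1 - p_{i,k})$, so the individual ratios $z_i$ are never formed in the closed-form path. The function names are illustrative assumptions.

```python
def weighted_se(z, w):
    """Weighted square error around the weighted mean."""
    mean = sum(zi * wi for zi, wi in zip(z, w)) / sum(w)
    return sum(wi * (zi - mean) ** 2 for zi, wi in zip(z, w))

def gain_direct(z, w, s):
    """Gain(s) = SE_T - (SE_L + SE_R), straight from the definition."""
    return weighted_se(z, w) - weighted_se(z[:s], w[:s]) - weighted_se(z[s:], w[s:])

def gains_closed_form(g, w):
    """Eq. (7) for s = 1..N-1, where g_i = r_i - p_i = z_i * w_i."""
    G, W = sum(g), sum(w)
    gl = wl = 0.0
    out = []
    for s in range(1, len(g)):
        gl += g[s - 1]
        wl += w[s - 1]
        out.append(gl * gl / wl + (G - gl) ** 2 / (W - wl) - G * G / W)
    return out

# Hypothetical values, already sorted by the feature under consideration.
r = [0, 0, 1, 0, 1, 1]
p = [0.2, 0.4, 0.3, 0.5, 0.7, 0.9]
g = [ri - pi for ri, pi in zip(r, p)]
w = [pi * (1 - pi) for pi in p]
z = [gi / wi for gi, wi in zip(g, w)]

closed = gains_closed_form(g, w)
direct = [gain_direct(z, w, s) for s in range(1, len(z))]
best_s = max(range(1, len(z)), key=lambda s: closed[s - 1])
```

Only the grouped sums appear in a denominator of the closed form, which is the property the stability argument above relies on.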

2.2 The Robust Logitboost Algorithm

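Algorithm 2 Robust logitboost, which is very similar to Friedman's mart algorithm [7], except for Line 4.

1: $F_{i,k} = 0$, $p_{i,k} = \frac{1}{K}$, $k = 0$ to $K-1$, $i = 1$ to $N$

2: For $m = 1$ to $M$ Do

3: For $k = 0$ to $K-1$ Do

4: $\{R_{j,k,m}\}_{j=1}^{J}$ = $J$-terminal node regression tree from $\{r_{i,k} - p_{i,k}, \mathbf{x}_i\}_{i=1}^{N}$, with weights $p_{i,k}(1 - p_{i,k})$ as in (7)

5: $\beta_{j,k,m} = \frac{K-1}{K} \frac{\sum_{\mathbf{x}_i \in R_{j,k,m}} (r_{i,k} - p_{i,k})}{\sum_{\mathbf{x}_i \in R_{j,k,m}} (1 - p_{i,k}) p_{i,k}}$

6: $F_{i,k} = F_{i,k} + \nu \sum_{j=1}^{J} \beta_{j,k,m} 1_{\mathbf{x}_i \in R_{j,k,m}}$

7: End

8: $p_{i,k} = \exp(F_{i,k}) / \sum_{s=0}^{K-1} \exp(F_{i,s})$

9: End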

Alg. 2 describes robust logitboost using the tree-split criterion (7). Note that after trees are constructed, the values of the terminal nodes are computed by

$$\frac{\sum_{node} z_{i,k} w_{i,k}}{\sum_{node} w_{i,k}} = \frac{\sum_{node} (r_{i,k} - p_{i,k})}{\sum_{node} p_{i,k}(1 - p_{i,k})}, \tag{8}$$

which explains Line 5 of Alg. 2.
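A small sketch of the terminal-node computation follows. It assumes the tree has already assigned each sample to a leaf (the list `leaf_of` is hypothetical) and applies Eq. (8) together with the $\frac{K-1}{K}$ factor from Line 5 of Alg. 2. The inputs are made up, and no safeguard for a zero denominator is included.

```python
def terminal_node_values(leaf_of, r, p, K):
    """Line 5 of Alg. 2: (K-1)/K * sum(r - p) / sum(p * (1 - p)) per leaf."""
    num, den = {}, {}
    for leaf, ri, pi in zip(leaf_of, r, p):
        num[leaf] = num.get(leaf, 0.0) + (ri - pi)
        den[leaf] = den.get(leaf, 0.0) + pi * (1.0 - pi)
    return {leaf: (K - 1) / K * num[leaf] / den[leaf] for leaf in num}

# Hypothetical example: four samples, two leaves, K = 3 classes.
leaf_of = [0, 0, 1, 1]
r = [0, 1, 1, 1]
p = [0.25, 0.5, 0.5, 0.75]
beta = terminal_node_values(leaf_of, r, p, K=3)
```

For these inputs the two leaf values work out to $8/21$ and $8/7$.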

2.3 Friedman’s Mart Algorithm

Friedman [7] proposed mart (multiple additive regression trees), a creative combination of gradient descent and Newton's method, by using the first-order information to construct the trees and using both the first- & second-order information to determine the values of the terminal nodes.

-

Corresponding to (7), the tree-split criterion of mart is

$$MartGain(s) = \frac{1}{s}\left[\sum_{i=1}^{s} (r_{i,k} - p_{i,k})\right]^2 + \frac{1}{N-s}\left[\sum_{i=s+1}^{N} (r_{i,k} - p_{i,k})\right]^2 - \frac{1}{N}\left[\sum_{i=1}^{N} (r_{i,k} - p_{i,k})\right]^2. \tag{9}$$

In Sec. 2.1, plugging in responses $z_{i,k} = r_{i,k} - p_{i,k}$ and weights $w_i = 1$ yields (9).
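The relationship between (9) and the general criterion of Sec. 2.1 can be confirmed with a short check: with unit weights, the general gain reduces to the mart gain at every split position. The function names and the sample values below are assumptions made for illustration.

```python
def split_gain(zw, w, s):
    """General Sec. 2.1 gain, where zw[i] = z_i * w_i."""
    def term(a, b):
        return sum(a) ** 2 / sum(b)
    return term(zw[:s], w[:s]) + term(zw[s:], w[s:]) - term(zw, w)

def mart_gain(g, s):
    """Eq. (9) with g_i = r_i - p_i."""
    n = len(g)
    return sum(g[:s]) ** 2 / s + sum(g[s:]) ** 2 / (n - s) - sum(g) ** 2 / n

# Hypothetical residuals r - p, sorted by the feature under consideration.
g = [-0.2, -0.4, 0.7, -0.5, 0.3, 0.1]
ones = [1.0] * len(g)
general = [split_gain(g, ones, s) for s in range(1, len(g))]
special = [mart_gain(g, s) for s in range(1, len(g))]
```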

Once the tree is constructed, Friedman [7] applied a one-step Newton update to obtain the values of the terminal nodes. Interestingly, this one-step Newton update yields exactly the same equation as (8). In other words, (8) is interpreted as weighted average in logitboost but it is interpreted as the one-step Newton update in mart.

Therefore, the mart algorithm is similar to Alg. 2; we only need to change Line 4, by replacing (7) with (9).

In fact, Eq. (8) also provides one more explanation why the tree-split criterion (7) is numerically stable, because (7) is always numerically more stable than (8). The update formula (8) has been successfully used in practice for 10 years since the advent of mart.

2.4 Experiments on Binary Classification

While we focus on multi-class classification, we also provide some experiments on binary classification in App. A.

3 Adaptive Base Class (ABC) Logitboost

Developed by [12], the abc-boost algorithm consists of the following two components:

1. Using the widely-used sum-to-zero constraint [8, 7, 17, 11, 16, 19, 18] on the loss function, one can formulate boosting algorithms only for $K-1$ classes, by using one class as the base class.

2. At each boosting iteration, adaptively select the base class according to the training loss (3). [12] suggested an exhaustive search strategy.

Abc-boost by itself is not a concrete algorithm. [12] developed abc-mart by combining abc-boost with mart. In this paper, we develop abc-logitboost, a new algorithm by combining abc-boost with (robust) logitboost.

Alg. 3 presents abc-logitboost, using the derivatives in (5) and (6) and the same exhaustive search strategy as used by abc-mart. Again, abc-logitboost differs from abc-mart only in the tree-split procedure (Line 5 in Alg. 3).

Compared to Alg. 2, abc-logitboost differs from (robust) logitboost in that they use different derivatives and abc-logitboost needs an additional loop to select the base class at each boosting iteration.

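Algorithm 3 Abc-logitboost using the exhaustive search strategy for the base class, as suggested in [12]. The vector $B$ stores the base class numbers.

1: $F_{i,k} = 0$, $p_{i,k} = \frac{1}{K}$, $k = 0$ to $K-1$, $i = 1$ to $N$

2: For $m = 1$ to $M$ Do

3: For $b = 0$ to $K-1$ Do

4: For $k = 0$ to $K-1$, $k \neq b$, Do

5: $\{R_{j,k,m}\}_{j=1}^{J}$ = $J$-terminal node regression tree from $\{-(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k}), \mathbf{x}_i\}_{i=1}^{N}$ with weights $p_{i,b}(1 - p_{i,b}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,b} p_{i,k}$, as in Sec. 2.1.

6: $\beta_{j,k,m} = \frac{\sum_{\mathbf{x}_i \in R_{j,k,m}} \left[-(r_{i,b} - p_{i,b}) + (r_{i,k} - p_{i,k})\right]}{\sum_{\mathbf{x}_i \in R_{j,k,m}} \left[p_{i,b}(1 - p_{i,b}) + p_{i,k}(1 - p_{i,k}) + 2 p_{i,b} p_{i,k}\right]}$

7: $G_{i,k,b} = F_{i,k} + \nu \sum_{j=1}^{J} \beta_{j,k,m} 1_{\mathbf{x}_i \in R_{j,k,m}}$

8: End

9: $G_{i,b,b} = -\sum_{k \neq b} G_{i,k,b}$

10: $q_{i,k} = \exp(G_{i,k,b}) / \sum_{s=0}^{K-1} \exp(G_{i,s,b})$

11: $L^{(b)} = -\sum_{i=1}^{N} \sum_{k=0}^{K-1} r_{i,k} \log(q_{i,k})$

12: End

13: $B(m) = \arg\min_{b} L^{(b)}$

14: $F_{i,k} = G_{i,k,B(m)}$

15: $p_{i,k} = \exp(F_{i,k}) / \sum_{s=0}^{K-1} \exp(F_{i,s})$

16: End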
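The exhaustive base-class search of Alg. 3 can be sketched in a few dozen lines under strong simplifying assumptions: a single real-valued feature, a two-leaf stump ($J = 2$) in place of a general $J$-terminal node tree, and a tiny hypothetical dataset. The names `fit_stump` and `abc_iteration` are illustrative and do not come from the paper.

```python
import math

def softmax(row):
    m = max(row)
    e = [math.exp(v - m) for v in row]
    t = sum(e)
    return [v / t for v in e]

def fit_stump(x, g, w):
    """Two-leaf tree on one feature (assumption: J = 2).

    g[i] and w[i] are the response numerator and weight of Line 5; the split
    maximizes the Sec. 2.1 gain and each leaf predicts sum(g) / sum(w) (Line 6).
    """
    order = sorted(range(len(x)), key=lambda i: x[i])
    G, W = sum(g), sum(w)
    gl = wl = 0.0
    best = None
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        gl += g[i]
        wl += w[i]
        if x[i] == x[nxt]:
            continue  # no threshold separates equal feature values
        gain = gl * gl / wl + (G - gl) ** 2 / (W - wl) - G * G / W
        if best is None or gain > best[0]:
            best = (gain, 0.5 * (x[i] + x[nxt]), gl / wl, (G - gl) / (W - wl))
    _, threshold, left, right = best
    return lambda v: left if v <= threshold else right

def abc_iteration(x, y, F, K, nu=0.1):
    """Lines 3-14 of Alg. 3 for one iteration; returns (F_new, base, loss)."""
    N = len(x)
    P = [softmax(row) for row in F]
    best = None
    for b in range(K):  # try every class as the base class
        G = [[0.0] * K for _ in range(N)]
        for k in range(K):
            if k == b:
                continue
            g = [-(float(y[i] == b) - P[i][b]) + (float(y[i] == k) - P[i][k])
                 for i in range(N)]
            w = [P[i][b] * (1 - P[i][b]) + P[i][k] * (1 - P[i][k])
                 + 2 * P[i][b] * P[i][k] for i in range(N)]
            stump = fit_stump(x, g, w)
            for i in range(N):
                G[i][k] = F[i][k] + nu * stump(x[i])
        for i in range(N):  # Line 9: sum-to-zero determines the base class
            G[i][b] = -sum(G[i][k] for k in range(K) if k != b)
        loss = -sum(math.log(softmax(G[i])[y[i]]) for i in range(N))
        if best is None or loss < best[2]:
            best = (G, b, loss)
    return best

# Hypothetical toy data: one feature, three classes.
x = [0.1, 0.4, 0.5, 0.9, 1.3, 1.7]
y = [0, 0, 1, 1, 2, 2]
K = 3
F = [[0.0] * K for _ in x]
initial_loss = len(x) * math.log(K)  # loss (3) when all p_{i,k} = 1/K
history = []
for m in range(5):
    F, base, loss = abc_iteration(x, y, F, K)
    history.append((base, loss))
```

Each entry of `history` records the selected base class $B(m)$ and the training loss after that iteration. The cost of the inner search grows with $K$ because every candidate base class requires fitting $K - 1$ trees.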

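3.1 Why Does the Choice of Base Class Matter?

It matters because of the diagonal approximation; that is, fitting a regression tree for each class at each boosting iteration. To see this, we can take a look at the Hessian matrix, for $K = 3$. Using the original logitboost/mart derivatives (4), and writing $p_k$ for $p_{i,k}$ and $F_k$ for $F_{i,k}$, the determinant of the Hessian matrix is

$$\begin{vmatrix}
\frac{\partial^2 L_i}{\partial F_0^2} & \frac{\partial^2 L_i}{\partial F_0 \partial F_1} & \frac{\partial^2 L_i}{\partial F_0 \partial F_2} \\
\frac{\partial^2 L_i}{\partial F_1 \partial F_0} & \frac{\partial^2 L_i}{\partial F_1^2} & \frac{\partial^2 L_i}{\partial F_1 \partial F_2} \\
\frac{\partial^2 L_i}{\partial F_2 \partial F_0} & \frac{\partial^2 L_i}{\partial F_2 \partial F_1} & \frac{\partial^2 L_i}{\partial F_2^2}
\end{vmatrix}
=
\begin{vmatrix}
p_0(1 - p_0) & -p_0 p_1 & -p_0 p_2 \\
-p_1 p_0 & p_1(1 - p_1) & -p_1 p_2 \\
-p_2 p_0 & -p_2 p_1 & p_2(1 - p_2)
\end{vmatrix}
= 0$$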

as expected, because there are only $K - 1$ degrees of freedom. A simple fix is to use the diagonal approximation [8, 7]. In fact, when trees are used as the base learner, it seems one must use the diagonal approximation.

Next, we consider the derivatives (5) and (6). This time, when $K = 3$ and $k = 0$ is the base class, we only have a 2 by 2 Hessian matrix, whose determinant is

$$\begin{vmatrix}
\frac{\partial^2 L_i}{\partial F_1^2} & \frac{\partial^2 L_i}{\partial F_1 \partial F_2} \\
\frac{\partial^2 L_i}{\partial F_2 \partial F_1} & \frac{\partial^2 L_i}{\partial F_2^2}
\end{vmatrix}
=
\begin{vmatrix}
p_0(1 - p_0) + p_1(1 - p_1) + 2 p_0 p_1 & p_0 - p_0^2 + p_0 p_1 + p_0 p_2 - p_1 p_2 \\
p_0 - p_0^2 + p_0 p_1 + p_0 p_2 - p_1 p_2 & p_0(1 - p_0) + p_2(1 - p_2) + 2 p_0 p_2
\end{vmatrix}$$

$$= p_0 p_1 + p_0 p_2 + p_1 p_2 - p_0 p_1^2 - p_0 p_2^2 - p_1 p_2^2 - p_2 p_1^2 - p_1 p_0^2 - p_2 p_0^2 + 6 p_0 p_1 p_2,$$

which is non-zero and is in fact independent of the choice of the base class (even though we assume $k = 0$ as the base in this example). In other words, the choice of the base class would not matter if the full Hessian is used.

However, the choice of the base class will matter because we will have to use diagonal approximation in order to construct trees at each iteration.
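Both determinant claims can be verified numerically. The sketch below builds the full 3 by 3 Hessian and the reduced 2 by 2 Hessian for each possible base class, using the fact that under the sum-to-zero constraint a change in a free class is mirrored with opposite sign in the base class. It also checks a simplification that follows from $p_0 + p_1 + p_2 = 1$: each group such as $p_0 p_1 - p_0 p_1^2 - p_1 p_0^2$ equals $p_0 p_1 p_2$, so the polynomial above collapses to $9 p_0 p_1 p_2$, which is symmetric in the three classes. The function names and probability values are illustrative assumptions.

```python
def full_hessian(p):
    """Second derivatives of L_i w.r.t. unconstrained F_0..F_{K-1}."""
    K = len(p)
    return [[p[j] * (1 - p[j]) if j == k else -p[j] * p[k] for k in range(K)]
            for j in range(K)]

def reduced_hessian(p, base):
    """Hessian w.r.t. the K-1 free classes when F_base = -sum of the others."""
    H = full_hessian(p)
    free = [k for k in range(len(p)) if k != base]
    return [[H[k][l] - H[k][base] - H[base][l] + H[base][base] for l in free]
            for k in free]

def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

p = [0.5, 0.3, 0.2]  # hypothetical class probabilities summing to one
p0, p1, p2 = p
polynomial = (p0 * p1 + p0 * p2 + p1 * p2
              - p0 * p1 ** 2 - p0 * p2 ** 2 - p1 * p2 ** 2
              - p2 * p1 ** 2 - p1 * p0 ** 2 - p2 * p0 ** 2
              + 6 * p0 * p1 * p2)
full_det = det3(full_hessian(p))
reduced_dets = [det2(reduced_hessian(p, b)) for b in range(3)]
```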

4 Experiments on Multi-class Classification

4.1 Datasets

Table 1 lists the datasets used in our study.

Table 1: Datasets

| dataset | K | # training | # test | # features |
|---|---|---|---|---|
| Covertype290k | 7 | 290506 | 290506 | 54 |
| Covertype145k | 7 | 145253 | 290506 | 54 |
| Poker525k | 10 | 525010 | 500000 | 25 |
| Poker275k | 10 | 275010 | 500000 | 25 |
| Poker150k | 10 | 150010 | 500000 | 25 |
| Poker100k | 10 | 100010 | 500000 | 25 |
| Poker25kT1 | 10 | 25010 | 500000 | 25 |
| Poker25kT2 | 10 | 25010 | 500000 | 25 |
| Mnist10k | 10 | 10000 | 60000 | 784 |
| M-Basic | 10 | 12000 | 50000 | 784 |
| M-Rotate | 10 | 12000 | 50000 | 784 |
| M-Image | 10 | 12000 | 50000 | 784 |
| M-Rand | 10 | 12000 | 50000 | 784 |
| M-RotImg | 10 | 12000 | 50000 | 784 |
| M-Noise1 | 10 | 10000 | 2000 | 784 |
| M-Noise2 | 10 | 10000 | 2000 | 784 |
| M-Noise3 | 10 | 10000 | 2000 | 784 |
| M-Noise4 | 10 | 10000 | 2000 | 784 |
| M-Noise5 | 10 | 10000 | 2000 | 784 |
| M-Noise6 | 10 | 10000 | 2000 | 784 |
| Letter15k | 26 | 15000 | 5000 | 16 |
| Letter4k | 26 | 4000 | 16000 | 16 |
| Letter2k | 26 | 2000 | 18000 | 16 |

Covertype: The original UCI Covertype dataset is fairly large, with 581012 samples. To generate Covertype290k, we randomly split the original data into halves, one half for training and another half for testing. For Covertype145k, we randomly select one half from the training set of Covertype290k and still keep the same test set.

Poker: The UCI Poker dataset originally had 25010 samples for training and 1000000 samples for testing. Since the test set is very large, we randomly divide it equally into two parts (I and II). Poker25kT1 uses the original training set for training and Part I of the original test set for testing. Poker25kT2 uses the original training set for training and Part II of the original test set for testing. This way, Poker25kT1 can use the test set of Poker25kT2 for validation, and Poker25kT2 can use the test set of Poker25kT1 for validation. The two test sets are still very large.

In addition, we enlarge the training set to form Poker525k, Poker275k, Poker150k, and Poker100k. All four enlarged training sets use the same test set as Poker25kT2 (i.e., Part II of the original test set). The training set of Poker525k contains the original (25k) training set plus Part I of the original test set. The training set of Poker275k/Poker150k/Poker100k contains the original training set plus 250k/125k/75k samples from Part I of the original test set.

Mnist: While the original Mnist dataset is extremely popular, it is known to be too easy [10]. Originally, Mnist used 60000 samples for training and 10000 samples for testing. Mnist10k uses the original (10000) test set for training and the original (60000) training set for testing.

Mnist with Many Variations: [10] created a variety of difficult datasets by adding background (correlated) noises, background images, rotations, etc., to the original Mnist data. We shortened the names of the datasets to be M-Basic, M-Rotate, M-Image, M-Rand, M-RotImg, and M-Noise1, M-Noise2 to M-Noise6.

Letter: The UCI Letter dataset has in total 20000 samples. In our experiments, Letter4k (Letter2k) uses the last 4000 (2000) samples for training and the rest for testing. The purpose is to demonstrate the performance of the algorithms using only small training sets. We also include Letter15k, which is one of the standard partitions, by using 15000 samples for training and 5000 samples for testing.

4.2 The Main Goal of Our Experiments

The main goal of our experiments is to demonstrate that

1. Abc-logitboost and abc-mart outperform (robust) logitboost and mart, respectively.

2. (Robust) logitboost often outperforms mart.

3. Abc-logitboost often outperforms abc-mart.

4. The improvements hold for (almost) all reasonable parameters, not just for a few selected sets of parameters.

The main parameter is $J$, the number of terminal tree nodes. It is often the case that test errors are not very sensitive to the shrinkage parameter $\nu$, provided $\nu \leq 0.1$ [7, 3].

4.3 Detailed Experiment Results on Mnist10k, M-Image, Letter4k, and Letter2k

For these datasets, we experiment with every combination of $J \in \{4, 6, 8, 10, 12, 14, 16, 18, 20, 24, 30, 40, 50\}$ and $\nu \in \{0.04, 0.06, 0.08, 0.1\}$. We train the four boosting algorithms till the training loss (3) is close to the machine accuracy to exhaust the capacity of the learners, for reliable comparisons, up to $M = 10000$ iterations. We report the test mis-classification errors at the last iterations.

For Mnist10k, Table 2 presents the test mis-classification errors, which verifies the consistent improvements of (A) abc-logitboost over (robust) logitboost, (B) abc-logitboost over abc-mart, (C) (robust) logitboost over mart, and (D) abc-mart over mart. The table also verifies that the performances are not too sensitive to the parameters, especially considering the number of test samples is 60000. In App. B, Table 12 reports the testing $P$-values for every combination of $J$ and $\nu$.

Tables 3, 4, and 5 present the test mis-classification errors on M-Image, Letter4k, and Letter2k, respectively.

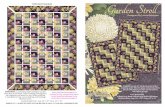

Fig. 1 provides the test errors for all boosting iterations. While we believe this is the most reliable comparison, unfortunately there is no space to present them all.

-

Table 2: Mnist10k. Upper table: The test mis-classification errors of mart and abc-mart. Bottom table: The test errors of logitboost and abc-logitboost.

| J | ν=0.04 mart | ν=0.04 abc-mart | ν=0.06 mart | ν=0.06 abc-mart | ν=0.08 mart | ν=0.08 abc-mart | ν=0.1 mart | ν=0.1 abc-mart |
|---|---|---|---|---|---|---|---|---|
| 4 | 3356 | 3060 | 3329 | 3019 | 3318 | 2855 | 3326 | 2794 |
| 6 | 3185 | 2760 | 3093 | 2626 | 3129 | 2656 | 3217 | 2590 |
| 8 | 3049 | 2558 | 3054 | 2555 | 3054 | 2534 | 3035 | 2577 |
| 10 | 3020 | 2547 | 2973 | 2521 | 2990 | 2520 | 2978 | 2506 |
| 12 | 2927 | 2498 | 2917 | 2457 | 2945 | 2488 | 2907 | 2490 |
| 14 | 2925 | 2487 | 2901 | 2471 | 2877 | 2470 | 2884 | 2454 |
| 16 | 2899 | 2478 | 2893 | 2452 | 2873 | 2465 | 2860 | 2451 |
| 18 | 2857 | 2469 | 2880 | 2460 | 2870 | 2437 | 2855 | 2454 |
| 20 | 2833 | 2441 | 2834 | 2448 | 2834 | 2444 | 2815 | 2440 |
| 24 | 2840 | 2447 | 2827 | 2431 | 2801 | 2427 | 2784 | 2455 |
| 30 | 2826 | 2457 | 2822 | 2443 | 2828 | 2470 | 2807 | 2450 |
| 40 | 2837 | 2482 | 2809 | 2440 | 2836 | 2447 | 2782 | 2506 |
| 50 | 2813 | 2502 | 2826 | 2459 | 2824 | 2469 | 2786 | 2499 |

| J | ν=0.04 logitboost | ν=0.04 abc-logit | ν=0.06 logitboost | ν=0.06 abc-logit | ν=0.08 logitboost | ν=0.08 abc-logit | ν=0.1 logitboost | ν=0.1 abc-logit |
|---|---|---|---|---|---|---|---|---|
| 4 | 2936 | 2630 | 2970 | 2600 | 2980 | 2535 | 3017 | 2522 |
| 6 | 2710 | 2263 | 2693 | 2252 | 2710 | 2226 | 2711 | 2223 |
| 8 | 2599 | 2159 | 2619 | 2138 | 2589 | 2120 | 2597 | 2143 |
| 10 | 2553 | 2122 | 2527 | 2118 | 2516 | 2091 | 2500 | 2097 |
| 12 | 2472 | 2084 | 2468 | 2090 | 2468 | 2090 | 2464 | 2095 |
| 14 | 2451 | 2083 | 2420 | 2094 | 2432 | 2063 | 2419 | 2050 |
| 16 | 2424 | 2111 | 2437 | 2114 | 2393 | 2097 | 2395 | 2082 |
| 18 | 2399 | 2088 | 2402 | 2087 | 2389 | 2088 | 2380 | 2097 |
| 20 | 2388 | 2128 | 2414 | 2112 | 2411 | 2095 | 2381 | 2102 |
| 24 | 2442 | 2174 | 2415 | 2147 | 2417 | 2129 | 2419 | 2138 |
| 30 | 2468 | 2235 | 2434 | 2237 | 2423 | 2221 | 2449 | 2177 |
| 40 | 2551 | 2310 | 2509 | 2284 | 2518 | 2257 | 2531 | 2260 |
| 50 | 2612 | 2353 | 2622 | 2359 | 2579 | 2332 | 2570 | 2341 |

Table 3: M-Image. Upper table: The test mis-classification errors of mart and abc-mart. Bottom table: The test errors of logitboost and abc-logitboost.

| J | ν=0.04 mart | ν=0.04 abc-mart | ν=0.06 mart | ν=0.06 abc-mart | ν=0.08 mart | ν=0.08 abc-mart | ν=0.1 mart | ν=0.1 abc-mart |
|---|---|---|---|---|---|---|---|---|
| 4 | 6536 | 5867 | 6511 | 5813 | 6496 | 5774 | 6449 | 5756 |
| 6 | 6203 | 5471 | 6174 | 5414 | 6176 | 5394 | 6139 | 5370 |
| 8 | 6095 | 5320 | 6081 | 5251 | 6132 | 5141 | 6220 | 5181 |
| 10 | 6076 | 5138 | 6104 | 5100 | 6154 | 5086 | 6332 | 4983 |
| 12 | 6036 | 4963 | 6086 | 4956 | 6104 | 4926 | 6117 | 4867 |
| 14 | 5922 | 4885 | 6037 | 4866 | 6018 | 4789 | 5993 | 4839 |
| 16 | 5914 | 4847 | 5937 | 4806 | 5940 | 4797 | 5883 | 4766 |
| 18 | 5955 | 4835 | 5886 | 4778 | 5896 | 4733 | 5814 | 4730 |
| 20 | 5870 | 4749 | 5847 | 4722 | 5829 | 4707 | 5821 | 4727 |
| 24 | 5816 | 4725 | 5766 | 4659 | 5785 | 4662 | 5752 | 4625 |
| 30 | 5729 | 4649 | 5738 | 4629 | 5724 | 4626 | 5702 | 4654 |
| 40 | 5752 | 4619 | 5699 | 4636 | 5672 | 4597 | 5676 | 4660 |
| 50 | 5760 | 4674 | 5731 | 4667 | 5723 | 4659 | 5725 | 4649 |

| J | ν=0.04 logitboost | ν=0.04 abc-logit | ν=0.06 logitboost | ν=0.06 abc-logit | ν=0.08 logitboost | ν=0.08 abc-logit | ν=0.1 logitboost | ν=0.1 abc-logit |
|---|---|---|---|---|---|---|---|---|
| 4 | 5837 | 5539 | 5852 | 5480 | 5834 | 5408 | 5802 | 5430 |
| 6 | 5473 | 5076 | 5471 | 4925 | 5457 | 4950 | 5437 | 4919 |
| 8 | 5294 | 4756 | 5285 | 4748 | 5193 | 4678 | 5187 | 4670 |
| 10 | 5141 | 4597 | 5120 | 4572 | 5052 | 4524 | 5049 | 4537 |
| 12 | 5013 | 4432 | 5016 | 4455 | 4987 | 4416 | 4961 | 4389 |
| 14 | 4914 | 4378 | 4922 | 4338 | 4906 | 4356 | 4895 | 4299 |
| 16 | 4863 | 4317 | 4842 | 4307 | 4816 | 4279 | 4806 | 4314 |
| 18 | 4762 | 4301 | 4740 | 4255 | 4754 | 4230 | 4751 | 4287 |
| 20 | 4714 | 4251 | 4734 | 4231 | 4693 | 4214 | 4703 | 4268 |
| 24 | 4676 | 4242 | 4610 | 4298 | 4663 | 4226 | 4638 | 4250 |
| 30 | 4653 | 4351 | 4662 | 4307 | 4633 | 4311 | 4643 | 4286 |
| 40 | 4713 | 4434 | 4724 | 4426 | 4760 | 4439 | 4768 | 4388 |
| 50 | 4763 | 4502 | 4795 | 4534 | 4792 | 4487 | 4799 | 4479 |

Table 4: Letter4k. Upper table: The test mis-classification errors of mart and abc-mart. Bottom table: The test errors of logitboost and abc-logitboost.

| J | ν=0.04 mart | ν=0.04 abc-mart | ν=0.06 mart | ν=0.06 abc-mart | ν=0.08 mart | ν=0.08 abc-mart | ν=0.1 mart | ν=0.1 abc-mart |
|---|---|---|---|---|---|---|---|---|
| 4 | 1681 | 1415 | 1660 | 1380 | 1671 | 1368 | 1655 | 1323 |
| 6 | 1618 | 1320 | 1584 | 1288 | 1588 | 1266 | 1577 | 1240 |
| 8 | 1531 | 1266 | 1522 | 1246 | 1516 | 1192 | 1521 | 1184 |
| 10 | 1499 | 1228 | 1463 | 1208 | 1479 | 1186 | 1470 | 1185 |
| 12 | 1420 | 1213 | 1434 | 1186 | 1409 | 1170 | 1437 | 1162 |
| 14 | 1410 | 1190 | 1388 | 1156 | 1377 | 1151 | 1396 | 1160 |
| 16 | 1395 | 1167 | 1402 | 1156 | 1396 | 1157 | 1387 | 1146 |
| 18 | 1376 | 1164 | 1375 | 1139 | 1357 | 1127 | 1352 | 1152 |
| 20 | 1386 | 1154 | 1397 | 1130 | 1371 | 1131 | 1370 | 1149 |
| 24 | 1371 | 1148 | 1348 | 1155 | 1374 | 1164 | 1391 | 1150 |
| 30 | 1383 | 1174 | 1406 | 1174 | 1401 | 1177 | 1404 | 1209 |
| 40 | 1458 | 1211 | 1455 | 1224 | 1441 | 1233 | 1454 | 1215 |
| 50 | 1484 | 1203 | 1517 | 1233 | 1487 | 1248 | 1522 | 1250 |

| J | ν=0.04 logitboost | ν=0.04 abc-logit | ν=0.06 logitboost | ν=0.06 abc-logit | ν=0.08 logitboost | ν=0.08 abc-logit | ν=0.1 logitboost | ν=0.1 abc-logit |
|---|---|---|---|---|---|---|---|---|
| 4 | 1460 | 1296 | 1471 | 1241 | 1452 | 1202 | 1446 | 1208 |
| 6 | 1390 | 1143 | 1394 | 1117 | 1382 | 1090 | 1374 | 1074 |
| 8 | 1336 | 1089 | 1332 | 1080 | 1311 | 1066 | 1297 | 1046 |
| 10 | 1289 | 1062 | 1285 | 1067 | 1380 | 1034 | 1273 | 1049 |
| 12 | 1251 | 1058 | 1247 | 1069 | 1261 | 1044 | 1243 | 1051 |
| 14 | 1247 | 1063 | 1233 | 1051 | 1251 | 1040 | 1244 | 1066 |
| 16 | 1244 | 1074 | 1227 | 1068 | 1231 | 1047 | 1228 | 1046 |
| 18 | 1243 | 1059 | 1250 | 1040 | 1234 | 1052 | 1220 | 1057 |
| 20 | 1226 | 1084 | 1242 | 1070 | 1242 | 1058 | 1235 | 1055 |
| 24 | 1245 | 1079 | 1234 | 1059 | 1235 | 1058 | 1215 | 1073 |
| 30 | 1232 | 1057 | 1247 | 1085 | 1229 | 1069 | 1230 | 1065 |
| 40 | 1246 | 1095 | 1255 | 1093 | 1230 | 1094 | 1231 | 1087 |
| 50 | 1248 | 1100 | 1230 | 1108 | 1233 | 1120 | 1246 | 1136 |

Table 5: Letter2k. Upper table: The test mis-classification errors of mart and abc-mart. Bottom table: The test errors of logitboost and abc-logitboost.

| J | ν=0.04 mart | ν=0.04 abc-mart | ν=0.06 mart | ν=0.06 abc-mart | ν=0.08 mart | ν=0.08 abc-mart | ν=0.1 mart | ν=0.1 abc-mart |
|---|---|---|---|---|---|---|---|---|
| 4 | 2694 | 2512 | 2698 | 2470 | 2684 | 2419 | 2689 | 2435 |
| 6 | 2683 | 2360 | 2664 | 2321 | 2640 | 2313 | 2629 | 2321 |
| 8 | 2569 | 2279 | 2603 | 2289 | 2563 | 2259 | 2571 | 2251 |
| 10 | 2534 | 2242 | 2516 | 2215 | 2504 | 2210 | 2491 | 2185 |
| 12 | 2503 | 2202 | 2516 | 2215 | 2473 | 2198 | 2492 | 2201 |
| 14 | 2488 | 2203 | 2467 | 2231 | 2460 | 2204 | 2460 | 2183 |
| 16 | 2503 | 2219 | 2501 | 2219 | 2496 | 2235 | 2500 | 2205 |
| 18 | 2494 | 2225 | 2497 | 2212 | 2472 | 2205 | 2439 | 2213 |
| 20 | 2499 | 2199 | 2512 | 2198 | 2504 | 2188 | 2482 | 2220 |
| 24 | 2549 | 2200 | 2549 | 2191 | 2526 | 2218 | 2538 | 2248 |
| 30 | 2579 | 2237 | 2566 | 2232 | 2574 | 2244 | 2574 | 2285 |
| 40 | 2641 | 2303 | 2632 | 2304 | 2606 | 2271 | 2667 | 2351 |
| 50 | 2668 | 2382 | 2670 | 2362 | 2638 | 2413 | 2717 | 2367 |

| J | ν=0.04 logitboost | ν=0.04 abc-logit | ν=0.06 logitboost | ν=0.06 abc-logit | ν=0.08 logitboost | ν=0.08 abc-logit | ν=0.1 logitboost | ν=0.1 abc-logit |
|---|---|---|---|---|---|---|---|---|
| 4 | 2629 | 2347 | 2582 | 2299 | 2580 | 2256 | 2572 | 2231 |
| 6 | 2427 | 2136 | 2450 | 2120 | 2428 | 2072 | 2429 | 2077 |
| 8 | 2336 | 2080 | 2321 | 2049 | 2326 | 2035 | 2313 | 2037 |
| 10 | 2316 | 2044 | 2306 | 2003 | 2314 | 2021 | 2307 | 2002 |
| 12 | 2315 | 2024 | 2315 | 1992 | 2333 | 2018 | 2290 | 2018 |
| 14 | 2317 | 2022 | 2305 | 2004 | 2315 | 2006 | 2292 | 2030 |
| 16 | 2302 | 2024 | 2299 | 2004 | 2286 | 2005 | 2262 | 1999 |
| 18 | 2298 | 2044 | 2277 | 2021 | 2301 | 1991 | 2282 | 2034 |
| 20 | 2280 | 2049 | 2268 | 2021 | 2294 | 2024 | 2309 | 2034 |
| 24 | 2299 | 2060 | 2326 | 2037 | 2285 | 2021 | 2267 | 2047 |
| 30 | 2318 | 2078 | 2326 | 2057 | 2304 | 2041 | 2274 | 2045 |
| 40 | 2281 | 2121 | 2267 | 2079 | 2294 | 2090 | 2291 | 2110 |
| 50 | 2247 | 2174 | 2299 | 2155 | 2267 | 2133 | 2278 | 2150 |

-

Figure 1: Mnist10k. Test mis-classification errors of four boosting algorithms (mart, abc-mart, logitboost, abc-logitboost), for shrinkage ν = 0.04 (left), 0.06 (middle), 0.1 (right), and selected J terminal nodes (J = 4, 6, 8, 10, 12, 14, 16, 24, 30, 40, 50). Each panel plots the test mis-classification errors (vertical axis) against the boosting iterations (horizontal axis).

4.4 Experiment Results on Poker25kT1, Poker25kT2

Recall, to provide a reliable comparison (and validation), we form two datasets Poker25kT1 and Poker25kT2 by equally dividing the original test set (1000000 samples) into two parts (I and II). Both use the same training set. Poker25kT1 uses Part I of the original test set for testing and Poker25kT2 uses Part II for testing.

Table 6 and Table 7 present the test mis-classification errors, for $J \in \{4, 6, 8, 10, 12, 14, 16, 18, 20\}$, $\nu \in \{0.04, 0.06, 0.08, 0.1\}$, and $M = 10000$ boosting iterations (the machine accuracy is not reached). Comparing these two tables, we can see the corresponding entries are very close to each other, which again verifies that the four boosting algorithms provide reliable results on this dataset. Unlike Mnist10k, the test errors, especially using mart and logitboost, are slightly sensitive to the parameter $J$.

Table 6: Poker25kT1. Upper table: The test mis-classification errors of mart and abc-mart. Bottom table: The test errors of logitboost and abc-logitboost.

| J | ν=0.04 mart | ν=0.04 abc-mart | ν=0.06 mart | ν=0.06 abc-mart | ν=0.08 mart | ν=0.08 abc-mart | ν=0.1 mart | ν=0.1 abc-mart |
|---|---|---|---|---|---|---|---|---|
| 4 | 145880 | 90323 | 132526 | 67417 | 124283 | 49403 | 113985 | 42126 |
| 6 | 71628 | 38017 | 59046 | 36839 | 48064 | 35467 | 43573 | 34879 |
| 8 | 64090 | 39220 | 53400 | 37112 | 47360 | 36407 | 44131 | 35777 |
| 10 | 60456 | 39661 | 52464 | 38547 | 47203 | 36990 | 46351 | 36647 |
| 12 | 61452 | 41362 | 52697 | 39221 | 46822 | 37723 | 46965 | 37345 |
| 14 | 58348 | 42764 | 56047 | 40993 | 50476 | 40155 | 47935 | 37780 |
| 16 | 63518 | 44386 | 55418 | 43360 | 50612 | 41952 | 49179 | 40050 |
| 18 | 64426 | 46463 | 55708 | 45607 | 54033 | 45838 | 52113 | 43040 |
| 20 | 65528 | 49577 | 59236 | 47901 | 56384 | 45725 | 53506 | 44295 |

| J | ν=0.04 logitboost | ν=0.04 abc-logit | ν=0.06 logitboost | ν=0.06 abc-logit | ν=0.08 logitboost | ν=0.08 abc-logit | ν=0.1 logitboost | ν=0.1 abc-logit |
|---|---|---|---|---|---|---|---|---|
| 4 | 147064 | 102905 | 140068 | 71450 | 128161 | 51226 | 117085 | 42140 |
| 6 | 81566 | 43156 | 59324 | 39164 | 51526 | 37954 | 48516 | 37546 |
| 8 | 68278 | 46076 | 56922 | 40162 | 52532 | 38422 | 46789 | 37345 |
| 10 | 63796 | 44830 | 55834 | 40754 | 53262 | 40486 | 47118 | 38141 |
| 12 | 66732 | 48412 | 56867 | 44886 | 51248 | 42100 | 47485 | 39798 |
| 14 | 64263 | 52479 | 55614 | 48093 | 51735 | 44688 | 47806 | 43048 |
| 16 | 67092 | 53363 | 58019 | 51308 | 53746 | 47831 | 51267 | 46968 |
| 18 | 69104 | 57147 | 56514 | 55468 | 55290 | 50292 | 51871 | 47986 |
| 20 | 68899 | 62345 | 61314 | 57677 | 56648 | 53696 | 51608 | 49864 |

Table 7: Poker25kT2. The test mis-classification errors.

mart abc-martν = 0.04 ν = 0.06 ν = 0.08 ν = 0.1

J = 4 144020 89608 131243 67071 123031 48855 113232 41688J = 6 71004 37567 58487 36345 47564 34920 42935 34326J = 8 63452 38703 52990 36586 46914 35836 43647 35129J = 10 60061 39078 52125 38025 46912 36455 45863 36076J = 12 61098 40834 52296 38657 46458 37203 46698 36781J = 14 57924 42348 55622 40363 50243 39613 47619 37243J = 16 63213 44067 55206 42973 50322 41485 48966 39446J = 18 64056 46050 55461 45133 53652 45308 51870 42485J = 20 65215 49046 58911 47430 56009 45390 53213 43888

        ν = 0.04                ν = 0.06                ν = 0.08                ν = 0.1
        logitboost  abc-logit   logitboost  abc-logit   logitboost  abc-logit   logitboost  abc-logit
J = 4       145368     102014       138734      70886       126980      50783       116346      41551
J = 6        80782      42699        58769      38592        51202      37397        48199      36914
J = 8        68065      45737        56678      39648        52504      37935        46600      36731
J = 10       63153      44517        55419      40286        52835      40044        46913      37504
J = 12       66240      47948        56619      44602        50918      41582        47128      39378
J = 14       63763      52063        55238      47642        51526      44296        47545      42720
J = 16       66543      52937        57473      50842        53287      47578        51106      46635
J = 18       68477      56803        57070      55166        54954      49956        51603      47707
J = 20       68311      61980        61047      57383        56474      53364        51242      49506

4.5 Summary of Test Mis-classification Errors

Table 8 summarizes the test mis-classification errors. Since the test errors are not too sensitive to the parameters, for all datasets except Poker25kT1 and Poker25kT2, we simply report the test errors with tree size J = 20 and shrinkage ν = 0.1. (More tuning will possibly improve the results.)

For Poker25kT1 and Poker25kT2, as we notice the performance is somewhat sensitive to the parameters, we use each other's test set as the validation set to report the test errors.
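One possible reading of this cross-validation step is sketched below: choose the (J, ν) pair with the fewest errors on the other dataset's test set, then report the error at that pair on the dataset's own test set. The function name `select_and_report` is hypothetical, and the dictionaries hold only an excerpt of the mart entries from Tables 6 and 7 (J ∈ {4, 6, 8, 10}, ν ∈ {0.08, 0.1}); the text does not spell out the exact procedure, so this is an illustration under that assumption.

```python
# Excerpt of the mart test errors from Table 6 (Poker25kT1) and
# Table 7 (Poker25kT2), keyed by (J, nu). Only part of the grid is copied.
mart_t1 = {
    (4, 0.08): 124283, (6, 0.08): 48064, (8, 0.08): 47360, (10, 0.08): 47203,
    (4, 0.1): 113985, (6, 0.1): 43573, (8, 0.1): 44131, (10, 0.1): 46351,
}
mart_t2 = {
    (4, 0.08): 123031, (6, 0.08): 47564, (8, 0.08): 46914, (10, 0.08): 46912,
    (4, 0.1): 113232, (6, 0.1): 42935, (8, 0.1): 43647, (10, 0.1): 45863,
}


def select_and_report(validation_errors, test_errors):
    """Pick the (J, nu) with the fewest validation errors and return it
    together with the test error at that same setting (hypothetical helper)."""
    best = min(validation_errors, key=validation_errors.get)
    return best, test_errors[best]


# Poker25kT1: tune on the T2 test set, report on the T1 test set.
print(select_and_report(mart_t2, mart_t1))
# Poker25kT2: tune on the T1 test set, report on the T2 test set.
print(select_and_report(mart_t1, mart_t2))
```

On this excerpt both selections land on J = 6, ν = 0.1, and the reported values agree with the mart entries for Poker25kT1 and Poker25kT2 in Table 8.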

For Covertype290k, Poker525k, Poker275k, Poker150k, and Poker100k, as they are fairly large, we only train M = 5000 boosting iterations. For all other datasets, we always train M = 10000 iterations or terminate when the training loss (3) is close to the machine accuracy. Since we do not notice obvious over-fitting on these datasets, we simply report the test errors at the last iterations.

Table 8 also includes the results of regular logistic regression. It is interesting that the test errors are all the same (248892) for Poker525k, Poker275k, Poker150k, and Poker100k (but the predicted probabilities are different).

Table 8: Summary of test mis-classification errors.

Dataset            mart  abc-mart  logitboost  abc-logitboost  logistic regression
Covertype290k     11350     10454       10765            9727                80233
Covertype145k     15767     14665       14928           13986                80314
Poker525k          7061      2424        2704            1736               248892
Poker275k         15404      3679        6533            2727               248892
Poker150k         22289     12340       16163            5104               248892
Poker100k         27871     21293       25715           13707               248892
Poker25kT1        43573     34879       46789           37345               250110
Poker25kT2        42935     34326       46600           36731               249056
Mnist10k           2815      2440        2381            2102                13950
M-Basic            2058      1843        1723            1602                10993
M-Rotate           7674      6634        6813            5959                26584
M-Image            5821      4727        4703            4268                19353
M-Rand             6577      5300        5020            4725                18189
M-RotImg          24912     23072       22962           22343                33216
M-Noise1            305       245         267             234                  935
M-Noise2            325       262         270             237                  940
M-Noise3            310       264         277             238                  954
M-Noise4            308       243         256             238                  933
M-Noise5            294       249         242             227                  867
M-Noise6            279       224         226             201                  788
Letter15k           155       125         139             109                 1130
Letter4k           1370      1149        1252            1055                 3712
Letter2k           2482      2220        2309            2034                 4381

P-values. Table 9 summarizes four types of P-values:

• P1: for testing if abc-mart has significantly lower error rates than mart.

• P2: for testing if (robust) logitboost has significantly lower error rates than mart.

• P3: for testing if abc-logitboost has significantly lower error rates than abc-mart.

• P4: for testing if abc-logitboost has significantly lower error rates than (robust) logitboost.

The P-values are computed using binomial distributions and normal approximations. Recall, if a random variable z ∼ Binomial(N, p), then the probability p can be estimated by p̂ = z/N, and the variance of p̂ by p̂(1 − p̂)/N.
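As a rough illustration of how such a P-value could be obtained, the sketch below treats the two error counts as independent binomials on a test set of size N and applies a one-sided normal approximation to the difference of the estimated error rates. The text only says "binomial distributions and normal approximations", so the specific test (one-sided, unpooled variance, independent samples) and the function name `one_sided_p_value` are assumptions. The test-set size is also an assumption, since it is not stated in this section.

```python
import math


def one_sided_p_value(errors_a, errors_b, n_test):
    """P-value for 'method B has a lower error rate than method A'.

    Assumed procedure: independent binomial error counts, normal
    approximation, unpooled variance p(1 - p)/N for each estimated rate.
    """
    p_a = errors_a / n_test
    p_b = errors_b / n_test
    variance = p_a * (1.0 - p_a) / n_test + p_b * (1.0 - p_b) / n_test
    if variance == 0.0:
        raise ValueError("variance is zero; the normal approximation is undefined")
    z = (p_a - p_b) / math.sqrt(variance)
    # Upper tail of the standard normal: 1 - Phi(z).
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Letter15k counts from Table 8 (mart = 155, abc-mart = 125).
# The test-set size of 5000 is an assumption, not given in this section.
print(one_sided_p_value(155, 125, 5000))
```

Under the assumed test-set size, the Letter15k call gives a value close to the 0.0345 listed for P1 in Table 9, which suggests this reading is at least compatible with the reported numbers.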

Note that the test sets for M-Noise1 to M-Noise6 are very small as [10] did not intend to evaluate the statistical significance on those six datasets. (Private communications.)

Table 9: Summary of test P-values.

Dataset        P1          P2          P3          P4
Covertype290k  3×10^-10    3×10^-5     9×10^-8     8×10^-14
Covertype145k  4×10^-11    4×10^-7     2×10^-5     7×10^-9
Poker525k      0           0           0           0
Poker275k      0           0           0           0
Poker150k      0           0           0           0
Poker100k      0           0           0           0
Poker25kT1     0           --          --          0
Poker25kT2     0           --          --          0
Mnist10k       5×10^-8     3×10^-10    1×10^-7     1×10^-5
M-Basic        2×10^-4     1×10^-8     1×10^-5     0.0164
M-Rotate       0           5×10^-15    6×10^-11    3×10^-16
M-Image        0           0           2×10^-7     7×10^-7
M-Rand         0           0           7×10^-10    8×10^-4
M-RotImg       0           0           2×10^-6     4×10^-5
M-Noise1       0.0029      0.0430      0.2961      0.0574
M-Noise2       0.0024      0.0072      0.1158      0.0583
M-Noise3       0.0190      0.0701      0.1073      0.0327
M-Noise4       0.0014      0.0090      0.4040      0.1935
M-Noise5       0.0188      0.0079      0.1413      0.2305
M-Noise6       0.0043      0.0058      0.1189      0.1002
Letter15k      0.0345      0.1718      0.1449      0.0268
Letter4k       2×10^-6     0.008       0.019       1×10^-5
Letter2k       2×10^-5     0.003       0.001       4×10^-6

These results demonstrate that abc-logitboost and abc-mart outperform logitboost and mart, respectively. In addition, except for Poker25kT1 and Poker25kT2, abc-logitboost outperforms abc-mart and logitboost outperforms mart.

App. B provides more detailed P-values for Mnist10k and M-Image, to demonstrate that the improvements hold for a wide range of parameters (J and ν).

4.6 Comparisons with SVM and Deep Learning

For the Poker dataset, SVM could only achieve a test error rate of about 40% (Private communications with C.J. Lin). In comparison, all four algorithms, mart, abc-mart, (robust) logitboost, and abc-logitboost, could achieve much smaller error rates (i.e., < 10%) on Poker25kT1 and Poker25kT2.

Fig. 2 provides the comparisons on the six (correlated) noise datasets: M-Noise1 to M-Noise6, with SVM and deep learning based on the results in [10].

[Figure 2 plots. Left panel curves: SVM rbf, SAA-3, DBN-3. Right panel curves: mart, abc-mart, logit, abc-logit. X-axis: degree of correlation (1 to 6). Y-axis: error rate (%).]

Figure 2: Six datasets: M-Noise1 to M-Noise6. Left panel: Error rates of SVM and deep learning [10]. Right panel: Error rates of four boosting algorithms. X-axis: degree of correlation from high to low; the values 1 to 6 correspond to the datasets M-Noise1 to M-Noise6.

Table 10: Summary of test error rates of various algorithms on the modified Mnist dataset [10].

                 M-Basic  M-Rotate   M-Image    M-Rand  M-RotImg
SVM-RBF            3.05%    11.11%    22.61%    14.58%    55.18%
SVM-POLY           3.69%    15.42%    24.01%    16.62%    56.41%
NNET               4.69%    18.11%    27.41%    20.04%    62.16%
DBN-3              3.11%    10.30%    16.31%     6.73%    47.39%
SAA-3              3.46%    10.30%    23.00%    11.28%    51.93%
DBN-1              3.94%    14.69%    16.15%     9.80%    52.21%

mart               4.12%    15.35%    11.64%    13.15%    49.82%
abc-mart           3.69%    13.27%     9.45%    10.60%    46.14%
logitboost         3.45%    13.63%     9.41%    10.04%    45.92%
abc-logitboost     3.20%    11.92%     8.54%     9.45%    44.69%

Table 10 compares the error rates on M-Basic, M-Rotate, M-Image, M-Rand, and M-RotImg, with the results in [10].
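The percentages in Table 10 for the four boosting algorithms correspond to the error counts in Table 8 divided by the test-set size. The short sketch below shows that conversion for the mart row. The test-set size of 50,000 is an assumption (it is not stated in this section; it is simply the size that reproduces the Table 10 percentages), and the helper name `error_rate_percent` is hypothetical.

```python
# Assumed test-set size for the modified Mnist datasets; not stated in
# this section, chosen because it reproduces the Table 10 percentages.
ASSUMED_TEST_SIZE = 50_000

# mart error counts copied from Table 8.
mart_errors = {
    "M-Basic": 2058,
    "M-Rotate": 7674,
    "M-Image": 5821,
    "M-Rand": 6577,
    "M-RotImg": 24912,
}


def error_rate_percent(errors, n_test=ASSUMED_TEST_SIZE):
    """Convert an error count into a percentage rounded to two decimals."""
    return round(100.0 * errors / n_test, 2)


for dataset, errors in mart_errors.items():
    print(f"{dataset}: {error_rate_percent(errors):.2f}%")
```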

Fig. 2 and Table 10 illustrate that deep learning algorithms could produce excellent test results on certain datasets (e.g., M-Rand and M-Noise6). This suggests that there is still sufficient room for improvements in future research.

4.7 Test Errors versus Boosting Iterations

Again, we believe the plots for test errors versus boosting iterations could be more reliable than a single number, for comparing boosting algorithms.