A vision-based positioning system with inverse dead-zone control for dual-hydraulic manipulators

C. West, S. D. Monk, A. Montazeri and C. J. Taylor
Engineering Department, Lancaster University, UK

Email: [email protected]

Abstract: The robotic platform in this study is being used for research into assisted tele-operation for common nuclear decommissioning tasks, such as remote pipe cutting. It has dual, seven-function, hydraulically actuated manipulators mounted on a mobile base unit. For the new visual servoing system, the user selects an object from an on-screen image, whilst the computer control system determines the required position and orientation of the manipulators, and controls the joint angles for one of these to grasp the pipe and the second to position for a cut. Preliminary testing shows that the new system reduces task completion time for both inexperienced and experienced operators, in comparison to tele-operation. In a second contribution, a novel state-dependent parameter (SDP) control system is developed, for improved resolved motion of the manipulators. In contrast to earlier SDP analysis of the same device, which used a rather ad hoc scaling method to address the dead-zone, a state-dependent gain is used here to implement inverse dead-zone control. The new approach integrates input signal calibration, system identification and nonlinear control design, allowing for straightforward recalibration when the dynamic characteristics have changed or the actuators have deteriorated due to age.

I. INTRODUCTION

The need for nuclear decommissioning is increasing globally. Following the Fukushima disaster in 2011, Japan is closing most of its 54 reactors. In Europe, more than 50 reactors are to be closed in the next 10 years. Nuclear installations have materials that present various radioactive and chemical hazards; their architecture is often complex; and they were not necessarily designed with the decommissioning problem in mind. Hence, improved robotic operations are essential for safety and efficiency, notably for access to areas of high radiation where it is dangerous for human workers.

However, full autonomy of robotic systems is undesirable due to the high-risk environment. Increased automation yields complex interactions with the user and a reduction in situational awareness [1]. In fact, most robotic systems in the nuclear sector are directly teleoperated, as was originally the case for the robotic platform considered in the present article. Here, two seven-function, hydraulically actuated manipulators have been mounted on a mobile BROKK base unit [2].

Achieving accurate movement of such manipulators is a challenge, even following lengthy training and practice. As a result, many methods other than joystick control exist in the literature. These include master-slave systems [3] and devices that track user arm movement [4]. Various graphical interfaces are also possible, such as systems in which an operator uses a mouse to control a 6 degree-of-freedom (DOF) ring and arrow marker to set end-effector position and orientation on screen [5]. Such systems are only possible when the work environment is already well-known, or where multiple fixed sensors can combine to give detailed scene information.

Control methods that add a level of automation above direct teleoperation are often termed assisted teleoperation [6]. However, as the level of assistance increases, the requirement for scene information is also increased. Multiple stereo or 3D cameras are often used, placed in different fixed positions to observe the work environment from different angles. Unfortunately, a multiple camera approach is not always practical in a nuclear decommissioning context. In a nuclear environment, electronics are easily damaged by radiation, hence it is preferable to reduce the number of sensors and other electronics, creating restrictions that need to be considered when developing assisted teleoperation [7]. The fact that the present BROKK system is necessarily mobile (via caterpillar tracks) adds further constraints to the available data.

Although prevalent in many other industries, vision systems are rare in the nuclear industry, except for those used simply to allow for remote teleoperation. In one recent example, a monocular vision system is used to control a manipulator to fasten bolts onto a sealing plate for a steam generator [8]. The robot operates by having a camera locate bolts around the plate and subsequently moving to fasten these to a set torque value. However, the system is limited in that it only looks for hexagonal bolts on a specially designed, bespoke plate.

Hence, the aim of the present research is to reduce the operator’s workload, speeding up task execution and reducing operator-training time, whilst minimizing the introduction of additional sensors and other components. As pointed out by Katz et al. [9], the challenges associated with unstructured environments are a consequence of the high dimension of the problem, including robotic perception of the environment, motion planning and manipulation, and human-robot interactions. Due to limited sensor data availability, a system that can grasp generic objects, for example, could be unreliable. As a result, the developed visual servoing approach is based on the concept of multiple subsystems for common tasks under one user interface: one subsystem for pipe cutting, one for pick and place operations, and so on. This approach reduces the complexity of the problem, potentially leading to improved performance and reliability. Furthermore, cognitive workload is generally reduced by tailoring the information shown to the operator to one particular (decommissioning) task at a time.

This article focuses on pipe cutting as an illustration of the generic approach, since this is a common repetitive task in nuclear decommissioning. The user selects the object to be cut from an on-screen image with a mouse click, whilst the computer control system determines the required position and orientation of the manipulators in 3D space, and calculates the necessary joint angles, i.e. for one manipulator to grasp and stabilise the selected object and the other to position for a cutting operation. The approach is similar to Kent et al. [10], who use a single manipulator with two 3D cameras. By contrast, we have dual manipulators on a mobile platform, and use a single camera. Marturi et al. [7] discuss some of the challenges involved in a nuclear context, and the results of a related pilot study. In all these cases, it is clear that, to improve task execution speed and accuracy, high performance control of nonlinear manipulator dynamics is required.

In this regard, conventional identification methods for robotic systems include, for example, maximum likelihood [11], Kalman filtering [12] and inverse dynamic identification model with least squares (IDIM-LS) [13]. Refined Instrumental Variable (RIV) algorithms [14] are also used, sometimes in combination with State-Dependent Parameter (SDP) [2] and inverse dynamic models [15]. SDP models are estimated from data within a stochastic state-space framework and take a similar structural form to linear parameter-varying systems [16]. The parameters of SDP models are functionally dependent on measured variables, such as joint angles and velocities in the case of manipulators. Such models have been successfully used for control of a KOMATSU hydraulic excavator [17], while [18] further demonstrates the advantages of the SDP approach, in comparison to IDIM-LS methods.

In comparison to a typical machine driven by electric motors, hydraulic actuators generally have higher loop gains and lightly-damped, nonlinear dynamics [19]. The manipulators used in the present research have been represented using physically-based equations [20]. However, the present article instead focuses on the identification of a relatively straightforward SDP model. In contrast to earlier SDP control of the same device [2], a new model structure is identified, one that provides estimates of the dead-zone and angular velocity saturation, in a similar manner to the friction analysis of Janot et al. [18]. This model facilitates use of an Inverse Dead-Zone (IDZ) [21] controller, which is combined with conventional Proportional-Integral-Plus (PIP) methods [22]. In contrast to reference [23], the present work considers resolved motion, within the context of the vision system.

Section II briefly introduces the robotic platform, section III describes the vision system and section IV the SDP control methodology. This is followed by the experimental results and conclusions in sections V and VI respectively.

II. ROBOTIC PLATFORM

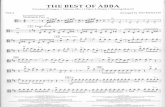

Fig. 1. BROKK–HYDROLEK (further images: https://tinyurl.com/yd3bvslo).

The BROKK–40 consists of a moving vehicle, hydraulic tank, remote control system and manipulator. The latter is linked via a bespoke back-plate to two HYDROLEK–7W manipulators (Fig. 1). The unit is electrically powered, with an on-board hydraulic pump to power the caterpillar tracks and, by means of hydraulic pistons, the manipulators. Each manipulator has 6 DOF with a continuous jaw rotation mechanism. The end-effectors can be equipped with a variety of tools, such as hydraulic crushing jaws. The manipulator joints are fitted with potentiometer feedback sensors, allowing the position of the end-effector to be determined during operation [2].

An input device, such as a joystick, is connected to a PC running a graphical user interface (GUI) developed by the authors with National Instruments (NI) Labview software. The PC transmits information to a NI Compact Fieldpoint Real-Time controller (CFP) via an Ethernet connection. The platform has some similarity to the Hitachi system [24]. The Hitachi consists of two hydraulic manipulators on a tracked vehicle. However, it is teleoperated with a rather complex user interface. This complexity arises from the many cameras and sensors on the Hitachi, as well as from the teleoperated control system. By contrast, the assisted teleoperation system developed below is designed to keep the user engaged and in control at all times, whilst being as straightforward as possible.

III. VISION SYSTEM

The vision system is largely based on off-the-shelf components and image processing algorithms that have been integrated and adapted for this application [25]. A Microsoft Kinect is mounted between the two manipulators, giving a view of the workspace directly to the front. Although originally developed for gaming applications, the Kinect is now widely used for robotics research. It couples a standard camera with a structured light depth sensor, allowing both colour and depth data to be used and combined. Placing the camera between the manipulators provides an intuitive system for the human operator. A live video stream is displayed on the GUI as the mobile base unit is positioned and stabilised. The colour and depth images are aligned and converted to grayscale. To reduce the size of the image and computational complexity, as well as to only present useful information to the operator, all areas that are either out of reach of the system or that have no depth data available are removed. This leaves the image with only the reachable objects (Fig. 2).

Fig. 2. Preliminary image processing: (a) original and (b) simplified image.

A. Edge Detection

Three copies of the image are created, each with a different contrast level, adjusted via the GUI. Two copies are set using sliders and the third is adjusted to a pre-set mid value. These three images, together with the original, are forwarded to the edge detection algorithm. Since the working environment is likely to be poorly lit, this approach lessens the impact of shadows and highlights, and is found to capture more detail in practice (Fig. 3). The four images are passed through a Canny edge detection algorithm [26] in MATLAB. The Canny algorithm has been shown to perform better than other edge detection algorithms in most situations, albeit at a relatively high computational cost [27]. Reliable detection of solid edges is a key requirement here. A third slider on the GUI determines the sensitivity threshold, allowing for adjustments to which edges are detected. The images are combined and the edges dilated to improve clarity. The three sliders on the GUI update the image in real-time, hence allowing the operator to make adjustments, potentially removing superfluous edges or filling in missing edges, without having to reposition the system.
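The final combine-and-dilate step can be illustrated with a minimal Python sketch. It is not the authors' MATLAB implementation: it assumes the four Canny edge maps have already been computed as equally sized binary arrays, combines them with a pixel-wise logical OR, and uses a 3×3 square structuring element for the dilation. Both choices are assumptions, since the text does not state how the images are combined or which structuring element is used.

```python
def combine_edge_maps(edge_maps):
    """Pixel-wise logical OR of equally sized binary edge maps (assumed rule)."""
    rows, cols = len(edge_maps[0]), len(edge_maps[0][0])
    return [[any(m[r][c] for m in edge_maps) for c in range(cols)]
            for r in range(rows)]


def dilate(edges):
    """Binary dilation with a 3x3 square structuring element (assumed size)."""
    rows, cols = len(edges), len(edges[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if edges[r][c]:
                # Switch on every in-bounds neighbour of an edge pixel.
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < rows and 0 <= cc < cols:
                            out[rr][cc] = True
    return out


# Toy 4x5 edge maps standing in for two of the Canny outputs (hypothetical data).
low_contrast = [[0, 0, 0, 0, 0],
                [0, 1, 0, 0, 0],
                [0, 0, 0, 0, 0],
                [0, 0, 0, 0, 0]]
high_contrast = [[0, 0, 0, 0, 0],
                 [0, 0, 0, 0, 0],
                 [0, 0, 0, 1, 0],
                 [0, 0, 0, 0, 0]]

combined = combine_edge_maps([low_contrast, high_contrast])
thick = dilate(combined)
```

An edge found at only one contrast level survives the OR, which is consistent with the stated aim of capturing more detail under poor lighting.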

B. Object Selection

An object is selected with a mouse. A colour screengrab with the selected item highlighted is shown, providing visual confirmation. In the case of a pipe, the user proceeds to click on the point they determine the manipulator should grasp, and then the location of the cutting operation. At this stage, the selected positions for grasp and cut are snapped to the major axis of the object, i.e. the centre line of the pipe, determined using the MATLAB Image Processing Toolbox. The cutter path is perpendicular to the major axis, and also takes a pre-set distance from the centreline. Hence, any orientation of pipe can be addressed and, since depth data are available, the pipe does not even have to be in one plane. To reduce the possibility of collisions, and to help position the gripper in the target orientation, the end-effector is first moved to a position in front of the target; once it has reached a set error tolerance for that position, it is moved to the final grasp position. Although more sophisticated collision avoidance algorithms are available in the literature, this pragmatic approach ensures that the end-effector always moves directly forward onto the pipe to grasp it, and is found to work very well in practice. Of course, the operator retains the option to immediately return to full tele-operation when the unexpected arises.
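The snapping of a clicked point to the major axis amounts to an orthogonal projection onto a line. The Python sketch below illustrates this under the assumption that the object is summarised by a centroid and an orientation angle in image coordinates. The function names, the example values and the angle convention are all hypothetical, not taken from the authors' code.

```python
import math


def snap_to_axis(point, centroid, angle_rad):
    """Orthogonally project `point` onto the line through `centroid`
    whose direction is given by `angle_rad` (the assumed major axis)."""
    ux, uy = math.cos(angle_rad), math.sin(angle_rad)
    # Signed distance of the click along the axis direction.
    t = (point[0] - centroid[0]) * ux + (point[1] - centroid[1]) * uy
    return (centroid[0] + t * ux, centroid[1] + t * uy)


def cut_direction(angle_rad):
    """Unit vector perpendicular to the major axis (cutter path direction)."""
    return (-math.sin(angle_rad), math.cos(angle_rad))


# Hypothetical pipe: centroid in pixels and a 30 degree orientation.
centroid = (320.0, 240.0)
angle = math.radians(30.0)
click = (400.0, 300.0)            # operator's mouse click (hypothetical)

grasp = snap_to_axis(click, centroid, angle)
normal = cut_direction(angle)
```

The residual vector from the snapped point back to the click is perpendicular to the axis, so a click anywhere across the width of the pipe maps to the same point on the centre line.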

Fig. 3. Edge detection: (a) original and (b) combining four contrasts.

C. Real–Time Implementation

Target locations above refer to pixel coordinates and depth values. However, to be used as Inverse Kinematics (IK) solver arguments, these are converted into the manipulator coordinate system. A trigonometric approach is taken. The number of pixels in the Kinect image is 640×480, while the camera field of view is 57° horizontal and 43° vertical. Using the angle and the depth data, ‘real world’ coordinates relative to the camera origin are determined. For the Kinect, points on a plane that are parallel to the sensor have the same depth value and this defines the trigonometric identity. At this stage, the coordinates are relative to the camera centre. A translation is used to convert these into a usable form [25]. No analytical IK solution exists for this manipulator design, hence the present work utilises the Jacobian transpose to determine target angles [28]. The algorithms behind the vision system are all implemented in MATLAB. However, the interface to the robotic actuators is via NI Labview, with these elements connected via TCP/IP locally. This architecture would allow for the GUI to be implemented on one PC and the Labview manipulator control to be performed on another for remote operation. Finally, the Labview control software moves the joints to the set points provided by MATLAB, as discussed below.
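One way to realise the trigonometric conversion is sketched below in Python. The image size and field of view are taken from the text; everything else is an assumption made for illustration: the optical axis passes through the image centre, depth is measured along that axis, the axes are x right, y down and z forward, and the camera-to-manipulator offset is a hypothetical value.

```python
import math

WIDTH, HEIGHT = 640, 480        # Kinect image size in pixels (from the text)
FOV_H, FOV_V = 57.0, 43.0       # field of view in degrees (from the text)


def pixel_to_camera(u, v, depth_mm):
    """Convert pixel (u, v) plus depth into camera-frame coordinates (mm).

    Assumes depth is measured along the optical axis, so all points on a
    plane parallel to the sensor share the same depth value.
    """
    # Normalised offsets from the image centre, in the range [-1, 1].
    nx = (u - WIDTH / 2.0) / (WIDTH / 2.0)
    ny = (v - HEIGHT / 2.0) / (HEIGHT / 2.0)
    # At the image border the ray makes half the field-of-view angle.
    x = depth_mm * nx * math.tan(math.radians(FOV_H / 2.0))
    y = depth_mm * ny * math.tan(math.radians(FOV_V / 2.0))
    return (x, y, depth_mm)


def camera_to_manipulator(point, offset):
    """Pure translation from the camera frame to a manipulator base frame."""
    return tuple(p + o for p, o in zip(point, offset))


# Hypothetical camera-to-shoulder offset in mm (not a measured value).
CAMERA_OFFSET_MM = (0.0, -150.0, 50.0)

target = camera_to_manipulator(pixel_to_camera(400, 200, 900.0),
                               CAMERA_OFFSET_MM)
```

A pixel at the image centre maps to a point on the optical axis, while a pixel on the right-hand border at 1 m depth lies about 543 mm to the side, i.e. 1000 × tan(28.5°).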

IV. CONTROL SYSTEM

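The SDP model is $y_k = \mathbf{w}_k^T \mathbf{p}_k$, with SDP parameter vector,

$$\mathbf{p}_k = \left[\, a_1\{\chi_k\} \;\cdots\; a_n\{\chi_k\} \;\; b_1\{\chi_k\} \;\cdots\; b_m\{\chi_k\} \,\right]^T$$

and $\mathbf{w}_k^T = \left[\, -y_{k-1} \;\cdots\; -y_{k-n} \;\; v_{k-1} \;\cdots\; v_{k-m} \,\right]$. Here $v_k$ and $y_k$ are the input voltage and joint angle respectively, while $a_i\{\chi_k\}$ ($i = 1, \ldots, n$) and $b_j\{\chi_k\}$ ($j = 1, \ldots, m$) are state dependent parameters, i.e. functions of a non-minimal state vector $\chi_k$. The hydraulic manipulator model is identified in three stages: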

Step 1. Open-loop step experiments using the manipulator suggest that a first order linear difference equation, i.e.,

$$y_k = -a_1 y_{k-1} + b_\tau v_{k-\tau} \qquad (1)$$

provides an approximate representation of individual joints, with the time delay $\tau$ depending on the sampling interval $\Delta t$.

Step 2. The values of $\{a_1, b_\tau\}$ are not repeatable for experiments with different input magnitudes. However, SDP analysis of experimental data suggests that $n = m = 1$, $a_1 = -1$ is time invariant and $b_\tau\{\chi_k\} = b_\tau\{v_{k-\tau}\}$ is a static nonlinear function [23].

Fig. 4. Top left: state-dependent parameter plotted against input magnitude (i.e. steady state voltage to NI-CFP, $v_\infty$), showing RIV estimates $b_\tau$ for individual experiments (circles) and optimised SDP $b_\tau\{\chi_k\}$ (solid). Top right: IDZ approximation, showing angular velocity $q_k$ (°/$\Delta t_c$, $\Delta t_c = 0.05$ s) against input, i.e. $b_\tau\{\chi_k\} \times v_\infty / \Delta t_c$ (thin solid) and linearised model (thick red trace); dead-zone and velocity limits are highlighted (dashed). Lower: illustrative SDP model evaluation, showing $y_k$ (°) against time (s).

Fig. 5. Schematic diagram of the control system, in which C, IDZ, SNL and LD represent the (linear) Controller, Inverse Dead-Zone control element, Static NonLinearity and Linear Dynamics, respectively. The signal path is d → C → u → IDZ → v → SNL → q → LD → y, with SNL and LD together forming the SDP model.

Step 3. Finally, $b_\tau\{v_{k-\tau}\}$ is parameterized, here using exponential functions for the nonlinearity. To illustrate using the right hand side ‘shoulder’ joint, denoted J2, with $\Delta t = 0.01$ s and $\tau = 15$, RIV estimates $b_\tau$ for step input experiments of varying magnitudes are shown in Fig. 4, demonstrating the clear state-dependency on the input. The mechanistic interpretation is straightforward: using equation (1) with $a_1 = -1$ yields $b_\tau v_{k-\tau} = y_k - y_{k-1}$, i.e. $b_\tau v_{k-\tau}$ represents a smoothed estimate of the differenced sampled output and $b_\tau v_{k-\tau}/\Delta t$ provides an estimate of the angular velocity, hence identifying the dead-zone and velocity saturation limits. As a result, denoting $q\{v_k\} = b_\tau\{v_k\} \times v_k$, the SDP model for J2 is,

$$y_k = y_{k-1} + q\{v_{k-\tau}\} \qquad (2)$$

with constraints $y_{\min} < y_k < y_{\max}$, where $y_{\min} = -13.8^\circ$ and $y_{\max} = 63.6^\circ$ are hardware limits and,

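$$q\{v_k\} = \begin{cases} (1-\alpha_1)\, e^{\alpha_2(\alpha_3 - v_k)} & \text{for } v_k < \alpha_3 \\ 0 & \text{for } \alpha_3 \le v_k \le \alpha_6 \\ (1-\alpha_4)\, e^{\alpha_5(v_k - \alpha_6)} & \text{for } v_k > \alpha_6 \end{cases} \qquad (3)$$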

For J2, $\hat{\alpha}_i = [-0.295,\ 1.356,\ -1.330,\ 0.389,\ 1.771,\ 1.312]$. To obtain these estimates, experimental data are compared with the model response and the mean sum of squared output errors is used as the objective function for fminsearch in MATLAB. Here, $(\alpha_2, \alpha_5)$ are curve coefficients; $\alpha_1/\Delta t = -29.5^\circ$/s and $\alpha_4/\Delta t = 38.9^\circ$/s provide minimum and maximum angular velocity saturation limits; and $\alpha_3 = -1.33$ V to $\alpha_6 = 1.31$ V is the dead-zone. These objective estimates compare closely with equivalent values obtained from extensive prior ad hoc experimental work.
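The structure of model (2), an integrator driven by a delayed static nonlinearity and clipped at the hardware limits, can be explored with the short Python sketch below. The joint limits, dead-zone voltages and delay are the J2 values quoted in the text. The nonlinearity `q_stand_in` is a hypothetical piecewise-linear placeholder with an arbitrary slope, used only to show the dead-zone behaviour. It is not the identified exponential function.

```python
def simulate_joint(v, q, tau, y0=0.0, y_min=-13.8, y_max=63.6):
    """Simulate y_k = y_{k-1} + q(v_{k-tau}), clipped to the joint limits.

    v   : list of input voltages, one per sample
    q   : callable giving the angle increment per sample for a voltage
    tau : pure time delay in samples
    """
    y, y_prev = [], y0
    for k in range(len(v)):
        # Before the delay has elapsed the input is taken as zero.
        v_delayed = v[k - tau] if k >= tau else 0.0
        y_k = min(max(y_prev + q(v_delayed), y_min), y_max)
        y.append(y_k)
        y_prev = y_k
    return y


def q_stand_in(v, dz_lo=-1.33, dz_hi=1.312, slope=0.2):
    """Hypothetical stand-in nonlinearity: zero inside the dead-zone and
    linear outside it (the slope of 0.2 deg/sample/V is an assumption)."""
    if v < dz_lo:
        return slope * (v - dz_lo)
    if v > dz_hi:
        return slope * (v - dz_hi)
    return 0.0


inside = simulate_joint([1.0] * 100, q_stand_in, tau=15)    # no motion
moving = simulate_joint([2.0] * 50, q_stand_in, tau=15)     # delayed ramp
limited = simulate_joint([5.0] * 2000, q_stand_in, tau=15)  # hits y_max
```

A 1 V input lies inside the dead-zone and produces no motion, a 2 V input produces a ramp that starts after 15 samples, and a sustained large input is held at the upper joint limit.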

Similar SDP model forms are identified for each joint of both manipulators. In the case of Joints 1–3 (azimuth yaw, shoulder pitch and elbow pitch), these yield satisfactory evaluation results (Fig. 4), including for experiments involving simultaneous movement of all joints. However, the two joints closest to the end-effector (Joints 4–5: forearm roll and wrist pitch) yield inconsistencies in the time-delay and this is the subject of on-going research. For the present article, a slightly different parameterisation is utilised for J4: see [25].

Fig. 5 shows the control system, in which $d_k$ is the desired joint angle, $u_k$ is the PIP control input, $v_k$ is the voltage to the NI-CFP computer, $q_k$ is the angular velocity and $y_k$ is the joint angle. Whilst previous research has utilised the SDP model for nonlinear pole assignment [2], the present article develops a rather simpler IDZ approach. Selecting a control sampling interval $\Delta t_c = 0.05$ s as a compromise between satisfactory reaction times and a relatively low order control system, Fig. 4 shows the linearised relationships for $v_k$ and $q_k$ in the range (i) $v_{\min}$ to $\hat{\alpha}_3$ and (ii) $\hat{\alpha}_6$ to $v_{\max}$ in the negative and positive directions of movement, respectively. For J2, $v_{\min} = -2.2$ and $v_{\max} = 1.75$, selected since they yield an angular velocity ≈ 20°/s in either direction, and it seems unlikely that faster movement would be desirable in practice. This approximation of the SNL element of Fig. 5 is defined as follows (cf. equation (3)),

$$q\{v_k\} = \begin{cases} s_n (v_k - \hat{\alpha}_3) & \text{for } v_k < \hat{\alpha}_3 \\ 0 & \text{for } \hat{\alpha}_3 \le v_k \le \hat{\alpha}_6 \\ s_p (v_k - \hat{\alpha}_6) & \text{for } v_k > \hat{\alpha}_6 \end{cases} \qquad (4)$$

where $s_n = q\{v_{\min}\}/(v_{\min} - \hat{\alpha}_3)$ and $s_p = q\{v_{\max}\}/(v_{\max} - \hat{\alpha}_6)$. Adapting from e.g. [21], the IDZ control element is,

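$$v_k = \begin{cases} u_k/s_n + \hat{\alpha}_3 & \text{for } u_k < -\beta \\ 0 & \text{for } -\beta \le u_k \le \beta \\ u_k/s_p + \hat{\alpha}_6 & \text{for } u_k > \beta \end{cases} \qquad (5)$$

where $\beta = 0.05$ is a ‘chatter’ coefficient. Equations (5) aim to cancel the dead-zone and allow use of linear control methods. For J2 with $\Delta t_c = 0.05$ s, $\tau = 3$ (the same 0.15 s delay as $\tau = 15$ at $\Delta t = 0.01$ s) and hence, using standard methods [22], based on the model (1) with $b_3 = -a_1 = 1$, the PIP control algorithm takes the following incremental form,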

$$u_k = u_{k-1} - g_1(u_{k-1} - u_{k-2}) - g_2(u_{k-2} - u_{k-3}) - f_0(y_k - y_{k-1}) + k_I(d_k - y_k) \qquad (6)$$

with constraints $u_{\min} < u_k < u_{\max}$, in which $u_{\min} = -2.5$ and $u_{\max} = 2.5$ are introduced to avoid potential integral wind-up problems, and the control gains $g_1$, $g_2$, $f_0$ and $k_I$ are determined by e.g. conventional Linear Quadratic (LQ) optimisation. Finally, two standard implementation forms of the PIP controller are investigated below: a feedback and a forward path form [22].
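The interplay of equations (4) to (6) can be checked numerically with the Python sketch below. It rests on stated assumptions rather than the authors' design. The slopes are computed by taking q{v_min} ≈ −1 and q{v_max} ≈ 1 degree per control sample, which follows from the quoted ≈ 20°/s at a 0.05 s interval. The plant in the closed-loop test is the linear model y_k = y_{k−1} + u_{k−3}, i.e. the IDZ element is assumed to cancel the nonlinearity exactly. The gains are illustrative pole-assignment values placing all four closed-loop poles at 0.6, not the LQ gains used in the paper.

```python
A3, A6 = -1.330, 1.312          # dead-zone limits in volts (from the text)
V_MIN, V_MAX = -2.2, 1.75       # linearisation range in volts (from the text)
Q_MIN, Q_MAX = -1.0, 1.0        # ASSUMED: ~20 deg/s x 0.05 s = 1 deg/sample
S_N = Q_MIN / (V_MIN - A3)      # slope for negative movement
S_P = Q_MAX / (V_MAX - A6)      # slope for positive movement
BETA = 0.05                     # 'chatter' coefficient (from the text)
GAINS = (-0.4, 0.36, 0.2304, 0.0256)  # ASSUMED g1, g2, f0, kI (poles at 0.6)


def snl(v):
    """Linearised static nonlinearity, equation (4)."""
    if v < A3:
        return S_N * (v - A3)
    if v > A6:
        return S_P * (v - A6)
    return 0.0


def idz(u):
    """Inverse dead-zone element, equation (5)."""
    if u < -BETA:
        return u / S_N + A3
    if u > BETA:
        return u / S_P + A6
    return 0.0


def pip_step(u_hist, y, y_prev, d, gains, u_lim=2.5):
    """One update of the incremental PIP law, equation (6), with clipping."""
    g1, g2, f0, k_i = gains
    u1, u2, u3 = u_hist             # u_{k-1}, u_{k-2}, u_{k-3}
    u = (u1 - g1 * (u1 - u2) - g2 * (u2 - u3)
         - f0 * (y - y_prev) + k_i * (d - y))
    return min(max(u, -u_lim), u_lim)


def closed_loop(d, n, gains):
    """PIP control of y_k = y_{k-1} + u_{k-3} for a constant set point d."""
    u_hist = [0.0, 0.0, 0.0]
    y_prev = y = 0.0
    ys = []
    for _ in range(n):
        y_prev, y = y, y + u_hist[2]      # plant driven by u_{k-3}
        u = pip_step(u_hist, y, y_prev, d, gains)
        u_hist = [u, u_hist[0], u_hist[1]]
        ys.append(y)
    return ys


ys = closed_loop(d=10.0, n=200, gains=GAINS)
```

Outside the chatter band, `snl(idz(u))` returns `u`, which is why the controller can be designed on the linear model alone. With the illustrative gains, the simulated joint angle approaches the 10° set point without overshoot.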

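TABLE I
JOYSTICK TELEOPERATION AND VISION-BASED CONTROLLER, SHOWING THE TIME (SECONDS) TO COMPLETE A GRASPING TASK.

| Trial | Inexperienced operator: Teleoperation | Inexperienced operator: Visual servoing | Experienced operator: Teleoperation | Experienced operator: Visual servoing |
|---|---|---|---|---|
| 1 | 122 | 31 | 57 | 20 |
| 2 | Failed | 22 | 64 | 21 |
| 3 | 148 | 27 | 68 | 20 |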

V. RESULTS

A cardboard tube is used as the target pipe for the preliminary experiments, so that if anything went wrong the manipulators would knock it out of the way without causing damage to the robot or laboratory. The operator selects the tube, and the manipulator grasps and moves as though cutting through it (presaging the use of appropriate laser cutting tools in future research). Tests were successfully repeated from various random starting positions. Since the new system actuates all the joints in parallel, significantly faster task completion times are achieved, in comparison to conventional teleoperation via joystick control, as illustrated by Table I. Here, an operator uses the joystick to grasp the tube three times. The same user subsequently operates the new vision system three times. An inexperienced user received just 15 minutes training with the joysticks, whilst an experienced user was already familiar with the system. These initial tests are clearly quite limited in scope. Nonetheless, the results provide an indication of the potential for improved performance using the developed approach, compared to the type of teleoperated system presently used on nuclear sites.
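As a rough summary of Table I, the mean completion times can be computed as in the Python sketch below. The times are those reported in the table. Excluding the failed attempt from the teleoperation average is an assumption made here, and it favours teleoperation.

```python
from statistics import mean

# Completion times in seconds from Table I; None marks the failed attempt.
times = {
    ("inexperienced", "teleoperation"): [122, None, 148],
    ("inexperienced", "visual servoing"): [31, 22, 27],
    ("experienced", "teleoperation"): [57, 64, 68],
    ("experienced", "visual servoing"): [20, 21, 20],
}


def mean_completed(values):
    """Mean of the completed trials only (failed trials are skipped)."""
    return mean(t for t in values if t is not None)


summary = {key: mean_completed(vals) for key, vals in times.items()}
for (operator, mode), avg in summary.items():
    print(f"{operator:14s} {mode:16s} mean = {avg:.1f} s")
```

With only three trials per condition and one operator per group, these averages indicate a trend and are not statistically robust.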

With regard to the new control system, Table II compares the feedback and forward path PIP-IDZ designs, together with two pre-existing control systems, namely a linear PIP controller and a PI algorithm that had been tuned by hand to obtain a reasonable response for the preliminary vision system testing. For the latter two cases, instead of IDZ control, the dead-zone was addressed using a rather ad hoc input scaling approach [2]. It is clear that the forward path PIP-IDZ controller yields the best overall performance, with the lowest average Euclidean norm. Fig. 6 illustrates the smooth, relatively fast closed-loop response of the joint angles for this controller. It also demonstrates the accuracy of the SDP simulation model. In this example, four manipulator joints are simultaneously moved following sinusoidal signals, which have been designed to approximately activate the full robot joint space. The joint angles and input to the CFP computer for the experimental data and SDP model are almost visually indistinguishable in this example. It should be noted that Table II is based on this type of sinusoid experiment (i.e. simultaneous motion of four manipulator joints) but similar results have also been obtained for various step changes in the set point and for pipe grasp scenarios [25]. Finally, Fig. 7 shows an example of the manipulator moving to a target grasp location. It shows small movements around the initial target position, before proceeding to the final grasp. The time taken to complete the movement shown in Fig. 7 is 5.4 seconds.
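The four performance measures listed in the Table II caption could be computed along the following lines. This Python sketch is an interpretation of the caption, not the authors' code: the function names are hypothetical, and the use of population variance (`pvariance`) is an assumption, since the text does not say which variance estimator is used.

```python
import math
from statistics import mean, pvariance


def average_euclidean_norm(targets, positions):
    """(i) Mean Euclidean distance between target and actual end-effector
    positions, each given as an (x, y, z) tuple per sample."""
    return mean(math.dist(t, p) for t, p in zip(targets, positions))


def mean_square_error(set_points, angles):
    """(ii) Mean square error between set point and joint angle."""
    return mean((d - y) ** 2 for d, y in zip(set_points, angles))


def error_variance(targets, positions, axis):
    """(iii) Variance of the end-effector positional error along one axis
    (0 = X, 1 = Y, 2 = Z); population variance is assumed."""
    return pvariance([t[axis] - p[axis] for t, p in zip(targets, positions)])


def differenced_input_variance(v):
    """(iv) Variance of the differenced input v_k - v_{k-1}; a lower value
    implies smoother actuation."""
    return pvariance([b - a for a, b in zip(v, v[1:])])


# Hypothetical input records: a steady ramp versus a chattering signal.
smooth = differenced_input_variance([0.0, 0.1, 0.2, 0.3, 0.4])
chatter = differenced_input_variance([0.0, 0.4, 0.0, 0.4, 0.0])
```

In this toy comparison the ramp gives a differenced-input variance of essentially zero, while the chattering signal gives a much larger value, which matches the caption's reading of measure (iv).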

TABLE II
RESOLVED MOTION LABORATORY EXPERIMENTS, COMPARING PIP–IDZ FORWARD PATH (FP) AND FEEDBACK (FB) CONTROLLERS, WITH LINEAR PIP AND HAND–TUNED PI CONTROL: (I) AVERAGE EUCLIDEAN NORM (OVERALL PERFORMANCE BASED ON END-EFFECTOR POSITION); (II) MEAN SQUARE ERROR BETWEEN SET POINT AND ANGLE FOR EACH JOINT; (III) VARIANCE OF END-EFFECTOR POSITIONAL ERRORS; AND (IV) VARIANCE OF DIFFERENCED INPUT TO THE NI–CFP COMPUTER FOR EACH JOINT (LOWER VALUE IMPLIES SMOOTHER ACTUATION).

| Performance | PIP-IDZ-FP | PIP-IDZ-FB | PIP-old | PI-old |
|---|---|---|---|---|
| Ave.Eucl.Norm | 77 | 132 | 190 | 160 |
| MSE–J1 | 5.7 | 17.8 | 56.8 | 20.5 |
| MSE–J2 | 1.9 | 3.5 | 12.8 | 8.0 |
| MSE–J3 | 5.6 | 12.0 | 64.0 | 81.9 |
| MSE–J4 | 85.4 | 56.7 | 77.1 | 144.8 |
| Var(E)–X | 7.34 | 22.9 | 408 | 44.7 |
| Var(E)–Y | 17.5 | 89.4 | 158 | 132 |
| Var(E)–Z | 48.5 | 185.7 | 210 | 112 |
| Var(CFP)–J1 | 0.02 | 0.38 | 0.03 | 0.03 |
| Var(CFP)–J2 | 0.03 | 0.27 | 0.11 | 0.05 |
| Var(CFP)–J3 | 0.27 | 0.49 | 0.04 | 0.05 |
| Var(CFP)–J4 | 0.23 | 0.30 | 0.19 | 0.17 |

VI. CONCLUSIONS

This article has developed and evaluated a vision based semi-autonomous object grasping system for a hydraulically actuated, dual manipulator nuclear decommissioning robot. The system presents a straightforward GUI to the operator, who with just four mouse clicks can select target positions for each manipulator to perform a pipe grasp and cut action. Throughout the process, the user can view the live colour video and terminate manipulator movements at any time. The system was tested on a full scale hardware system in a laboratory environment. It is shown to work successfully, outperforming the traditional joystick-based teleoperation approach. The system keeps the user in control of the overall system behaviour but significantly reduces user workload and operation time.

Further research is required to support these conclusions, including additional experiments with more users, and particularly in relation to attaching suitable cutters and working with real pipes and other objects. Future experiments include using both manipulators to grasp the same object, in order to move particularly heavy objects. In addition, one limitation of the present prototype is the lack of a sophisticated collision avoidance system, i.e. to prevent the manipulators colliding with each other or the work environment.

In a second contribution of the article, a new approach to SDP modelling for manipulators has been described and evaluated. The models obtained facilitate use of an IDZ method for control system design. This is rather simpler to implement than an earlier SDP approach and has the advantage of combining the input signal calibration, system identification and control system design steps, all based on a relatively small data-set. This allows for rapid application to each joint and straightforward recalibration when the dynamic characteristics have changed due to age and use. Resolved motion tests successfully demonstrate the efficacy of the new control system.

Fig. 6. Control diagnostic resolved motion experiment based on sine wave joint angle trajectories, showing command (dashed), HYDROLEK data (solid) and SDP simulation (thick, red). From left to right, the groups of four subplots (J1 to J4) show joint angle $y_k$ (°), control input $u_k$ and NI-CFP voltage $v_k$, against time (s).

Fig. 7. Illustrative resolved motion experiment for a pipe grasp (units: mm). The 3D plot, with axes X (depth), Y and Z, shows the initial target, final target, wrist path and target pipe.

ACKNOWLEDGMENTS

This work is supported by the UK Engineering and Physical Sciences Research Council (EPSRC) grant EP/R02572X/1. The authors are also grateful for the support of the National Nuclear Laboratory and Nuclear Decommissioning Authority. Finally, the CAPTAIN Toolbox for MATLAB was used [29]: http://wp.lancs.ac.uk/captaintoolbox/

REFERENCES

[1] G. D. Baxter, J. Rooksby, Y. Wang, and A. Khajeh-Hosseini, “The ironies of automation... still going strong at 30?” in European Conference on Cognitive Ergonomics, 2012.

[2] C. J. Taylor and D. Robertson, “State–dependent control of a hydraulically–actuated nuclear decommissioning robot,” Control Engineering Practice, vol. 21, no. 12, pp. 1716–1725, 2013.

[3] A. Kitamura, M. Watahiki, and K. Kashiro, “Remote glovebox size reduction in glovebox dismantling facility,” Nuclear Engineering and Design, vol. 241, pp. 999–1005, 2011.

[4] J. Kofman, X. Wu, and T. J. Luu, “Teleoperation of a robot manipulator using a vision–based human–robot interface,” IEEE Transactions on Industrial Electronics, vol. 52, pp. 1206–1219, 2005.

[5] A. Leeper, K. Hsiao, and M. Ciocarlie, “Strategies for human-in-the-loop robotic grasping,” in 7th ACM/IEEE International Conference on Human–Robot Interaction, 2012.

[6] K. Hauser, “Recognition, prediction, and planning for assisted teleoperation,” Autonomous Robots, vol. 35, pp. 241–254, 2013.

[7] N. Marturi, A. Rastegarpanah, C. Takahashi, M. Adjigble, R. Stolkin, S. Zurek, M. Kopicki, M. Talha, J. A. Kuo, and Y. Bekiroglu, “Towards advanced robotic manipulation for nuclear decommissioning: A pilot study on tele-operation and autonomy,” in IEEE Robotics & Automation for Humanitarian App., Kollam, 2016.

[8] X. Duan, Y. Wang, Q. Liu, M. Li, and X. Kong, “Manipulation robot system based on visual guidance for sealing blocking plate of steam generator,” J. Nuclear Science & Technology, vol. 53, pp. 281–288, 2016.

[9] D. Katz, J. Kenney, and O. Brock, “How can robots succeed in unstructured environments?” in Workshop on Robot Manipulation: Intelligence in Human Environments at Robotics, Cambridge, UK, 2008.

[10] D. Kent, C. Saldanha, and S. Chernova, “A comparison of remote robot teleoperation interfaces for general object manipulation,” in ACM/IEEE International Conference on Human–Robot Interaction, Vienna, 2017.

[11] M. M. Olsen, J. Swevers, and W. Verdonck, “ML identification of a dynamic robot model: Implementation issues,” Int. J. Robotics Research, vol. 21, pp. 9–96, 2001.

[12] M. Gautier and P. Poignet, “Extended Kalman filtering and weighted LS dynamic identification of robot,” Control Engineering Practice, vol. 9, pp. 1361–1372, 2001.

[13] M. Gautier, A. Janot, and P. O. Vandanjon, “A new closed–loop output error method for parameter identification of robot dynamics,” IEEE Transactions on Control Systems Technology, vol. 21, pp. 428–444, 2013.

[14] P. C. Young, Recursive Estimation and Time Series Analysis: An Introduction for the Student and Practitioner. Springer, 2011.

[15] A. Janot, P. O. Vandanjon, and M. Gautier, “An instrumental variable approach for rigid industrial robots identification,” Control Engineering Practice, vol. 25, pp. 85–101, 2014.

[16] S. Hashemi, H. Abbas, and H. Werner, “Low–complexity LPV modeling and control of a robotic manipulator,” Control Engineering Practice, vol. 12, pp. 248–257, 2012.

[17] C. J. Taylor, E. M. Shaban, M. A. Stables, and S. Ako, “PIP control applications of state dependent parameter models,” IMechE Proceedings, vol. 221, no. 17, pp. 1019–1032, 2007.

[18] A. Janot, P. C. Young, and M. Gautier, “Identification and control of electro–mechanical systems using state–dependent parameter estimation,” International Journal of Control, vol. 90, pp. 643–660, 2017.

[19] M. R. Sirouspour and S. E. Salcudean, “Nonlinear control of hydraulic robots,” IEEE Trans. Robotics & Aut., vol. 17, pp. 173–182, 2001.

[20] A. Montazeri, C. West, S. Monk, and C. J. Taylor, “Dynamic modeling and parameter estimation of a hydraulic robot manipulator using a multi–objective genetic algorithm,” Int. J. Control, vol. 90, pp. 661–683, 2017.

[21] J. D. Fortgang, L. E. George, and W. J. Book, “Practical implementation of a dead zone inverse on a hydraulic wrist,” in ASME International Mechanical Engineering Congress and Exposition, New Orleans, 2002.

[22] C. J. Taylor, P. C. Young, and A. Chotai, True Digital Control: Statistical Modelling and Non–Minimal State Space Design. Wiley, 2013.

[23] C. West, E. D. Wilson, Q. Clairon, A. Montazeri, S. Monk, and C. J. Taylor, “State–dependent parameter model identification for inverse dead–zone control of a hydraulic manipulator,” in 18th IFAC Symposium on System Identification (SYSID), Stockholm, Sweden, July 2018.

[24] H. Kinoshita, R. Tayama, Y. Kometani, T. Asano, and Y. Kani, “Development of new technology for Fukushima Daiichi nuclear power station reconstruction,” Hitachi Hyoron, vol. 95, pp. 41–46, 2013.

[25] C. West, “A vision-based pipe cutting system,” Ph.D. dissertation, Engineering Department, Lancaster University, 2018 (in preparation).

[26] J. Canny, “A computational approach to edge detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 8, no. 6, pp. 679–698, 1986.

[27] R. Maini and H. Aggarwal, “Study and comparison of various image edge detection techniques,” Int. J. Image Proc., vol. 3, pp. 1–11, 2009.

[28] T. Burrell, A. Montazeri, S. Monk, and C. J. Taylor, “Feedback control–based IK solvers for a nuclear decommissioning robot,” in 7th IFAC Symp. Mechatronic Systems, Loughborough, UK, September 2016.

[29] C. J. Taylor, P. C. Young, W. Tych, and E. D. Wilson, “New developments in the CAPTAIN Toolbox for Matlab,” in 18th IFAC Symposium on System Identification (SYSID), Stockholm, Sweden, July 2018.