A REFERENCE-SET APPROACH TO INFORMATION EXTRACTION...

Transcript of A REFERENCE-SET APPROACH TO INFORMATION EXTRACTION...

A REFERENCE-SET APPROACH TO INFORMATION EXTRACTION FROMUNSTRUCTURED, UNGRAMMATICAL DATA SOURCES

by

Matthew Michelson

A Dissertation Presented to theFACULTY OF THE GRADUATE SCHOOL

UNIVERSITY OF SOUTHERN CALIFORNIAIn Partial Fulfillment of theRequirements for the Degree

DOCTOR OF PHILOSOPHY(COMPUTER SCIENCE)

May 2009

Copyright 2009 Matthew Michelson

Dedication

To Sara, whose patience and support

never waiver.

ii

Acknowledgments

First and foremost, thanks to Sara, my wife. Her love, patience, and support made this

thesis possible.

Of course, many thanks to my family for their patience. I know it took almost 90%

of my life so far to finish school, but I promise this is my last degree! (Unless...)

I would especially like to thank my thesis adviser, Craig Knoblock, who taught me the

mechanics of research, the elements of style and grammar, and the patience required to

find the right solution. Craig was (and still is) always free to talk to me when I needed it,

especially when I felt like I was floundering in the muck of trial-and-error (usually more

error, less trial). Most importantly, he gave me enough freedom to try my own solutions,

but also helped reign my ideas back to practicality. I am grateful for all his help.

Many thanks to the rest of my thesis committee: Kevin Knight, Cyrus Shahabi and

Daniel O’Leary. My committee helped me focus my thesis during my qualification exam

with their comments and questions. Their patience and kind demeanor made the practical

aspects of my defense, such as scheduling, painless!

To my ISI-mates, both past (Marty, Snehal, Pipe, Mark, and Wesley) and present

(Yao-yi, Anon, and Amon), thanks for all the laughs, games, Web-based time wasters,

iii

and general harassment, without which the life of a graduate student would feel more like

“real work!”

This research is based upon work supported in part by the National Science Foun-

dation under award number IIS-0324955; in part by the Air Force Office of Scientific

Research under grant number FA9550-07-1-0416; and in part by the Defense Advanced

Research Projects Agency (DARPA) under Contract No. FA8750-07-D-0185/0004.

The United States Government is authorized to reproduce and distribute reports for

Governmental purposes not withstanding any copyright annotation thereon. The views

and conclusions contained herein are those of the authors and should not be interpreted as

necessarily representing the official policies or endorsements, either expressed or implied,

of any of the above organizations or any person connected with them.

iv

Table of Contents

Dedication ii

Acknowledgments iii

List Of Tables vii

List Of Figures x

Abstract xi

Chapter 1: Introduction 11.1 Motivation and Problem Statement . . . . . . . . . . . . . . . . . . . . . . 11.2 Proposed Approach . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41.3 Thesis Statement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91.4 Contributions of the Research . . . . . . . . . . . . . . . . . . . . . . . . . 91.5 Outline of the thesis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Chapter 2: An Automatic Approach to Extraction from Posts 112.1 Automatically Choosing the Reference Sets . . . . . . . . . . . . . . . . . 122.2 Matching Posts to the Reference Set . . . . . . . . . . . . . . . . . . . . . 152.3 Unsupervised Extraction . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192.4 Experimental Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.4.1 Results: Selecting reference set(s) . . . . . . . . . . . . . . . . . . . 212.4.2 Results: Matching posts to the reference set . . . . . . . . . . . . . 302.4.3 Results: Extraction using reference sets . . . . . . . . . . . . . . . 37

Chapter 3: Automatically Constructing Reference Sets for Extraction 413.1 Reference Set Construction . . . . . . . . . . . . . . . . . . . . . . . . . . 433.2 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 603.3 Applicability of the ILA Method . . . . . . . . . . . . . . . . . . . . . . . 67

3.3.1 Reference-Set Properties . . . . . . . . . . . . . . . . . . . . . . . . 68

Chapter 4: A Machine Learning Approach to Reference-Set Based Extrac-tion 814.1 Aligning Posts to a Reference Set . . . . . . . . . . . . . . . . . . . . . . . 83

v

4.1.1 Generating Candidates by LearningBlocking Schemes for Record Linkage . . . . . . . . . . . . . . . . 84

4.1.2 The Matching Step . . . . . . . . . . . . . . . . . . . . . . . . . . . 934.2 Extracting Data from Posts . . . . . . . . . . . . . . . . . . . . . . . . . . 1024.3 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.3.1 Record Linkage Results . . . . . . . . . . . . . . . . . . . . . . . . 1104.3.1.1 Blocking Results . . . . . . . . . . . . . . . . . . . . . . . 1104.3.1.2 Matching Results . . . . . . . . . . . . . . . . . . . . . . 117

4.3.2 Extraction Results . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

Chapter 5: Related Work 130

Chapter 6: Conclusions 138

Bibliography 143

vi

List Of Tables

1.1 Three posts for Honda Civics from Craig’s List . . . . . . . . . . . . . . . 3

1.2 Methods for extraction/annotation under various assumptions . . . . . . . 8

2.1 Automatically choosing a reference set . . . . . . . . . . . . . . . . . . . . . . 14

2.2 Vector-space approach to finding attributes in agreement . . . . . . . . . . . . 17

2.3 Cleaning an extracted attribute . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.4 Reference Set Descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.5 Posts Set Descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.6 Using Jensen-Shannon distance as similarity measure . . . . . . . . . . . . . . 24

2.7 Using TF/IDF as the similarity measure . . . . . . . . . . . . . . . . . . . . . 26

2.8 Using Jaccard as the similarity measure . . . . . . . . . . . . . . . . . . . . . 27

2.9 Using Jaro-Winkler TF/IDF as the similarity measure . . . . . . . . . . . . . . 28

2.10 A summary of each method choosing reference sets . . . . . . . . . . . . . . . 28

2.11 Annotation results using modified Dice similarity . . . . . . . . . . . . . . . . 32

2.12 Annotation results using modified Jaccard similarity . . . . . . . . . . . . . . . 33

2.13 Annotation results using modified TF/IDF similarity . . . . . . . . . . . . . . 35

2.14 Modified Dice using Jaro-Winkler versus Smith-Waterman . . . . . . . . . . . . 37

2.15 Extraction results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

vii

3.1 Constructing a reference set . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.2 Posts with a general trim token: LE . . . . . . . . . . . . . . . . . . . . . 48

3.3 Locking attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.4 ILA method for building reference sets . . . . . . . . . . . . . . . . . . . . 54

3.5 Reference set constructed by flattening the hierarchy in Figure 3.4 . . . . 57

3.6 Reference set constructed by flattening the hierarchy in Figure 3.5 . . . . 58

3.7 Reference set constructed by flattening the hierarchy in Figure 3.6 . . . . 58

3.8 Post Sets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

3.9 Field-level extraction results . . . . . . . . . . . . . . . . . . . . . . . . . . 62

3.10 Manually constructed reference-sets . . . . . . . . . . . . . . . . . . . . . . . 64

3.11 Comparing field-level results . . . . . . . . . . . . . . . . . . . . . . . . . . 65

3.12 Comparing the ILA method . . . . . . . . . . . . . . . . . . . . . . . . . . 66

3.13 Two domains where manual reference sets outperform . . . . . . . . . . . 68

3.14 Results on the BFT Posts and eBay Comics domains . . . . . . . . . . . . 69

3.15 Bigram types that describe domain characteristics . . . . . . . . . . . . . 71

3.16 Number of bootstrapped posts . . . . . . . . . . . . . . . . . . . . . . . . 73

3.17 K-L divergence between domains using average distributions . . . . . . . . 77

3.18 Percent of trials where domains match (under K-L thresh) . . . . . . . . . 78

3.19 Method to determine whether to automatically construct a reference set. . 79

3.20 Two domains for testing the Bootstrap-Compare method . . . . . . . . . 79

3.21 Extraction results using ILA on Digicams and Cora . . . . . . . . . . . . . 80

4.1 Modified Sequential Covering Algorithm . . . . . . . . . . . . . . . . . . . 87

4.2 Learning a conjunction of {method, attribute} pairs . . . . . . . . . . . . 89

viii

4.3 Learning a conjunction of {method, attribute} pairs using weights . . . . 92

4.4 Blocking results using the BSL algorithm (amount of data used for trainingshown in parentheses). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

4.5 Some example blocking schemes learned for each of the domains. . . . . . 113

4.6 A comparison of BSL covering all training examples, and covering 95% ofthe training examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

4.7 Record linkage results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

4.8 Matching using only the concatenation . . . . . . . . . . . . . . . . . . . . 120

4.9 Using all string metrics versus using only the Jensen-Shannon distance . . 122

4.10 Record linkage results with and without binary rescoring . . . . . . . . . . 124

4.11 Field level extraction results: BFT domain . . . . . . . . . . . . . . . . . 126

4.12 Field level extraction results: eBay Comics domain. . . . . . . . . . . . . . 127

4.13 Field level extraction results: Craig’s Cars domain. . . . . . . . . . . . . . 128

4.14 Summary results for extraction showing the number of times each systemhad highest F-Measure for an attribute. . . . . . . . . . . . . . . . . . . . 128

ix

List Of Figures

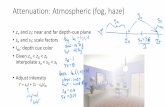

1.1 Fitting posts into information integration . . . . . . . . . . . . . . . . . . 2

1.2 Framework for reference-set based extraction and annotation . . . . . . . 6

2.1 Unsupervised extraction with reference sets . . . . . . . . . . . . . . . . . . . 21

3.1 Hierarchies to reference sets . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.2 Creating a reference set from posts . . . . . . . . . . . . . . . . . . . . . . 45

3.3 Locking car attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.4 Hierarchy constructed by ILA from car classified ads . . . . . . . . . . . . 55

3.5 Hierarchy constructed by ILA from laptop classified ads . . . . . . . . . . 56

3.6 Hierarchy constructed by ILA from ski auction listings . . . . . . . . . . . 56

3.7 Average distribution values for each domain using 10 random posts . . . . 74

4.1 The traditional record linkage problem . . . . . . . . . . . . . . . . . . . . 94

4.2 The problem of matching a post to the reference set . . . . . . . . . . . . 94

4.3 Two records with equal record level but different field level similarities . . 95

4.4 The full vector of similarity scores used for record linkage . . . . . . . . . 100

4.5 Our approach to matching posts to records from a reference set . . . . . . 103

4.6 Extraction process for attributes . . . . . . . . . . . . . . . . . . . . . . . 104

4.7 Algorithm to clean an extracted attribute . . . . . . . . . . . . . . . . . . 108

x

Abstract

This thesis investigates information extraction from unstructured, ungrammatical text on

the Web such as classified ads, auction listings, and forum postings. Since the data is un-

structured and ungrammatical, this information extraction precludes the use of rule-based

methods that rely on consistent structures within the text or natural language processing

techniques that rely on grammar. Instead, I describe extraction using a “reference set,”

which I define as a collection of known entities and their attributes. A reference set can be

constructed from structured sources, such as databases, or scraped from semi-structured

sources such as collections of Web pages. In some cases, as I shown in this thesis, a ref-

erence set can even be constructed automatically from the unstructured, ungrammatical

text itself. This thesis presents methods to exploit reference sets for extraction using

both automatic techniques and machine learning techniques. The automatic technique

provides a scalable and accurate approach to extraction from unstructured, ungrammat-

ical text. The machine learning approach provides even higher accuracy extractions and

deals with ambiguous extractions, although at the cost of requiring human effort to label

training data. The results demonstrate that reference-set based extraction outperforms

the current state-of-the-art systems that rely on structural or grammatical clues, which

is not appropriate for unstructured, ungrammatical text. Even the fully automatic case,

xi

which constructs its own reference set for automatic extraction, is competitive with the

current state-of-the-art techniques that require labeled data. Reference-set based extrac-

tion from unstructured, ungrammatical text allows for a whole category of sources to

be queried, allowing for their inclusion in data integration systems that were previously

limited to structured and semi-structured sources.

xii

Chapter 1

Introduction

This chapter introduces the issue of information extraction from unstructured, ungram-

matical text on the Web. In this chapter, I first motivate and define the problem explicitly.

Then, I briefly outline my approach to the problem and describe the general framework

for reference-set based information-extraction. I then describe my contributions to the

field of information extraction, and then present the outline of this thesis.

1.1 Motivation and Problem Statement

As more and more information comes online, the ability to process and understand this

information becomes more and more crucial. Data integration attacks this problem by

letting users query heterogeneous data sources within a unified query framework, com-

bining the results to ease understanding. However, while data integration can integrate

data from structured sources such as databases, semi-structured sources such as that

extracted from Web pages, and even Web Services [58], this leaves out a large class of

useful information: unstructured and ungrammatical data sources. I call such unstruc-

tured, ungrammatical data “posts.” Posts range in source from classified ads to auction

1

listings to forum postings to blog titles to paper references. The goal of this thesis

is to structure sources of posts, such that they can be queried and included

in data integration systems. Figure 1.1 shows an example integration system that

combines a database of car safety information, semi-structured Web pages of car reviews,

and posts of car classifieds. The box labeled “THESIS” is the mechanism by which to tie

the posts of classified ads into the integration system via structured queries, and this is

the motivation behind my research.

Figure 1.1: Fitting posts into information integration

Posts are full of useful information, as defined by the attributes that compose the

entity within the post. For example, consider the posts about cars from the online

classified service Craigslist,1 shown in Table 1.1. Each used car for sale is composed of

attributes that define this car; and if we could access the individual attributes we could

include such sources in data integration systems, such as that of Figure 1.1, and answer1www.craigslist.org

2

interesting queries such as “return the classified ads and car reviews for used cars that are

made by Honda and have a 5 star crash rating.” Such a query might requires combining

the structured database of safety ratings with the posts of the classified ads and the car

review websites. Clearly, there is a case to be made for the utility of structured queries

such as joins and aggregate selections of posts.

Craig’s List Post93 civic 5speed runs great obo (ri) $180093- 4dr Honda Civc LX Stick Shift $180094 DEL SOL Si Vtec (Glendale) $3000

Table 1.1: Three posts for Honda Civics from Craig’s List

However, currently accessing the data within posts does not go much beyond keyword

search. This is precisely because the ungrammatical, unstructured nature of posts makes

extraction difficult, so the attributes remain embedded within the posts. These data

sources are ungrammatical, since they do not conform to the proper rules of written

language (English in this case). Therefore, Natural Language Processing (NLP) based

information extraction techniques are not appropriate [57; 9]. Further, the posts are

unstructured since the structure can vary vastly between each listing. (That is, we can

not assume that the ordering of the attributes across the posts are preserved.) So, wrapper

based extraction techniques will not work either [49; 19] since the assumption that the

structure can be exploited does not hold. Lastly, even if one can extract the data from

within posts, you would need to assure that the extracted values map to the same value

for accurate querying. Table 1.1 shows three example posts about Honda Civics, but each

refers to the model differently. The first post mentions a “civic,” the next writes “Civc”

which is a spelling mistake, and the last calls the model “DEL SOL.” Yet, if we do a

3

query for all “civic” cars, all of these posts should be returned. Therefore, not only is it

important to extract the values from the posts, but we should clean the values in that all

values for a given attribute should be transformed to a single, uniform value. This thesis

presents an extraction methodology for posts that handles both the difficulties imposed

by the lack of grammar and structure, and also can assign a single, uniform value for an

attribute (called semantic annotation).

1.2 Proposed Approach

As stated, the method of extraction presented in this thesis involves exploiting structured,

relational data to aid the extraction, rather than relying on NLP or structure based meth-

ods. I call this structured data a “reference set.” A reference set consists of collections

of known entities with the associated, common attributes. Examples of reference sets in-

clude online (or offline) reference documents, such as the CIA World Fact Book;2 online

(or offline) databases, such as the Comics Price Guide;3 and with the Semantic Web one

can envision building reference sets from the numerous ontologies that already exist.

These are all cases of manually constructed reference sets since they require user

effort to construct the database, scrape the data off of Web pages, or create the ontology.

As this thesis shows, however, in many cases we can automatically generate a reference

set from the posts themselves, which can then be used for extraction. Nonetheless,

manual reference sets provide a stronger intuition into the strengths of reference-set based

information extraction.2http://www.cia.gov/cia/publications/factbook/3www.comicspriceguide.com

4

Using the car example, a manual reference set might be the Edmunds car buying

guide,4 which defines a schema for cars (the set of attributes such as make and trim)

as well as standard values for attributes themselves. Manually constructing reference

sets from Web sources such as the Edmunds car buying guide is done using wrapper

technologies such as Agent Builder5 to scrape data from the Web source using the schema

the source defines for the car.

Regardless of whether the reference set is constructed manually or automatically, the

high-level procedure for exploiting a reference set for extraction is the same. To use the

reference sets, the methods in this thesis first find the best match between each post and

the tuples of the reference set. By matching a post to a member of the reference set, the

algorithm can use the reference set’s schema and attribute values as semantic annotation

for the post (a single, uniform value for that attribute). Further, and importantly, these

reference set attributes provide clues for the information extraction step.

After the matching step, the methods perform information extraction to extract the

actual values in the post that match the schema elements defined by the match from the

reference set. This is the information extraction step. During the information extraction

step, the parts of the post are extracted that best match the attribute values from the

reference set member chosen during the matching step.

Figure 1.2 presents the basic methodology for reference-set based extraction, using

the second post of Table 1.1 as an example, and assuming it matches the reference

set tuple with the attributes {MAKE=HONDA, MODEL=CIVIC, TRIM=2 DR LX,

YEAR=1993}. As shown, first, the algorithm selects the best match from a reference set.4www.edmunds.com5A product of Fetch Technologies http://www.fetch.com/products.asp

5

Next, it exploits the match to aid information extraction. While this basic framework

stays consistent across the different methods described in this thesis, the details vary in

accordance with the different algorithms.

Figure 1.2: Framework for reference-set based extraction and annotation

This thesis presents three approaches to reference-set based extraction that are each

suitable depending on certain circumstances. The main algorithm of this thesis, presented

in Chapter 2 automatically matches a post to a reference set, and then uses the results

of this automatic matching to aid automatic extraction. This method assumes that there

exists a repository of manually created reference sets from which the system itself can

choose the appropriate one(s) to for matching/extraction. By using a repository, this

may lessen the burden on users who do not need to create new reference sets each time,

but can reuse previously created ones.

6

However, requiring a manually created reference set is not always even necessary. As

I show in Chapter 3, in many cases the algorithm I describe can construct the reference

set automatically, directly from the posts. It can then use the automatically constructed

reference set using the automatic matching and extraction techniques of Chapter 2.

There are two cases where a manually constructed reference set is preferred over

one that is automatically constructed. First, it’s possible that a user requires a certain

attribute to be added as a semantic annotation to a post in support of future, structured

queries (that is, after extraction). For example, suppose an auto-parts company has

an internal database of parts for cars. This company might want to join their parts

database with the classified ads about cars in a effort to advertise their mechanics service.

Therefore, they could use reference-set based extraction to identify the cars for sale in

the classified ads, which they can then join with their internal database. However, each

car in their database might have its own internal identification and this attribute might

be needed for their query. In this case, since none of the classified ads would already

contain this company-specific attribute, the company would have to manually create their

own reference set and include this attribute. So, in this case, the user would manually

construct a reference set and then use it for extraction.

Second, the textual characteristics of the posts might make it difficult to automatically

create a high quality reference set using the technique of Chapter 3. In fact, in Chapter 3

I present an analysis of the characteristics that define these domains where automatically

creating a reference set is appropriate. Further, that chapter describes a method that

can easily decide whether to use the automatic approach of Chapter 3, or suggest to the

user to manually construct a reference set.

7

The automatic extraction approach can be improved using machine learning tech-

niques at the cost of labeling data, as I describe in Chapter 4. This is especially use-

ful when very high quality extractions are required. Further, a user may require high-

accuracy extraction of attributes that are not easily represented in a reference set, such

as prices or dates. I call these “common attributes.” In particular, common attributes

are usually a very large set (such as prices or dates), but they contain characteristics that

can be exploited for extraction. For instance, regular expressions can identify prices.

However, when extracting both reference-set and common attributes there are ambi-

guities that must be accounted for to extract common attributes accurately. For instance,

while a regular expression for prices might pull out the token “626” from a classified ad for

cars, it is more likely that the “626” in the post refers to the car model, since the Mazda

company makes a car called the 626. By using a reference set to aid disambiguation, the

system handles extraction from posts with high accuracy. For instance, the system can

be trained that when a token matches both a regular expression for prices and the model

attribute from the reference set, it should be labeled as a car model. Chapter 4 describes

my machine learning approach to matching and extraction.

Table 1.2 summarizes and compares all three methods.

Table 1.2: Methods for extraction/annotation under various assumptionsSummary Situation

Chapter 2 Requires repository of manually Cannot use automatically constructed reference set(“ARX” approach) constructed reference sets; because require specific, unattainable attribute

Automatically selects appropriate or characteristics of text preventreference set(s); Automatic extraction automatic construction

Chapter 3 Automatically construct reference set; Data set conforms to characteristics(“ILA” algorithm) Use constructed reference set with for constructing reference set,

automatic method from Chapter 2 which can be testedChapter 4 Supervised machine learning; Require high-accuracy extraction(“Phoebus” approach) Requires manually constructed including common attributes that may be

reference set and labeled training data ambiguous

8

1.3 Thesis Statement

I demonstrate that we can exploit reference sets for information extraction on

unstructured, ungrammatical text, which overcomes the difficulties encoun-

tered by current extraction methods that rely on grammatical and structural

clues in the text for extraction; furthermore, I show that we can automatically

construct reference sets from the unstructured, ungrammatical text itself.

1.4 Contributions of the Research

The key contribution of the thesis is a method for information extraction that exploits

reference sets, rather than grammar or structure based techniques. The research includes

the following contributions:

• An automatic matching and extraction algorithm that exploits a given reference set

(Chapter 2)

• A method that selects the appropriate reference sets from a repository and uses

them for extraction and annotation without training data (Chapter 2)

• An automatic method for constructing reference sets from the posts themselves.

This algorithm also is able to suggest the number of posts required to automatically

construct the reference set sufficiently (Chapter 3)

9

• An automatic method whereby the machine can decide whether to construct its

own reference set, or require a person to do it manually (Chapter 3)

• A method that uses supervised machine learning to perform high-accuracy extrac-

tion on posts, even in the face of ambiguity. (Chapter 4)

1.5 Outline of the thesis

The remainder of this thesis is organized as follows. Chapter 2 presents my automatic

approach to matching posts to a reference set and then using those matches for automatic

extraction. This technique requires no more manual effort beyond constructing a reference

set (and even that can be avoided if the repository of reference sets is comprehensive

enough). Chapter 3 describes the technique for automatically constructing a reference set,

which can then be exploited using the methods of Chapter 2. Chapter 3 also describes an

algorithm for deciding whether or not the reference set can be automatically constructed.

Chapter 4 describes the approach to reference-set based extraction that uses machine

learning. Chapter 5 reviews the work related to the research in this thesis. Chapter 6

finishes with a conclusion of the contributions of this thesis and points out future courses

of research.

10

Chapter 2

An Automatic Approach to Extraction from Posts

Unsupervised Information Extraction (UIE) has recently witnessed significant progress [8;

28; 51]. However, current work on UIE relies on the redundancy of the extractions to

learn patterns in order to make further extractions. Such patterns rely on the assumption

that similar structures will occur again to make the learned extraction patterns useful.

Initially, the approaches can be seeded with manual rules [8], example extractions [51],

or sometimes nothing at all, relying solely on redundancy [28]. Regardless of how the

extraction process starts, extractions are validated via redundancy, and they are then

used to generalize patterns for extractions.

This approach to UIE differs from the one in this thesis in important ways. First, the

use of patterns makes assumptions about the structure of the data. However, I cannot

make any structural assumptions because my target data for extraction is defined by its

lack of structure and grammar. Second, since these systems are not clued into what can

be extracted, they rely on redundancy for their confidence in their extractions. In my

case, the confidence in the extracted values comes from their similarity to the reference-

set attributes exploited during extraction. Lastly, the goals differ. Previous UIE systems

11

seek to build knowledge bases through extraction. For instance, they aim to find all types

of cars on the Web. Our extraction creates relational data, which allows us to classify

and query posts. For this reason, we believe the previous work complements ours well

because we could use their techniques to automatically build our reference sets.

This chapter presents my algorithm for unsupervised information extraction of un-

structured, ungrammatical text. The algorithm has three distinct steps. As stated in

the introduction, reference-set based extraction relies on exploiting relational data from

reference sets, so the first step of the algorithm is to choose the applicable reference set

from a repository of reference sets. This is done so that users can easily reuse reference

sets they may have constructed in the past. Of course, a user can skip this step and just

provide his or her reference set directly to the algorithm instead, but by having the ma-

chine select the appropriate reference sets, this eases some of the burden and guesswork

from a human user. Once the reference sets are chosen, the second step in the algorithm is

for the system to match each post to members of the reference set, which allows it to use

these members’ attributes as clues to aid in the extraction. Finally, the system exploits

these reference-set attributes to perform the unsupervised extraction. It is important to

note that the approach of this chapter is totally automated beyond the construction of

the reference sets.

2.1 Automatically Choosing the Reference Sets

As stated above, the first step in the algorithm is to select the appropriate reference sets.

Given that the repository of reference sets grows over time, the system should choose the

12

reference sets to exploit for a given set of posts. The algorithm chooses the reference sets

based on the similarity between the set of posts and the reference sets in the repository.

Intuitively, the most appropriate reference set is the one with the most useful tokens in

common with the posts. For example, if we have a set of posts about cars, we expect a

reference set with car makes, such as Honda or Toyota, to be more similar to the posts

than a reference set of hotels.

To choose the reference sets, the system treats each reference set in the repository as

a single document and the set of posts as a single document. This way, the algorithm

calculates a similarity score between each reference set and the full set of posts. Next

these similarity scores are sorted in descending order, and the system traverses this list,

computing the percent difference between the current similarity score and the next. If

this percent difference is above a threshold, and the score of the current reference set is

greater than the average similarity score for all reference sets, the algorithm terminates.

Upon termination, the algorithm returns as matches the current reference set and all

reference sets that preceded it. If the algorithm traverses all of the reference sets with-

out terminating, then no reference sets are relevant to the posts. Table 2.1 shows the

algorithm.

I choose the percent difference as the splitting criterion between the relevant and

irrelevant reference sets because it is a relative measure. Comparing only the actual

similarity values might not capture how much better one reference set is as compared

to another. Further, I require that the score at the splitting point be higher than the

average score. This requirement captures cases where the scores are so small at the end of

the list that their percent differences can suddenly increase, even with a small difference

13

Table 2.1: Automatically choosing a reference set

Given posts P , threshold T , and reference set repository Rp← SingleDocument(P )For all reference sets ref ∈ Rri ← SingleDocument(ref)SIM(ri, p)← Similarity(ri, p)

For all SIM(ri, p) in descending orderIf PercentDiff (SIM(ri, p), SIM(ri+1, p)) > T ANDSIM(ri, p) > AV G(SIM(R, p))

Return SIM(rx, p), 1 > x > iReturn nothing (No matching reference sets)

in score. This difference does not mean that the algorithm found relevant reference sets,

rather it just means that the next reference set is that much worse than the current, bad

one.

By treating each reference set as a single document, the algorithm of Table 2.1 scales

linearly with the size of the repository. That is, each reference set in the repository is

scored against the posts only once. Furthermore, as the number of reference sets increases,

the percent difference still determines which reference sets are relevant. If an irrelevant

reference set is added to the repository, it will score low, so it will still be relatively that

much worse than the relevant one. If a new relevant set is added, the percent difference

between the new one and the one already chosen will be small, but both of them will still

be much better than the irrelevant sets in the repository. Thus, the percent difference

remains a good splitting point.

I do not require a specific similarity measure for this algorithm. Instead, the ex-

periments of Section 2.4 demonstrate that certain classes of similarity metrics perform

14

well. So, rather than picking just one as the best I try many different metrics and draw

conclusions about what types of similarity scores should be used and why.

2.2 Matching Posts to the Reference Set

As outlined in Chapter 1, reference-set based extraction hinges upon exploiting the best

matching member of a reference set for a given post. Therefore, after choosing the

relevant reference sets, the algorithm matches each post to the best matching members of

the reference set. When selecting multiple reference sets, the matching algorithm executes

iteratively, matching the set of posts once to each chosen reference set. However, if two

chosen reference sets have the same schema, it only selects the higher ranked one to

prevent redundant matching.

To match the reference set records to the posts, I employ a vector-space model. By

using a vector-space approach to matching, rather than machine learning, I can lessen the

burden required by tasks such as labeling training matches. Furthermore, a vector-space

model allows me to use information-retrieval techniques such as inverted indexes, which

are fast and scalable.

In my model, I treat each post as a “query” and each record of the reference set as

a “document,” and I use token-based similarity metrics to define their likeness. Again, I

do not tie this part of the algorithm to a specific similarity metric because I find a class

of token-based similarity metrics works well, which I justify in the experiments.

However, for the matching algorithm I do modify the similarity metrics I use. This

modification considers two tokens as matching if their Jaro-Winkler [62] similarity is

15

greater than a threshold. For example, consider the classic Dice similarity, defined over

a post p and a record of the reference set r, as:

Dice(p, r) =2 ∗ (p ∩ r)|p|+ |r|

If the threshold is 0.95, two tokens are put into the intersection of the modified Dice

similarity if those two tokens have a Jaro-Winkler similarity above 0.95. This Jaro-

Winkler modification captures tokens that might be misspelled or abbreviated, which is

common in posts. The underlying assumption of the Jaro-Winkler metric is that certain

attributes are more likely similar if they share a certain prefix. This works particularly

well for proper nouns, which many reference set attributes are, such as “Honda Accord”

cars. Using the modified similarity metric, the algorithm compares each post, pi, to each

member of the reference set and returns the reference set record(s) with the maximum

score, called rmaxi . In the experiments, I vary this threshold to test its effect on the

performance of matching the posts. I also justify using the Jaro-Winkler metric to modify

the Dice similarity, rather than another edit distance metric.

However, because more than one reference set record can have a maximum similarity

score with a post (rmaxi is a set), an ambiguity problem exists with the attributes provided

by the reference set records. For example, consider a post “Civic 2001 for sale, look!” If

we have the following 3 matching reference records: {HONDA, CIVIC, 4 Dr LX, 2001},

{HONDA, CIVIC, 2 Dr LX, 2001} and {HONDA, CIVIC, EX, 2001}, then we have an

ambiguity problem with the trim. We can confidently assign HONDA as the make, CIVIC

as the model, and 2001 as the year, because all of the matching records agree on these

16

attributes. We say that these attributes are “in agreement.” Yet, there is disagreement

on the trim because we cannot determine which value is best for this attribute. All the

reference records are equally acceptable from the vector-space perspective, but they differ

in value for this attribute. Therefore, the algorithm removes all attributes that do not

agree across all matching reference set records from the annotation (e.g., the trim in

our example). Once this process executes, the system has all of the attributes from the

reference set that it can use to aid extraction. The full automatic matching algorithm is

shown in Table 2.2.

Table 2.2: Vector-space approach to finding attributes in agreement

Given posts P and reference set RFor all pi ∈ Prmaxi ← MAX( SIM (pi,R))Remove attributes not in agreement from rmaxi

Selecting all the reference records with the maximum score, without pruning possible

false positives, introduces noisy matches. These false positives occur because posts with

small similarity scores still ‘match’ certain reference set records. For instance, the post

“We pick up your used car” matches the Renault Le Car, Lincoln Town Car, and Isuzu

Rodeo Pick-up, albeit with small similarity scores. However, since none of these attributes

are in agreement, this post gets no annotation. Therefore, by using only the attributes in

agreement, I essentially eliminate these false positive matches because no annotation will

be returned for this post. That is, because no annotation is returned for such posts, it is

as if there are no matching records for it. In this manner, by using only the attributes

“in agreement,” I separate the true matches from the false positives.

17

Once the system matches the posts to a reference set, users can query the posts

structurally like a database. This is a tremendous advantage over the traditional keyword

search approach to searching unstructured, ungrammatical text. For example, keyword

search cannot return records for which an attribute is missing, whereas the approach in

this thesis can. If a post were “2001 Accord for sale,” and the keyword search was Honda,

this record would not be returned. However, after matching posts to the reference set,

if we select all records where the matched-reference-set attribute is “Honda” this record

would be returned. Another benefit of matching the posts is that aggregate queries are

possible. This mechanism is not supported by keyword search.

Perhaps one of the most useful aspects of matching posts to the reference set is that one

can include the posts in an information-integration system (e.g., [38; 58]). In particular,

my approach is well suited for Local-as-View (LAV) information integration systems [37],

which allow for easy inclusion of new sources by defining their “source description” as a

view over known domain relations. For example, assume a user has a reference set of cars

from Edmunds car buying guide for the years 1990-2005 in his repository. If one includes

this source in an LAV integration system, the source description is:

Edmunds(make, model, year, trim) :-

Cars(make, model, year, trim) ∧

year ≥ 1995 ∧ year ≤ 2005

Now, assume a set of posts comes in and the system matches them to the records of

this Edmunds source. Before matching, the user had no idea how to include this source in

the integration system, but after matching the user can use the same source description

18

of the matching reference set, along with a variable to output the “post” attribute, to

define a source description of the unstructured, ungrammatical source. This is possible

because the algorithm appends records from the unstructured source with the attributes

in agreement from the reference set matches. So, the new source description becomes:

UnstructuredSource(post, make, model, year, trim) :-

Cars(make, model, year, trim) ∧

year ≥ 1995 ∧ year ≤ 2005

In this manner, one can collect and include new sources of unstructured, ungrammat-

ical text in LAV information integration systems without human intervention.

2.3 Unsupervised Extraction

Yet, the annotation that results from the matching step may not be enough. There are

many cases where a user wants to see the actual extracted values for a given attribute,

rather than the reference set attribute from the matching record. Therefore, once the

system retrieves the attributes in agreement from the reference set matches, it exploits

these attributes for information extraction. First, for each post, each token is compared

to each of the attributes in agreement from the matches. The token is labeled as the

attribute for which it has the maximum Jaro-Winkler similarity. I label a token as ‘junk’

(to be ignored) if it receives a score of zero against all reference-set attributes.

However, this initial labeling generates noise because the attributes are labeled in

isolation. To remedy this, the algorithm uses the modified Dice similarity, and generates

a baseline score between the extracted field and the reference-set field. Then, it goes

19

Table 2.3: Cleaning an extracted attribute

CLEAN-ATTRIBUTE (extracted attribute E, reference set attribute A)ExtCands ← {}baseline ← DICE(E,A)For all tokens ei ∈ E

score ← DICE(E/ei,A)IF score > baseline

ExtCands ← ExtCands ∪ eiIF ExtCands is empty

return EELSE

select ci ∈ ExtCands that yieds max scoreCLEAN-ATTRIBUTE (E/ci,A)

through the extracted attribute, removing one token at a time, and calculates a new

Dice similarity value. If this new score is higher than the baseline, the removed token

is a candidate for permanent removal. Once all tokens are processed in this way, the

candidate for removal that yielded the highest new score is removed. Then, the algorithm

updates the baseline to the new score, and repeats the process. When none of the tokens

yield improved scores when removed, this process terminates. This cleaning is shown in

Table 2.3. For this part of the algorithm, I use the modified Dice since our experiments in

the annotation section show this to be one of the best performing similarity metrics. Note,

my unsupervised extraction is O(‖p‖2) per post, where ‖p‖ is the number of tokens in

the post, because, at worst, all tokens are initially labeled, and then each one is removed,

one at a time. However, because most posts are relatively short, this is an acceptable

running time.

The whole multi-pass procedure for unsupervised extraction is given by Figure 2.1.

By exploiting reference sets, the algorithm performs unsupervised information extraction

without assumptions regarding repeated structure in the data.

20

Figure 2.1: Unsupervised extraction with reference sets

2.4 Experimental Results

This section presents results for my unsupervised approach to selecting a reference set,

finding the attributes in agreement, and exploiting these attributes for extraction.

2.4.1 Results: Selecting reference set(s)

The algorithm for choosing the reference sets is not tied to a particular similarity function.

Rather, I apply the algorithm using many different similarity metrics and draw conclusions

about which ones work best and why. This yields insight as to which types of metrics

users can plug in and have the algorithm perform accurately. 1

The experiments for selecting reference sets uses six reference sets and three sets posts

to test out the various situations where the algorithm should work correctly: returning a

single matching reference set, multiple matching reference sets, and no matching reference

sets.1Note that for the following metrics: Jensen-Shannon distance, Jaro-Winkler similarity, TF/IDF, and

Jaro-Winkler TF/IDF, I use the SecondString package’s implementation [17].

21

I use six reference sets, most of which have been used in past research. First, I use the

Hotels reference set from [44], which consists of 132 hotels each with a star rating, a hotel

name, and a local area. Another reference set from the same paper is the Comics reference

set, which has 918 Fantastic Four and Incredible Hulk comics from the Comic Books Price

guide, each with a title, issue number, and publisher. I also use two restaurant reference

sets, which were both used previously for record linkage [6]. One I call Fodors, which

contains 534 restaurant records, each with a name, address, city, and cuisine. The other

is Zagat, with 330 records.

I also have two reference sets of cars. The first, called Cars, contains 20,076 cars from

the Edmunds Car Buying Guide for 1990-2005. The attributes in this set are the make,

model, year, and trim. I supplement this reference set with cars from before 1990, taken

from the auto-accessories company, Super Lamb Auto. This supplemental list contains

6,930 cars from before 1990. I call this combined set of 27,006 records the Cars reference

set. The other reference set of cars also has the attributes make, model, year, and trim.

However, it is a subset of the cars covered by Cars. This data set comes from the Kelly

Blue Book car pricing service containing 2,777 records for Japanese and Korean cars from

1990-2003. I call this set KBBCars. This data set has also been used in the record linkage

community [46]. A summary of the reference sets is given in Table 2.4.

Table 2.4: Reference Set DescriptionsName Source Website Attributes RecordsFodors Fodors Travel Guide www.fodors.com name, address, city, cuisine 534Zagat Zagat Restaurant Guide www.zagat.com name, address, city, cuisine 330Comics Comics Price Guide www.comicspriceguide.com title, issue, publisher 918Hotels Bidding For Travel www.biddingfortravel.com star rating, name, local area 132Cars Edmunds and www.edmunds.com and make, model, trim, year 27,006

Super Lamb Auto www.superlambauto.comKBBCars Kelly Blue Book Car Prices www.kbb.com make, model, trim, year 2,777

22

I chose sets of posts to test different cases that exist for finding the appropriate

reference sets. One set of posts matches only a single reference set in the experimental

repository. It contains 1,125 posts from the forum Bidding For Travel. These posts, called

BFT, match only the Hotels reference set. This data set was used previously in [44].

Because my approach can also select multiple relevant reference sets, I use a set

of posts that matches both reference sets of cars. This set, which I call Craig’s Cars,

contains 2,568 car posts from Craigslist classifieds. Note that while there may be multiple,

appropriate reference sets, they also might have an internal ranking. In this case I expect

that both the Cars and KBBCars reference sets are selected, but Cars should be ranked

first (since KBBCars ⊂ Cars).

Lastly, I must examine whether the algorithm suggests that no relevant reference sets

exist in the repository. To test this feature, I collected 1,099 posts about boats from

Craigslist, called Craig’s Boats. Boats are similar enough to cars to make this task non-

trivial, because boats and cars are both made by Honda, for example, so that keyword

appears in both sets of posts. However, boats also differ from each of the reference sets

so that no reference set should be selected.

All three sets of posts are summarized in Table 2.5.

Table 2.5: Posts Set DescriptionsName Source Website Reference Set Match RecordsBFT Bidding For Travel www.biddingfortravel.com Hotels 1,125Craig’s Cars Craigslist Cars www.craigslist.org Cars, KBBCars 2,568Craig’s Boats Craigslist Boats www.craigslist.org 1,099

23

The first metric I test is the Jensen-Shannon distance (JSD) [39]. This information-

theoretic metric quantifies the difference in probability distributions between the tokens

in the reference set and those in the set of posts. Because JSD requires probability distri-

butions, I define the distributions as the likelihood of tokens occurring in each document.

Table 2.6 shows the results for choosing relevant reference sets using JSD. The ref-

erence set names in bold reflect those that are selected for the given set of posts. (This

means Craig’s Boats should have no bold names.) The scores in bold are the similarity

scores for the chosen reference sets. The percent difference in bold is the point at which

the algorithm breaks out and returns the appropriate reference sets. In particular, us-

ing JSD the algorithm successfully identifies the multiple cases of a single appropriate

reference set, multiple reference sets, or no reference set. Note that in all experiments I

maintain the percent-difference splitting-threshold at 0.6.

Table 2.6: Using Jensen-Shannon distance as similarity measureBFT Posts Craig’s Cars

Ref. Set Score % Diff. Ref. Set Score % DiffHotels 0.622 2.172 Cars 0.520 0.161Fodors 0.196 0.050 KBBCars 0.447 1.193Cars 0.187 0.248 Fodors 0.204 0.144KBBCars 0.150 0.101 Zagat 0.178 0.365Zagat 0.136 0.161 Hotels 0.131 0.153Comics 0.117 Comics 0.113Average 0.234 Average 0.266

Craig’s BoatsRef. Set Score % Diff.Cars 0.251 0.513Fodors 0.166 0.144KBBCars 0.145 0.088Comics 0.133 0.025Zagat 0.130 0.544Hotels 0.084Average 0.152

24

The next metric I test is cosine similarity using TF/IDF for the weights. These

results are shown in Table 2.7. Similarly to JSD, TF/IDF is able to identify all of the

cases correctly. However, in choosing both car reference sets for the Craig’s Cars posts,

TF/IDF incorrectly determines the internal ranking, placing KBBCars ahead of Cars.

This due to the IDF weights that are calculated for the reference sets. Although the

algorithm treats the set of posts and the reference set as a single document for comparison,

the IDF weights are based on individual records in the reference set. Therefore, since

Cars is a superset of KBBCars, certain tokens are weighted more in KBBCars than

in Cars, resulting in higher matching scores. These results also justify the need for a

double stopping criterion. It is not sufficient to only consider the percent difference as

an indicator of relative superiority amongst the reference sets. The scores must also be

compared to an average to ensure that the algorithm does not errantly choose a bad

reference set simply because it is relatively better than an even worse one. The last two

rows of the Craig’s Boats posts and the BFT Posts in Table 2.7 show this behavior.

I also tried a simpler bag-of-words metric that does not use any sort of token prob-

abilities or weights. Namely, I use the Jaccard similarity, which is defined as the tokens

in common divided by the union of the token sets. That is, given token set S and token

set T , Jaccard is:

Jaccard(S, T ) =S ∩ TS ∪ T

The results using the Jaccard similarity are shown in Table 2.8. One of the most

surprising aspects of these results is that the Jaccard similarity actually does pretty well.

It gets all but one of the cases correct. It is able to link the BFT Posts to the Hotels

25

Table 2.7: Using TF/IDF as the similarity measureBFT Posts Craig’s Cars

Ref. Set Score % Diff. Ref. Set Score % DiffHotels 0.500 1.743 KBBCars 0.122 0.239Fodors 0.182 0.318 Cars 0.099 1.129Comics 0.134 0.029 Zagat 0.046 0.045Zagat 0.134 0.330 Fodors 0.044 0.093Cars 0.100 1.893 Comics 0.041 0.442KBBCars 0.035 Hotels 0.028Average 0.182 Average 0.063

Craig’s BoatsRef. Set Score % Diff.Cars 0.200 0.189Comics 0.168 0.220Fodors 0.138 0.296Zagat 0.107 0.015KBBCars 0.105 0.866Hotels 0.056Average 0.129

set only and it is able to determine that no reference set is appropriate for the Craig’s

Boats posts. However, with the Craig’s Cars posts, it is only able to determine one of the

reference sets. It ranks the Fodors and Zagat restaurants ahead of KBBCars because the

restaurants have city names in common with some of the classified car listings. However,

it is unable to determine that city name tokens are not as important as car makes and

models. This is a problem if, for instance, the post is small and it contains a few more

city name tokens than car tokens.

Lastly, I investigate whether requiring that tokens match strictly, as in the above

metrics, is more useful than a soft matching technique that considers tokens matching

based on string similarities. To test this idea I use a modified version of TF/IDF where

tokens are considered a match when their Jaro-Winkler score is greater than 0.9. These

results are shown in Table 2.9. This metric is able to correctly retrieve the reference set

26

Table 2.8: Using Jaccard as the similarity measureBFT Posts Craig’s Cars

Ref. Set Score % Diff. Ref. Set Score % DiffHotels 0.272 1.489 Cars 0.207 1.339Comics 0.109 0.166 Fodors 0.088 0.126Fodors 0.094 0.004 Zagat 0.078 0.005Zagat 0.093 0.640 KBBCars 0.078 0.089Cars 0.057 0.520 Comics 0.072 2.536KBBCars 0.037 Hotels 0.020Average 0.110 Average 0.091

Craig’s BoatsRef. Set Score % Diff.Cars 0.129 0.366Comics 0.095 0.347Fodors 0.070 0.112Zagat 0.063 0.261KBBCars 0.050 1.917Hotels 0.017Average 0.071

for the BFT Posts, and for the Craig’s Boats posts this metric chooses no appropriate

reference set. However, for the Craig’s Boats case, this metric is close to returning many

incorrect reference sets since with the Zagat reference set the score is very close to the

average while the percent difference is huge. The largest failure is with the Craig’s Cars

posts. For this set of posts the ordering of the reference sets is correct because the Cars

and KBBCars have the highest scores, but no reference set is chosen because the percent

difference is never above the threshold with a similarity score above the average. The

percent differences are low because of the soft nature of the token matching. For example,

the Comics contains many matches because the issue number of the comics, such as #99,

#199, #299, etc. match an abbreviated car year in a post such as ’99. So, the similarity

scores between the cars and comics reference sets are close. From these sets of results

it becomes clear that it is better to have strict token matches, although they may be

27

less frequent. The strict nature of the matches ensures that the tokens particular to a

reference set are used for matches, which helps differentiate reference sets.

Table 2.9: Using Jaro-Winkler TF/IDF as the similarity measureBFT Posts Craig’s Cars

Ref. Set Score % Diff. Ref. Set Score % DiffHotels 0.593 1.232 Cars 0.699 0.174Fodors 0.266 0.173 KBBCars 0.595 0.174Zagat 0.227 0.110 Comics 0.505 0.050Cars 0.204 0.068 Fodors 0.481 0.107Comics 0.191 0.777 Zagat 0.435 0.948KBBCars 0.108 Hotels 0.223Average 0.265 Average 0.490

Craig’s BoatsRef. Set Score % Diff.Cars 0.469 0.096Comics 0.428 0.106Fodors 0.387 0.110KBBCars 0.349 0.082Zagat 0.322 1.212Hotels 0.146Average 0.350

The performance of each metric is shown in Table 2.10. This table shows each metric

I tested, and whether or not it correctly identified the reference set(s) for that set of

posts. In the case of Craig’s Boats, I consider it correct if no reference set is chosen. The

last column shows whether the method was able to also rank the chosen reference sets

correctly for the Craig’s Cars posts.

Table 2.10: A summary of each method choosing reference setsMethod BFT Craig’s Boats Craig’s Cars Craig’s Cars rank

JSD√ √ √ √

TF/IDF√ √ √

XJ-W TF/IDF

√ √X X

Jaccard√ √

X X

28

Based on these sets of results, I draw some conclusions about which metrics should be

used and why. Comparing the Jaccard similarity results to the success of both JSD and

TF/IDF, it is clear that it is necessary to include some notion of importance regarding

the matching tokens. The results argue that probability distributions of the tokens as

defined in JSD are a better metric, since TF/IDF can be overly sensitive, i.e., ignoring

tokens that may be important, even though they are frequent. This claim is also justified

by the fact that using JSD the algorithm does not run into the situation where it needs to

use the average stopping criterion, although it does with the TF/IDF metric. However,

since JSD and TF/IDF both do well, I can say that if a similarity metric can differentiate

important tokens from those that are not, then it can be used successfully in the algorithm

to choose reference sets. This is why I do not tie this algorithm to any particular metric,

since many could work.

Another interesting aspect of these results is the poor performance of TF/IDF using

the Jaro-Winkler modification. It seems that boosting the number of tokens that match

by using a less strict token matching method actually harms the ability to differentiate

between reference sets. This suggests that the tokens that define reference sets need to be

emphasized by matching them exactly. Lastly, across different domains and even across

different similarity metrics, the chosen threshold of 0.6 is appropriate for the cases where

the algorithm chooses the correct reference set. In all of the cases where the correct

reference set(s) is chosen, this threshold is exceeded.

29

2.4.2 Results: Matching posts to the reference set

Once the relevant reference sets are chosen, I use them to find the attributes in agreement

for the different sets of posts, since the algorithm will use these attributes during extrac-

tion. For the set of posts with reference sets (i.e., BFT and Craig’s Cars), I compare the

attributes in agreement found by the vector-space model to the attributes in agreement

for the true matches between the posts and the reference set. To evaluate the annotation,

I use the traditional measures of precision, recall, and F-measure (i.e., the harmonic mean

between precision and recall). A correct match occurs when the attributes match between

the true matches and the predictions of the vector-space model. As stated previously, I

try multiple modified token-similarity metrics to draw conclusions about what types of

metrics work and why.

Table 2.11 shows the results using the modified Dice similarity for the BFT and Craig’s

Cars posts, varying the Jaro-Winkler token-match threshold from 0.85 to 0.99. That is,

above this threshold two tokens will be considered a match for the modified Dice. In

both domains there is an improvement in F-measure as the threshold increases. This

is because more relevant tokens are being included when the threshold increases. If the

threshold is low then many irrelevant tokens are considered matches, so the algorithm

makes incorrect record level matches. For instance, as the threshold becomes low in the

Craig’s Cars domain, the results for the year attribute drop steeply because often the

difference in years is a single digit, which in turn yields errantly matched tokens for a

low threshold string similarity. Since errant matches are ignored (because they are not

“in agreement”) the scores are low. Note, however, that once the threshold becomes too

30

high, at 0.99, the results start to decrease. This happens because a very strict (high

value) edit-distance is too restrictive, so does not capture some of the possible matching

tokens that might be slightly misspelled.

The only attribute where the F-measure increases at 0.99 versus 0.95 is the year

attribute of the Craig’s Cars domain. In this case, the recall slightly increases at 0.99,

but the precisions are almost the same, yielding a higher F-measure for the matching

threshold of 0.99. This is due to more year values being “in agreement” with the higher

threshold since there will be less variation in terms of which reference set values can match

for this attribute, so those that do will likely be in agreement. For an example where

the threshold of 0.95 includes years that are not in agreement with high Jaro-Winkler

scores, consider a post with the token “19964” which might be a price or a year. If the

reference set record’s year attribute is “1994,” the Jaro-Winkler score between “19964”

and “1994” is 0.953. If the reference set record’s year is “1996” the Jaro-Winkler score is

0.96. In both cases, a threshold of 0.95 includes both years, so if this post matches two

reference set records with the same make, model and trim, but differing years of 1994 and

1996, then the year is discarded because it is not in agreement. We almost see the same

behavior with the trim attribute as well. This is because with both of these attributes, a

single difference in a character, say “LX” versus “DX” for a trim (or a digit for the year)

yields a completely different attribute, which can then become not “in agreement.”

Table 2.12 shows the results using the modified Jaccard similarity. As with the Dice

similarity, the modification is such that two tokens are put into the intersection of the

Jaccard similarity if their Jaro-Winkler score is above the threshold. The most striking

31

Table 2.11: Annotation results using modified Dice similarityBFT posts

Threshold Attribute Recall Precision F-Measure0.85 Hotel name 76.46 78.13 77.29

Star rating 80.74 77.86 79.27Local area 88.04 85.46 86.73

0.9 Hotel name 88.23 88.49 88.36Star rating 91.73 87.64 89.64Local area 93.09 89.36 91.19

0.95 Hotel name 88.42 88.51 88.47Star rating 92.32 87.79 90.00Local area 93.97 89.44 91.65

0.99 Hotel name 87.84 88.36 88.10Star rating 92.02 87.76 89.84Local area 93.39 89.39 91.34

Craig’s Cars postsThreshold Attribute Recall Precision F-Measure0.85 make 81.57 77.64 79.55

model 57.61 61.15 59.33trim 38.76 29.80 33.70year 2.38 8.14 3.68

0.9 make 88.41 82.82 85.52model 76.28 77.16 76.72trim 65.57 48.12 55.51year 69.45 82.88 75.58

0.95 make 93.96 86.35 89.99model 82.62 81.35 81.98trim 71.62 51.95 60.22year 78.86 91.01 84.50

0.99 make 93.51 86.33 89.78model 81.29 81.25 81.27trim 71.75 51.85 60.20year 79.14 90.94 84.63

result is that the scores match exactly to those using the Dice similarity. The Dice sim-

ilarity and Jaccard similarity can be used interchangeably. Further investigation reveals

that the actual similarity scores between the posts and their reference set matches are

different, which should be the case, but the resulting attributes that are “in agreement”

are the same using either metric. Therefore, they yield the same annotation from the

matches.

32

Table 2.12: Annotation results using modified Jaccard similarityBFT posts

Threshold Attribute Recall Precision F-Measure0.85 Hotel name 76.46 78.13 77.29

Star rating 80.74 77.86 79.27Local area 88.04 85.46 86.73

0.9 Hotel name 88.23 88.49 88.36Star rating 91.73 87.64 89.64Local area 93.09 89.36 91.19

0.95 Hotel name 88.42 88.51 88.47Star rating 92.32 87.79 90.00Local area 93.97 89.44 91.65

0.99 Hotel name 87.84 88.36 88.10Star rating 92.02 87.76 89.84Local area 93.39 89.39 91.34

Craig’s Cars postsThreshold Attribute Recall Precision F-Measure0.85 make 81.57 77.64 79.55

model 57.61 61.15 59.33trim 38.76 29.80 33.70year 2.38 8.14 3.68

0.9 make 88.41 82.82 85.52model 76.28 77.16 76.72trim 65.57 48.12 55.51year 69.45 82.88 75.58

0.95 make 93.96 86.35 89.99model 82.62 81.35 81.98trim 71.62 51.95 60.22year 78.86 91.01 84.50

0.99 make 93.51 86.33 89.78model 81.29 81.25 81.27trim 71.75 51.85 60.20year 79.14 90.94 84.63

Table 2.13 shows results using the Jaro-Winkler TF/IDF similarity measure. Similarly

to the other metrics, for the BFT domain as the threshold increases so does the F-measure,

until the threshold peaks at 0.95 after which it decreases in accuracy. However, with the

Craig’s Cars domain the modified TF/IDF performs the best with a threshold of 0.99.

From these results, across all metrics, a threshold of 0.95 performs the best for the

BFT domain. In the Cars domain, the 0.95 threshold works best for the modified Dice

and Jaccard, and at this threshold both methods outperform the TF/IDF metric, even

33

when its threshold is at its best at 0.99. Therefore, the most meaningful comparisons

between the different metrics can be made at the threshold 0.95. In the BFT domain, the

modified TF/IDF outperforms the Dice and Jaccard metrics, until this threshold of 0.95.

At this threshold level, the Jaccard and Dice metrics outperform the TF/IDF metric on

the two harder attributes, the hotel name and the hotel area. In the Cars domain, the

TF/IDF metric is outperformed at every threshold level, except on the year attribute.

Interestingly, at the lowest threshold levels TF/IDF performs terribly because the tokens

that match in the computation have very low IDF scores since they match so many other

tokens in the corpus, resulting in very low TF/IDF scores. If the scores are low, then

many records will be returned and almost no attributes will ever be in agreement, yielding

very few correct annotations.

These three sets of results allows me to draw some conclusions about the utility of

different metrics for the vector-space matching task. The biggest difference between the

TF/IDF metric and the other two is that the TF/IDF metric uses term weights computed

from the set of tokens in the reference set. The key insight of IDF weights are their ability

to discern meaningful tokens from non-meaningful ones based on the inter-document

frequency. The assumption is that more meaningful tokens occur less frequently. However,

almost all tokens in a reference set are meaningful, and it is sometimes the case that very

meaningful tokens in a reference set occur very often. The most glaring instances of this

occur with the make and year attributes in the Cars reference set used for the Craig’s

Cars posts. Makes such as “Honda” occur quite frequently in the data set, and given

that for 20,076 car records the years only range from 1990 to 2005, the re-occurrence

of the same year tokens are very, very frequent. These attributes will be deemphasized

34

Table 2.13: Annotation results using modified TF/IDF similarityBFT posts

Threshold Attribute Recall Precision F-Measure0.85 Hotel name 85.89 78.91 82.25

Star rating 92.32 84.81 88.40Local area 94.55 86.86 90.54

0.9 Hotel name 90.76 83.83 87.16Star rating 95.33 88.05 91.55Local area 94.36 87.15 90.61

0.95 Hotel name 90.47 83.63 86.92Star rating 97.18 89.84 93.36Local area 94.55 87.41 90.84

0.99 Hotel name 89.69 82.91 86.17Star rating 96.89 89.57 93.08Local area 93.68 86.60 90.00

Craig’s Cars postsThreshold Attribute Recall Precision F-Measure0.85 make 51.14 44.51 47.60

model 41.93 35.54 38.47trim 43.10 15.50 22.80year 35.58 29.18 32.06

0.9 make 67.07 58.53 62.51model 61.79 52.48 56.76trim 67.81 22.90 34.24year 58.48 48.24 52.87

0.95 make 88.55 77.30 82.54model 84.55 71.84 77.68trim 81.21 26.86 40.37year 76.29 62.78 68.88

0.99 make 88.90 77.65 82.90model 84.74 72.02 77.86trim 80.29 26.52 39.87year 76.67 63.12 69.24

significantly because of their frequency. If the matching token metric ignores the year,

this attribute will often not be in agreement since multiple records of the same car for

different years will be returned. Thus, TF/IDF has low scores for the year attribute.

TF/IDF also creates problems by overemphasizing unimportant tokens that occur rarely.

Consider the following post, “Near New Ford Expedition XLT 4WD with Brand New 22

Wheels!!! (Redwood City - Sale This Weekend !!!) $26850” which TF/IDF matches to

the reference set record

35

{VOLKSWAGEN, JETTA, 4 Dr City Sedan, 1995}. In this case, the very rare token

“City” causes an errant match because it is weighted so heavily. In the case of the

BFT posts, since the Hotel reference set has few commonly occurring tokens amongst a

small set of records, this phenomena is not as observable. Since weights, whether based

on TF/IDF or probabilities, rely on frequencies, such an issue will likely occur in most

matching methods that rely on the frequencies of tokens to determine their importance.

Therefore, I draw the following conclusions. In the matching step, an edit-distance

should be used to make soft matches between the tokens of the post and the reference set

records. If the Jaro-Winkler metric is used, the threshold should be set to 0.95, since that

yields the highest improvement using the best metrics. Lastly, and most importantly,

reference sets do not adhere to the assumptions made by weighting schemes, so only

metrics that do not use such schemes, such as the Dice and Jaccard similarities, should

be used, rather than TF/IDF.

Earlier I stated that the Jaro-Winkler metric emphasizes matching proper nouns,

rather than more common words, because it considers the prefix of words to be an im-

portant indicator of matching. This is in contrast to traditional edit distances that define

a transformation over a whole string, which are better for generic words where the prefix

might not indicate matching. Table 2.14 compares using Dice similarity modified with the

Jaro-Winkler metric to Dice modified with the Smith-Waterman distance [56]. (Smith-

Waterman is a classic edit distance originally developed to align DNA sequences.) As

with the Jaro-Winkler score, if two tokens have a Smith-Waterman distance above 0.95

they are considered a match in the modified Dice similarity. As the table shows, the

Jaro-Winkler Dice score outperforms the Smith-Waterman variant. Since many of the

36

reference set attributes are proper nouns, the Jaro-Winkler is better suited for matching,

which is especially apparent when using the Cars reference set.

Table 2.14: Modified Dice using Jaro-Winkler versus Smith-WatermanBFT posts

Method Attribute Recall Precision F-MeasureJaro-Winkler Hotel name 88.42 88.51 88.47

Star rating 92.32 87.79 90.00Local area 93.97 89.44 91.65

Smith-Waterman Hotel name 67.80 69.56 68.67Star rating 75.39 73.88 74.63Local area 84.34 81.72 83.01

Craig’s Cars postsMethod Attribute Recall Precision F-MeasureJaro-Winkler make 93.96 86.35 89.99

model 82.62 81.35 81.98trim 71.62 51.95 60.22year 78.86 91.01 84.50

Smith-Waterman make 24.12 27.18 25.56model 14.71 18.82 16.52trim 11.17 5.81 7.64year 29.17 52.30 37.45

2.4.3 Results: Extraction using reference sets

The main research exercise of the this thesis is information extraction from unstructured,

ungrammatical text. Therefore, Table 2.15 shows our “field-level” results for performing

information extraction exploiting the attributes in agreement. Field-level extractions

are counted as correct only if all tokens that compromise that field in the post are

correctly labeled. Although this is a much stricter rubric of correctness (versus token-

level correctness), it more accurately models how useful an extraction system would be.

The results from my system are presented as ARX 2

I compare ARX’s results to three systems that rely on supervised machine learn-

ing for extraction. I compare against two different Conditional Random Field (CRF)2Automatic Reference-set based eXtraction

37

[32] extractors built using MALLET [42], since CRFs are a comparison against both

state-of-the-art extraction and methods that rely on structure. The first method, called

“CRF-Orth” includes only orthographic features, such as whether a token is capitalized

or contains punctuation. The second CRF method, called “CRF-Win” contains ortho-

graphic features and sliding window features such that it considers both the two-tokens

preceding the current token, and two-tokens afterward for labeling. CRF-Win in partic-

ular will demonstrate how the unstructured nature of posts makes extraction difficult. I

trained these extractors using 10% of the labeled posts for training, since this is small

enough amount to make it a fair comparison to the automatic method, but is also large

enough such that the extractor can learn well enough.

I also compare ARX to Amilcare [14], which relies shallow NLP parsing for extraction.

Amilcare also provides a good comparison because it can be supplied with “gazetteers”

(lists of nouns) to aid extraction, so it was provided with the unique set of attribute

values (such as Hotel areas) for each attribute. Note, however, that Amilcare was trained

using 30% of the labeled posts for training so that it could learn a good model (however,

even this large amount of training did not seem to help as it only outperformed ARX on

two attributes). All supervised methods are run 10 times, and the results reported are

averages.

Although the results are not directly comparable since I compare systems that require

labeled data (supervised machine learning) to ARX, they still point to the fact that ARX

performs very well. Although the ARX system is completely automatic from selecting

its own reference set all the way through to extraction, it still performs the best on 5/7