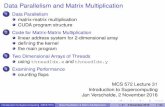

A probabilistic corpus-based model of syntactic parallelism

-

Upload

amit-dubey -

Category

Documents

-

view

224 -

download

2

Transcript of A probabilistic corpus-based model of syntactic parallelism

Cognition 109 (2008) 326–344

Contents lists available at ScienceDirect

Cognition

journal homepage: www.elsevier.com/locate/COGNIT

A probabilistic corpus-based model of syntactic parallelism

Amit Dubey a, Frank Keller a,*, Patrick Sturt b,1

a School of Informatics, University of Edinburgh, 10 Crichton Street, Edinburgh EH8 9AB, UK

b Department of Psychology, School of Philosophy, Psychology and Language Sciences, University of Edinburgh, 7 George Square, Edinburgh EH8 9JZ, UK

Article info

Article history: Received 30 January 2007; Revised 19 September 2008; Accepted 26 September 2008

Keywords: Syntactic priming; Syntactic parallelism; Cognitive architectures; Probabilistic grammars; Corpora

0010-0277/$ - see front matter © 2008 Elsevier B.V. All rights reserved.
doi:10.1016/j.cognition.2008.09.006

* Corresponding author. Tel.: +44 131 650 4407; fax: +44 131 650 6626.
E-mail addresses: [email protected] (A. Dubey), frank.keller@ed.ac.uk (F. Keller), [email protected] (P. Sturt).
1 Tel.: +44 131 651 1712; fax: +44 131 650 3461.

Abstract

Work in experimental psycholinguistics has shown that the processing of coordinate structures is facilitated when the two conjuncts share the same syntactic structure [Frazier, L., Munn, A., & Clifton, C. (2000). Processing coordinate structures. Journal of Psycholinguistic Research, 29(4) 343–370]. In the present paper, we argue that this parallelism effect is a specific case of the more general phenomenon of syntactic priming, the tendency to repeat recently used syntactic structures. We show that there is a significant tendency for structural repetition in corpora, and that this tendency is not limited to syntactic environments involving coordination, though it is greater in these environments. We present two different implementations of a syntactic priming mechanism in a probabilistic parsing model and test their predictions against experimental data on NP parallelism in English. Based on these results, we argue that a general purpose priming mechanism is preferred over a special mechanism limited to coordination. Finally, we show how notions of activation and decay from ACT-R can be incorporated in the model, enabling it to account for a set of experimental data on sentential parallelism in German.

© 2008 Elsevier B.V. All rights reserved.

1. Introduction

Over the last two decades, the psycholinguistic literature has provided a wealth of experimental evidence for syntactic priming, an effect in which processing facilitation is observed when syntactic structures are re-used. Most work on syntactic priming has been concerned with sentence production (e.g., Bock, 1986; Pickering & Branigan, 1998). Such studies typically show that people prefer to produce sentences using syntactic structures that have recently been processed.

There has been less experimental work investigating the effect of syntactic priming on language comprehension. Work on comprehension priming has shown that, under certain conditions, the processing of a target sentence is faster when that target sentence includes a structure repeated from the prime. Branigan, Pickering, Liversedge, Stewart, and Urbach (1995) showed this effect in whole-sentence reading times for garden path sentences, while Ledoux, Traxler, and Swaab (2007) have more recently shown similar effects using Event Related Potentials. Moreover, work using a picture matching paradigm (Branigan, Pickering, & McLean, 2005) has shown evidence for the priming of prepositional phrase attachments.

A phenomenon closely related to syntactic priming in comprehension is the so-called parallelism effect demonstrated by Frazier, Munn, and Clifton (2000): speakers process coordinated structures more quickly when the second conjunct repeats the syntactic structure of the first conjunct. The parallelism preference in NP coordination can be illustrated using Frazier et al.'s (2000) Experiment 3, which recorded subjects' eye-movements while they read sentences like (1):

(1) a. Terry wrote a long novel and a short poem during her sabbatical.

b. Terry wrote a novel and a short poem during her sabbatical.


Total reading times for the underlined region were faster in (1a), where short poem is coordinated with a syntactically parallel noun phrase (a long novel), compared to (1b), where it is coordinated with a syntactically non-parallel phrase.

In this paper, we will contrast two alternative accounts of the parallelism effect. According to one account, the parallelism effect is simply an instance of a pervasive syntactic priming mechanism in human parsing. This priming account predicts that parallelism effects should be obtainable even in the absence of syntactic environments involving coordination.

According to the alternative account, the parallelism effect is due to a specialized copy mechanism, which is applied in coordination and related environments. One such mechanism is copy-α, proposed by Frazier and Clifton (2001). Unlike priming, this mechanism is highly specialized and only applies in certain syntactic contexts involving coordination. When the second conjunct is encountered, instead of building new structure, the language processor simply copies the structure of the first conjunct to provide a template into which the input of the second conjunct is mapped. Frazier and Clifton (2001) originally intended copy-α to apply in a highly restricted range of contexts, particularly in cases where the scope of the left conjunct is unambiguously marked, for example gapping structures, where parallelism effects are well documented (Carlson, 2002). However, it is clear that a mechanism like copy-α could potentially provide an account for parallelism phenomena if allowed to apply to coordination more generally. This is because in a parallel coordination environment, the linguistic input of the second conjunct matches the copied structure, while in a non-parallel case it does not, yielding faster reading times for parallel structures. If the copying account is correct, then we would expect parallelism effects to be restricted to coordinate structures and not to arise in other contexts.

There is some experimental evidence that bears on the issues outlined above. In addition to testing items involving coordination like (1) above, Frazier et al. (2000) also report an experiment in which they manipulated the syntactic parallelism of two noun phrases separated by a verb (as in a (strange) man noticed a tall woman). Unlike the coordination case in (1), no parallelism advantage was observed in this experiment. Taken together, the two experiments appear to favor an account in which parallelism effects are indeed restricted to coordination, as would be predicted by a model based on a copy mechanism. However, the results should be interpreted with caution, because the coordination experiment, which showed the parallelism effect (Frazier et al.'s (2000) Experiment 3), used a very sensitive eye-tracking technique, while the non-coordination experiment, which showed no such effect (Frazier et al.'s (2000) Experiment 4), used the less sensitive technique of self-paced reading.

Apel, Crocker, and Knöferle (2007) described two experiments in German, which had a similar design to the Frazier et al. (2000) experiments summarized above. Like Frazier et al. (2000), they found evidence for parallelism when the two relevant noun phrases were coordinated (their Experiment 1), but not when they were subject and object of the same verb (their Experiment 2). However, although both of Apel et al.'s (2007) experiments used eye-tracking, their conclusion relies on a cross-experiment comparison. Moreover, the non-coordinating contexts considered by both Frazier et al. (2000) and Apel et al. (2007) used sentences in which the relevant noun phrases differed in grammatical function (e.g., a (strange) man noticed a tall woman), while two coordinated phrases share the same grammatical function by definition. This may have affected the size of the parallelism effect.

The aim of the present paper is to compare the two accounts outlined above using a series of corpus studies and computational simulations. The basis for our modeling studies is a probabilistic parser similar to those proposed by Jurafsky (1996) and Crocker and Brants (2000). We integrate both the priming account and the copying account of parallelism into this parser, and then evaluate the predictions of the resulting models against reading time patterns such as those obtained by Frazier et al. (2000). Apart from accounting for the parallelism effect, our model simulates two important aspects of human parsing: (i) it is broad-coverage (rather than only covering specific experimental items) and (ii) it processes sentences incrementally.

This paper is structured as follows. In Section 2, we provide evidence for parallelism effects in corpus data. We first explain how we measure parallelism, and then present two corpus studies that demonstrate the existence of a parallelism effect in coordinate structures and in non-coordinate structures, both within and between sentences. These corpus results are a crucial prerequisite for our modeling effort, as the probabilistic parsing model that we present is trained on corpus data. Such a model is only able to exhibit a parallelism preference if such a preference exists in its training data, i.e., the syntactically annotated corpora we explore in Section 2.

In Section 3, we present probabilistic models that are designed to account for the parallelism effect. We first present a formalization of the priming and copying accounts of parallelism and integrate them into an incremental probabilistic parser. We then evaluate this parser against reading time patterns in Frazier et al.'s (2000) parallelism experiments. Based on a consideration of the role of distance in priming, we then develop a more cognitively plausible parallelism model in Section 4, inspired by Anderson et al.'s (2004) ACT-R framework. This model is again evaluated against the experimental items of Frazier et al. (2000). To show the generality of the model across languages and syntactic structures, we also test this new model against the experimental items of Knoeferle and Crocker (2006), which cover parallelism in sentential coordination in German. We conclude with a general discussion in Section 5.

2. Corpus studies

2.1. Adaptation

Psycholinguistic studies have shown that priming affects both production (Bock, 1986) and comprehension (Branigan et al., 2005). The importance of comprehension priming at the lexical level has also been noted by the speech recognition community (Kuhn & Mori, 1990), who use so-called caching language models to improve the performance of speech comprehension software. The concept of caching language models is quite simple: a cache of recently seen words is maintained, and the probability of words in the cache is higher than those outside the cache.
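To make the caching idea concrete, the following is a minimal sketch, not the model of Kuhn and Mori (1990). It assumes a static unigram distribution that is linearly interpolated with relative frequencies in a fixed-size cache; the class name, the cache size, and the interpolation weight are illustrative assumptions.

```python
from collections import Counter, deque


class CacheLanguageModel:
    """Unigram model interpolated with a cache of recently seen words (illustrative)."""

    def __init__(self, base_probs, cache_size=200, cache_weight=0.2):
        self.base_probs = base_probs            # static unigram probabilities
        self.cache = deque(maxlen=cache_size)   # most recent words, oldest first
        self.counts = Counter()                 # word counts inside the cache
        self.cache_weight = cache_weight        # hypothetical interpolation weight

    def prob(self, word):
        base = self.base_probs.get(word, 0.0)
        if not self.cache:
            return base
        cached = self.counts[word] / len(self.cache)
        return (1 - self.cache_weight) * base + self.cache_weight * cached

    def observe(self, word):
        # When the cache is full, the oldest word is about to be evicted.
        if len(self.cache) == self.cache.maxlen:
            self.counts[self.cache[0]] -= 1
        self.cache.append(word)
        self.counts[word] += 1
```

Under this scheme a word that has just been observed receives a higher probability than it would from the static distribution alone, which is the lexical analogue of priming discussed in the text.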

While the performance of caching language models is judged by their success in improving speech recognition accuracy, it is also possible to use an abstract measure to diagnose their efficacy more closely. Church (2000) introduces such a diagnostic for lexical priming: adaptation probabilities. Adaptation probabilities provide a method to separate the general problem of priming from a particular implementation (i.e., caching models). They measure the amount of priming that occurs for a given construction, and therefore provide an upper limit for the performance of models such as caching models.

Adaptation is based upon three concepts. First is the prior, which serves as a baseline. The prior measures the probability of a word appearing, ignoring the presence or absence of a prime. Second is the positive adaptation, which is the probability of a word appearing given that it has been primed. Third is the negative adaptation, the probability of a word appearing given it has not been primed.

In Church's case, the prior and adaptation probabilities are defined as follows. If a corpus is divided into individual documents, then each document is split in half. We refer to the first half as the prime (half) and to the second half as the target (half).² If $p$ is a random variable denoting the appearance of a word in the prime half, and $s$ is a random variable denoting the appearance of a word $w$ in the target half, then we define the prior probability $P_{\mathrm{Prior}}(w)$ as

$$P_{\mathrm{Prior}}(w) = P(s = w) = P(s = w \mid p = w)\,P(p = w) + P(s = w \mid p \neq w)\,P(p \neq w) \tag{1}$$

Intuitively, $P_{\mathrm{Prior}}(w)$ is the probability that $w$ occurs in the target, independently of whether it has occurred in the prime. As indicated in Eq. (1), this can be computed by summing the relevant conditional probabilities: the probability that $w$ occurs in the target given that it has occurred in the prime, and the probability that $w$ occurs in the target given that it has not occurred in the prime. According to the rule of total probability, each conditional probability has to be multiplied by the independent probability of the conditioning variable ($P(p = w)$ and $P(p \neq w)$, respectively).

The positive adaptation probability $P_{+}(w)$ and the negative adaptation $P_{-}(w)$ can then be defined as follows:

$$P_{+}(w) = P(s = w \mid p = w) \tag{2}$$

$$P_{-}(w) = P(s = w \mid p \neq w) \tag{3}$$

In other words, $P_{+}(w)$ is the conditional probability that the word $w$ occurs in the target, given that it also occurred in the prime. Conversely, $P_{-}(w)$ is the probability that $w$ occurs in the target, given that it did not occur in the prime.

² Our terminology differs from that of Church, who uses 'history' to describe the first half, and 'test' to describe the second. Our terms avoid the ambiguity of the phrase 'test set' and coincide with the common usage in the psycholinguistic literature.

In the case of lexical priming, Church observes that $P_{+} \gg P_{\mathrm{Prior}} > P_{-}$. In fact, even in cases when $P_{\mathrm{Prior}}$ is quite small, $P_{+}$ may be higher than 0.8. Intuitively, a positive adaptation which is higher than the prior entails that a word is likely to reappear in the target given that it has already appeared in the prime. In order to obtain corpus evidence for priming, we need to demonstrate that the adaptation probabilities for syntactic constructions behave similarly to those for lexical items, showing positive adaptation $P_{+}$ greater than the prior. As $P_{-}$ must become smaller than $P_{\mathrm{Prior}}$ whenever $P_{+}$ is larger than $P_{\mathrm{Prior}}$, we only report the positive adaptation $P_{+}$ and the prior $P_{\mathrm{Prior}}$.
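As an illustration of Eqs. (1)–(3), the sketch below estimates the three quantities for a single word by simple counting over documents split into a prime half and a target half. It is a hedged toy example: the function name and the representation of documents as token lists are assumptions, and the estimates are plain relative frequencies.

```python
def adaptation_probabilities(documents, word):
    """Return (prior, positive, negative) adaptation estimates for `word`.

    `documents` is a list of token lists; each is split into a prime half
    and a target half, and only presence or absence of `word` is recorded.
    """
    n_docs = in_prime = both = target_only = 0
    for tokens in documents:
        mid = len(tokens) // 2
        prime, target = set(tokens[:mid]), set(tokens[mid:])
        n_docs += 1
        if word in prime:
            in_prime += 1
            both += word in target          # primed and repeated
        else:
            target_only += word in target   # appears without a prime
    not_in_prime = n_docs - in_prime
    positive = both / in_prime if in_prime else float("nan")               # Eq. (2)
    negative = target_only / not_in_prime if not_in_prime else float("nan")  # Eq. (3)
    prior = (both + target_only) / n_docs                                  # Eq. (1)
    return prior, positive, negative
```

With these relative-frequency estimates, the prior equals the weighted sum of the positive and negative adaptation, as required by the rule of total probability in Eq. (1).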

2.2. Estimation

There are several methods available for estimating the prior and adaptation probabilities from corpora. The most straightforward approach is to compute the maximum likelihood empirical distribution, which can be achieved by simply counting the number of times a word $w$ occurs (or fails to occur) in the prime and target positions in the corpus. However, this approach has two shortcomings which preclude us from using it here.

First, the existing literature on priming in corpora (e.g., Gries, 2005; Szmrecsanyi, 2005; Reitter, Moore, & Keller, 2006; Jaeger, 2006a; Jaeger, 2006b; Jaeger & Snider, 2007) reports that a variety of factors can influence priming, including the distance between prime and target, the type and genre of the corpus, and whether prime and target are uttered by the same speaker. Previous work has used multiple regression methods to study priming in corpora; this approach is particularly useful when several factors are confounded, as regression makes it possible to quantify the relative contribution of each factor.

A second argument against a simple maximum likelihood approach emerges when carrying out statistical significance tests based on the word counts obtained from the corpus. Such tests often require that the occurrence of a word $w_i$ is independent of the occurrence of another word $w_j$. However, this independence assumption is trivially false in our case: if $w_i$ occurs in a certain context, then we know that $w_j$ does not occur in that context. This implies that $P(s = w \mid p = w_i)$ and $P(s = w \mid p = w_j)$ are not statistically independent (if $w_i$ and $w_j$ are in the same context); therefore, we are not able to apply independent statistical tests to these two probabilities (or the underlying corpus counts).

Both these shortcomings can be overcome by using multinomial logistic regression to estimate prior and adaptation probabilities. In multinomial logistic regression, a response variable is defined as a function of a set of predictor variables. The response variable is multinomial, i.e., it is drawn from a set of discrete categories (in contrast to binary logistic regression, where the response variable can take on only two different values). The predictor variables can either be categorical or continuous. In the case of measuring Church-like lexical adaptation, each possible word corresponds to a category, and the response variable describes the occurrence of word $w_j$ in the target position, while the predictor variable describes the occurrence of word $w_i$ in the prime position. In the priming case, $w_i = w_j$; in the non-priming case, $w_i \neq w_j$.

A multinomial logistic regression model includes a parameter vector $b$ for each predictor variable. In the case of lexical adaptation, this means that there is a parameter $b_i$ for each word $w_i$ which may occur in the priming position. One of the values of the predictor variable (the value $w_0$) serves as the reference category, which is assumed to have a parameter estimate of 0. After parameter fitting, we have an estimated value $b$ for the parameter vector, and the positive adaptation probability can then be computed according to the following formula:

$$\hat{P}_{+}(s = w_i) = \frac{\exp(X b_i)}{1 + \sum_{j} \exp(X b_j)} \tag{4}$$

Here, $X$ is a vector in which the $i$-th element takes on the value 1 if $p = w_i$, and the value 0 otherwise. Note in particular that we can straightforwardly include predictor variables other than the prime word in our regression model; technically, this corresponds to adding extra parameters to $b$ and their corresponding explanatory variables to $X$; mathematically, this amounts to computing the conditional probability $P(s = w_i \mid p = w_i, r)$, where $r$ is an additional predictor variable.

The negative adaptation is estimated in the same way as the positive adaptation. The prior is estimated as follows, based on the definition in Eq. (1):

$$\hat{P}_{\mathrm{Prior}}(w_i) = \hat{P}_{+}(s = w_i)\,\hat{P}(p = w_i) + \hat{P}_{-}(s = w_i)\,\hat{P}(p \neq w_i) \tag{5}$$

where $\hat{P}(p = w_i)$ and $\hat{P}(p \neq w_i)$ can be estimated directly from the corpus using maximum likelihood estimation, and the positive and negative adaptation terms are estimated using the regression coefficients.
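The computation in Eqs. (4) and (5) can be sketched as follows. This is an illustrative reading rather than the authors' code: it assumes that each non-reference response category has its own coefficient vector, that the reference category has a fixed score of 0, and that the coefficients have already been fitted elsewhere. All names are hypothetical.

```python
import math


def multinomial_probs(x, coefs):
    """Eq. (4)-style probabilities for every response category.

    x     -- predictor vector (e.g. one-hot encoding of the prime)
    coefs -- dict mapping each non-reference category to its coefficient
             vector (same length as x); values here are assumed, not fitted
    """
    scores = {cat: math.exp(sum(xk * bk for xk, bk in zip(x, b)))
              for cat, b in coefs.items()}
    denom = 1.0 + sum(scores.values())   # the 1 is exp(0) for the reference category
    probs = {cat: s / denom for cat, s in scores.items()}
    probs["<reference>"] = 1.0 / denom
    return probs


def prior_from_adaptation(p_pos, p_neg, p_prime):
    """Eq. (5): combine positive and negative adaptation by total probability."""
    return p_pos * p_prime + p_neg * (1.0 - p_prime)
```

For example, with a positive adaptation of 0.8, a negative adaptation of 0.1, and a prime probability of 0.25, `prior_from_adaptation` returns 0.8 × 0.25 + 0.1 × 0.75.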

Multinomial logistic regression makes it possible to compute prior and adaptation probabilities while solving both of the problems with maximum likelihood estimation noted above. First, we can freely include additional predictor variables if we want to determine the influence of possible confounding variables on priming. Second, statistical significance tests can now be performed for each predictor variable in the regression, without requiring independence between the categories that a variable can take.

There is one potentially confounding variable in Studies 1 and 2 that we need to pay particular attention to. This is the general tendency of speakers to order syntactic phrases such that short phrases precede long phrases (Hawkins, 1994). This short-before-long preference, sometimes known as Behagel's law, is also attested in corpus data on coordination (Levy, 2002). The short-before-long preference can potentially amplify the parallelism effect. For example, consider a case in which the first conjunct consists of a relatively long phrase. Here, Behagel's law would predict that the second conjunct should also be long (in fact, it should be even longer than the first). Assuming that long constituents tend to be generated by a certain specific set of rules, this would mean that the two conjuncts would have an above-chance tendency to be parallel in structure, and this tendency could be attributable to Behagel's law alone.

Studies 1 and 2 have the aim of validating our approach, and laying the ground for our modeling work in Studies 3–5. We will use Church adaptation probabilities estimated using multinomial regression to investigate parallelism effects within coordination (Study 1) and outside coordination (Study 2), both within sentences and between sentences. It is important to show that adaptation effects exist in corpus data before we can build a model that learns the adaptation of syntactic rules from corpus frequencies. Our studies build on previous corpus-based work which demonstrated parallelism in coordination (Levy, 2002; Cooper & Hale, 2005), as well as between-sentence priming effects. Gries (2005), Szmrecsanyi (2005), Jaeger (2006a, 2006b) and Jaeger and Snider (2007) investigated priming in corpora for cases of structural choice (e.g., between a dative object and a PP object or between active and passive constructions). These results have been generalized by Reitter et al. (2006), who showed that arbitrary rules in a corpus can be subject to priming.

2.3. Study 1: parallelism in coordination

In this section, we test the hypothesis that coordinated noun phrases in a corpus are more likely to be structurally parallel than we would expect by chance. We show how Church's adaptation probabilities, as defined in the previous section, can be used to measure syntactic parallelism in coordinate structures. We restrict our study to the constructions used in Frazier et al.'s (2000) experiments, all of which involve two coordinated NPs. This ensures that a direct comparison between our corpus results and the experimental findings is possible.

2.3.1. Method

This study was carried out on the English Penn Treebank (Release 2, annotated in Treebank II style; Marcus et al., 1994), a collection of documents which have been annotated with parse trees by automatically parsing and then manually correcting the parses. This treebank comprises multiple parts drawn from distinct text types. To ensure that our results are not limited to a particular genre, we used two parts of the treebank: the Wall Street Journal (WSJ) corpus of newspaper text and the Brown corpus of written text balanced across genres. In both cases, we used the entire corpus for our experiments.

In the Penn Treebank, NP coordination is annotated using the rule NP → NP1 CC NP2, where CC represents a coordinator such as and. The application of the adaptation metric introduced in Section 2.1 to such a rule is straightforward: we pick NP1 as the prime $p$ and NP2 as the target $s$. We restrict our investigation to the following syntactic rules:

SBAR: An NP with a relative clause, i.e., NP → NP SBAR.

PP: An NP with a PP modifier, i.e., NP → NP PP.

N: An NP with a single noun, i.e., NP → N.

Det N: An NP with a determiner and a noun, i.e., NP → Det N.

Det Adj N: An NP with a determiner, an adjective, and a noun, i.e., NP → Det Adj N.

[Fig. 1. Prior, adaptation, and short-before-long prior and adaptation for coordinate structures in the Brown corpus. Bar chart; x-axis: N, Det N, Det Adj N, PP, SBAR; y-axis: Probability (0 to 1); series: Prior, Adapt, Short Prior, Short Adapt.]

Our study focuses on NP → Det Adj N and NP → Det N as these are the rules used in the items of Frazier et al. (2000); NP → N is a more frequent variant; NP → NP PP and NP → NP SBAR were added as they are the two most common NP rules that include non-terminals on the right-hand side.

To count the relative number of occurrences of each prime and target pair, we iterate through each parsed sentence in the corpus. Each time the expansion NP → NP1 CC NP2 occurs in a tree, we check if one (or both) of the NP daughters of this expansion matches one of the five rules listed above. Each of these five rules constitutes a category of the response and predictor variables in the multinomial logistic regression (see Section 2.2); we also use an additional category 'other' that comprises all other rules. This category serves as the reference category for the regression.
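The counting procedure just described can be sketched on bracketed trees as follows. This is a simplified illustration under stated assumptions: the small parser, the function names, and the abstract labels Det, Adj, and N are hypothetical conveniences (the Penn Treebank itself uses part-of-speech tags such as DT, JJ, and NN, which would have to be mapped onto these categories).

```python
import re
from collections import Counter

# Right-hand sides of the five NP rules; everything else is 'other'.
RULES = {("NP", "SBAR"): "SBAR", ("NP", "PP"): "PP", ("N",): "N",
         ("Det", "N"): "Det N", ("Det", "Adj", "N"): "Det Adj N"}


def parse(bracketed):
    """Parse a bracketed tree string into nested (label, children) tuples."""
    tokens = re.findall(r"\(|\)|[^\s()]+", bracketed)
    pos = 0

    def node():
        nonlocal pos
        pos += 1                              # skip "("
        label = tokens[pos]
        pos += 1
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())
            else:
                children.append(tokens[pos])  # a word (leaf)
                pos += 1
        pos += 1                              # skip ")"
        return (label, children)

    return node()


def category(np):
    """Map an NP node to one of the five rule categories, or 'other'."""
    rhs = tuple(c[0] for c in np[1] if isinstance(c, tuple))
    return RULES.get(rhs, "other")


def coordination_pairs(tree, counts=None):
    """Count (prime, target) categories for every NP -> NP CC NP expansion."""
    counts = Counter() if counts is None else counts
    label, children = tree
    kids = [c for c in children if isinstance(c, tuple)]
    if label == "NP" and [k[0] for k in kids] == ["NP", "CC", "NP"]:
        counts[(category(kids[0]), category(kids[2]))] += 1
    for kid in kids:
        coordination_pairs(kid, counts)
    return counts
```

The resulting counter of (prime, target) category pairs is the kind of table from which a regression data set could be built, with 'other' as the reference category.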

As noted in Section 2.2, a possible confound for a corpus study of parallelism is Behagel's law, which states that there is a preference to order short phrases before long ones. We deal with this confound by including an additional predictor in the multinomial regression: the indicator variable $r$ takes the value $r = 1$ if the first conjunct is shorter than the second conjunct, and $r = 0$ if this is not the case. The regression vector $X$ is then augmented to include this indicator variable, and an additional parameter is likewise added to the parameter vector $b$ to accommodate the short-before-long predictor variable. In addition to the main effect of short-before-long, we also include a predictor that represents the interaction between adaptation and short-before-long.
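One way to picture the augmented regression vector is sketched below. The layout is an assumption made for illustration (one-hot prime category, then the short-before-long indicator, then their products as interaction terms); the text does not specify how the vector is laid out, and the function name is hypothetical.

```python
# 'other' is the reference category and therefore has no column of its own.
CATEGORIES = ["N", "Det N", "Det Adj N", "PP", "SBAR"]


def design_vector(prime, prime_len, target_len):
    """Build [one-hot prime | short-before-long indicator | interaction terms]."""
    one_hot = [1.0 if prime == c else 0.0 for c in CATEGORIES]
    r = 1.0 if prime_len < target_len else 0.0   # first conjunct shorter than second
    interaction = [v * r for v in one_hot]       # prime category x indicator
    return one_hot + [r] + interaction
```

For instance, `design_vector("PP", 3, 5)` sets the PP column, the indicator, and the PP interaction column to 1 and leaves the remaining entries at 0.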

The addition of the interaction term makes the computation of the prior and adaptation probabilities slightly more complicated. The prior can now be computed according to the following formula:

$$\begin{aligned} P_{\mathrm{Prior}}(w) ={}& P(s = w \mid p = w, r = 0)\,P(p = w, r = 0) \\ &+ P(s = w \mid p \neq w, r = 0)\,P(p \neq w, r = 0) \\ &+ P(s = w \mid p = w, r = 1)\,P(p = w, r = 1) \\ &+ P(s = w \mid p \neq w, r = 1)\,P(p \neq w, r = 1) \end{aligned} \tag{6}$$

The positive adaptation probability must likewise include both cases of short-before-long and long-before-short:

$$\begin{aligned} \hat{P}_{+}(s = w_i) ={}& P(s = w \mid p = w, r = 0)\,P(p = w, r = 0) \\ &+ P(s = w \mid p = w, r = 1)\,P(p = w, r = 1) \end{aligned} \tag{7}$$

In addition, we compute two additional probabilities, the first of which is a prior for cases where the short phrase precedes the long one (which we will refer to as the short-before-long prior). This is the probability of seeing a rule in the second conjunct (regardless of whether it occurred in the first conjunct or not) in cases where short rules precede long rules:

$$\begin{aligned} P_{\mathrm{Short}}(w) ={}& P(s = w \mid p = w, r = 1)\,P(p = w, r = 1) \\ &+ P(s = w \mid p \neq w, r = 1)\,P(p \neq w, r = 1) \end{aligned} \tag{8}$$

The second probability is $P(s = w \mid p = w, r = 1)$, the probability of priming given that the prime is shorter than the target (i.e., $r = 1$). This probability, which in the regression directly corresponds to the coefficient of the interaction between the predictors adaptation and short-before-long, will be referred to as the short-before-long adaptation probability.

Based on the parameter estimates of the multinomial logistic regression, we compute a total of 14 probability values for each corpus. We report the prior probability and positive adaptation probability for all rules; as Behagel's law only confounds the adaptation of complex rules, we report the short-before-long prior and the short-before-long adaptation probabilities only for these rules (noun phrases of type PP and SBAR).

Our main hypothesis is that the adaptation probability is higher than the prior probability in a given corpus. A stronger hypothesis is that the short-before-long adaptation probability as defined above is also higher than the short-before-long prior probability, i.e., that the parallelism preference holds even in cases that match the preferred phrase order in coordinate structure (i.e., the shorter conjunct precedes the longer one). To test these hypotheses, we perform χ² tests which compare the log-likelihood difference between a model that includes the relevant predictor and a model without that predictor. We report whether the following predictors are significant: the main effect of adaptation (corresponding to $P(s = w \mid p = w)$), the main effect of short-before-long, and the interaction of adaptation and short-before-long (corresponding to $P(s = w \mid p = w, r = 1)$).
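The logic of a log-likelihood χ² test can be illustrated with a deliberately simplified stand-in for the regression comparison: a likelihood-ratio (G) test on a prime-by-target count table. It compares a model in which the target depends on the prime with one in which it does not. This is a sketch under that assumption, not the regression-based test itself; the function names are hypothetical, and the tail probability is computed with the standard recurrence for the regularized upper incomplete gamma function.

```python
import math


def chi2_sf(x, df):
    """Upper tail probability of a chi-square variable, for integer df >= 1."""
    y = x / 2.0
    if df % 2:                      # odd df: start from Q(1/2, y) = erfc(sqrt(y))
        a, q = 0.5, math.erfc(math.sqrt(y))
    else:                           # even df: start from Q(1, y) = exp(-y)
        a, q = 1.0, math.exp(-y)
    while a < df / 2.0:             # Q(a+1, y) = Q(a, y) + y^a e^-y / Gamma(a+1)
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0)) if y > 0 else 0.0
        a += 1.0
    return q


def likelihood_ratio_test(table):
    """G-test of independence on a count table given as a list of rows.

    Returns (statistic, degrees of freedom, p-value). The statistic equals
    twice the log-likelihood difference between the two models.
    """
    row = [sum(r) for r in table]
    col = [sum(c) for c in zip(*table)]
    n = sum(row)
    g = 0.0
    for i, r in enumerate(table):
        for j, obs in enumerate(r):
            if obs:
                g += 2.0 * obs * math.log(obs * n / (row[i] * col[j]))
    df = (len(row) - 1) * (len(col) - 1)
    return g, df, chi2_sf(g, df)
```

A table in which matching prime and target categories are over-represented yields a large statistic and a small p-value, whereas a table with no association yields a statistic of 0.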

2.3.2. Results

The probabilities computed using multinomial regression are shown in Fig. 1 for the Brown corpus and Fig. 2 for the Wall Street Journal corpus. The results of the significance tests are given in Table 1 for Brown and in Table 2 for WSJ. Each figure shows the prior probability (Prior) and the adaptation probability (Adapt) for all five constructions: single common noun (N), determiner and noun (Det N), determiner, adjective, and noun (Det Adj N), NP with PP modifier (PP), NP with relative clause (SBAR). In addition, the figures show the short-before-long prior probability (Short Prior) and short-before-long adaptation probability (Short Adapt) for PP and SBAR categories.

[Fig. 2. Prior, adaptation, and short-before-long prior and adaptation for coordinate structures in the WSJ corpus. Bar chart; x-axis: N, Det N, Det Adj N, PP, SBAR; y-axis: Probability (0 to 1); series: Prior, Adapt, Short Prior, Short Adapt.]

Table 1. Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for coordinate structures in the Brown corpus.

| Predictor | χ² | df | Probability |
|---|---|---|---|
| Adaptation | 4055 | 25 | p < 0.0001 |
| Short-before-long | 2726 | 5 | p < 0.0001 |
| Interaction | 201 | 25 | p < 0.0001 |

Table 2. Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for coordinate structures in the WSJ corpus.

| Predictor | χ² | df | Probability |
|---|---|---|---|
| Adaptation | 1686 | 25 | p < 0.0001 |
| Short-before-long | 1002 | 5 | p < 0.0001 |
| Interaction | 146 | 25 | p < 0.0001 |

For both corpora, we observe a strong adaptation effect: the adaptation probability is consistently higher than the prior probability across all five rules. According to the log-likelihood χ² tests (see Tables 1 and 2), there was a highly significant effect of adaptation in both corpora.

Turning to the short-before-long adaptation probabilities, we note that these also are consistently higher than the short-before-long prior probabilities in both corpora for the rules PP and SBAR. Short-before-long probabilities cannot be computed for the rules N, Det N, and Det Adj N, as they are of fixed length (one, two, and three words, respectively), due to the fact that they only contain pre-terminals on the right-hand side. The log-likelihood χ² tests also show a significant interaction of adaptation and short-before-long, consistent with the observation that there is more adaptation in the short-before-long case (see Figs. 1 and 2). There is also a main effect of short-before-long, which confirms that there is a short-before-long preference, but this is not the focus of the present study.

2.3.3. Discussion

The main conclusion we draw is that the parallelism effect in corpora mirrors the one found experimentally by Frazier et al. (2000), if we assume higher probabilities are correlated with easier human processing. This conclusion is important, as the experiments of Frazier et al. (2000) only provided evidence for parallelism in comprehension data. Corpus data, however, are production data, which means that our findings provide an important generalization of Frazier et al.'s experimental findings. Furthermore, we were able to show that the parallelism effect in corpora persists even if the preference for short phrases to precede long ones is taken into account.

2.4. Study 2: parallelism in non-coordinated structures

The results in the previous section showed that the parallelism effect found experimentally by Frazier et al. (2000) is also present in corpus data. As discussed in Section 1, there are two possible explanations for the effect: one in terms of a construction-specific copying mechanism, and one in terms of a generalized syntactic priming effect. In the first case, we predict that the parallelism effect is restricted to coordinate structures, while in the second case, we expect that parallelism (a) is independent of coordination, i.e., also applies to non-coordinate structures, and (b) occurs in the wider discourse, i.e., not only within sentences but also between sentences. The purpose of the present corpus study is to test these two predictions.

2.4.1. Method

The method used was the same as in Study 1 (see Section 2.3.1), with the exception that the prime and the target are no longer restricted to being the first and second conjunct in a coordinate structure. We investigated two levels of granularity: within-sentence parallelism occurs when the prime NP and the target NP appear within the same sentence, but stand in an arbitrary structural relationship. Coordinate NPs were excluded from this analysis, so as to make sure that any parallelism effects are not confounded by coordination parallelism as established in Study 1. For between-sentence parallelism, the prime NP occurs in the sentence immediately preceding the sentence containing the target NP.

The data for both types of parallelism analysis were gathered as follows. Any NP in the corpus was considered a target NP if it was preceded by another NP in the relevant context (for within-sentence parallelism, the context was the same sentence; for between-sentence parallelism, it was the preceding sentence). If there was more than one possible prime NP (because the target was preceded by more than one NP in the context), then the closest NP was used as the prime. In the case of ties, the dominating NP was chosen to accurately account for PP and SBAR priming (i.e., in the case of a noun with a relative clause, the entire NP will be chosen over the object of the relative clause). The NP pairs gathered this way could either constitute positive examples (both NPs instantiate the same rule) or negative examples (they do not instantiate the same rule). As in Study 1, the NPs were grouped into six categories: the five NP rules listed in Section 2.3.1, and ‘other’ for all other NP rules. A multinomial logistic regression model was then fit on this data set.

Table 3
Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for within-sentence priming in the Brown corpus.

Predictor            χ²        df   Probability
Adaptation           13,782    25   p < 0.0001
Short-before-long    23,9732   5    p < 0.0001
Interaction          49,226    25   p < 0.0001

Table 4
Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for within-sentence priming in the WSJ corpus.

Predictor            χ²        df   Probability
Adaptation           37,612    25   p < 0.0001
Short-before-long    447,652   5    p < 0.0001
Interaction          64,112    25   p < 0.0001
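The pairing procedure described above can be sketched in Python. This is only an illustration under simplifying assumptions: each sentence is given as a list of NP rule labels in linear order (the labels are taken from the axes of Figs. 3 to 6), coordinate NPs are assumed to have been removed already, and the tie-breaking rule for dominating NPs is not modeled. The function names are hypothetical and not part of the original study.

```python
# Category labels as they appear on the figure axes; everything else is 'other'.
NP_RULES = {"N", "Det N", "Det Adj N", "PP", "SBAR"}


def categorize(rule):
    """Map an NP rule label to one of the six categories."""
    return rule if rule in NP_RULES else "other"


def within_pairs(sentences):
    """(prime, target) pairs where the prime is the closest preceding NP
    in the same sentence."""
    pairs = []
    for sent in sentences:
        for prime, target in zip(sent, sent[1:]):
            pairs.append((categorize(prime), categorize(target)))
    return pairs


def between_pairs(sentences):
    """(prime, target) pairs where the prime is the closest NP in the
    immediately preceding sentence (here: its last NP)."""
    pairs = []
    for prev_sent, sent in zip(sentences, sentences[1:]):
        if not prev_sent:
            continue  # no NP available to act as a prime
        prime = categorize(prev_sent[-1])
        for target in sent:
            pairs.append((prime, categorize(target)))
    return pairs


def positive_rate(pairs):
    """Share of positive examples, i.e. pairs instantiating the same rule."""
    if not pairs:
        return 0.0
    return sum(prime == target for prime, target in pairs) / len(pairs)
```

The resulting positive and negative examples would then be the input to the regression analysis; the regression itself is not sketched here.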

2.4.2. Results

The results for the within-sentence analysis for non-coordinate structures are graphed in Figs. 3 and 4 for the Brown and WSJ corpus, respectively. The results of the statistical tests are given in Tables 3 and 4. We find that there is a parallelism effect in both corpora across NP types. The adaptation probabilities are higher than the prior probabilities, except for two cases: SBAR rules in Brown, and PP rules in WSJ. However, even in these cases, the short-before-long adaptation is higher than the short-before-long prior, which indicates that there is a parallelism effect for structures in which the short phrase precedes the long one. Furthermore, the log-likelihood χ² tests show significant effects of adaptation for both corpora, as well as significant main effects of short-before-long, and a significant interaction of adaptation and short-before-long.

A parallelism effect was also found in the between-sentence analysis, as shown by Figs. 5 and 6, with the corresponding statistical tests summarized in Tables 5 and 6. In both corpora and for all structures, we found that the adaptation probability was higher than the prior probability. In addition, the short-before-long adaptation was higher than the short-before-long prior, confirming the finding that the parallelism effect persists when the short-before-long preference for phrases is taken into account. (Recall that only the SBAR and PP noun phrases can differ in length, so short-before-long probabilities are only available for these rule types.)

Fig. 3. Prior, adaptation, and short-before-long prior and adaptation for within-sentence priming in the Brown corpus. (Bar chart; x-axis: NP rule types N, Det N, Det Adj N, PP, SBAR; y-axis: probability; bars: Prior, Adapt, Short Prior, Short Adapt.)

Fig. 4. Prior, adaptation, and short-before-long prior and adaptation for within-sentence priming in the WSJ corpus. (Bar chart; x-axis: NP rule types N, Det N, Det Adj N, PP, SBAR; y-axis: probability; bars: Prior, Adapt, Short Prior, Short Adapt.)

2.4.3. Discussion

This experiment demonstrated that the parallelism effect is not restricted to coordinate structures. Rather, we found that it holds across the board: for NPs that occur in the same sentence (and are not part of a coordinate structure) as well as for NPs that occur in adjacent sentences. Just as for coordination, we found that this effect persists if we only consider pairs of NPs that respect the short-before-long preference. However, this study also indicated that the parallelism effect is weaker in within-sentence and between-sentence configurations than in coordination: the differences between the prior probabilities and the adaptation probabilities are markedly smaller than those uncovered for parallelism in coordinate structures. (Note that Figs. 1 and 2 range from 0 to 1 on the y-axis, while Figs. 3–6 range from 0 to 0.25.)

Fig. 5. Prior, adaptation, and short-before-long prior and adaptation for between-sentence priming in the Brown corpus. (Bar chart; x-axis: NP rule types N, Det N, Det Adj N, PP, SBAR; y-axis: probability; bars: Prior, Adapt, Short Prior, Short Adapt.)

Fig. 6. Prior, adaptation, and short-before-long prior and adaptation for between-sentence priming in the WSJ corpus. (Bar chart; x-axis: NP rule types N, Det N, Det Adj N, PP, SBAR; y-axis: probability; bars: Prior, Adapt, Short Prior, Short Adapt.)

Table 5
Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for between-sentence priming in the Brown corpus.

Predictor            χ²        df   Probability
Adaptation           21,952    25   p < 0.0001
Short-before-long    699,252   5    p < 0.0001
Interaction          55,943    25   p < 0.0001

Table 6
Summary of the log-likelihood χ² statistics for the predictors in the multinomial regression for between-sentence priming in the WSJ corpus.

Predictor            χ²        df   Probability
Adaptation           49,657    25   p < 0.0001
Short-before-long    918,087   5    p < 0.0001
Interaction          86,643    25   p < 0.0001


The fact that parallelism is a pervasive phenomenon, rather than being limited to coordinate structures, is compatible with the claim that it is an instance of a general syntactic priming mechanism, which has been an established feature of accounts of the human sentence production system for some time (e.g., Bock, 1986). This runs counter to claims by Frazier et al. (2000), who argue that parallelism only occurs in coordinate structures. (It is important to bear in mind, however, that Frazier et al. (2000) only make explicit claims about comprehension, not about production.)

The question of the relationship between comprehension and production data is an interesting one. One way of looking at a comprehension-based priming mechanism may be in terms of a more general sensitivity of comprehenders towards distributional information. According to such a hypothesis, processing should be easier if the current input is more predictable given previous experience (Reali & Christiansen, 2007). The corpus studies described here and in previous work have shown that similar structures do tend to appear near to each other more often than would be expected by chance. If comprehenders are sensitive to this fact, then this could be the basis for the priming effect. This is an attractive hypothesis, as it requires no additional mechanism other than prediction, and provides a very general explanation that is potentially able to unify parallelism and priming effects with experience-based sentence processing in general, as advocated, for instance, by constraint-based lexicalist models (MacDonald, Pearlmutter, & Seidenberg, 1994) or by the tuning hypothesis (Mitchell, Cuetos, Corley, & Brysbaert, 1996). The probabilistic model we will propose in the remainder of this paper is one possible instantiation of such an experience-based approach.

3. Modeling studies

3.1. Priming models

In Section 2, we provided corpus evidence for syntactic parallelism at varying levels of granularity. Focusing on NP rules, we found the parallelism effect in coordinate structures, but also in non-coordinate structures and between adjacent sentences. These corpus results form an important basis for the modeling studies to be presented in the rest of this paper. Our modeling approach uses a probabilistic parser, which obtains probabilities from corpus data. Therefore, we first needed to ascertain that the corpus data includes evidence for parallelism. If there was no parallelism in the training data of our model, it would be unlikely that the model would be able to account for the parallelism effect.

Having verified that parallelism is present in the corpus data, in this section we will propose a set of models designed to capture the priming hypothesis and the copy hypothesis of parallelism, respectively. To keep the models as simple as possible, each formulation is based on an unlexicalized probabilistic context-free grammar (PCFG), which also serves as a baseline for evaluating more sophisticated models. In this section, we describe the baseline, the copy model, and the priming model in turn. We will also discuss the design of the probabilistic parser used to evaluate the models.

3.1.1. Baseline model

PCFGs serve as a suitable baseline for our modeling efforts as they have a number of compelling and well-understood properties. For instance, PCFGs make a probabilistic independence assumption which closely corresponds to the context-free assumption: each rule used in a parse is conditionally independent of other rules, given its parent. This independence assumption makes it relatively simple to estimate the probability of context-free rules. The probability of a rule $N \to \zeta$ is estimated as

$$\hat{P}(\zeta \mid N) = \frac{c(N \to \zeta)}{c(N)} \tag{9}$$

where the function $c(\cdot)$ counts the number of times a rule or the left-hand side of a rule occurs in a training corpus.
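The relative-frequency estimate in Eq. (9) can be illustrated with a few lines of Python. This is a minimal sketch, assuming the treebank has already been flattened into a sequence of rule occurrences; the function name and the `(lhs, rhs)` tuple representation are illustrative choices, not something specified in the paper.

```python
from collections import Counter


def estimate_pcfg(rule_occurrences):
    """Relative-frequency estimate of rule probabilities, as in Eq. (9).

    rule_occurrences: iterable of (lhs, rhs) tuples, one per rule use in
    the training corpus, e.g. ("NP", ("Det", "N")).
    Returns a dict mapping (lhs, rhs) to P(rhs | lhs).
    """
    rule_counts = Counter(rule_occurrences)      # c(N -> zeta)
    lhs_counts = Counter()                       # c(N)
    for (lhs, _rhs), n in rule_counts.items():
        lhs_counts[lhs] += n
    return {
        (lhs, rhs): n / lhs_counts[lhs]
        for (lhs, rhs), n in rule_counts.items()
    }
```

By construction, the probabilities of all rules sharing a left-hand side sum to one.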

3.1.2. Copy model

The first model we introduce is a probabilistic variant of Frazier and Clifton’s (2001) copying mechanism: it models parallelism only in coordination. This is achieved by assuming that the default operation upon observing a coordinator (assumed to be anything marked up with a CC tag in the corpus, e.g., and) is to copy the full subtree of the preceding coordinate sister. The copying operation is depicted in Fig. 7: upon reading the and, the parser attempts to copy the subtree for a novel to the second NP a book.

Obviously, copying has an impact on how the parser works (see Section 3.1.5 for details). However, in a probabilistic setting, our primary interest is to model the copy operation by altering probabilities compared to a standard PCFG. Intuitively, the characteristic we desire is a higher probability when the conjuncts are identical. More formally, if $P_{\mathrm{PCFG}}(t)$ is the probability of a tree $t$ according to a standard PCFG and $P_{\mathrm{Copy}}(t)$ is the probability of copying the tree $t$, then if the two subtrees $t_1$ and $t_2$ are parallel, we can say that $P_{\mathrm{Copy}}(t_2) > P_{\mathrm{PCFG}}(t_2)$. For simplicity’s sake, we assume that $P_{\mathrm{Copy}}(t)$ can be approximated by a single parameter $P_{\mathrm{Copy}}$ for all trees $t$, which can then be estimated from the training corpus.

A naive probability assignment would decide between copying with probability $P_{\mathrm{Copy}}(t_2)$ or analyzing the subtree rule-by-rule with the probability $(1 - P_{\mathrm{Copy}}(t_2)) \cdot P_{\mathrm{PCFG}}(t_2)$. However, the PCFG distribution assigns some probability to all trees, including a tree which is equivalent to $t_2$ ‘by chance’. The probability that $t_1$ and $t_2$ are equal ‘by chance’ is $P_{\mathrm{PCFG}}(t_1)$. We must therefore properly account for the probability of these chance derivations. This is done by formalizing the notion that identical subtrees could be due either to a copying operation or to chance, giving the following probability for identical trees:

$$P_{\mathrm{Copy}}(t_2) + P_{\mathrm{PCFG}}(t_1) \tag{10}$$

Similarly, the probability of a non-identical tree is

$$\frac{1 - P_{\mathrm{PCFG}}(t_1) - P_{\mathrm{Copy}}}{1 - P_{\mathrm{PCFG}}(t_1)} \cdot P_{\mathrm{PCFG}}(t_2) \tag{11}$$

This accounts for both a copy mismatch and a PCFG derivation mismatch, and ensures that the probabilities still sum to one. These definitions for the probabilities of parallel and non-parallel coordinate sisters therefore form the basis of the Copy model.
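As a sanity check on Eqs. (10) and (11), the following Python sketch computes the probability of a second conjunct given the first and can be used to confirm that the values sum to one over all possible second conjuncts. It assumes a single copy parameter for all trees and a finite toy distribution over trees; the function name and the dictionary representation are hypothetical.

```python
def copy_model_prob(t1, t2, p_copy, p_pcfg):
    """Probability of second conjunct t2 given first conjunct t1.

    p_copy: the single copy parameter P_Copy.
    p_pcfg: dict mapping each tree to its PCFG probability
            (assumed here to be a complete distribution summing to one).
    """
    q1 = p_pcfg[t1]
    if not 0.0 <= p_copy <= 1.0 - q1:
        raise ValueError("need 0 <= P_Copy <= 1 - P_PCFG(t1)")
    if t2 == t1:
        # Eq. (10): identical either through copying or 'by chance'.
        return p_copy + q1
    # Eq. (11): neither a copy nor a chance match, renormalized.
    return (1.0 - q1 - p_copy) / (1.0 - q1) * p_pcfg[t2]
```

With a toy distribution such as `{"Det N": 0.5, "Det Adj N": 0.3, "N": 0.2}` and `p_copy = 0.2`, the identical case receives more probability than under the PCFG alone, while the remaining mass is shared among the non-identical trees in proportion to their PCFG probabilities.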

Fig. 7. The Copy Model copies entire subtrees upon observing a coordinator. (Tree diagram for the example Terry wrote a novel and a book: upon reading the coordinator (CC) and, the NP subtree of a novel is copied to the second NP a book.)

3.1.2.1. Estimation. We saw in Section 2.3 that the parallelism effect can be observed in corpus data. We make use of this fact to estimate the non-PCFG parameter of the Copy model, $\hat{P}_{\mathrm{Copy}}$ (the PCFG parameters are estimated in the same way as for a standard PCFG, as explained above). While we cannot observe copying directly because of the ‘chance’ derivations, we can use Eqs. (10) and (11) above to derive a likelihood equation, which can then be maximized using a numerical method. A common approach to numerical optimization is the gradient ascent algorithm (Press, Teukolsky, Vetterling, & Flannery, 1988), which requires a gradient of the likelihood (i.e., the partial derivative of the likelihood with respect to $P_{\mathrm{Copy}}$). If we let $c_{\mathrm{ident}}$ be the number of trees in the corpus which are identical, and if $j$ counts through the non-identical trees (and $t_{j1}$ is the first conjunct of the $j$th non-identical tree in the corpus), then the gradient of the log-likelihood equation (with respect to $P_{\mathrm{Copy}}$) is

$$\nabla = \frac{c_{\mathrm{ident}}}{P_{\mathrm{Copy}}} - \sum_j \frac{1 - P_{\mathrm{PCFG}}(t_{j1})}{1 - P_{\mathrm{Copy}} - P_{\mathrm{PCFG}}(t_{j1})} \tag{12}$$

This equation is then fed to the gradient ascent algorithm, producing an estimate $\hat{P}_{\mathrm{Copy}}$ which maximizes the likelihood of the training corpus. This approach ensures that the copy parameter $\hat{P}_{\mathrm{Copy}}$ is set to the optimal value, i.e., the value that results in the best fit with the training data, and thus maximizes our chance of correctly accounting for the parallelism effect.
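The estimation step can be sketched as a simple gradient ascent loop in Python. The sketch uses the gradient exactly as printed in Eq. (12); the step-size rule, the starting point, the stopping criterion and all function names are assumptions made for illustration, and the paper does not specify which variant of gradient ascent was used.

```python
def copy_gradient(p_copy, c_ident, q_nonident):
    """Gradient of Eq. (12).

    c_ident:    number of identical conjunct pairs.
    q_nonident: list of P_PCFG(t_j1), one per non-identical pair.
    """
    return c_ident / p_copy - sum(
        (1.0 - q) / (1.0 - p_copy - q) for q in q_nonident
    )


def estimate_p_copy(c_ident, q_nonident, rate=0.1, tol=1e-9, max_iter=10000):
    """Fixed-rate gradient ascent on P_Copy (hypothetical settings)."""
    # P_Copy must stay below 1 - P_PCFG(t_j1) for every non-identical pair.
    upper = 1.0 - max(q_nonident, default=0.0)
    n = c_ident + len(q_nonident)
    p = upper / 2.0                      # start in the middle of the domain
    for _ in range(max_iter):
        step = rate * copy_gradient(p, c_ident, q_nonident) / n
        # Halve the step until the update stays strictly inside (0, upper).
        while not 0.0 < p + step < upper:
            step /= 2.0
        p += step
        if abs(step) < tol:
            break
    return p
```

For example, with 30 identical pairs and 70 non-identical pairs whose first conjuncts each have PCFG probability 0.01, the gradient vanishes at 29.7 / 99.3, roughly 0.30, and the loop converges to that value.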

3.1.3. Between model

While the Copy model limits itself to parallelism in coordination, the next two models simulate structural priming in general. Both are similar in design, and are based on a simple insight: we can condition a PCFG rule expansion on whether the rule occurred in some previous context. If Prime is a binary-valued random variable denoting whether a rule occurred in the context, then we can define an adaptation probability for PCFG rules as

$$\hat{P}(\zeta \mid N, \mathit{Prime}) = \frac{c(N \to \zeta, \mathit{Prime})}{c(N, \mathit{Prime})} \tag{13}$$

This is an instantiation of Church’s (2000) adaptation probability, used in a similar fashion as in our corpus studies in Section 2. Our aim here is not to show that certain factors are significant predictors; rather, we want to estimate the parameters of a model that simulates the parallelism effect by incrementally predicting sentence probabilities. Therefore, unlike in the corpus studies, we do not need to carry out hypothesis testing and we can simply use the empirical distribution to estimate our parameters, rather than relying on multinomial logistic regression.

For our first model, the context is the previous sentence. Thus, the model can be said to capture the degree to which rule use is primed between sentences. We henceforth refer to this as the Between model. Each rule acts once as a target (i.e., the event of interest) and once as a prime.
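One possible reading of Eq. (13) for the Between model is sketched below in Python. The interpretation is an assumption: for each rule, the denominator counts the expansions of its left-hand side in target sentences, split by whether that rule occurred in the preceding sentence, and the numerator counts how many of those expansions actually used the rule. The function name and data layout are hypothetical.

```python
from collections import Counter, defaultdict


def estimate_adaptation(sentences):
    """Empirical adaptation probabilities in the spirit of Eq. (13).

    sentences: list of sentences, each a list of (lhs, rhs) rule uses.
    Returns a dict mapping ((lhs, rhs), primed) to the estimated
    probability of expanding lhs as rhs, given the prime status.
    """
    # Group the rule inventory by left-hand side.
    by_lhs = defaultdict(set)
    for sent in sentences:
        for lhs, rhs in sent:
            by_lhs[lhs].add((lhs, rhs))

    joint = Counter()   # c(N -> zeta, Prime)
    margin = Counter()  # c(N, Prime), tracked separately for each rule
    for prev, cur in zip(sentences, sentences[1:]):
        context = set(prev)              # rules used in the previous sentence
        for used in cur:                 # each expansion in the target sentence
            for rule in by_lhs[used[0]]: # every rule competing for it
                primed = rule in context
                margin[rule, primed] += 1
                if rule == used:
                    joint[rule, primed] += 1
    return {key: joint[key] / margin[key] for key in margin}
```

Comparing the entry for `(rule, True)` with the entry for `(rule, False)` then indicates how much more likely a rule is when it has just been used.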

3.1.4. Within model

Just as the Between model conditions on rules from the previous sentence, the Within model conditions on rules from earlier in the current sentence. Each rule acts once as a target, and possibly several times as a prime (for each subsequent rule in the sentence). A rule is considered ‘used’ once the parser passes the word on the leftmost corner of the rule. Because the Within model is finer grained than the Between model, it should be able to capture the parallelism effect in coordination. In other words, this model could explain parallelism in coordination as an instance of a more general priming effect.

3.1.5. Parser

Reading time experiments, including the parallelism studies of Frazier et al. (2000), measure the time taken to read sentences on a word-by-word basis. Slower reading times are assumed to indicate processing difficulty, and faster reading times (as is the case with parallel structures) are assumed to indicate processing ease. The location of the effect (which word or words it occurs on) can be used to draw conclusions about the nature of the difficulty.

As our main aim is to build a psycholinguistic model of structural repetition, the most important feature of the parsing model is to build structures incrementally, i.e., on a word-by-word basis. In order to achieve incrementality, we need a parser which has the prefix property, i.e., it is able to assign probabilities to arbitrary left-most substrings of the input string.

We use an Earley-style probabilistic parser, which has these properties and outputs the most probable parses (Stolcke, 1995). Furthermore, we make a number of modifications to the grammar to speed up parsing. The treebank trees contain annotations for grammatical functions (i.e., subject, object, different types of modifier) and co-indexed empty nodes denoting long-distance dependencies, both of which we removed.

The Earley algorithm requires a modification to support the Copy model. We implemented a copying mechanism that activates every time the parser comes across a CC tag in the input string, indicating a coordinate structure, as shown in Fig. 7. Before copying, though, the parser looks ahead to check if the part-of-speech tags after the CC are equivalent to those inside the first conjunct. In the example in Fig. 7, the copy operation succeeds because the tags of the NPs a book and a novel are both Det N.
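The lookahead check described above can be illustrated with a short Python sketch. It assumes that the input is a flat list of part-of-speech tags and that the length of the first conjunct is already known when the coordinator is reached; in the actual parser this information would come from the chart. The function name and the use of Penn Treebank tag names (DT, JJ, NN) in place of Det, Adj, N are illustrative assumptions.

```python
def can_copy(tags, cc_index, first_conjunct_len):
    """Return True if the tags following the coordinator match the tags
    of the first conjunct, so that the copy operation may apply.

    tags:               list of part-of-speech tags for the sentence.
    cc_index:           position of the coordinator (tag "CC").
    first_conjunct_len: number of tags in the first conjunct, which is
                        assumed to end immediately before the coordinator.
    """
    start = cc_index - first_conjunct_len
    if start < 0 or tags[cc_index] != "CC":
        return False
    first = tags[start:cc_index]
    lookahead = tags[cc_index + 1:cc_index + 1 + first_conjunct_len]
    return first == lookahead
```

For Terry wrote a novel and a book, tagged `NNP VBD DT NN CC DT NN`, the check succeeds; for Terry wrote a novel and a short poem it fails, because the tags after the coordinator begin with `DT JJ`.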

Mathematically, the copying operation is guaranteed to return the most probable parse because an incremental parser is guaranteed to know the most likely parse of the first conjunct by the time it reaches the coordinator.

The Between and Within models also require a change to the parser. In particular, they require a cache or history of recently used rules. This raises a dilemma: whenever a parsing error occurs, the accuracy of the contextual history is compromised. However, the experimental items used were simple enough that no parsing errors occurred. Thus, it was always possible to fill the cache using rules from the best incremental parse so far.3

3 In unrestricted text, where parsing errors are more common, alternative strategies are required. One possibility is to use an accurate lexicalized parser. A second possibility arises if an ‘oracle’ can suggest the correct parse in advance: then the cache may be filled with the correct rules suggested by the oracle.

3.2. Study 3: modeling parallelism experiments

The purpose of this study is to evaluate the models described in the previous section by using them to simulate the results of a reading time experiment on syntactic parallelism. We will test the hypothesis that our models can correctly predict the pattern of results found in the experimental study. We will restrict ourselves to evaluating the qualitative pattern of results, rather than modeling the reading times directly.

Frazier et al. (2000) reported a series of experiments that examined the parallelism preference in reading. In their Experiment 3, they monitored subjects’ eye movements while they read sentences like (2):

(2) a. Terry wrote a long novel and a short poem during her sabbatical.

b. Terry wrote a novel and a short poem during her sabbatical.

They found that total reading times were faster on the phrase a short poem in (2-a), where the coordinated noun phrases are parallel in structure, than in (2-b), where they are not.

The probabilistic models presented here do not directly make predictions about total reading times as reported by Frazier et al. (2000). Therefore, a linking hypothesis is required to link the predictions of the model (e.g., in the form of probabilities) to experimentally observed behavior (e.g., in the form of processing difficulty). The literature on probabilistic modeling contains a number of different linking hypotheses. For example, one possibility is to use an incremental parser with beam search (e.g., an n-best approach). Processing difficulty is predicted at points in the input string where the current best parse is replaced by an alternative derivation, and garden-pathing occurs when the ultimately correct parse has dropped out of the beam (Jurafsky, 1996; Crocker & Brants, 2000). However, this approach is only suited to ambiguous structures.

An alternative is to keep track of all derivations, and predict difficulty if there is a change in the probability distributions computed by the parser. One way of conceptualizing this is Hale’s (2001) notion of surprisal. Intuitively, surprisal measures the change in probability mass as structural predictions are disconfirmed when a new word is processed. If the new word disconfirms predictions with a large probability mass (high surprisal), then high processing complexity is predicted, corresponding to increased processing difficulty. If the new word only disconfirms predictions with a low probability mass (low surprisal), then we expect low processing complexity and reduced processing difficulty. Technically, the surprisal $S_k$ at input word $w_k$ corresponds to the difference between the logarithms of the prefix probabilities of words $w_{k-1}$ and $w_k$ (for a detailed derivation, see Levy, 2008):

$$S_k = \log P(w_1 \cdots w_k) - \log P(w_1 \cdots w_{k-1}) \tag{14}$$

Table 7
Mean log surprisal values for items based on Frazier et al. (2000).

Model      Para: (3a)   Non-para: (3b)   Non-para: (3c)   Para: (3d)   (3a) − (3b)   (3c) − (3d)
Baseline   −0.34        −0.48            −0.62            −0.74        0.14          0.12
Within     −0.30        −0.51            −0.53            −0.30        0.20          −0.20
Copy       −0.52        −0.83            −0.27            −0.31        0.32          0.04

The standard definition of surprisal given in Eq. (14) is useful for investigating word-by-word reading time effects. In the present parallelism studies, however, we are interested in capturing reading time differences in regions containing several words. Therefore, we introduce a more general notion of surprisal computed over an $m$ word region spanning from $w_{k+1}$ to $w_{k+m+1}$:

$$S_{k \ldots k+m} = \log P(w_1 \cdots w_{k+m}) - \log P(w_1 \cdots w_{k-1}) \tag{15}$$

Subsequent uses of ‘surprisal’ will refer to this region-based surprisal quantity, and the term ‘word surprisal’ will be reserved for the traditional word-by-word measure. Both word surprisal and region surprisal have the useful property that they can be easily computed from the prefix probabilities returned by our parser.
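To make the relation between the two measures concrete, the following Python sketch computes word and region surprisal from a list of log prefix probabilities, following Eqs. (14) and (15) as printed. The list layout (index `i` holds the log probability of the first `i` words, with index 0 set to 0 for the empty prefix) and the function names are assumptions for illustration.

```python
import math


def word_surprisal(prefix_logprob, k):
    """Eq. (14): log P(w_1..w_k) - log P(w_1..w_{k-1})."""
    return prefix_logprob[k] - prefix_logprob[k - 1]


def region_surprisal(prefix_logprob, k, m):
    """Eq. (15): log P(w_1..w_{k+m}) - log P(w_1..w_{k-1})."""
    return prefix_logprob[k + m] - prefix_logprob[k - 1]


# Hypothetical prefix probabilities for a four-word input.
prefix_probs = [1.0, 0.5, 0.2, 0.1, 0.05]
prefix_logprob = [math.log(p) for p in prefix_probs]

# The region value telescopes into the sum of its word-level values.
region = region_surprisal(prefix_logprob, 2, 2)
summed = sum(word_surprisal(prefix_logprob, i) for i in range(2, 5))
```

With the sign convention of Eq. (14), the values are zero or negative because prefix probabilities cannot increase as words are added, which matches the negative entries in Table 7.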

In addition to surprisal, we also compute a simpler metric: we calculate the probability of the best parse of the whole sentence (Stolcke, 1995). Low probabilities are assumed to correspond to high processing difficulty, and high probabilities predict low processing difficulty. As we use log-transformed sentence probabilities, this metric hypothesizes a log-linear relationship between model probability and processing difficulty.

3.2.1. Method

The item set we used for evaluation was adapted from that of Frazier et al. The original two relevant conditions of their experiment (see (2-a) and (2-b)) differ in terms of length. This results in a confound in the PCFG-based framework, because longer sentences tend to result in lower probabilities (as the parses tend to involve more rules). To control for such length differences, we adapted the materials by adding two extra conditions in which the relation between syntactic parallelism and length was reversed. This resulted in the following four conditions:

(3) a. Det Adj N and Det Adj N (parallel)
Terry wrote a long novel and a short poem during her sabbatical.

b. Det N and Det Adj N (non-parallel)
Terry wrote a novel and a short poem during her sabbatical.

c. Det Adj N and Det N (non-parallel)
Terry wrote a long novel and a poem during her sabbatical.

d. Det N and Det N (parallel)
Terry wrote a novel and a poem during her sabbatical.

In order to account for Frazier et al.’s parallelism effect, a probabilistic model should predict a greater difference in probability between (3a) and (3b) than between (3c) and (3d) (i.e., for the reading times, (3a) − (3b) > (3c) − (3d) holds). This effect will not be confounded with length, because the relation between length and parallelism is reversed between (3a) − (3b) and (3c) − (3d). In order to obtain a more extensive evaluation set for our models, we added eight items to the original Frazier et al. materials, resulting in a new set of 24 items similar to (3).4

4 To ensure the new materials and conditions did not alter the parallelism effect, we carried out a preliminary eye-tracking study based on an identical design to the modeling study (see (3)), with 36 participants. The interaction predicted by parallelism ((3a) − (3b) > (3c) − (3d)) was obtained in probability of regression from the region immediately following the second conjunct (during her sabbatical) and also in second-pass reading times on a region consisting of and followed by the second conjunct (e.g., and a short poem).

The models we evaluated were the Baseline, the Within and the Copy models, trained as described in Section 3.1. We tested these three PCFG-based models on all 24 experimental sentences across four conditions. Each sentence was input as a sequence of correct part-of-speech tags, and the surprisal of the sentence as well as the probability of the best parse were computed.

Note that we do not attempt to predict reading time data directly. Rather, our model predictions are evaluated against reading times averaged over experimental conditions. This means that we predict qualitative patterns in the data, rather than obtaining a quantitative measure of model fit, such as R². Qualitative evaluation is standard practice in the psycholinguistic modeling literature. It is also important to note that reading times are contaminated by non-syntactic factors such as word length and word frequency (Rayner, 1998) that parsing models are not designed to account for.

3.2.2. Results and discussion

Table 7 shows the mean log surprisal values estimated by the models for the four experimental conditions, along with the differences between parallel and non-parallel conditions. Table 8 presents the mean log probabilities of the best parse in the same way.

The results indicate that both the Within and the Copy model predict a parallelism advantage. We used a Wilcoxon signed rank test to establish whether the differences in surprisal and probability values were statistically different for the parallel and the non-parallel conditions, i.e., we compared the values for (3a) − (3b) with those for (3c) − (3d).5 In the surprisal case, significant results were obtained for both the Within model (N = 24, Z = 2.55, p < .01, one-tailed) and the Copy model (N = 24, Z = 3.87, p < .001, one-tailed). Using the probability of the best parse, a statistically significant difference was again found for both the Within (N = 24, Z = 1.67, p < .05, one-tailed) and the Copy model (N = 24, Z = 4.27, p < .001, one-tailed).
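For readers unfamiliar with the test, the following Python sketch shows one textbook form of the Wilcoxon signed rank test with a normal approximation. It is not the procedure used to obtain the Z values reported above, whose exact variant is not specified here: the sketch applies no continuity or tie correction, and the function name is hypothetical. The two inputs would be the per-item values of (3a) − (3b) and (3c) − (3d).

```python
import math


def wilcoxon_signed_rank(x, y):
    """Paired Wilcoxon signed rank test (normal approximation).

    Returns (W+, z, one-tailed p), where W+ is the sum of the ranks of
    the positive differences x_i - y_i. Zero differences are dropped and
    tied absolute differences receive average ranks.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p_one_tailed = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z)
    return w_plus, z, p_one_tailed
```

A large positive z indicates that the first set of values tends to exceed the second, which is the direction predicted for a parallelism advantage.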

The qualitative pattern of results is therefore the same for both models: the Within and the Copy model both predict that parallel structures are easier to process than non-parallel ones. However, there are quantitative differences between the surprisal and the best-parse implementations of the models. In the surprisal case, the parallelism effect for the Within model is larger than the parallelism effect for the Copy model. This difference is significant (N = 24, Z = 2.93, p < .01, one-tailed). In the case of the best-parse implementation, we observe the opposite pattern: the Copy model predicts a significantly larger parallelism advantage than the Within model (N = 24, Z = 4.27, p < .001, one-tailed).

5 The Wilcoxon signed rank test can be thought of as a non-parametric version of the paired t-test for repeated measurements on a single sample. The test was used because it does not require strong assumptions about the distribution of the log probabilities.

Table 8
Mean log probability values for the best parse for items based on Frazier et al. (2000).

Model      Para: (3a)   Non-para: (3b)   Non-para: (3c)   Para: (3d)   (3a) − (3b)   (3c) − (3d)
Baseline   −33.47       −32.37           −32.37           −31.27       −1.10         −1.10
Within     −33.28       −31.67           −31.70           −29.92       −1.61         −1.78
Copy       −16.18       −27.22           −26.91           −15.87       11.04         −11.04

The Baseline model was not evaluated statistically, because by definition it predicts a constant value for (3a) − (3b) and (3c) − (3d) across all items (there are small differences due to floating point underflow). This is simply a consequence of the PCFG independence assumption, coupled with the fact that the four conditions of each experimental item differ only in the occurrences of two NP rules.

Overall, the results show that the approach taken here can be successfully applied to model experimental data. Moreover, the effect is robust to parameter changes: we found a significant parallelism effect for both the Within and the Copy model, in both the surprisal and the best-parse implementation. It is perhaps not surprising that the Copy model shows a parallelism advantage for the Frazier et al. (2000) items, as this model was explicitly designed to prefer structurally parallel conjuncts. The more interesting result is the parallelism effect we found for the Within model, which shows that such an effect can arise from a more general probabilistic priming mechanism. We also found that the surprisal implementation of the Within model predicts a larger parallelism effect than the best-parse implementation (relative to the Copy model, see Tables 7 and 8). This indicates that using all available parses (as in the surprisal case) amplifies the effect of syntactic repetition, perhaps because it takes into account repetition effects in all possible syntactic structures that the parser considers, rather than only in the most probable structure (as in the best-parse implementation).

In spite of this difference in effect size, we can nevertheless conclude that best-parse probabilities are a good approximation of surprisal values for the sentences under consideration, while being much simpler to compute. We therefore focus on best-parse probabilities for the remainder of the paper.

4. Modeling priming and decay

4.1. A model of priming inspired by ACT-R

The Within and Between priming models introduced in Sections 2 and 3 make the assumption that priming is based upon a binary decision: a target item is either primed or not. Moreover, the model ‘forgets’ what was primed once it finishes processing the target region, i.e., no learning occurs. As we saw in Section 3.2, these modeling assumptions were sufficient to model a set of standard experimental data. However, Section 2 showed that the parallelism effect applies to a range of different levels of granularity. Using a single binary decision makes it impossible to build a model which can simultaneously account for priming at multiple levels of granularity. In this section, we introduce the Decay model, which is able to account for priming without making an arbitrary choice about the size of the priming region.

The structure of this model is inspired by two observations. First, we found in Section 2 that priming effects were smaller as the size of the priming region increased (from coordinate structures to arbitrary structures within sentences, to arbitrary structures between sentences). Second, a number of authors (e.g., Gries, 2005; Szmrecsanyi, 2005; Reitter et al., 2006) found in corpus studies that the strength of priming decays over time (though not Jaeger, 2006b, or Jaeger & Snider, 2007, who controlled for speaker differences). Intuitively, these two effects are related: by selecting a larger priming region, we effectively increase the time between the onset of the prime and the onset of the target. Therefore, by accounting for decay effects, it may be possible to remove the arbitrary choice of the size of the priming region from our model. Given that we are assuming that priming is due to a general cognitive mechanism, it is a logical next step to model decay effects using a general, integrated cognitive architecture. We will therefore build on concepts from the ACT-R framework, which has been successfully used to account for a wide range of experimental data on human cognition (Anderson, 1983; Anderson et al., 2004).

The ACT-R system has two main elements: a planner and a model of memory which places a cost on accesses to declarative memory (where declarative facts, also called ‘chunks’, are stored) and on procedural memory (which contains information on how to carry out planning actions). The Decay model uses the ACT-R memory system to store grammar rules but we eschew the planner, instead continuing to use the incremental Earley parser of previous experiments.

Following earlier work on parsing with ACT-R (Lewis & Vasishth, 2005), we assume that grammar rules are stored in procedural memory. Lewis and Vasishth (2005) fully commit to the ACT-R architecture, implementing their incremental parser in the ACT-R planning system and therefore storing partially constructed parses in declarative memory. In contrast, we assume that the Decay model uses the same underlying chart parser presented in Section 3.1.5 rather than a planning system. This means we make no particular claims about the memory cost of accessing partially constructed parses.6

Fig. 8. The log probability of the rule NP → Det Adj N while reading a sentence, according to the Decay model. The probability of a rule changes gradually while reading: a small spike upon successfully detecting a use, a slow decay otherwise. (The x-axis shows the words of the sentence Hilda noticed a strange man and a tall woman when ...)

A goal of the full ACT-R architecture is to model the time course of cognitive behavior. Our restricted ACT-R-inspired simulation, though, is limited to modeling the probability of certain memory accesses, and, via our linking hypothesis, we only make qualitative predictions about processing difficulty. This restriction is motivated by the desire to maintain the underlying architecture and approach developed for the Within and Copy models, which was successfully evaluated in the previous section.

In ACT-R, the probability of memory access depends upon recency information both for declarative chunks and, following the work of Lovett (1998), for production rules. The probability of a production rule (or more specifically, the probability of successfully applying a production rule) depends on the number of past successes and failures of that rule. Lovett (1998) argues that the times of these successes and failures should be taken into account. For our ACT-R-inspired Decay model, a ‘success’ will be a successful application of a rule N → ζ, and a ‘failure’ will be an application of any other rule with the same left-hand side N. The use of a rule can therefore cause a short-lived spike in its probability, as depicted in Fig. 8. As we will see below, this choice of success or failure makes the Decay model a simple generalization of a PCFG.

Successes are counted in a similar manner to primed rules in the Between model: after each word, we compute the most probable parse, and compute the set of rules used in this parse. Any new rule (compared to the set from the previous word) is considered to be a success at this word.7
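The counting step described above can be sketched as a set difference between consecutive best parses. This is only an illustration under stated assumptions: the function name, the representation of a rule use as a `(rule, start_position)` pair (chosen so that a second use of the same rule still counts as new), and the sample parses are all hypothetical and are not taken from the parser used in the paper.

```python
def new_rule_successes(best_parse_rules_per_word):
    """For each word, return the rule uses that appear in the current
    best parse but not in the best parse after the previous word."""
    successes = []
    previous = frozenset()
    for rules in best_parse_rules_per_word:
        current = frozenset(rules)
        successes.append(current - previous)  # rule uses that are new at this word
        previous = current
    return successes


# Hypothetical best-parse rule sets after each of three words.
# Each rule use is a (rule, start_position) pair (an assumption of this sketch).
parses = [
    {("NP -> N", 0)},
    {("NP -> N", 0), ("VP -> V NP", 1)},
    {("NP -> N", 0), ("VP -> V NP", 1), ("NP -> Det Adj N", 2)},
]
result = new_rule_successes(parses)
for word_index, new_rules in enumerate(result):
    print(word_index, sorted(new_rules))
```

Each success found this way would then be time-stamped with the position of the word at which it was detected.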

ACT-R assumes that the probability of declarative chunks is based upon their activation, and that this activation directly influences the time taken to retrieve chunks from long-term memory into buffers, where they can be acted upon. There is no such direct influence of the probability of success on the time to retrieve or act on a production rule. However, there is a clear, if indirect, influence on time: if the rule required for producing a parse has a high probability, the expected number of incorrect rules attempted will be low, leading to the prediction of lower processing difficulty; on the other hand, if the required rule has a low probability, the expected number of incorrect rules attempted will be high, leading to a prediction of high processing difficulty. This is no different from the intuition behind the linking hypothesis of Section 3.2, where we assumed that low probability (or high surprisal) corresponds to high processing difficulty. The novelty of the Decay model is that the time sensitivity of production rules now affects the calculated probabilities.

6 This assumption is reasonable on our low-memory load items, but may be untenable for parsing high-memory load constructions such as object relative clauses.

7 If a structure is ambiguous and requires reanalysis, we make the assumption that the initial incorrect analysis acts as a prime. However, because the items of the parallelism experiment are unambiguous, this assumption has no effect for our data set.

4.1.1. Parametrizing the model

Recall that in a probabilistic context-free grammar the probability of a grammar rule N → ζ is estimated as follows:

$$\hat{P}(\zeta \mid N) = \frac{c(N \rightarrow \zeta)}{c(N)} \tag{16}$$

As before, the function c(·) counts the number of times the event occurs. The Lovett model postulates that the probability of a certain production rule being picked is:

$$\hat{P} = \frac{\sum_{i \in \mathit{Successes}} t_i^{d}}{\sum_{i \in \mathit{Successes}} t_i^{d} + \sum_{j \in \mathit{Failures}} t_j^{d}} \tag{17}$$

Here, d is the decay constant, Successes is the set of successful rule applications, Failures is the set of unsuccessful rule applications, and $t_i$ is the time at which rule application i occurred. As described below, this time parameter can be estimated from a corpus. Following convention in the ACT-R literature (see Lewis & Vasishth, 2005), we set d to 0.5.

As noted above, the model uses a notion of success and failure appropriate for use with a probabilistic context-free grammar: a success is an application of a rule N → ζ, and a failure is any other rule application with the same left-hand side N. The standard ACT-R model defines successes and failures in terms of a higher-level task. In our case, the task is finding the best way to rewrite an N to get a correct parse. Using this choice for success and failure, we can rewrite the probability of a rule as

$$\hat{P}(\zeta \mid N) = \frac{\sum_{i \in \mathit{Successes}} t_i^{d}}{\sum_{i \in \mathit{Successes}} t_i^{d} + \sum_{j \in \mathit{Failures}} t_j^{d}} \tag{18}$$

Table 9. Mean log probability estimates for the best parse for items based on Frazier et al. (2000).

| Model | Para: (3a) | Non-para: (3b) | Non-para: (3c) | Para: (3d) | (3a) − (3b) | (3c) − (3d) |
|---|---|---|---|---|---|---|
| Baseline | −33.47 | −32.37 | −32.37 | −31.27 | −1.10 | −1.10 |
| Within | −33.28 | −31.67 | −31.70 | −29.92 | −1.61 | −1.78 |
| Copy | −16.18 | −27.22 | −26.91 | −15.87 | 11.04 | −11.04 |
| Decay | −39.27 | −38.14 | −38.02 | −36.86 | −1.13 | −1.16 |

For example, if the rule NP → Det Adj N is used to parse the tag sequence Det Adj N, then this rule will get a success at time $t_i$ while all other NP rules get a failure. Notice that if the parameter d is set to 0, all the exponentiated time parameters $t_i^{d}$ are set to 1, giving the maximum likelihood estimator for a PCFG in Eq. (16), which is what we used as our Baseline model. In other words, our Decay model has a standard PCFG as a special case.
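The reduction to Eq. (16) can be spelled out in one line. The sketch below relies only on the definitions given above: every exponentiated time term equals 1 when d = 0, the successes are exactly the applications of N → ζ, and successes and failures together are all applications of rules with left-hand side N.

$$
\hat{P}(\zeta \mid N)\Big|_{d=0}
= \frac{\sum_{i \in \mathit{Successes}} 1}{\sum_{i \in \mathit{Successes}} 1 + \sum_{j \in \mathit{Failures}} 1}
= \frac{|\mathit{Successes}|}{|\mathit{Successes}| + |\mathit{Failures}|}
= \frac{c(N \rightarrow \zeta)}{c(N)}
$$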

Standard ACT-R uses the activation of procedural rules as an intermediate step toward calculating time course information. However, the model presented here does not make any time course predictions. This choice was made due to our focus on syntactic processing behavior: obviously, time is also spent doing semantic, pragmatic and discourse inferences, which we do not attempt to model. Although this simplifies the model, it does pose a problem. One of the model parameters, $t_i$, is expressed in units of time, and cannot be observed directly in the corpus. To overcome this difficulty, we assume each word uniformly takes 500 ms to read. This is meant as an approximation of the average total reading time of a word.8 Because the previous occurrence of a constituent can be several sentences away, we expect that local inaccuracies will average out over a sufficiently large training corpus.
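As a rough illustration of how word positions can stand in for time, the following sketch computes a rule probability in the shape of Eq. (18) from word indices, using 500 ms per word. It makes two assumptions that are not stated in the text: t is measured as the time elapsed since each rule application, and the exponent is passed in explicitly so that the usual ACT-R convention of a negative exponent (−d) can be used. The function names and the rule history are hypothetical.

```python
def elapsed_seconds(event_word, current_word, word_duration=0.5):
    """Convert a distance in words into seconds (default: 500 ms per word)."""
    distance = current_word - event_word
    if distance <= 0:
        raise ValueError("the event must precede the current word")
    return distance * word_duration


def rule_probability(successes, failures, current_word, exponent, word_duration=0.5):
    """Ratio of summed time weights, in the shape of Eq. (18).

    successes / failures are word indices of past rule applications.
    Assumption of this sketch: weight = (elapsed time) ** exponent.
    """
    def weight(event_word):
        return elapsed_seconds(event_word, current_word, word_duration) ** exponent

    success_mass = sum(weight(i) for i in successes)
    failure_mass = sum(weight(j) for j in failures)
    return success_mass / (success_mass + failure_mass)


# Hypothetical history for one left-hand side: the target rule was applied
# at words 2 and 18, competing rules at words 5, 9 and 12; we are at word 20.
successes = [2, 18]
failures = [5, 9, 12]

p_flat = rule_probability(successes, failures, 20, exponent=0.0)
p_recent = rule_probability(successes, failures, 20, exponent=-0.5)
p_rescaled = rule_probability(successes, failures, 20, exponent=-0.5, word_duration=0.25)
print(p_flat, p_recent, p_rescaled)
```

With a zero exponent the result is the plain relative frequency (2 of 5 applications). With the negative exponent the recent use at word 18 raises the rule's probability above that baseline. Changing the per-word duration rescales every weight by the same factor, so the ratio is unchanged.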

4.2. Study 4: modeling parallelism experiments using the Decay model

The purpose of this experiment is to evaluate the ACT-R-inspired Decay model described in the previous section. Our hypothesis is that adding decay to the model, while increasing its cognitive realism, does not impair the model’s ability to predict the pattern of results in experiments of syntactic parallelism.

4.2.1. Method

As in Study 3, we estimated the model probabilities using the WSJ corpus. Similar to the Within model, parameter estimation requires traversing the rules in the same order the parser does, here to get accurate statistics for the time parameter.

4.2.2. Results and discussion

Following the method of Study 3, we test the model on the extended set of experimental stimuli based on Frazier et al. (2000). As described in Section 3.2, we use 24 items in four conditions, and compute the probability of the best parse for the sentences in each condition (see (3) in Section 3.2 for example sentences). The hypothesis of interest is again that the difference (3a) − (3b) is greater than the difference (3c) − (3d).

8 This value is arbitrary, but could be made precise using eye-tracking corpora which provide estimates for word reading times in continuous text (e.g., Kennedy & Pynte, 2005). Any constant value would produce the same modeling result. In general, frequency and length effects on reading time are well documented (Rayner, 1998) and could be incorporated into the model.

The results for the Decay model are shown in Table 9, with the results of the Baseline, Within, and Copy models from Study 3 as comparison. We find that the Decay model gives a significant parallelism effect using the Wilcoxon signed rank test (N = 24, Z = 4.27, p < .001, one-tailed). As with the Within model, the effect size is quite small, as a general mechanism is used to predict the parallelism effect, rather than a specialized one as in the Copy model.
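The difference columns of Table 9 can be re-derived from the condition means with a few lines of arithmetic. The sketch below does only that: it uses the four mean log probabilities reported per model and does not reproduce the per-item values on which the significance test was run. The names `TABLE_9`, `contrasts`, and `summary` are hypothetical.

```python
# Mean log probabilities copied from Table 9, in the order (3a), (3b), (3c), (3d).
TABLE_9 = {
    "Baseline": (-33.47, -32.37, -32.37, -31.27),
    "Within": (-33.28, -31.67, -31.70, -29.92),
    "Copy": (-16.18, -27.22, -26.91, -15.87),
    "Decay": (-39.27, -38.14, -38.02, -36.86),
}


def contrasts(a, b, c, d):
    """Return (3a) - (3b), (3c) - (3d), and the first minus the second."""
    first = a - b
    second = c - d
    return first, second, first - second


# Round to two decimals to match the precision of the table.
summary = {
    model: tuple(round(value, 2) for value in contrasts(*means))
    for model, means in TABLE_9.items()
}
for model, row in summary.items():
    print(model, row)
```

A positive third value means that (3a) − (3b) exceeds (3c) − (3d). On the means, this holds by a small margin for the Within and Decay models, by a large margin for the Copy model, and not at all for the Baseline.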

However, the Within model and the Decay model do not make identical predictions. The differences between the two models become clear upon closer examination of the experimental items. Some of the materials have a Det N as the subject, for instance The nurse checked a young woman and a patient before going home. In this example, the Within model will predict a speedup for a patient even though Frazier et al. (2000) would not consider it to be a parallel sentence. In such cases, the Decay model predicts some facilitation at the target NP, but the effect is weaker because of the greater distance from the target to the subject NP. This example illustrates how the Decay model benefits from the fact that it incorporates decay and therefore captures the granularity of the priming effect more accurately. This contrasts with the coarse-grained binary primed/unprimed distinction made by the Within model. We will return to this observation in the next section.

Moreover, the Decay model is more cognitively plausible than the Within model because it is grounded in research on cognitive architectures: we were able to re-use model parameters proposed in the ACT-R literature (such as the decay parameter) without resorting to stipulation or parameter tuning.

4.3. Study 5: the parallelism effect in German sentence coordination

Sections 3.2 and 4.1 introduced models which were able to simulate Experiment 3 of Frazier et al. (2000). The Frazier et al. experimental items are limited to English noun phrases. This raises the question whether our models generalize to other constructions and to other languages. The purpose of the present study is to address this question by modeling additional experimental data, viz., Koeferle and Crocker’s (2006) experiment on parallelism in German sentence coordination. Our hypothesis is that both the Copy model and the Decay model will be able to account for the German data.

Koeferle and Crocker’s (2006) items take advantage of German word order. Declarative sentences normally have a subject–verb–object (SVO) order, such as (4a). A temporal modifier can appear before the object, as illustrated in (4b). However, word order is flexible in German. The temporal modifier may be focused by bringing it to the front of the sentence, a process known as topicalization. The topicalized version of the last sentence is (4c). We will refer to this as a VSO (verb–subject–object) or subject-first order. A more marked word order would be topicalized verb–object–subject (VOS), as in (4d). We will refer to such sentences as VOS or object-first.

(4) a. Der Geiger lobte den Sänger.
   ‘The violinist complimented the singer.’

b. Der Geiger lobte vor ein paar Minuten den Sänger.
   ‘The violinist complimented the singer several minutes ago.’

c. Vor ein paar Minuten lobte der Geiger den Sänger.
   ‘Several minutes ago, the violinist complimented the singer.’

d. Vor ein paar Minuten lobte den Sänger der Geiger.
   ‘Several minutes ago, it was the singer that the violinist complimented.’

In the experiment of Koeferle and Crocker, each item contains two coordinated sentences, each of which is either subject-first or object-first. The experiment uses a 2 × 2 design: either subject-first or object-first in the first conjunct with either subject-first or object-first in the second conjunct. This leads to two parallel and two non-parallel conditions, as shown in (5) below. (In reality, Koeferle & Crocker’s (2006) items contain a spillover region which we removed, as explained below.)

(5) a. Vor ein paar Minuten lobte der Geiger den Sänger und in diesem Augenblick preist der Trommler den Dichter.
   ‘Several minutes ago, the violinist complimented the singer and at this moment the drummer is praising the poet.’

b. Vor ein paar Minuten lobte den Sänger der Geiger und in diesem Augenblick preist der Trommler den Dichter.
   ‘Several minutes ago, it was the singer that the violinist complimented and at this moment the drummer is praising the poet.’

c. Vor ein paar Minuten lobte der Geiger den Sänger und in diesem Augenblick preist den Dichter der Trommler.
   ‘Several minutes ago, the violinist complimented the singer and at this moment it is the poet that the drummer is praising.’