A Cost-Effective and Scalable Merge Sort Tree on FPGAs

-

Upload

takuma-usui -

Category

Engineering

-

view

74 -

download

1

Transcript of A Cost-Effective and Scalable Merge Sort Tree on FPGAs

A Cost-Effective and Scalable

Merge Sorter Tree on FPGAs

☆Takuma Usui, Thiem Van Chu, and Kenji Kise

Tokyo Institute of Technology, Japan

Department of Computer Science

CANDAR’16@Hiroshima, Japan

11:35-12:00 (Presentation: 20min, Q&A: 5min),

November 24, 2016

Executive summary

Integer sorting is a very important computing kernel which

can be accelerated using FPGAs.

FPGA resources are too limited to build a high performance

merge sorter tree.

We propose effective designs of cost-effective and scalable

merge sorter trees which have high performance in little

FPGA resource requirement.

We evaluate our architecture, and it achieves 52.4x lower

FPGA slice usage without serious throughput degradation.

1

2

Introduction

Sorting is important

Integer sorting is a fundamental computation kernel

3

Database OperationImage Processing Data Compression

Sorting

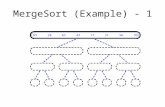

Merge Sorter Tree [4]

It merges multiple sorted record sequences.

𝐾: the number of input leaves (called as “ways”)

4[4] Dirk Koch et al, “FPGASort”, FPGA’11

4-way merge sorter tree

<

<

<

Stage 1 Stage 0Stage 2

18

24

37

09

Input leaves

(ways)01234789

FIFO

< Sorter cell

Performance and our purpose

Sorting time: 𝑂(log𝐾 #𝑟𝑒𝑐𝑜𝑟𝑑𝑠)

► Increasing 𝐾 is effective

FPGA resource requirement: 𝑂(𝐾)

►Cannot be implemented with 𝐾 ≥ 2,048 even if using a large FPGA

Our purpose: Build an optimal architecture for large trees

54-way merge sorter tree (𝐾 = 4)

<

<

<

Stage 1 Stage 0Stage 2

< Sorter cell

FIFO

Merge Sorter Tree: Steady state

Only one sorter cell is operating in each stage at one time

This feature is mentioned by the paper [12].

6

<< Active sorter cell Non-active sorter cell

13

11 10

82 12

81 80

2

15

23

1<

<

<

Stage 1 Stage 0Stage 2

[12]Megumi Ito et al, “Logic-Saving FPGA-Based Merge Sort on Single Sort Cells” (in Japanese), IPSJ SIG Technical Report,

vol. 2014-ARC-208.

Proposed by the paper [12] to reduce FPGA slices to 𝑂(log𝐾).

►Only 8-way and 16-way trees are built

►Reduced slices: 19%, 43% by using BRAMs for the RAM layer.

Single Sort Cells Merge Sorter Tree (SSC) [12]

7

<<

4-way SSC

<

<

<4-way merge

sorter tree

RAM layer

Only 1 cell is

located.FIFOs are

gathered.

Cycle N: How to control

FIFOs are numbered in each stage.

Cell 0: 2(FIFO 0 of stage 1) < 3(FIFO 1 of stage 1), 2 to the root

Send a request “Refill FIFO 0” to “Request queue”

8

<<

Request queue1

2

5 2

3

13

11 11

82 12

81 80

Cell 0Cell 1

13

10

10

2

3

2

FIFO 0

4-way SSC (𝐾 = 4)

0

1

2

3

0

1

23

13

10

Stage 1Stage 2

0 FIFO 0 is selected

Stage 0

Request: Refill FIFO 0

RAM layer

Cycle N+1: Execute the request

It is difficult to detect that FIFO 0 of stage 1 is not full.

► It is necessary that Cell 1 observe the state of all FIFOs.

Instead of that, the cell executes the issued request.

1. Read 13 and 11 from the two corresponding FIFOs

2. Write 11 to FIFO 0 of stage 1 (selected at the previous cycle)

3. Send request: “Refill FIFO 1” to Request queue 2

9

<<

Request queue1

Stage 1 Stage 0Stage 2

2

5

10 3

23 13

11

82 12

81 80

Cell 0Cell 1

13

11

11

10

3

3

FIFO 0

RAM layer

4-way SSC (𝐾 = 4)

0

1

2

3

0

1

15

Request queue 2

Execute the

request: Read,

select, and write

Write

Request: Refill FIFO 0

13

11

Request:

Refill FIFO 1

1

Cycle N+2: Complete the Request

The request “Refill FIFO 0” has been completed.

SSC repeats the operation recursively.

All cells operates at the same time every cycle.

SSC can operate every cycle.

10

<<

Request queue1

Stage 1 Stage 0Stage 2

3

11 10

5

23 13

15

82 12

81 80

Cell 0Cell 1

12

80

12

10

5

5

FIFO 0

RAM layer

4-way SSC (𝐾 = 4)

0

1

21

Request queue2

Read the

corresponding

2 records

Write

Request: Refill FIFO 1

Refilled

0

1

2

3

Request:

Refill FIFO 2Request: Refill

FIFO 1

11

Proposal of Effective Designs

and

Evaluation

Design goals of Our Proposal

Minimum performance degradation from the normal tree

Minimum FPGA resource requirement

Increasing 𝐾 does not decrease the frequency seriously.

12

Designs

1. Baseline design

2. Proposal 1: Critical-path optimized

►For not so large trees

3. Proposal 2: Record management with Block RAMs

►For so large trees

4. Combination of Proposal 1 and Proposal 2

13

Request queue 2

Baseline design

Minimal design of SSC

BRAMs for RAM layers (as [12]).

A cell sends a request in the form of an ID of the selected FIFO.

To execute a request by the cell, proper read addresses and a

write address have to be given to BRAMs.

Address calculation logic converts an ID into the addresses.

14

An ID of the

selected FIFO

Stage 2 Stage 1 Stage 0

Request queue 1

Read addresses

An ID of the

selected FIFO

Cell1

< <

Issue

a request

Cell0

BRAMs

0

1

Write

addressExecute a

request

0

1

2

3

Address

calculation

logic 1

Request queue2

Cell1

Address calculation logic

Focus on reading operation

It is a combinational circuit.

Each FIFO has a head pointer for reading.

Address calculation logic contains FIFO IDs and head pointers.

►Managed with Distributed RAMs

15

< <

Issue

a request

An ID of the

selected FIFO

Cell0

Request

queue 1

Stage 2 Stage 1 Stage 0

BRAMs

Address

calculation

logic 1Read addresses

An ID of the

selected FIFO

0

1

2

3

Head 0

Head 1

Head 2

Head 3

Distributed

RAMs

Request queue and BRAM cycle latency

A case where just giving the top of the request queue

A BRAM emits an entry 1 cycle after given an read address.

The sorter cell can operate once per 2 cycle.

16

Cell1

<

10

22

21

14

45

34

50

23

Request

queue 1

0Address

calculation

logic 1

1

Cell1

<

0 1Address

calculation

logic 1

10

Stall

Request

queue 1

10

22

21

14

45

34

50

23

22

10

Cycle N Cycle N+1

Read

addresses

Read

addresses

Request queue 1

1Address

calculation

logic 10

Solution

When the sorter cell is operating,

the 2nd request is given to the Address calculation logic.

Request queue is divided into 2 parts.

To operate cells every cycle, an input request is sometimes

passed through.

17Cycle N

Cell1<

Request queue 1

0

1

Address

calculation

logic 1

10

Cycle N+1

Cell1<

21

14

14

Active at Cycle N

Active at

Cycle N+1

10

22

21

14

45

34

50

23

45

22

21

14

34

50

23

10

22Active at

Cycle N+1Active at

Cycle N+2

Read

addresses

Read

addresses

14

Address

calculation

logic 1

Request full

The sorter cell has to stall if the output request queue is full.

► It occurs when the main inputs get empty.

When the cell is stalling, the top of the queue is given to the

Address calculation logic to keep the active elements.

18

Cell1

<

Address

calculation

logic 1

Cycle N+2

Cell1

<

Request queue 1

1 0

Stall

Request queue 2

is full

Request queue 1

1

0

Cycle N+1

Active at

Cycle N+1Active at

Cycle N+2

Active at

Cycle N+1Active at

Cycle N+2

45

22

21

14

34

50

23

Keep

active21

14

45

22

21

14

56

34

50

23

1421

14

Operate

correctly

Request

queue 1

is full

Read

addresses

Read

addresses

Designs

1. Baseline design

2. Proposal 1: Critical-path optimized

►For not so large trees

3. Proposal 2: Record management with BRAMs

►For so large trees

4. Combination of Proposal 1 and Proposal 2

19

Cell1

Proposal 1: Critical-path optimized

The rear part of the request queue becomes a 2 entry FIFO.

►The wire to operate the cell every cycle is long, so divided.

A pipeline register is inserted after a sorter cell.

20

< <

Issue

a request

Cell0

Stage 2 Stage 1 Stage 0

BRAMs

Request queue 1

Address

calculation

logic 1

An ID of the

selected FIFO

An ID of the

selected FIFORequest

queue 2

Designs

1. Baseline design

2. Proposal 1: Critical-path optimized

►For not so large trees

3. Proposal 2: Record management with BRAMs

►For so large trees

►BRAMs on Address calculation logic

4. Combination of Proposal 1 and Proposal 2

21

Request

queue 2

Cell1

Proposal 2: Record management with BRAMs

Where 𝐾 is so large, Distributed RAMs in Address calculation

logic becomes too large

►Decrease performance and increase slice requirement

Proposal: Record management with BRAMs

22

< <

Issue

a request

Cell0

BRAMs

Request

queue 1

Address

calculation

logic 1

Address

calculation

logic 1

Distributed

RAMsBRAMs

Stage 2 Stage 1 Stage 0

Problem of Record management with BRAMs

In Proposal 1, Required latency of the logics: 1

A BRAM emits an entry 1 cycle after given a read address.

Required latency of the logics: 2

Doubles BRAM capacity (Please see our manuscript).

23

Request

queue 2

Cell1

< <

Issue

a request

Cell0

BRAMs

Request

queue 1

Address

calculation

logic 1

Address

calculation

logic 1

Stage 2 Stage 1 Stage 0

Overall Design of Proposal 2

Exchange: Request queue and Address calculation logic

►Calculate the addresses just after the cell issues a request

Sometimes through Request queue to address ports

Required latency of the logics: 1 (as Proposal 1)

FIFO capacity becomes the same as Proposal 1.

24

Request

queue 1

Address

calculation

logic 1

ExchangedRequest

queue 2

Cell1

< <

Issue

a request

Cell0

BRAMs

Stage 2 Stage 1 Stage 0

Designs

1. Baseline design

2. Proposal 1: Critical-path optimized

►For not so large trees

3. Proposal 2: Record management with BRAMs

►For so large trees

►BRAMs on Address calculation logic

4. Combination of Proposal 1 and Proposal 2

25

Design Combination

Proposal 2 is effective only for large trees.

Threshold: 𝐾 = 1,024 (determined by the evaluation).

We combine Proposal 1 and Proposal 2

26

Proposal 1

>>…>>

Proposal 2

1,024 ways

2,048 ways

Evaluated Designs

Normal merge sorter tree (Not SSC)

►A component of FACE [11]

SSC

►Baseline: Baseline design

►Proposal 1: Critical-path optimized

►Proposal 2: Record management with BRAMs

►Combination: Combination of Proposal 1 and Proposal 2

27

<

<

<

<<

[11] R. Kobayashi et al, “FACE: Fast and Customizable Sorting Accelerator for Heterogeneous Many-core Systems,” MCSoC’15

Evaluation Setup

Data: 64-bit integer

16 ≤ 𝐾 ≤ 𝟒, 𝟎𝟗𝟔

Terms: Resource usage, clock frequency

Simulation Tool: Synopsys VCS

Design Tool: Xilinx Vivado 2014.4

►Synthesis option: Flow_PerfOptimized_High

► Implementation option: Performance ExplorePostRoutePhysOpt

Target FPGA: Xilinx Virtex7 XC7VX485T-2

► It is on a VC707 Evaluation Kit, which is an ordinary evaluation

environment.

28[11] R. Kobayashi et al, “FACE: Fast and Customizable Sorting Accelerator for Heterogeneous Many-core Systems,” MCSoC’15

Slice Usage

52.4x better than the normal tree (𝐾 = 1024, Proposal 1)

Slice usage is roughly proportional to log𝐾 in almost all SSCs.

Where 𝐾 ≥ 2,048, Proposal 1 consumes more slices.

► In the combined design, the usage is reduced to 𝑂(log𝐾).

The 4,096-way tree (Combination) utilizes only 1.72% of slices.

29

0

0.5

1

1.5

2

2.5

16 32 64 128 256 512 1024 2048 4096

Slice

usa

ge [

%]

Number of ways (K)

Baseline Proposal 1

Proposal 2 Combination

Operating Clock Frequency

Almost equal to merging throughput

Baseline is the lowest (about 150[MHz]).

While the degradation is 1.61x in Baseline compared to Normal,

it is suppressed to 1.31x in Proposal 1 (𝐾 = 1,024).

149[Million records/s] where 𝐾 = 4,096 in Combination

► 1.23x better than Baseline

30

0

50

100

150

200

250

300

16 32 64 128 256 512 1024 2048 4096

Fre

qu

en

cy [

MH

z]

Number of ways (K)

Normal Baseline Proposal 1

Proposal 2 Combination

Conclusion

We propose effective designs of cost-effective and scalable

merge sorter trees for FPGAs based on [12].

►For trees with thousands of input leaves

►Some optimizations and record management with BRAMs

Our proposed optimizations lead to 1.23x performance

improvement compared to Baseline (𝐾 = 4096, Combination)

Slice requirement is reduced to 𝑂(log𝐾) even where 𝐾 is so

large without serious performance degradation compared to

the normal tree which consumes 𝑂(𝐾) slices.

► 1,024-way: 52.4x fewer slices with only 1.31x performance degradation

► 4,096-way: 149[Million records(64-bit)/s] ,1.72% slices

31[12]Megumi Ito et al, “Logic-Saving FPGA-Based Merge Sort on Single Sort Cells” (in Japanese), IPSJ SIG Technical Report, vol. 2014-ARC-208.