A Convex Optimization Framework for Active Learning

Ehsan Elhamifar (University of California, Berkeley)

Guillermo Sapiro (Duke University)

Allen Yang, S. Shankar Sastry (University of California, Berkeley)

Abstract

In many image/video/web classification problems, we have access to a large number of unlabeled samples. However, it is typically expensive and time consuming to obtain labels for the samples. Active learning is the problem of progressively selecting and annotating the most informative unlabeled samples, in order to obtain a high classification performance. Most existing active learning algorithms select only one sample at a time prior to retraining the classifier. Hence, they are computationally expensive and cannot take advantage of parallel labeling systems such as Mechanical Turk. On the other hand, algorithms that allow the selection of multiple samples prior to retraining the classifier may select samples that have significant information overlap, or they involve solving a non-convex optimization. More importantly, the majority of active learning algorithms are developed for a certain classifier type such as SVM. In this paper, we develop an efficient active learning framework based on convex programming, which can select multiple samples at a time for annotation. Unlike the state of the art, our algorithm can be used in conjunction with any type of classifier, including those of the family of the recently proposed Sparse Representation-based Classification (SRC). We use the two principles of classifier uncertainty and sample diversity in order to guide the optimization program towards selecting the most informative unlabeled samples, which have the least information overlap. Our method can incorporate the data distribution in the selection process by using the appropriate dissimilarity between pairs of samples. We show the effectiveness of our framework in person detection, scene categorization and face recognition on real-world datasets.

1. Introduction

The goal of recognition algorithms is to obtain the highest level of classification accuracy on the data, which can be images, videos, web documents, etc. The common first step of building a recognition system is to provide the machine learner with labeled training samples. Thus, in supervised and semi-supervised frameworks, the classifier’s performance highly depends on the quality of the provided labeled training samples. In many problems in computer vision, pattern recognition and information retrieval, it is fairly easy to obtain a large number of unlabeled training samples, e.g., by downloading images, videos or web documents from the Internet. However, it is, in general, difficult to obtain labels for the unlabeled samples, since the labeling process is typically complex, expensive and time consuming. Active learning is the problem of progressively selecting and annotating the most informative data points from the pool of unlabeled samples, in order to obtain a high classification performance.

Prior Work. Active learning has been well studied in the literature with a variety of applications in image/video categorization [5, 15, 16, 22, 30, 33, 34, 37], text/web classification [25, 29, 38], relevance feedback [3, 36], etc. The majority of the literature considers single mode active learning [21, 23, 25, 27, 29, 31], where the algorithm selects and annotates only one unlabeled sample at a time prior to retraining the classifier. While this approach is effective in some applications, it has several drawbacks. First, there is a need to retrain the classifier after adding each new labeled sample to the training set, which can be computationally expensive and time consuming. Second, such methods cannot take advantage of parallel labeling systems such as Mechanical Turk or LabelMe [7, 24, 28], since they request annotation for only one sample at a time. Third, single mode active learning schemes might select and annotate an outlier instead of an informative sample for classification [26]. Fourth, these methods are often developed for a certain type of classifier such as SVM or Naive Bayes and cannot be easily modified to work with other classifier types [21, 23, 25, 29, 31].

To address some of the above issues, more recent meth-ods have focused on the batch mode active learning, wherethey select and annotate multiple unlabeled samples at atime prior to retraining the classifier [2, 5, 12, 17, 18]. No-tice that one can run a single mode active learning methodmultiple times without retraining the classifier in order toselect multiple unlabeled samples. However, the drawbackof this approach is that the selected samples can have sig-nificant information overlap, hence, they do not improve

4321

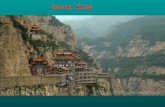

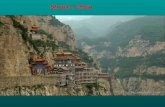

Figure 1. We demonstrate the effectiveness of our proposed active learning framework on three problems of person detection, scene categorization andface recognition. Top: sample images from the INRIA Person dataset [6]. The dataset contains images from 2 classes, either containing people or not.Middle: sample images from the Fifteen Scene Categories dataset [19]. The dataset contains images from 15 different categories, such as street, building,mountain, etc. Bottom: sample images from the Extended YaleB Face dataset [20]. The dataset contains images from 38 classes, corresponding to 38different individuals, captured under a fixed pose and varying illumination.

the classification performance compared to the single modeactive learning scheme. Other approaches try to decreasethe information overlap among the selected unlabeled sam-ples [2, 12, 13, 18, 36]. However, such methods are oftenad-hoc or involve a non-convex optimization, which can-not be solved efficiently [12, 13], hence approximate solu-tions are sought. Moreover, similar to the single mode ac-tive learning, most batch mode active learning algorithmsare developed for a certain type of a classifier and can-not be easily modified to work with other classifier types[12, 13, 17, 29, 32, 34].

Paper Contributions. In this paper, we develop an efficient active learning framework based on convex programming that can be used in conjunction with any type of classifier. We use the two principles of classifier uncertainty and sample diversity in order to guide the optimization program towards selecting the most informative unlabeled samples. More specifically, for each unlabeled sample, we define a confidence score that reflects how uncertain the sample’s predicted label is according to the current classifier and how dissimilar the sample is with respect to the labeled training samples. A large value of the confidence score for an unlabeled sample means that the current classifier is more certain about the predicted label of the sample and also that the sample is more similar to the labeled training samples. Hence, annotating it does not provide significant additional information to improve the classifier’s performance. On the other hand, an unlabeled sample with a small confidence score is more informative and should be labeled. Since we can have many unlabeled samples with low confidence scores and they may have information overlap with each other, i.e., can be similar to each other, we need to select a few representatives of the unlabeled samples with low confidence scores. We perform this task by employing and modifying a recently proposed algorithm for finding data representatives based on simultaneous sparse recovery [9].

The algorithm that we develop has the following advantages with respect to the state of the art:

– It addresses the batch mode active learning problem, hence, it can take advantage of parallel annotation systems such as Mechanical Turk and LabelMe.

– It can be used in conjunction with any type of classifier. The choice of the classifier affects the selection of unlabeled samples through the confidence scores, but the proposed framework is generic. In fact, in our experiments, we consider the problem of active learning using the recently proposed Sparse Representation-based Classification (SRC) method [35]. To the best of our knowledge, this is the first active learning framework for the SRC algorithm.

– It is based on convex programming, hence can be solved efficiently. Unlike the state of the art, it incorporates both the classifier uncertainty and sample diversity in a convex optimization to select multiple informative samples that are diverse with respect to each other and the labeled samples.

– It can incorporate the distribution of the data by using an appropriate dissimilarity matrix in the convex optimization program. The dissimilarity between pairs of points can be Euclidean distances (when the data come from a mixture of Gaussians), geodesic distances (when data lie on a manifold) or other types of content/application-dependent dissimilarity, which we do not restrict to come from a metric.

Paper Organization. The organization of the paper is as follows. In Section 2, we review the Dissimilarity-based Sparse Modeling Representative Selection (DSMRS) algorithm that we build upon in this paper. In Section 3, we propose our framework of active learning. We demonstrate experimental results on multiple real-world problems in Section 4. Finally, Section 5 concludes the paper.


2. Dissimilarity-based Sparse Modeling Representative Selection (DSMRS)

In this section, we review the Dissimilarity-based Sparse Modeling Representative Selection (DSMRS) algorithm [9, 10] that finds representative points of a dataset. Assume we have a dataset with $N$ points and we are given dissimilarities $\{d_{ij}\}_{i,j=1,\ldots,N}$ between every pair of points. $d_{ij}$ denotes how well $i$ represents $j$. The smaller the value of $d_{ij}$ is, the better point $i$ is a representative of point $j$. We assume that the dissimilarities are nonnegative and $d_{jj} \le d_{ij}$ for every $i$ and $j$. We can collect the dissimilarities in a matrix as

$$
D = \begin{bmatrix} d_1^\top \\ \vdots \\ d_N^\top \end{bmatrix}
= \begin{bmatrix} d_{11} & d_{12} & \cdots & d_{1N} \\ \vdots & \vdots & \ddots & \vdots \\ d_{N1} & d_{N2} & \cdots & d_{NN} \end{bmatrix}
\in \mathbb{R}^{N \times N}. \tag{1}
$$

Given the dissimilarities, the goal is to find a few points that well represent the dataset. To do so, [9] proposes a convex optimization framework by introducing variables $z_{ij}$ associated to $d_{ij}$. $z_{ij} \in [0, 1]$ indicates the probability that $i$ is a representative of $j$. We can collect the optimization variables in a matrix as

$$
Z = \begin{bmatrix} z_1^\top \\ \vdots \\ z_N^\top \end{bmatrix}
= \begin{bmatrix} z_{11} & z_{12} & \cdots & z_{1N} \\ \vdots & \vdots & \ddots & \vdots \\ z_{N1} & z_{N2} & \cdots & z_{NN} \end{bmatrix}
\in \mathbb{R}^{N \times N}. \tag{2}
$$

In order to select a few representatives that well encode the collection of points in the dataset, two objective functions should be optimized. The first objective function is the encoding cost of the $N$ data points via the representatives. The encoding cost of $j$ via $i$ is set to $d_{ij} z_{ij} \in [0, d_{ij}]$, hence the total encoding cost for all points is

$$
\sum_{i,j} d_{ij} z_{ij} = \operatorname{tr}(D^\top Z). \tag{3}
$$

The second objective function corresponds to penalizing the number of selected representatives. Notice that if $i$ is a representative of some points in the dataset, then $z_i \neq 0$ and if $i$ does not represent any point in the dataset, then $z_i = 0$. Hence, the number of representatives corresponds to the number of nonzero rows of $Z$. A convex surrogate for the cost associated to the number of selected representatives is given by

$$
\sum_{i=1}^{N} \|z_i\|_q \triangleq \|Z\|_{1,q}, \tag{4}
$$

where $q \in \{2, \infty\}$. Putting the two objectives together, the DSMRS algorithm solves

$$
\min \; \lambda \|Z\|_{1,q} + \operatorname{tr}(D^\top Z) \quad \text{s.t.} \quad Z \ge 0, \;\; \mathbf{1}^\top Z = \mathbf{1}^\top, \tag{5}
$$

where the constraints ensure that each column of $Z$ is a probability vector, denoting the association probability of $j$ to each one of the data points. Thus, the nonzero rows of the solution $Z$ indicate the indices of the representatives. Notice that $\lambda > 0$ balances the two costs of the encoding and the number of representatives. A smaller value of $\lambda$ puts more emphasis on better encoding, hence results in obtaining more representatives, while a larger value of $\lambda$ puts more emphasis on penalizing the number of representatives, hence results in obtaining fewer representatives.

Figure 2. Separating data in two different classes. Class 1 consists of data in $\{G^{(1)}_1, G^{(1)}_2, G^{(1)}_3\}$ and class 2 consists of data in $\{G^{(2)}_1, G^{(2)}_2\}$. Left: a max-margin linear SVM learned using two training samples (green crosses). Data in $G^{(1)}_2$ are misclassified as belonging to class 2. Note that labeling samples from $G^{(1)}_3$ or $G^{(2)}_2$ does not change the decision boundary much and $G^{(1)}_2$ will still be misclassified. Right: labeling a sample whose predicted class the classifier is more uncertain about helps to improve the classification performance. In this case, labeling a sample from $G^{(1)}_2$ that is close to the decision boundary changes the decision boundary and results in correct classification of all samples.
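To make the roles of the two terms in (5) concrete, the following minimal sketch evaluates the DSMRS objective for a given feasible $Z$ and reads off the representatives from its nonzero rows. It does not solve the convex program; the function names and the tolerances are illustrative assumptions and are not taken from [9].

```python
import math

def row_norm(row, q=2):
    """l_q norm of one row of Z, for q = 2 or q = math.inf."""
    if q == math.inf:
        return max(abs(v) for v in row)
    return math.sqrt(sum(v * v for v in row))

def dsmrs_objective(D, Z, lam, q=2):
    """lam * sum_i ||z_i||_q + sum_{i,j} d_ij * z_ij, the cost in (5)."""
    n = len(D)
    row_sparsity = sum(row_norm(Z[i], q) for i in range(n))
    encoding = sum(D[i][j] * Z[i][j] for i in range(n) for j in range(n))
    return lam * row_sparsity + encoding

def is_feasible(Z, tol=1e-9):
    """Check Z >= 0 and that every column of Z sums to one."""
    n = len(Z)
    nonnegative = all(v >= -tol for row in Z for v in row)
    columns_ok = all(
        abs(sum(Z[i][j] for i in range(n)) - 1.0) <= tol for j in range(n)
    )
    return nonnegative and columns_ok

def representatives(Z, tol=1e-6):
    """Indices of the (numerically) nonzero rows of Z."""
    return [i for i, row in enumerate(Z) if max(abs(v) for v in row) > tol]
```

For three points with dissimilarities `[[0, 1, 4], [1, 0, 3], [4, 3, 0]]`, letting every point represent itself gives zero encoding cost but three nonzero rows, while letting point 1 represent all points gives a single nonzero row at an encoding cost of 4. Comparing the two objective values for a small and a large `lam` reproduces the trade-off described above.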

3. Active Learning via Convex Programming

In this section, we propose an efficient algorithm for active learning that takes advantage of convex programming in order to find the most informative points. Unlike the state of the art, our algorithm can be used in conjunction with any classifier type. To do so, we use the two principles of classifier uncertainty and sample diversity to define confidence scores for unlabeled samples. A lower confidence score for an unlabeled sample indicates that we can obtain more information by annotating that sample. However, the number of unlabeled samples with low confidence scores can be large and, more importantly, the samples can have information overlap with each other or they can be outliers. Thus, we integrate the confidence scores in the DSMRS framework in order to find a few representative unlabeled samples that have low confidence scores. In the subsequent sections, we define the confidence scores and show how to use them in the DSMRS framework in order to find the most informative samples. We assume that we have a total of $N$ samples, where $\mathcal{U}$ and $\mathcal{L}$ denote the sets of indices of unlabeled and labeled samples, respectively.
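The overall batch mode procedure outlined above can be sketched as a generic loop. This is only a skeleton under stated assumptions: the four callables (`train`, `confidence`, `select_batch`, `annotate`) are hypothetical placeholders for the classifier, the confidence scores, the selection step and the labeling system, and none of them is specified in this form in the paper.

```python
def batch_active_learning(train, confidence, select_batch, annotate,
                          labeled, unlabeled, rounds):
    """Generic batch mode active learning loop (all callables are placeholders).

    train(labeled) -> model
    confidence(model, labeled, unlabeled) -> {index: confidence score}
    select_batch(scores, unlabeled) -> list of indices to annotate
    annotate(indices) -> {index: label}
    """
    labeled = dict(labeled)      # index -> label
    unlabeled = set(unlabeled)   # indices without labels
    model = train(labeled)
    for _ in range(rounds):
        if not unlabeled:
            break
        scores = confidence(model, labeled, unlabeled)
        batch = select_batch(scores, unlabeled)
        labeled.update(annotate(batch))  # the whole batch can be labeled in parallel
        unlabeled -= set(batch)
        model = train(labeled)           # one retraining per batch, not per sample
    return model, labeled, unlabeled
```

The point of the sketch is the placement of the retraining step: the classifier is retrained once per selected batch, which is what allows the use of parallel annotation systems.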


3.1. Classifier Uncertainty

First, we use the classifier uncertainty in order to select informative points for improving the classifier performance. The uncertainty sampling principle [4] states that the informative samples for classification are the ones that the classifier is most uncertain about.

To illustrate this, consider the example shown in the left plot of Figure 2, where the data belong to two different classes. $G^{(i)}_j$ denotes the $j$-th cluster of samples that belong to class $i$. Assume that we already have two labeled samples, shown by green crosses, one from each class. For this specific example, we consider the linear SVM classifier but the argument is general and applies to other classifier types. A max-margin hyperplane learned via SVM for the two training samples is shown in the figure. Notice that the classifier is more confident about the labels of samples in $G^{(1)}_3$ and $G^{(2)}_2$ as they are farther from the decision boundary, while it is less confident about the labels of samples in $G^{(1)}_2$, since they are closer to the hyperplane. In this case, labeling any of the samples in $G^{(1)}_3$ or $G^{(2)}_2$ does not change the decision boundary, hence, samples in $G^{(1)}_2$ will still be misclassified. On the other hand, labeling a sample from $G^{(1)}_2$ changes the decision boundary so that points in the two classes will be correctly classified, as shown in the right plot of Figure 2.

Now, for a generic classifier, we define its confidence about the predicted label of an unlabeled sample. Consider data in $L$ different classes. For an unlabeled sample $i$, we consider the probability vector $p_i = [\,p_{i1} \; \cdots \; p_{iL}\,]$, where $p_{ij}$ denotes the probability that sample $i$ belongs to class $j$. We define the classifier confidence score of point $i$ as

$$
c_{\text{classifier}}(i) \triangleq \sigma - (\sigma - 1)\,\frac{E(p_i)}{\log_2(L)} \in [1, \sigma], \tag{6}
$$

where $\sigma > 1$ and $E(\cdot)$ denotes the entropy function. Note that when the classifier is most certain about the label of a sample $i$, i.e., only one element of $p_i$ is nonzero and equal to one, then the entropy is zero and the confidence score is maximum, i.e., is equal to $\sigma$. On the other hand, when the classifier is most uncertain about the label of a sample $i$, i.e., when all the elements of $p_i$ are equal to $1/L$, then the entropy is equal to $\log_2(L)$ and the confidence score is minimum, i.e., is equal to one.
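The two extreme cases discussed for (6) can be checked numerically with a short sketch. The function name is an assumption made for illustration, the entropy is taken in base 2 to match the $\log_2(L)$ normalization, and the default $\sigma = 20$ is the value used in the experiments of Section 4.

```python
import math

def classifier_confidence(p, sigma=20.0):
    """Score (6): sigma - (sigma - 1) * E(p) / log2(L), with L = len(p) >= 2."""
    num_classes = len(p)
    # Shannon entropy in bits; terms with zero probability contribute nothing.
    entropy = -sum(v * math.log2(v) for v in p if v > 0.0)
    return sigma - (sigma - 1.0) * entropy / math.log2(num_classes)
```

A one-hot probability vector yields the maximum score $\sigma$, a uniform vector yields the minimum score 1, and every other vector falls in between.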

Figure 3. Separating data in two different classes. Class 1 consists of data in $\{G^{(1)}_1, G^{(1)}_2\}$ and class 2 consists of data in $\{G^{(2)}_1, G^{(2)}_2\}$. Left: a max-margin linear SVM learned using two training samples (green crosses). Data in $G^{(1)}_2$ and $G^{(2)}_2$ are misclassified as belonging to class 2 and 1, respectively. Note that the most uncertain samples according to the classifier are samples from $G^{(1)}_1$ and $G^{(2)}_1$, which are close to the decision boundary. However, labeling such samples does not change the decision boundary much and samples in $G^{(1)}_2$ and $G^{(2)}_2$ will still be misclassified. Right: labeling samples that are sufficiently dissimilar from the labeled training samples helps to improve the classification performance. In this case, labeling a sample from $G^{(1)}_2$ and a sample from $G^{(2)}_2$ results in changing the decision boundary and correct classification of all samples.

Remark 1 For probabilistic classifiers such as Naive Bayes, the probability vectors, $p_i$, are directly given by the output of the algorithms. For SVM, we use the result of [14] to estimate $p_i$. For SRC, we can compute the multi-class probability vectors as follows. Let $x_i = [\,x_{i1}^\top \; \cdots \; x_{iL}^\top\,]^\top$ be the sparse representation of an unlabeled sample $i$, where $x_{ij}$ denotes the representation coefficients using labeled samples from class $j$. We set $p_{ij} \triangleq \|x_{ij}\|_1 / \|x_i\|_1$.
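The SRC probability estimate of Remark 1 can be sketched as follows, assuming the sparse coefficient vector has already been computed and split into one block per class. The function name and the error raised for an all-zero representation are illustrative choices, not part of the SRC method [35].

```python
def src_class_probabilities(coeffs_by_class):
    """p_ij = ||x_ij||_1 / ||x_i||_1, given the per-class coefficient blocks x_ij."""
    block_norms = [sum(abs(v) for v in block) for block in coeffs_by_class]
    total = sum(block_norms)
    if total == 0:
        # Assumption: an all-zero representation carries no class information.
        raise ValueError("sparse representation is all zeros")
    return [norm / total for norm in block_norms]
```

The resulting vector is nonnegative and sums to one, so it can be passed directly to the entropy in (6).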

3.2. Sample Diversity

We also use the sample diversity criterion in order to find the most informative points for improving the classifier performance. More specifically, sample diversity states that informative points for classification are the ones that are sufficiently dissimilar from the labeled training samples (and from each other in the batch mode setting).

To illustrate this, consider the example of Figure 3, where the data belong to two different classes. $G^{(i)}_j$ denotes the $j$-th cluster of samples that belong to class $i$. Assume that we already have two labeled samples, shown by green crosses, one from each class. For this example, we consider the linear SVM classifier but the argument applies to other classifier types. The max-margin hyperplane learned via SVM for the two training samples is shown in the left plot of Figure 3. Notice that samples in $G^{(1)}_1$ and $G^{(2)}_1$ are similar to the labeled samples (have small Euclidean distances to the labeled samples in this example). In fact, labeling any of the samples in $G^{(1)}_1$ or $G^{(2)}_1$ does not change the decision boundary much, and the points in $G^{(1)}_2$ will still be misclassified as belonging to class 2. On the other hand, samples in $G^{(1)}_2$ and $G^{(2)}_2$ are more dissimilar from the labeled training samples. In fact, labeling a sample from $G^{(1)}_2$ or $G^{(2)}_2$ changes the decision boundary so that points in the two classes will be correctly classified, as shown in the right plot of Figure 3.

Figure 4. Separating data in two different classes. Class 1 consists of data in $\{G^{(1)}_1, G^{(1)}_2\}$ and class 2 consists of data in $\{G^{(2)}_1, G^{(2)}_2\}$. Left: a max-margin linear SVM learned using two training samples (green crosses). All samples in $G^{(2)}_2$ as well as some samples in $G^{(1)}_2$ are misclassified. Middle: two samples with lowest confidence scores correspond to two samples from $G^{(1)}_2$ that are close to the decision boundary. A retrained classifier using these two samples, which have information overlap, still misclassifies samples in $G^{(2)}_2$. Right: two representatives of samples with low confidence scores correspond to a sample from $G^{(1)}_2$ and a sample from $G^{(2)}_2$. A retrained classifier using these two samples correctly classifies all the samples in the dataset.

To incorporate diversity with respect to the labeled training set, $\mathcal{L}$, for a point $i$ in the unlabeled set, $\mathcal{U}$, we define the diversity confidence score as

$$
c_{\text{diversity}}(i) \triangleq \sigma - (\sigma - 1)\,\frac{\min_{j \in \mathcal{L}} d_{ji}}{\max_{k \in \mathcal{U}} \min_{j \in \mathcal{L}} d_{jk}} \in [1, \sigma], \tag{7}
$$

where $\sigma > 1$. When the closest labeled sample to an unlabeled sample $i$ is very similar to it, i.e., $\min_{j \in \mathcal{L}} d_{ji}$ is close to zero, then the diversity confidence score is large, i.e., is close to $\sigma$. This means that sample $i$ does not promote diversity. On the other hand, when all labeled samples are very dissimilar from an unlabeled sample $i$, i.e., the fraction in (7) is close to one, then the diversity confidence score is small, i.e., is close to one. This means that selecting and annotating sample $i$ promotes diversity with respect to the labeled samples.
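As a rough illustration of (7), the sketch below computes the diversity confidence score of every unlabeled sample from a full dissimilarity matrix, where `D[j][i]` stores $d_{ji}$. The function name and the handling of the degenerate case in which every unlabeled sample coincides with a labeled one (denominator zero) are assumptions added here; the paper does not discuss that case.

```python
def diversity_confidence(D, unlabeled, labeled, sigma=20.0):
    """Score (7) for each unlabeled index; D[j][i] is the dissimilarity d_ji."""
    # Dissimilarity of each unlabeled sample to its closest labeled sample.
    nearest = {i: min(D[j][i] for j in labeled) for i in unlabeled}
    largest = max(nearest.values())
    if largest == 0:
        # Assumption: if all unlabeled samples duplicate labeled ones,
        # none of them promotes diversity, so all get the maximum score.
        return {i: sigma for i in unlabeled}
    return {i: sigma - (sigma - 1.0) * nearest[i] / largest for i in unlabeled}
```

For four points on a line at positions 0, 1, 5 and 10, with the points at 0 and 10 labeled, the point at 5 is farthest from the labeled set and receives the minimum score 1, while the point at 1 receives a score close to $\sigma$.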

3.3. Selecting Informative Samples

Recall that our goal is to have a batch mode active learning framework that selects multiple informative and diverse unlabeled samples, with respect to the labeled samples as well as each other, for annotation. One can think of a simple algorithm that selects samples that have the lowest confidence scores. The drawback of this approach is that while the selected unlabeled samples are diverse with respect to the labeled training samples, they can still have significant information overlap with each other. This comes from the fact that the confidence scores only reflect the relationship of each unlabeled sample with respect to the classifier and the labeled training samples and do not capture the relationships among the unlabeled samples.

To illustrate this, consider the example of Figure 4, where the data belong to two different classes. Assume that we already have two labeled samples, shown by green crosses, one from each class. A max-margin hyperplane learned via SVM for the two training samples is shown in the left plot of Figure 4. In this case, all samples in $G^{(2)}_2$ as well as some samples in $G^{(1)}_2$ are misclassified. Notice that samples in $G^{(1)}_2$ have small classifier and diversity confidence scores and samples in $G^{(2)}_2$ have small diversity confidence scores. Now, if we select two samples with lowest confidence scores, we will select two samples from $G^{(1)}_2$, as they are very close to the decision boundary. However, these two samples have information overlap, since they belong to the same cluster. In fact, after adding these two samples to the labeled training set, the retrained classifier, shown in the middle plot of Figure 4, still misclassifies samples in $G^{(2)}_2$. On the other hand, two representatives of samples with low confidence scores, i.e., two samples that capture the distribution of samples with low confidence scores, correspond to one sample from $G^{(1)}_2$ and one sample from $G^{(2)}_2$. As shown in the right plot of Figure 4, the retrained classifier using these two points correctly classifies all of the samples.

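To select a few diverse representatives of unlabeled samples that have low confidence scores, we take advantage of the DSMRS algorithm. Let $D \in \mathbb{R}^{|\mathcal{U}| \times |\mathcal{U}|}$ be the dissimilarity matrix for samples in the unlabeled set $\mathcal{U} = \{i_1, \ldots, i_{|\mathcal{U}|}\}$. We propose to solve the convex program

$$
\min \; \lambda \|CZ\|_{1,q} + \operatorname{tr}(D^\top Z) \quad \text{s.t.} \quad Z \ge 0, \;\; \mathbf{1}^\top Z = \mathbf{1}^\top, \tag{8}
$$

over the optimization matrix $Z \in \mathbb{R}^{|\mathcal{U}| \times |\mathcal{U}|}$. The matrix $C = \operatorname{diag}(c(i_1), \ldots, c(i_{|\mathcal{U}|}))$ is the confidence matrix with the active learning confidence scores, $c(i)$, defined as

$$
c(i_k) \triangleq \min\{c_{\text{classifier}}(i_k), \, c_{\text{diversity}}(i_k)\} \in [1, \sigma]. \tag{9}
$$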

More specifically, for an unlabeled sample $i_k$ that has a small confidence score $c(i_k)$, the optimization program puts less penalty on the $k$-th row of $Z$ being nonzero. On the other hand, for a sample $i_k$ that has a large confidence score $c(i_k)$, the optimization program puts more penalty on the $k$-th row of $Z$ being nonzero. Hence, the optimization promotes selecting a few unlabeled samples with low confidence scores that are, at the same time, representatives of the distribution of the samples. This therefore addresses the main problems with previous active learning algorithms, which we discussed in Section 1.

Figure 5. Classification accuracy of different active learning algorithms (CPAL, CCAL, RAND) on the INRIA Person dataset as a function of the total number of labeled training samples selected by each algorithm. Horizontal axis: labeled training set size; vertical axis: test set accuracy.

Remark 2 We should note that other combinations of the classifier and diversity scores can be used, such as $c(i) \triangleq \sqrt{c_{\text{classifier}}(i) \cdot c_{\text{diversity}}(i)} \in [1, \sigma]$. However, (9) is very intuitive and works best in our experiments.
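The combination rule (9), the geometric-mean alternative of Remark 2, and the way the scores act as row weights in (8) can be illustrated with a small sketch. It relies on the identity $\|CZ\|_{1,q} = \sum_k c(i_k)\,\|z_k\|_q$, which holds because $C$ is diagonal with positive entries. The function names and the `rule` argument are assumptions introduced for the example.

```python
import math

def combined_confidence(c_classifier, c_diversity, rule="min"):
    """Combine the two scores via (9) ("min") or the geometric mean of Remark 2."""
    if rule == "min":
        return min(c_classifier, c_diversity)
    if rule == "geometric":
        return math.sqrt(c_classifier * c_diversity)
    raise ValueError("rule must be 'min' or 'geometric'")

def weighted_row_penalty(Z, c, q=2):
    """||CZ||_{1,q} = sum_k c[k] * ||z_k||_q for C = diag(c) with positive c."""
    def norm(row):
        if q == math.inf:
            return max(abs(v) for v in row)
        return math.sqrt(sum(v * v for v in row))
    return sum(ck * norm(row) for ck, row in zip(c, Z))
```

With the `min` rule, a sample is treated as informative as soon as either criterion gives it a low score, whereas the geometric mean lets a high score on one criterion partially offset a low score on the other. In the penalty, activating the row of a sample with score 1 costs a factor of $\sigma$ less than activating the same row for a sample with score $\sigma$.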

Remark 3 In the supplementary materials, we discuss the appropriate choice for the range of the regularization parameter, $\lambda$, in (8). We also explain how our algorithm is robust to outliers and can reject them for annotation. Moreover, we present an alternative convex formulation to (8), where we can impose the number of selected samples and can also address active learning with a given budget [11, 31, 34].

4. Experiments

In this section, we examine the performance of our proposed active learning framework on several real-world applications. We consider person detection, scene categorization and face recognition from real images (see Figure 1). We refer to our approach, formulated in (8), as Convex Programming-based Active Learning (CPAL) and implement it using the Alternating Direction Method of Multipliers (ADMM) [1], which has quadratic complexity in the number of unlabeled samples. For all the experiments, we fix $\sigma = 20$ in (6) and (7); however, the performance does not change much for $\sigma \in [5, 40]$. As the experimental results show, our algorithm works well with different types of classifiers.

Figure 6. Total number of samples from each class (person, nonperson) of the INRIA Person dataset selected by our proposed algorithm (CPAL) at different active learning iterations. Horizontal axis: iteration number; vertical axis: labeled training set size.

To illustrate the effect of confidence scores and representativeness of samples in the performance of our proposed framework, we consider several methods for comparison. Assume that our algorithm selects $K_t$ samples at iteration $t$, i.e., prior to training the classifier for the $t$-th time.

– We select $K_t$ samples uniformly at random from the pool of unlabeled samples. We refer to this method as RAND.

– We select $K_t$ samples that have the smallest classifier confidence scores. For an SVM classifier, this method corresponds to the algorithm proposed in [29]. We refer to this algorithm as Classifier Confidence-based Active Learning (CCAL).
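The two baselines above can be sketched in a few lines. The function names, the `seed` argument and the dictionary of precomputed classifier confidence scores are assumptions made for illustration; the batch size `k` plays the role of $K_t$.

```python
import random

def select_rand(unlabeled, k, seed=None):
    """RAND: draw k distinct unlabeled indices uniformly at random."""
    rng = random.Random(seed)
    return rng.sample(list(unlabeled), k)

def select_ccal(unlabeled, classifier_scores, k):
    """CCAL: take the k unlabeled indices with the smallest classifier confidence."""
    return sorted(unlabeled, key=lambda i: classifier_scores[i])[:k]
```

Neither baseline looks at the dissimilarities among the unlabeled samples, which is the ingredient that CPAL adds through (8).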

4.1. Person Detection

In this section, we consider the problem of detecting humans in images. To do so, we use the INRIA Person dataset [6] that consists of a set of positive training/test images, which contain people, and a set of negative training/test images, which do not contain a person (see Figure 1). For each image in the dataset, we compute the Histogram of Oriented Gradients (HOG), which has been shown to be an effective descriptor for the task of person detection [6, 8]. We use the positive/negative training images in the dataset to form the pool of unlabeled samples (2,416 positive and 2,736 negative samples) and use the positive/negative test images for testing (1,126 positive and 900 negative samples). For this binary classification problem ($L = 2$), we use the linear SVM classifier, which has been shown to work well with HOG features for the person detection task [6, 8]. We use the $\chi^2$-distance to compute the dissimilarity between the histograms, as it works better than other dissimilarity types, such as the $\ell_1$-distance and KL-divergence, in our experiments.
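For reference, a common form of the $\chi^2$-distance between two nonnegative histograms is sketched below. The paper does not state which normalization it uses, so the factor $1/2$, the skipping of empty bins and the function name are assumptions.

```python
def chi2_distance(h1, h2, eps=1e-12):
    """0.5 * sum_b (h1[b] - h2[b])**2 / (h1[b] + h2[b]) over non-empty bins."""
    total = 0.0
    for a, b in zip(h1, h2):
        s = a + b
        if s > eps:  # bins that are empty in both histograms contribute zero
            total += (a - b) ** 2 / s
    return 0.5 * total
```

This quantity is symmetric and zero for identical histograms, so a matrix of pairwise values satisfies the nonnegativity and $d_{jj} \le d_{ij}$ assumptions of Section 2.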

Figure 5 shows the classification accuracy of different active learning methods on the test set as a function of the total number of labeled samples. From the results, we make the following conclusions:

– Our proposed active learning algorithm consistently outperforms other algorithms. In fact, with 316 labeled samples, CPAL obtains 96% accuracy while other methods obtain less than 84% accuracy on the test set.

– CCAL and RAND perform worse than our proposed algorithm. This comes from the fact that the samples selected by CCAL can have information overlap and do not necessarily represent the distribution of unlabeled samples with low confidence scores. Also, RAND ignores all confidence scores and obtains, in general, lower classification accuracy than CCAL.

Figure 7. Classification accuracy of different active learning algorithms (CPAL, CCAL, RAND) on the Fifteen Scene Categories dataset as a function of the total number of labeled training samples selected by each algorithm. Horizontal axis: labeled training set size; vertical axis: test set accuracy.

Figure 6 shows the total number of samples selected by our method from each class. Although our active learning algorithm is unaware of the separation of unlabeled samples into classes, it consistently selects about the same number of samples from each class. Notice also that our method selects slightly more samples from the nonperson class, since, as expected, the negative images have more variation than the positive ones.

4.2. Scene Categorization

Figure 8. Classification accuracy of different active learning algorithms (CPAL, CCAL, RAND) on the Extended YaleB Face dataset as a function of the total number of labeled training samples selected by each algorithm. Horizontal axis: labeled training set size; vertical axis: test set accuracy.

In this section, we consider the problem of scene categorization in images. We use the Fifteen Scene Categories dataset [19] that consists of images from $L = 15$ different classes, such as coasts, forests, highways, mountains, stores, etc. (see Figure 1). There are between 210 and 410 images in each class, making a total of 4,485 images in the dataset. We randomly select 80% of images in each class to form the pool of unlabeled samples and use the remaining 20% of images in each class for testing. We use the kernel SVM classifier (one-versus-rest) with the Spatial Pyramid Match (SPM) kernel, which has been shown to be effective for scene categorization [19]. More specifically, the SPM kernel between a pair of images is given by the weighted intersection of the multi-resolution histograms of the images. We use 3 pyramid levels and 200 bins to compute the histograms and the kernel. As the SPM is itself a similarity between pairs of images, we also use it to compute the dissimilarities by negating the similarity matrix and shifting the elements to become non-negative.
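The conversion from a similarity matrix to dissimilarities by negating and shifting could look like the sketch below. The size of the shift is not specified in the text; the smallest shift that makes every entry non-negative (the maximum similarity) is assumed here, and the function name is illustrative.

```python
def similarity_to_dissimilarity(S):
    """Negate a similarity matrix and shift it so that all entries are >= 0."""
    shift = max(max(row) for row in S)  # assumption: smallest valid shift
    return [[shift - s for s in row] for row in S]
```

With this choice the most similar pair receives dissimilarity zero, and the ordering of pairs is reversed relative to the kernel values.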

Figure 7 shows the accuracy of different active learning methods on the test set as a function of the total number of selected samples. Our method consistently performs better than other approaches. Unlike the experiment in the previous section, here the RAND method, in general, performs better than the CCAL method, which selects multiple samples with low confidence scores. A careful look at the samples selected by the different methods shows that this is due to the fact that CCAL may repeatedly select similar samples from a fixed class, while a random strategy, in general, does not get stuck repeatedly selecting similar samples from a fixed class.

4.3. Face Recognition

Finally, we consider the problem of active learning for face recognition. We use the Extended YaleB Face dataset [20], which consists of face images of $L = 38$ individuals (classes). Each class consists of 64 images captured under the same pose and varying illumination. We randomly select 80% of images in each class to form the pool of unlabeled samples and use the remaining 20% of images in each class for testing. We use the Sparse Representation-based Classification (SRC), which has been shown to be effective for the classification of human faces [35]. To the best of our knowledge, our work is the first one addressing the active learning problem in conjunction with SRC. We downsample the images and use the 504-dimensional vectorized images as the feature vectors. We use the Euclidean distance to compute dissimilarities between pairs of samples.

Figure 8 shows the classification accuracy of different active learning methods as a function of the total number of labeled training samples selected by each algorithm. One can see that our proposed algorithm performs better than the other methods. With a total of 790 labeled samples (an average of 21 samples per class), we obtain the same accuracy (about 97%) as reported in [35] for 32 random samples per class. It is important to note that the performances of RAND and CCAL are very close. This comes from the fact that the space of images from each class is not densely sampled. Hence, samples are typically dissimilar from each other. As a result, samples with low confidence scores are generally dissimilar from each other.

5. Conclusions

We proposed a batch mode active learning algorithm based on simultaneous sparse recovery that can be used in conjunction with any classifier type. The advantage of our algorithm with respect to the state of the art is that it incorporates classifier uncertainty and sample diversity principles via confidence scores in a convex programming scheme. Thus, it selects the most informative unlabeled samples for classification that are sufficiently dissimilar from each other as well as the labeled samples and represent the distribution of the unlabeled samples. We demonstrated the effectiveness of our approach by experiments on person detection, scene categorization and face recognition on real-world images.

Acknowledgment

E. Elhamifar, A. Yang and S. Sastry are supported by grants ONR-N000141310341, ONR-N000141210609, BAE Systems (94316), DOD Advanced Research Project (30989), Army Research Laboratory (31633), and University of Pennsylvania (96045). G. Sapiro acknowledges the support by ONR, NGA, NSF, ARO and AFOSR grants.

References

[1] S. Boyd, N. Parikh, E. Chu, B. Peleato, and J. Eckstein. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends in Machine Learning, 2010.

[2] K. Brinker. Incorporating diversity in active learning with support vector machines. ICML, 2003.

[3] E. Chang, S. Tong, K. Goh, and C. Chang. Support vector machine concept-dependent active learning for image retrieval. IEEE Trans. on Multimedia, 2005.

[4] D. Cohn, L. Atlas, and R. Ladner. Improving generalization with active learning. Journal of Machine Learning, 1994.

[5] B. Collins, J. Deng, K. Li, and L. Fei-Fei. Towards scalable dataset construction: An active learning approach. ECCV, 2008.

[6] N. Dalal and B. Triggs. Histograms of oriented gradients for human detection. CVPR, 2005.

[7] J. Deng, W. Dong, R. Socher, L. J. Li, K. Li, and L. Fei-Fei. Imagenet: A large-scale hierarchical image database. CVPR, 2009.

[8] P. Dollar, C. Wojek, B. Schiele, and P. Perona. Pedestrian detection: A benchmark. CVPR, 2009.

[9] E. Elhamifar, G. Sapiro, and R. Vidal. Finding exemplars from pairwise dissimilarities via simultaneous sparse recovery. NIPS, 2012.

[10] E. Elhamifar and S. S. Sastry. Dissimilarity-based sparse modeling representative selection. Submitted to IEEE Trans. PAMI, 2013.

[11] R. Greiner, A. Grove, and D. Roth. Learning cost-sensitive active classifiers. Artificial Intelligence, 2002.

[12] Y. Guo and D. Schuurmans. Discriminative batch mode active learning. NIPS, 2007.

[13] S. Hoi, R. Jin, J. Zhu, and M. Lyu. Semi-supervised svm batch mode active learning with applications to image retrieval. ACM Trans. on Information Systems, 2009.

[14] T. K. Huang, R. C. Weng, and C. J. Lin. Generalized bradley-terry models and multi-class probability estimates. JMLR, 2006.

[15] A. Joshi, F. Porikli, and N. Papanikolopoulos. Multi-class active learning for image classification. IEEE CVPR, 2009.

[16] A. Kapoor, K. Grauman, R. Urtasun, and T. Darrell. Active learning with gaussian processes for object categorization. ICCV, 2007.

[17] A. Kovashka, S. Vijayanarasimhan, and K. Grauman. Actively selecting annotations among objects and attributes. ICCV, 2011.

[18] A. Krause and C. Guestrin. Nonmyopic active learning of gaussian processes: an exploration-exploitation approach. ICML, 2007.

[19] S. Lazebnik, C. Schmid, and J. Ponce. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. CVPR, 2006.

[20] K. C. Lee, J. Ho, and D. Kriegman. Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans. PAMI, 2005.

[21] K. Nigam, A. McCallum, S. Thrun, and T. Mitchell. Text classification from labeled and unlabeled documents using em. Journal of Machine Learning, 2000.

[22] G. J. Qi, X. S. Hua, Y. Rui, J. Tang, and H. J. Zhang. Two-dimensional active learning for image classification. CVPR, 2008.

[23] N. Roy and A. McCallum. Toward optimal active learning through sampling estimation of error reduction. ICML, 2001.

[24] B. Russell, A. Torralba, K. Murphy, and W. Freeman. Labelme: a database and web-based tool for image annotation. IJCV, 2008.

[25] G. Schohn and D. Cohn. Less is more: Active learning with support vector machines. ICML, 2000.

[26] B. Settles and M. Craven. An analysis of active learning strategies for sequence labeling tasks. Conference on Empirical Methods in Natural Language Processing, 2008.

[27] B. Siddiquie and A. Gupta. Beyond active noun tagging: Modeling contextual interactions for multi-class active learning. CVPR, 2010.

[28] A. Sorokin and D. Forsyth. Utility data annotation with amazon mechanical turk. CVPR, 2008.

[29] S. Tong and D. Koller. Support vector machine active learning with applications to text classification. JMLR, 2001.

[30] S. Vijayanarasimhan and K. Grauman. Multi-level active prediction of useful image annotations for recognition. NIPS, 2008.

[31] S. Vijayanarasimhan and K. Grauman. What's it going to cost you?: Predicting effort vs. informativeness for multi-label image annotations. CVPR, 2009.

[32] S. Vijayanarasimhan and K. Grauman. Large-scale live active learning: Training object detectors with crawled data and crowds. CVPR, 2011.

[33] S. Vijayanarasimhan and K. Grauman. Active frame selection for label propagation in videos. ECCV, 2012.

[34] S. Vijayanarasimhan, P. Jain, and K. Grauman. Far-sighted active learning on a budget for image and video recognition. CVPR, 2010.

[35] J. Wright, A. Yang, A. Ganesh, S. Sastry, and Y. Ma. Robust face recognition via sparse representation. IEEE Trans. PAMI, 2009.

[36] Z. Xu, R. Akella, and Y. Zhang. Incorporating diversity and density in active learning for relevance feedback. European Conference on Information Retrieval, 2007.

[37] R. Yan, J. Yang, and A. Hauptmann. Automatically labeling video data using multiclass active learning. ICCV, 2003.

[38] C. Zhang and T. Chen. An active learning framework for content-based information retrieval. IEEE Trans. on Multimedia, 2002.
