CA APM’s Analysis Workbench:

Agile Integrations and the Importance of Information Architecture

What Was Asked For

Automatic detection of unusual patterns in application performance metrics

What We Built

An integration with a third-party anomaly detection engine, and an “Analysis Workbench” that allowed

the user to search for and view relevant anomalies, as well as console notifications that informed the

user about recent anomalies and brought them to the Workbench for more information.

Why the Design was Especially Cool

The existing third-party product had no inherent semantics, which made the results very hard to

understand and to use. When designing our integration, I structured it around the semantics that we

had previously built into our product (as part of the Application Triage Map feature), thus making the

anomaly detection far more useful and allowing a tighter integration with our product.

Praise from the Critics

“Application Performance Monitoring (APM) 9.5… includes the embedded Prelert engine (with a slick CA

developed UI) for analytics” – Jonah Kowall, Gartner Blog Network (April 25 2013) [emphasis added]

“After a demo of this feature [Application Behavior Analytics], I was really impressed with how easy it is

to use and how quickly problems could be identified and diagnosed.” – Clabby Analytics Research Report,

May 2013 [emphasis added]

Patents Filed

Display and Analysis of Information Related to Monitored Elements of a Computer System –

Inventors: Laura G. Beck, Tim Smith; Filed: October 2013

Automatic Process for Identifying Possible Root Cause of Anomalous Activity in Applications –

Inventors: Laura G. Beck, Tim Smith; Filed: January 2014

- & -

Design Details

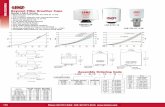

The Analysis Workbench was designed to report and visualize any anomalies detected in the

user’s monitored software. We designed for two primary flows – one where the end-users

were reporting strange or problematic behavior in the software, leading our user to come to

the Workbench to look for corresponding anomalies; and the other where the system detected

an anomaly and warned our user more actively.

In both cases, the user’s first goal is to determine whether a specific anomaly pertains to the

application the user is interested in (or someone else’s application), and whether it’s a real

performance problem or just random noise.

Basic Elements

Screen Placement and Dependencies:

A. “Analysis Workbench” tab.

Use:

o Allows the user to navigate to the Analysis Workbench to find and view any relevant

anomalies.

o Appears only if feature was installed and configured on the system. If the analytics engine is

not available or cannot be found, a banner message is displayed at the top of the page

(similar to one already in use in the product for server issues).

o Depends on global time controller; only functions in Historical mode. Page is masked and

message shown in-page when user navigates here in Live mode.

Notes:

o Placement of functionality within the product seemed appropriate since workflows would

include navigation to and from the Triage Map and Metric Browser.

o Product Management decided on the label – the intent was to associate it with “Analytics.”

o Decision to restrict availability to Historical mode resulted from a combination of need to

limit development scope and usability/design concerns. Live mode involves a sliding time

window (last 8 minutes) that updates every 15 seconds. Some of the issues that arose:

With each refresh of the table, the user’s view would have to be preserved. But what if

the anomaly being viewed now falls outside the time window? Do we add a dummy

item to the list, and drop it once the user changes selection? This adds lots of

complexity to the code and the behavior.

[Screenshot callouts: “Time Window Set to Live”; “Message over masked screen”]

Anomalies can take several minutes to be reported to the system, which is out of synch

with the 15-second updates and is likely to violate the user’s expectation of the

freshness of the data. User may be confused if new items are added with old

timestamps.

Primary Content Areas:

B. “Unusual Behaviors” list.

Use:

o Table displays all anomalies that a) overlap with the currently selected time window and b)

exceed the score threshold. (A default anomaly score threshold is set in the Analytics

properties file.) Results in this list may be further filtered by specifying a participating

component – more on that below.

o Anomalies are identified by Start Time, Latest Data, and Anomaly Score. Label reads “Score”

to reduce column width, since numbers are typically 2 digits, or at most 3.

o Selection in the table drives the content on the rest of the screen (see Note below).

o Table is sortable; default sort is by Score. (Current sort column should be indicated in

header – appears to be a bug here.)

Notes:

o The intent was to allow the user to change the anomaly score threshold on the fly, via the UI,

but that was not implemented in this release.

o Again, terminology was determined by Product Management.
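
The rule that populates the Unusual Behaviors list can be sketched in a few lines of Python. This is an illustration only, not the product code: the `Anomaly` class, the `unusual_behaviors` function, and the field names are hypothetical. It also assumes that "exceeds" means strictly greater than the threshold and that the default sort puts the highest score first; the text above does not settle either point.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Anomaly:
    start_time: datetime   # first unusual metric value seen
    latest_data: datetime  # last unusual metric value seen so far
    score: float           # anomaly score reported by the engine


def unusual_behaviors(anomalies, window_start, window_end, score_threshold):
    """Keep anomalies that overlap the time window and exceed the threshold."""
    visible = [
        a for a in anomalies
        # Two intervals overlap when each one starts before the other ends.
        if a.start_time <= window_end and a.latest_data >= window_start
        # Assumption: "exceeds" is a strict comparison.
        and a.score > score_threshold
    ]
    # Assumption: default sort by Score is descending.
    return sorted(visible, key=lambda a: a.score, reverse=True)
```

An anomaly that began before the window but is still producing data inside it is kept, which is why both Start Time and Latest Data are needed.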

C. “Participating Components” list.

Use:

o Populates when an anomaly is selected in Unusual Behaviors list (B). Displays components

found in selected anomaly, sorted alphabetically by name.

o Standard components are labeled to correspond with the Triage Map. Names for custom

components are derived more directly from the metric path.

o “Server Resources” components and items with a “Location” suffix represent data from

individual servers, rather than aggregates. These components may be followed by a number

in square brackets, e.g., “[2]”, indicating the number of different servers reporting the same

component as part of the anomaly.

o User can uncheck checkboxes to focus on individual components in the graph and table at

the right. Checkbox in table header provides “select all” and “clear all” actions.

Notes:

o “Components” are the elements in the system that are being monitored – Business

Transactions, Frontends, Calls to Backends, Server Resources, and any other type of

software or specific element the user is interested in. The characteristics being measured

for each component – response time, errors, etc. – are “metrics”. Although the analysis

engine consumes only metrics and reports anomalies accordingly, when an anomaly is

detected, the first thing the user needs to know is which components were behaving

strangely. The specific metrics involved are secondary. We therefore included the

Participating Components list to let the user review the components and decide which to

investigate further, rather than exposing them immediately to all the complexity of the

individual metrics.

o A set of regular expressions is used to send a predetermined (but not fixed) collection of

metrics – all derived from Triage Map components – to the analytics engine by default; this

made it possible for us to parse out the metric names into user-friendly component labels

resembling what is seen in the Triage Map UI. If these expressions are customized to send

additional metrics, we simply trim off the server identifier and the metric name to create

the component label. (The helpfulness of this label depends on the semantics exposed in

the metric path.)

o Feedback on the components list indicated that users needed more assistance in recalling

how the components worked together in the application, in particular, which component

depended on which. This information is available in the Triage Map – so I proposed adding

context menus to each component in the list. These menus would include a “select only this”

option, as well as navigation to the corresponding Triage Map or Metric Browser tree node.

This became a roadmap item, but was not implemented.
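
The fallback labeling for custom metrics (trim the server identifier and the metric name) and the bracketed server count can be sketched as follows. Everything here is an assumption made for illustration: the path layout `host|process|agent|component segments:metric name`, the three-segment server prefix, and the function names are hypothetical and not taken from the product. As a simplification, the sketch adds a count to any component reported by more than one server, whereas the text above limits it to per-server components.

```python
from collections import defaultdict

# Assumption: the first three path segments identify the reporting server.
SERVER_SEGMENTS = 3


def split_metric_path(metric_path):
    """Split a metric path into (server identifier, component label)."""
    resource, sep, _metric_name = metric_path.rpartition(":")
    if not sep:
        # No metric-name separator: treat the whole path as the resource.
        resource = metric_path
    segments = resource.split("|")
    server = "|".join(segments[:SERVER_SEGMENTS])
    component = "|".join(segments[SERVER_SEGMENTS:])
    return server, component


def participating_components(metric_paths):
    """Return component labels, sorted alphabetically, with server counts."""
    servers_by_component = defaultdict(set)
    for path in metric_paths:
        server, component = split_metric_path(path)
        servers_by_component[component].add(server)
    labels = []
    for component in sorted(servers_by_component, key=str.lower):
        count = len(servers_by_component[component])
        labels.append(f"{component} [{count}]" if count > 1 else component)
    return labels
```

With made-up paths, a component reported by two servers collapses into a single row such as `Frontends|Apps|Store [2]`, so the list stays short even when many metrics participate in the anomaly.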

D. Metric table.

Use:

o Populates based on checkbox selections in Participating Components list (C), listing metrics

found in the selected anomaly for those components, along with their “Deviation” and

“Association” scores (explanation below).

[Screenshot callouts: D, C, E. Caption: after “Show More” button is clicked]

o Table also serves as a legend for the graph (E). User can hover over individual metrics to

highlight the corresponding trend in the graph. User can also uncheck individual metrics to

remove them from the graph (see following screen).

o Scores in the table are provided by Prelert for each metric found in the anomaly. They

indicate how much the metric deviated from its normal behavior, and how closely

correlated the metric is with the anomaly as a whole. By default, the table is sorted by

Association.

o The “Show More” button adds optional host and container columns for each metric; this

information is also available in a tooltip when user hovers over the component name. The

button reads “Show Less” when the extra columns are showing.

o The blue arrow buttons at the left of each row allow the user to jump to the corresponding

metric in the Metric Browser tree. The trends shown there include the Y-axis magnitude

labels, and related metrics are available nearby for comparison and context.

Notes:

o The “Show More” button corresponds to a similar feature on other tables in WebView

(adding Max and Min columns); the original design would have allowed users to add and

remove columns via a checkbox menu in the table header, but this functionality was not

available for this particular table widget.

o The inclusion of the Deviation and Association scores was the PM’s idea. It was really an

experiment, as we did not know how meaningful these values would be. I objected on the

grounds that they would confuse the user, since they did not correspond to anything in CA

APM, but the PM felt they must be useful or Prelert would not include them. As I predicted,

we got a lot of questions about what they were and why they didn’t seem to correspond to

visible deviations.

o The blue arrow buttons are used in other tables throughout WebView, and in all cases they

bring the user to a corresponding location in the Metric Browser tree. These were included

in the thin client as a replacement for the double-click functionality provided in the original

thick-client product.

E. Metric graph.

Use:

o Populates based on checkbox selections in Metric table (D), displaying the corresponding

metric trends over the currently selected time window.

o The specific values for each metric are transformed so that each point represents a

percentage of the min-max range for that metric over that time window. This captures the

trend line of each metric without reference to the actual magnitude.

o The tooltip for each point provides the actual value for that time interval along with the full

metric path and a link to that metric in the Metric Browser.

o The trend line thickens when the corresponding legend row is hovered over, as above.

Notes:

o No values are depicted on the Y-axis, since users would be tempted to read them as the

actual metric value range.

o Time window labels are included at the top of the graph as is done throughout the product

when the time controller is set to Historical mode.

o Data point tooltips are also consistent with those found throughout the product in terms of

format and function.
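
The transformation applied to each trend line is a min-max rescaling over the selected time window. A minimal sketch, assuming a hypothetical function name and an arbitrary choice to draw a flat metric at mid-height (the text above does not say how a constant metric is handled):

```python
def to_percent_of_range(values):
    """Rescale values so each point is a percentage of the min-max range."""
    if not values:
        return []
    lowest, highest = min(values), max(values)
    span = highest - lowest
    if span == 0:
        # Assumption: a constant metric is drawn as a flat line at 50%.
        return [50.0 for _ in values]
    return [100.0 * (v - lowest) / span for v in values]
```

For example, response times of 200, 250 and 400 ms become 0%, 25% and 100%. A metric measured in milliseconds and one measured in errors per interval can then share a graph, which is also why the Y-axis carries no values.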

A Note on the Flow of Control:

CA APM generally follows the pattern of left-side “Master”, right-side “Detail”. The user typically selects

a node from a tree at the left, and corresponding data views or editors appear in the larger area at the

right. The right-side content is often arranged in a vertically-stacked series of collapsible panes. When

there is a single large graph at the right, it usually includes a legend table with metric names, associated

values, and checkboxes that allow the user to reduce the number of metrics shown in the graph.

The usual Master-Detail pattern described above was modified slightly in the Analysis Workbench. With

the creation of the “Participating Components” concept, we now had two different “Detail” views for

the selected anomaly – a higher-level components list, and a lower-level metric graph with legend. It

seemed valuable to allow the user to filter the metric graph by component, so the one view needed to

drive the other. That left us with two options: the components list could appear above the metric graph

at the right, or it could appear below the anomaly list at the left.

Placing it at the right probably would have made the relationship between the components list and the

metric details a little clearer, but it would have also made the Details area very busy – while reducing

the space available for the all-important metric display. Moreover, two tables with checkboxes on the

same side of the page, one above and one below the metric graph, would have looked peculiar and

likely confused users. Finally, the flow of control would have been extremely strange, with the initial

left-to-right flow (anomaly to details) followed by top-to-bottom (components to metric displays),

followed by bottom-to-top (legend to graph).

Placing it at the left worked better, since it led to a smooth counter-clockwise flow of control.

Furthermore, since the components list offers a high-level view of the anomaly’s contents, we expected

users to scan it first, to check the anomaly for relevance before jumping into the details – and this is

much easier to do when the components list is closer to the anomaly list and farther from the distracting

metric graph. As for users’ ability to make the association between the components’ checkboxes and

the metrics at the right – since the two tables were essentially side-by-side, the user could see the effect

of changing the selections quite easily. (The corner of the eye is very sensitive to movement.)

Other, smaller considerations played a role in the decision as well: The components list did not require

much width, so it fit nicely below the anomaly list. Shortening the anomaly list was a concern, but the

table would have to be paged anyway, and we concluded that users were unlikely to need to scan more

than 10 anomalies at a time. And so on…

The result was the following counter-clockwise flow: Selection in the Unusual Behaviors list (B) at top

left initially drives the contents of both the Participating Components list (C) at the bottom left and the

Metric Table (D) and Metric Graph (E) that appear at the right. However, the checkboxes in the

Participating Components list also modify the contents of the two Metric displays at the right, and of

course, the checkboxes in the Metric Table further refine the contents of the graph, above.
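
The cascade described above amounts to two successive filters over the metrics of the selected anomaly. The sketch below is illustrative only; the dictionary keys and function names are hypothetical.

```python
def metric_table_rows(anomaly_metrics, checked_components):
    """Metric Table (D): metrics whose component is checked in list (C)."""
    return [m for m in anomaly_metrics if m["component"] in checked_components]


def graphed_metrics(table_rows, unchecked_metric_paths):
    """Metric Graph (E): table rows the user has not unchecked in (D)."""
    return [m for m in table_rows if m["path"] not in unchecked_metric_paths]
```

Selecting a different anomaly in (B) replaces `anomaly_metrics` and resets both filters, so each step only ever narrows what the previous step produced.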

Secondary Features:

Component Search:

Our scenario proposed that users would be looking for anomalies that occurred within their applications

during a particular period of time. It therefore made sense to provide some means of searching the

anomalies for metrics from a specific application. Unfortunately, CA APM does not have a strong

concept of application – and constructing a query based on multiple, related components proved

challenging. So, as a first step, we provided search based on individual components, including Business

Transactions. If end-users were complaining that logging in was taking too long, our user could search

for anomalies that included client and server metrics from the Login transaction. Similarly, if multiple

transactions involving authentication were reported as behaving badly, our user could search for

anomalies that involved the AuthenticationEngine component. Going forward, searching by component

would also allow us to provide useful integrations with the Triage Map.

F. Component Search controls.

Use:

o The first dropdown allows the user to select the type of component to search for. Default is

“(any component)” – i.e., no filtering. “Matching Component” offers a partial string search;

it was provided for users with custom components, but may also be used to find standard

components based on their metric path rather than their computed component name.

o Selecting “Frontend or Backend Call” from the first dropdown populates the second

dropdown with the names of all the Frontends currently known to the system (not just the

ones found in the list of anomalies). The default text in the second dropdown prompts the

user to “(Choose Frontend).” Once a Frontend is selected, the third dropdown populates

with all the Backend Calls corresponding to this Frontend. The prompt is “(Optional

Backend Call)”, because the user may wish to search for the Frontend itself, not one of the

backends. Note that the “Go” button is enabled here; the user can simply press enter at this

point to issue the search.

o Selecting “Business Transaction” in the first dropdown (not depicted above) similarly causes

the second dropdown to populate with the names of all the Business Services that have

been defined in the system; the default text reads “(Choose Business Service).” Once a

Business Service has been selected, the third dropdown populates with all the

corresponding Business Transactions, and the text prompts the user to “(Choose Business

Transaction)”. In this case, the selection is mandatory, so the “Go” button does not enable

until an item is selected from the third dropdown.

o Selecting “Matching Component” in the first dropdown causes the two other dropdowns to

be replaced by a free text field, with the prompt “(Enter Matching Component)”. The “Go”

button is disabled until text has been entered into the field.

o The “Go” button submits the search and returns all Unusual Behaviors overlapping the

current time window that contain metrics from the selected component (or any component

matching the entered text). In other words, the search filters the contents of the Unusual

Behaviors list (B). The label above the list changes to indicate that the filtering has been

applied.

Notes:

o As noted above, the dropdowns offer all components currently known to the system, not

just those found in the current set of anomalies – thus, the term “Search” is used, rather

than “Filter.” If the user is looking for anomalies involving a particular component (such as a

Business Transaction), s/he may well change the time window in an attempt to find them.

The flow would be greatly complicated – and completely unintuitive – if the component of

interest disappeared and reappeared from the dropdown as the time window was changed

(not to mention the technical cost of computing the available list of components each time a

new set of anomalies was returned).

o The placement of the Search controls was a compromise. Given that the search affects the

Unusual Behaviors list, one would expect the controls to appear directly above that list.

However, since the controls are much wider than the list, this wouldn’t actually work

visually. Moreover, the search is an optional task, so putting it front and center – making it

the first thing the user sees as s/he enters the page – is problematic. In addition, creating a

separate toolbar for it on the page would push the “Unusual Behaviors” label onto its own

line, wasting valuable vertical space on the page. The current placement was seen as still

being above the Unusual Behaviors list, as it should be, and linked to it by the “Unusual

Behaviors” label; reading the panel from left-to-right suggests to the user that it is the

Unusual Behaviors that may be “searched.”
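
The rules for enabling the “Go” button can be summarized in a small state check. This is a sketch under stated assumptions: the `SearchState` class and `go_enabled` function are hypothetical, whitespace-only text is assumed not to count as entered text, and “Go” is assumed to stay enabled for “(any component)”, which the description above does not specify.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchState:
    component_type: str = "(any component)"  # first dropdown
    second: Optional[str] = None  # Frontend or Business Service
    third: Optional[str] = None   # Backend Call or Business Transaction
    text: str = ""                # free text for "Matching Component"


def go_enabled(state):
    """Decide whether the Go button should be enabled."""
    if state.component_type == "Frontend or Backend Call":
        # The Backend Call is optional, so a Frontend alone is enough.
        return state.second is not None
    if state.component_type == "Business Transaction":
        # The Business Transaction itself is mandatory.
        return state.second is not None and state.third is not None
    if state.component_type == "Matching Component":
        # Assumption: whitespace-only input does not count as text.
        return bool(state.text.strip())
    # Assumption: "(any component)" applies no filter and can be submitted.
    return True
```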

Notifications:

G. Recent Notifications.

Use:

o When an Unusual Behavior is detected that exceeds a pre-set “notification threshold,” it is

reported in the Recent Notifications list. A special icon is used to distinguish it from the

status notifications that also may appear there. The text reads “Unusual Behavior” along

with the Start Time and the Anomaly Score. Only the most recent five notifications are

shown here (and that number includes status notifications as well).

o Hovering over the notification brings up a tooltip with the first 5 components – those with

the highest Association scores – and a label indicating how many other components were

found in the anomaly, if any.

o Notification is linked to bring user to the Analysis Workbench with the specific anomaly

selected. To help ensure that the anomaly is available and easily viewed, the link sets the

time controller to a custom range corresponding with the anomaly period, with a one

minute buffer on either side. A confirmation message is displayed to alert the user to this

time change; the user may choose to decline and manually navigate to the Workbench

instead.

Notes:

o Given the small amount of space available for the Recent Notifications, we decided Start

Time and Anomaly Score were the most useful for identifying an anomaly, particularly if we

could display some component information in the tooltip to help the user assess its

relevance to their application(s). The Anomaly Score of course gives some indication of how

unusual the behavior was; Start Time was essential, since the notifications came in 3 to 5

minutes after the behavior began (compared to 15-30 seconds for a status alert).

o The icon was used because the existing notifications were all status notifications and

therefore included an alert status icon; the table of notifications had two columns. In

addition, our roadmap included the use of icons in the Triage Map to indicate that there

were anomalies associated with this context or this particular component, so it was

important to familiarize the user with the meaning of the icon.

o The decision to set the time window on the link was somewhat controversial, since this

“automatic” changing of the time had never been done before in the product. It was

necessary, however, because the Analysis Workbench could not be used in Live mode (as

mentioned above), and the Home page is typically viewed Live. Also, the anomalies were

being reported more-or-less as they came in, so if the user were in Historical mode, the

newest anomalies might well not overlap the currently viewed time window. My original

design had more complex logic about when and how to change the time, but in the end it

was decided that a consistent approach of using the anomaly time period with a one minute

buffer on each side – to make the change in behavior more visible – was the best way to go,

provided we informed the user that we were making this change and allowed them to

decline it (because they might not want the disruption, if they were busy with another

problem).
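
Two pieces of the notification behavior lend themselves to a short sketch: the tooltip that lists the top five components, and the time range set by the link. The function names and the wording of the "more" label are hypothetical. The sketch also assumes that each component already has a single Association score (Prelert reports scores per metric, and the way they roll up to a component is not described here) and that the anomaly period runs from Start Time to Latest Data.

```python
from datetime import datetime, timedelta


def tooltip_lines(association_by_component, limit=5):
    """List the highest-scoring components, then a count of the rest."""
    ranked = sorted(
        association_by_component.items(),
        key=lambda item: item[1],
        reverse=True,
    )
    lines = [name for name, _score in ranked[:limit]]
    remaining = len(ranked) - limit
    if remaining > 0:
        lines.append(f"(+{remaining} more)")  # label wording is made up
    return lines


def link_time_range(start_time, latest_data, buffer=timedelta(minutes=1)):
    """Custom time range for the link: anomaly period plus a buffer."""
    return start_time - buffer, latest_data + buffer
```

Applying the same buffer on both sides keeps the behavior predictable: the selected anomaly always sits inside the window, with a minute of context on either side.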

H. Notifications Pane.

Use:

o During a user session, a Notifications log is maintained in a floating, non-modal pane

accessible via a count link on the top-level toolbar. (The count increments to inform the

user that new items have come in.) These notifications are not linked, but may be used for

clarification and review of the items seen in the Recent Notifications pane.

Notes:

o Like the Recent Notifications pane, this pane already existed before the Analysis Workbench

feature was added to the product. We decided to include the Unusual Behavior

notifications here for consistency – the user would expect that all “Recent Notifications”

would also appear in the upper pane. Moreover, the longer history was useful, and the

extra space allowed for more descriptive labels.

o The fact that these notifications come in 3 to 5 minutes “late” was a serious problem in this

context, since the users are accustomed to the status notifications being listed in strict time

order. In fact, in these notifications, the timestamp is displayed prominently – it is the first

element shown, and it occupies its own line. When we tried to copy this format for the

Unusual Behavior notifications, we realized this would lead to tremendous confusion, as the

timestamps would appear significantly out of order. So we instead incorporated the

timestamp into the first line of the notification, making sure that the first words on the line

were “Unusual Behavior.” We also used the special icon to further distinguish the types of

notifications (all status notifications use a yellow triangle icon).

- & -

Backstory

I had been working for some months on forward-looking designs for improving our product, when I was

asked by one of the Product Managers to help him with a new set of features he was calling “Analytics”.

These were very similar to my own proposals around automatic problem detection and analysis – in fact,

he acknowledged that many of his ideas originated in my work – and he thought that incorporating

some of my designs would help him “sell” the features and get feedback from the field.

He sent me a document describing the features, and I responded with feedback about the need to

distinguish more clearly between management and “usage” tasks. I also proposed a new feature – an

“event feed” to actively alert users when unusual or troubling patterns are detected.

The following week the PM came to town and we brainstormed together, sketching out how the new

“Analytics Workspace” he was proposing might fit into the existing product and my own proposals for a

“problem-based” UI:

Afterwards, I went back to his document and took notes on the terminology and the implications, briefly

looking up how our competitors handled similar concerns. I also jotted down thoughts on the primary

functionality and flows:

I then converted my whiteboard flows into Visio sketches:

I proposed four possible starting points for the flows, all involving an alert or status indicator. These

starting points covered two user roles – the “monitoring” user and the “triaging” user. For Monitors,

the starting point might be a mobile alerts dashboard, an email notification, or a detection event that

was reported on the home page or an application dashboard. I assumed all of these triggers would be

associated with applications or business transactions, since these are the objects that Monitors are

typically watching. For Triagers, the starting point would likely be a detection event reported on the

Application Triage Map – at the business transaction or software component levels.

I went into Balsamiq to flesh out a few of the more interesting screens, using some design patterns I’d

been kicking around for a while. While the PM was reviewing those, I started sketching out the

management piece as well.

Eventually it became clear that our first priority was going to be what the PM called “unusual activity”

detection rather than the “disturbing patterns” that I had been focusing on, simply because it was easier

to do. We could partner with a company that already offered anomaly detection and incorporate their

engine into our product. But at this point I still had no idea how the engine would actually work, so I

made my best guess and designed one of the flows – with all the bells and whistles. (Note: I generally

use Balsamiq images placed on slides for my functional design sketches, since they are easy to share and

don’t look like official “specs” – while still allowing room for some explanation and notes.)

Sample slides from an early Pattern Workspace deck, showing parts of an Unusual Activity flow.

Key points that came out of these early designs were the importance of knowing which business

transactions and software components were showing unusual activity, when the unusual activity

occurred, and just how unusual the activity was. Unusual patterns occur all the time, and most are just

random noise. Our users don’t want to be alerted unless the information is both useful and relevant: is

the activity occurring in their own application or someone else’s? Is it happening now, does it correlate

with what the customers are experiencing? Does it indicate where to start looking for the cause?

When at last we acquired our anomaly detection engine – by partnering with Prelert – I was finally able

to get a more realistic understanding of how the detection worked. There was an “anomaly score” that

could be used to determine whether or not to issue notifications. But metrics were not processed by

application, or business transaction, or component. Every single metric sent to the Prelert engine was

combined in a single giant analysis. As a result, a given anomaly could consist of hundreds of metrics,

and they didn’t even have to be logically related, they just had to correlate somehow. This meant –

among other things – that there was no way to apply a useful label to an anomaly, to let the user know

what the anomaly was about.

Here’s what the Prelert UI looked like at the time – anomalies are represented by dots along a timeline,

with the size and color indicating the degree of anomaly. When a dot is selected, the details are shown

below. Note that the data legend is grouped by source and is not otherwise particularly informative:

To make the anomalies useful to our users, we needed to be able to associate them with the

applications being monitored – or at the very least, with the specific components that make up those

applications. That meant, first and foremost, that the metrics being analyzed had to come from known

components. I proposed a set of default metrics to be sent to the anomaly-detection engine – just those

metrics available from our Application Triage Map. The Triage Map was designed to help Triagers

visualize their business transactions, frontends, backend calls, and supporting resources. If these

components’ metrics were the ones appearing in the anomalies, we could present the anomalous

metrics in the UI with their component labels. This would help the user derive meaning and value from

the anomalies, and it would greatly enhance the integration of the anomalies into the Triage Map and

other portions of the existing UI.

The Participating Components then became the primary elements of each anomaly; the individual

metrics were secondary. A component name could be used to filter the list of anomalies; components

from the same application could be highlighted; users could filter their metric displays by component –

and more:

The use of components could also make the notifications more meaningful – users could hover over the

alert to see if the problem was in their application or someone else’s and if so, where:

At this point, we were ready to begin to develop the UI for this feature, and since we were using an Agile

process, this meant dividing the designs into smaller features that could be prioritized and sized. We

had a total of three months’ development time before we had to demo our new “Analytics” feature to

customers at our big annual event in Las Vegas, CA World, and it was important that we make a big

splash. So at the PM’s request, I created four sets of storyboards that captured various more-or-less

realistic options for scoping the feature. I started with the “optimal” design I’d been proposing, and

then scaled it all the way back to a “minimally useful” set of features. Then I came up with two options

that fell somewhere in between those extremes, and sent this slide deck to the scrum. They were able

to use this to define their stories – and even begin development.

Of course, I hadn’t intended them to use these wireframes as design specifications – they were written

only to capture the proposed functionality and flows. So I quickly created a “mini-spec” for their first UI

story and later, one for each sprint. I also created small docs when a particularly tricky problem arose

and I wanted to record the decisions.

Sample specification screens, from first UI Story spec and later Notifications sprint, respectively.

The problem of time came up at several points in this project – for instance, we discovered that Prelert

reported anomalies approximately three minutes after they began, which was a problem for

notifications. The CA APM product reports most performance problems within 15 seconds of their

occurrence, so we needed a way to set the user’s expectations about the “freshness” of their data. We

solved this by including the start time in the notification, as shown above.

Time was also an issue for the Analysis Workbench. We discovered rather belatedly that Prelert only

reported a single timestamp for each anomaly, based on the point of maximum deviation. All my

designs had assumed we would have a start time and an end time for each anomaly, so that we could

return anything that overlapped the selected time period. Triaging software problems depends critically

on knowing when the problem began, so it was crucial to have a start time – and how could the user

visualize the anomaly if we couldn’t graph it with a proper end time? Fortunately, we were able to

convince Prelert to modify their engine to send us additional information about each anomaly – the

timestamp for the first unusual metric value seen, and the timestamp for the last unusual metric value

seen. (The latter was not technically the “end time,” since more data could still come in, so we ended

up calling it “Latest Data” instead.)
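The overlap rule described above can be sketched roughly as follows. This is only an illustration in Python, not the code that shipped: the Anomaly record, its field names, and the helper functions are all hypothetical, and the only assumption taken from the text is that each anomaly carries a first-unusual timestamp, a "Latest Data" timestamp, and the original point of maximum deviation.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Anomaly:
    # Hypothetical record; field names are illustrative, not Prelert's or CA APM's.
    component: str
    first_unusual: datetime  # timestamp of the first unusual metric value seen
    latest_data: datetime    # timestamp of the last unusual metric value seen so far
    peak: datetime           # point of maximum deviation (the original single timestamp)


def overlaps(anomaly, window_start, window_end):
    """True if the anomaly's [first_unusual, latest_data] span touches the window."""
    return anomaly.first_unusual <= window_end and anomaly.latest_data >= window_start


def anomalies_in_window(anomalies, window_start, window_end):
    """Return every anomaly overlapping the selected period, earliest start first."""
    hits = [a for a in anomalies if overlaps(a, window_start, window_end)]
    return sorted(hits, key=lambda a: a.first_unusual)
```

With only the peak timestamp, an anomaly that peaked shortly before the selected period but was still producing unusual values inside it would be left out of the results; comparing the two endpoints against the window is what lets it be returned.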

Related Features

Application Triage Map

Business Transaction Map with Locations pane and Alert Details pane showing.

Frontend Map with Locations pane showing; Alert Details closed.

What Was Asked For

Out-of-the-box monitoring and first-level triage functionality for a less-sophisticated Triager persona.

What We Built

A system for detecting and displaying the calling relationships between subsystems, in the context of a

user-configured Business Transaction (BT) or an automatically discovered Frontend component,

displayed in a brand new, fully-featured UI. The UI consisted of a tree view with active status

monitoring; a set of dynamically generated dependency diagrams with on-demand detail panes; and

metric trend displays with relevant thresholds – along with links to additional, more detailed

information.

Why the Design was Especially Cool

This feature was the first piece of out-of-the-box UI created specifically for a critical primary

persona – the first-level Triager. This was a much less technically knowledgeable user than our

product was originally designed for, so this new UI had to bridge the gap between what the

product offered under the covers and what the user really needed to see. The solution

involved new information architecture, on-demand detail panes to reduce complexity and allow

for progressive disclosure, and appropriate menus and links to direct the user’s workflow.

Rather than flood the user with hundreds of metrics from the individual servers in a cluster, we

summarized the data into the relevant subsystems – with status and dependency information –

and then provided a drill-down for viewing the per-server metrics and status for a selected

subsystem. From this “Locations” table, the user could jump to more information about a

specific server. (Note: this feature was initially designed as part of the Java Swing thick-client

and later ported – with minor modifications – to the new thin-client UI shown above.)
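The roll-up from per-server data to subsystem summaries, with a drill-down back to the servers, might be sketched like this. It is a minimal Python illustration under stated assumptions: the status names, the "worst status wins" rule, and the function name are hypothetical, and the text above does not say how the product actually combined statuses.

```python
from collections import defaultdict

# Hypothetical status levels, ordered from best to worst.
SEVERITY = {"normal": 0, "caution": 1, "danger": 2}


def summarize_by_subsystem(server_rows):
    """Roll per-server rows up to one status per subsystem.

    server_rows: iterable of (subsystem, server, status) tuples.
    Returns (summary, locations):
      summary   maps subsystem -> worst status seen on any of its servers
                (an assumed rule, chosen only for illustration)
      locations maps subsystem -> list of (server, status) rows for drill-down
    """
    summary = {}
    locations = defaultdict(list)
    for subsystem, server, status in server_rows:
        locations[subsystem].append((server, status))
        worst_so_far = summary.get(subsystem, status)
        summary[subsystem] = max(worst_so_far, status, key=SEVERITY.__getitem__)
    return summary, dict(locations)
```

In this sketch the summary dictionary plays the role of the subsystem-level view, while the locations dictionary holds the per-server rows that a "Locations" table would show for a selected subsystem.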

Patents Granted (for roadmap items)

US 8,438,427 B2 Visualizing relationships between a transaction trace graph and a map of

logical subsystems – Inventors: Laura G. Beck, Natalya E. Litt, Nathan A. Isley

US 8,516,301 B2 Visualizing transaction traces as flows through a map of logical subsystems –

Inventors: Laura G. Beck, Natalya E. Litt, Nathan A. Isley

WebView Homepage

Partially configured WebView Homepage with two Frontends showing critical statuses.

What Was Asked For

A landing page for the new thin-client UI that would serve as an out-of-the-box dashboard for all users

to monitor their applications, with links to the appropriate next steps – including setup tasks.

What We Built

A page that listed all the Business Transactions and Frontends found in the system, along with essential

performance metrics (Response Time and Errors) – and status information, if available. If an alert was

not defined for an element, the UI showed an “edit” icon to prompt the user to set one up; this could be

done from a dialog without leaving the page. Summary backend and resource status information across

all the user’s applications was displayed as well, in a box labeled “Risks.” A summary view of all the

alerts configured in the system was also included, along with a small feed of recent notifications; in

addition, a count at the top of the page indicated how many notifications were available for viewing in

the Notifications Pane. Every item on the page offered a link to additional information.

Why the Design was Especially Cool

This screen was originally designed to serve two purposes – 1) to offer meaningful data and

flows to our users, instantly, upon install; and 2) to help guide users toward the setup and

management tasks that would make the product even more useful. Although we weren’t able

to implement all the “guides” that we had planned (e.g., offering “next steps” text with links

when no Business Transactions were found), the edit icons and the associated dialogs alone

streamlined the setup dramatically. Links on the “Risk” and other alerts also led users to the

relevant editors, while links on the Business Transaction and Frontend names brought users to

the corresponding Triage Maps, advertising this important tool.
