3D Facial Reconstruction Tissue Depth Measurement From MRI Scans


3D Facial Reconstruction

Tissue depth measurement from MRI scans

Gilberto Echeverria

University of Sheffield

Supervisor: Dr. Steve Maddock

This report is submitted in partial fulfilment of the requirement for the degree of MSc in Advanced Computer Science

10/11/2003

Declaration

All sentences or passages quoted in this dissertation from other people's work have been specifically acknowledged by clear cross-referencing to author, work and page(s). Any illustrations, which are not the work of the author of this dissertation, have been used with the explicit permission of the originator and are specifically acknowledged. I understand that failure to do this amounts to plagiarism and will be considered grounds for failure in this dissertation and the degree examination as a whole.

Name:

Signature:

Date:

Abstract

Facial reconstruction is a technique used in forensic science to help identify the remains of a person from the skull. A recreation of the individual's face is made using information about the thickness of the tissue at several key landmark points. The data currently available for these points is scarce: there are only a limited number of landmark points, and the measurements themselves are not completely reliable. To address this problem, Magnetic Resonance Imaging (MRI) scans are analysed to obtain updated tissue depth data. Information gathered this way could provide more precise measurements, as well as a large source of new samples.

The aim of the current project is to provide a method for updating the database of landmark points. This is achieved by using computational methods to filter the information gathered from the MRI data, and by providing a usable interface that specialists can employ to take new measurements of the skin thickness. The information gathered in this way can later be applied in the reconstruction process used to identify corpses.

The results obtained show that it is possible to obtain accurate measurements of the tissue depths over the face. The project uses several image processing techniques to progressively extract only the useful information from the input data, and computer graphics to produce a usable display of the extracted data.

Acknowledgements

I would like to thank Steph Davy from the Unit of Forensic Anthropology for all her help and support.

Very special thanks to James Edge from the Computer Graphics Research Group at the University of Sheffield, who provided a lot of help and ideas for the development of the program. He also provided some sample code and DICOM images.

For the development of the program, some pieces of code were based on previous programs: Stephen M. Smith produced the SUSAN set of algorithms used for the image processing part of this project [Smith1995]. The majority of the OpenGL code was based on the samples by Christophe Devine [Devine2003]. The algorithm for ray/triangle intersection is credited to Tomas Möller and Ben Trumbore [Möller1997]. The algorithm used for computing a normal to a plane was taken from the book OpenGL Super Bible, by Richard Wright and Michael Sweet [Wright2000].

The sample data used for testing was obtained from the Siggraph Education Committee webpage [WWW8] and a sample provided by Dr Iain D Wilkinson and Charles Romanowski from Academic Radiology at the University of Sheffield.

Contents

Declaration
Abstract
Acknowledgements
Contents
Index of figures
Index of tables
1 Introduction
  1.1 Facial Reconstruction
  1.2 Aims of the project
  1.3 Chapter Summary
2 Literature Survey
  2.1 Traditional Reconstruction Technique
  2.2 Landmark Points
  2.3 Previous Research on the Topic
  2.4 Technology Evaluation
    2.4.1 Magnetic Resonance Imaging (MRI)
    2.4.2 Image Processing
    2.4.3 3D Computer Graphics for Face Rendering
  2.5 Summary
3 Requirements and analysis
  3.1 System Requirements
  3.2 Analysis of the Technologies to Use
    3.2.1 Format of source data
    3.2.2 Methods for analysing the image information
    3.2.3 Programming language to use
  3.3 Testing and Evaluation
4 Design
  4.1 Data input
  4.2 Edge Detection
  4.3 Vertex Identification
  4.4 3D Model generation
  4.5 User Interface
5 Implementation
  5.1 Data acquisition
  5.2 Edge Detection
    5.2.1 PGM image files
    5.2.2 SUSAN algorithms
    5.2.3 Testing of the SUSAN algorithm
    5.2.4 Evaluation of other techniques
  5.3 Extraction of vertices
  5.4 Model generation
    5.4.1 Special Effects
    5.4.2 Computation of normal vectors
    5.4.3 Display lists
  5.5 User interface
    5.5.1 Landmark point selection
    5.5.2 Distance measurement
  5.6 Configuration file
6 Results
  6.1 Evaluation of image processing methods
  6.2 Information extraction
  6.3 Rendering
  6.4 Distance Measuring
  6.5 Speed Improvements
  6.6 Further work
    6.6.1 Interface
    6.6.2 Data filtering
    6.6.3 Reconstruction of new faces
    6.6.4 Alternate display of tissue depth measurements
7 Conclusions
  7.1 Application of computer science to reconstruction
  7.2 User interface
  7.3 Image processing
References
Progress history

Index of figures

Figure 2-1 Frontal view of a skull during a reconstruction [Source: WWW3]
Figure 2-2 Lateral view of a reconstruction [Source: WWW3]
Figure 2-3 Location of the landmark points [Source: Cairns2000]
Figure 2-4 Sample MRI image of the head. [Source: Evison2000]
Figure 4-1 General system design
Figure 5-1 Extraction of slice data to be processed
Figure 5-2 Image processing using SUSAN
Figure 5-3 Squares base image
Figure 5-4 Squares sample image
Figure 5-5 Diamonds base image
Figure 5-6 Diamonds sample image
Figure 5-7 Stylised version of the human head
Figure 5-8 MRI slice and its associated histogram
Figure 5-9 Indices of the edges in the array
Figure 5-10 Vertices taken every 10 lines in the image
Figure 5-11 Sequence of the stored vertices
Figure 5-12 Data structures for storing the vertices of the image slices
Figure 5-13 Triangle strip formed with a list of points
Figure 5-14 Diagram of two contiguous slices having different list sizes
Figure 5-15 Calculation of a normal vector [Source: Wright2000]
Figure 5-16 Order of vertices for triangles used for picking
Figure 6-1 3D head generated using a sample of 109 slices
Figure 6-2 Side view of the measuring vector
Figure 6-3 Measuring on the diamonds sample
Figure 6-4 Measuring of the distance between borders (squares sample)
Figure 6-5 Measuring of the distance between borders (diamonds sample)

Index of tables

Table 2-1 Table of measurements for tissue depth. [Source: Cairns2000]
Table 5-1 Sample test images with varying levels of noise
Table 6-1 Different pixels. Testing with the square sample
Table 6-2 False positive edges
Table 6-3 False negative edges
Table 6-4 Edge detection on noisy data. X-axis = noise factor, Y-axis = brightness threshold
Table 6-5 Images obtained after each stage of the processing

1 Introduction

1.1 Facial Reconstruction

In criminal forensics, identifying the corpses of crime victims is often a challenging task. Several methods are commonly used for this: fingerprints, dental and medical records, etc. But in some cases even this kind of evidence is not present or not useful. Sometimes the only way to identify someone under these circumstances is to have someone visually recognize the dead person. But even this is not easy. The face of the victim may not be in good condition, may be severely altered, or the passing of time may have destroyed any traces of the face covering the bone.

Facial Reconstruction is used in such cases. This technique can produce a representation of how an individual's face might have looked when alive, based on the features of the victim's skull and on a set of measurements of the tissue depth at certain landmarks on the face. Because it is not very reliable, this method is mostly used when every other attempt has failed. It has an average success rate of 50% when used for identification purposes [Prag1997]. The face obtained will most likely not be exactly the same as that of the person to whom the skull belonged, but it may be similar enough for someone to recognize the reconstruction and provide further information to solve the case.

For this technique to work effectively, the reconstruction must be seen by the right people: someone who knew the subject and is willing to cooperate with the investigation. This is not always the case, since someone connected to the events may not want to make themselves known to the authorities, or the victim may have had few acquaintances.

Facial Reconstruction has also been used to reproduce the faces of ancient human remains, mostly mummies. In these cases, the reconstruction is made only to create an accurate image of the person, rather than to make a match with some known individual.

Every piece of evidence can affect the way the face is recreated. Remains of clothing can hint at the body build of the subject; remains of hair can be used to later add a similar hairstyle to the reconstruction; any other information that can be found should be taken into account, such as medical conditions that the subject may have had and that are evident from the remains or from other evidence. In the case of historical reconstructions, any data obtained from the references associated with the subject can be useful. All of this information should be considered and integrated into the process of recreating the face of the victim.

1.2 Aims of the project

Because of the limitations of the current methods employed to obtain tissue depth measurements, it is necessary to find alternative ways of updating the information available. The new techniques must be able to extract the desired information with greater precision, make it easier to get the data from a large number of samples, and preferably obtain the data from live individuals without being intrusive to the subjects.

The performance of computer technology has improved greatly in recent years, making it possible to apply it to forensic science to obtain better results in a shorter time. A computer can analyse information in just a fraction of the time it would take a person to perform the same task. Depth information can be gathered from the MRI scans in just a few minutes, allowing a reliable database to be built in a short time. The information gathered can be filtered using automatic methods to extract only the data required, and then modelled into a usable format using Computer Graphics (CG), giving forensic scientists an accessible and reliable method for measuring the thickness of the facial tissue.

The aim of the current project is to produce a program capable of taking tissue depth measurements from MRI scans. The system must be able to open files containing MRI data, automatically analyse the slice images, extract the relevant information about the locations of the skin and bone, and then create a 3D representation of both layers. This representation can be used to locate the feature points and then measure the distance between the layers at the selected point. The program requires a human user to manually find the location of the landmark points of interest in the 3D model.
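The measurement step described above, taking the distance between the skin and bone layers at a selected point, can be illustrated with a small sketch. It assumes both layers are triangle meshes and that the measurement is taken along a ray through the selected point; it uses the ray/triangle intersection test of Möller and Trumbore credited in the acknowledgements. The function names, the single-triangle inputs and the sample coordinates are hypothetical and are not taken from the program itself.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalise(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("direction must not be the zero vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def ray_triangle_distance(origin: Vec3, direction: Vec3,
                          triangle: Triangle,
                          eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test. Returns the distance along a unit-length
    direction to the hit point, or None if the ray misses."""
    v0, v1, v2 = triangle
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:          # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t >= 0.0 else None


def tissue_depth(origin: Vec3, direction: Vec3,
                 skin: Triangle, bone: Triangle) -> Optional[float]:
    """Distance between the skin hit and the bone hit along one ray
    (hypothetical helper; one triangle per layer for brevity)."""
    unit = normalise(direction)
    t_skin = ray_triangle_distance(origin, unit, skin)
    t_bone = ray_triangle_distance(origin, unit, bone)
    if t_skin is None or t_bone is None:
        return None
    return abs(t_bone - t_skin)


# Hypothetical data: skin triangle at z = 0, bone triangle at z = -5.
skin_tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
bone_tri = ((-1.0, -1.0, -5.0), (1.0, -1.0, -5.0), (0.0, 1.0, -5.0))
depth = tissue_depth((0.0, 0.0, 10.0), (0.0, 0.0, -1.0), skin_tri, bone_tri)
```

In a full model the ray would be tested against every triangle of each layer and the nearest hit kept; the single-triangle version only shows the geometry of the measurement.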

1.3 Chapter Summary

Chapter 2 is a review of information related to the project. It begins with a description of the traditional method for facial reconstruction and the set of landmark points most commonly used. Some other projects that have used different technologies to improve on facial reconstruction are then presented. The last section is a review of the technologies to be used in this Thesis.

Chapter 3 presents the requirements set for the project by the external client, and evaluates the techniques researched to identify the best options for the project. It also describes the procedures that will be used to test the correctness of the results obtained.

Chapter 4 shows the design decisions taken for the development of the project, presenting some of the techniques to be used and how they will interact within the program.

Chapter 5 is a detailed description of the whole process of creating the working system. It describes the algorithms employed, the function of each of the processing stages, and some of the problems that appeared during development and how they were solved.

Chapter 6 presents the results obtained from testing some of the algorithms, and describes what the final program could achieve. The future work arising from the current Thesis is also found in this chapter.

Chapter 7 presents the conclusions of the project: how the research done can be used to improve the reconstruction of faces, and what problems remain to be solved.


2 Literature Survey

This chapter explains how facial reconstruction has been performed manually, and then describes the tissue depth measurements used as the basis for the reconstructions. These measurements are very limited, and expanding them is the reason for the current project. The previous research on the topic is reviewed, showing the different approaches that have been taken to improve the results offered by traditional facial reconstruction. The works presented deal mostly with methods that apply Computer Graphics (CG) to visualize the reconstructions generated with the existing information. Finally, the technology to be used in this project is evaluated, to show how it can improve on the methods currently employed and generate more accurate reproductions of faces.

2.1 Traditional Reconstruction Technique

The reconstruction of faces is traditionally carried out using a reproduction of the subject's skull, and then applying layers of clay on top of it to simulate the tissues covering the bone. This material is built up until it reaches the depth of the corresponding tissue, according to a set of measurements taken at key locations of the face, called "landmark points". Figure 2-1 and Figure 2-2 show two views of a skull with the dowels marking the landmark points and some of the initial layers of clay.

Figure 2-1 Frontal view of a skull during a reconstruction [Source: WWW3]

Figure 2-2 Lateral view of a reconstruction [Source: WWW3]

The whole process begins by creating a replica of the skull. This usually requires some care, as the skull may be either important evidence of a crime or an ancient relic that needs to be preserved. First a mould of the skull is taken, and then it is used to produce a duplicate to work with. On the replica of the skull it is possible to drill holes at the landmark points and insert the dowels, in such a way that the pins stand out from the surface of the skull at exactly the same height as the tissue depth at that part of the face.

A realistic approach to the reconstruction of the face, as used by Richard Neave [Prag1997], is to model the main muscles in the face first, using the information that can be obtained from the skull to give the proper volume to the muscles. This provides a more accurate thickness for the reproduced tissue, although it is more time consuming, and in the finished model the muscles will be covered and hidden behind other layers of clay representing the skin.

Whether the muscles are reproduced or not, the head is then covered with strips of clay in a uniform manner, up to the level indicated by the depth measurements. To cover the areas between the landmark points, the tissue depths are interpolated to create a realistic looking face. As a final step, details are added to the reconstruction to make it look more natural. These include hair, wrinkles, moustaches, spectacles, clothing, jewellery, etc. A good description of the whole process can be found in [WWW3].

John Prag and Richard Neave have been working on facial reconstruction for several years. They have worked on reconstructions for forensic identifications, and have also had great success recreating the faces of historical personalities and other ancient human remains. They have made the faces of Philip II of Macedonia and of King Midas of Phrygia [Prag1997], among other reconstructions of this kind. The results of these reconstructions give a more realistic image of people known to have lived long ago. Comparing the recreated face with the images found in sculptures or paintings of the same person is also a good test for the reconstruction methods.

A problem with creating a face from the skull is that the skull holds no information regarding the shape of the nose, lips, eyes and ears, because these are not dependent on the form of the skull. For this reason, it is necessary to model these features subjectively, using the most likely shape that would result in a natural looking face.

The technique as previously described has several inherent problems:

It is necessary to create a copy of the original skull to work with, because in general it is desirable not to alter the original, whether it is forensic evidence or a relic of the past. It is very important that the replica is as exact as possible; otherwise the reconstruction will not be accurate.


Modelling the face by adding layers of material is time consuming, and the result depends on the subjective judgement of the artist creating the reconstruction.

Because of the limited information provided by a skull, and the subjectivity of the method, it is sometimes necessary to present different alternative reconstructions, and creating them is a very slow process using the traditional technique, as it requires the construction of a whole new model.

In an effort to solve these problems, modern imaging techniques normally used in medicine have been applied to obtain a digital version of the skull without the need to alter the original. If a physical model of the skull or the reconstructed head is later needed, it is possible to create one from the digital data using stereolithography. The 3D computer model of the face is given as the input to a machine containing a liquid photopolymer plastic. This plastic hardens when a laser is applied to its surface. The computer model is "drawn" on the liquid, one layer at a time, and the already solid part is then lowered so that the next layer can be plotted on top. At the end, the complete solid model is extracted from the liquid and cured to make it more resistant [WWW2].

Computer technology is also employed to produce 3D graphic models of the skull and the reconstructed faces. The general idea is to have computers perform the reconstruction process based only on scientific information, and to avoid, as much as possible, the artistic and subjective aspects of the process. Computers will also reduce the time taken to produce a face, and make it easier to modify the resulting face if necessary.

2.2 Landmark Points

The key information when making a reconstruction is the depth of the tissues over the skull. This is what guides the artist while giving shape to the face. Measurements of the thickness of the tissue covering the face have been taken since the 19th century and have not been thoroughly updated. Most of the measurements were originally taken from dead bodies, by inserting pins into the face until the bone was reached and then marking how deep the pin went. The majority of the data was taken from Caucasian males. Only recently has there been more interest in obtaining separate sets of measurements for each ethnic group and gender.

The most traditional data set consists of 21 landmark points, and is due to J. Stanley Rhine and C. Elliot Moore [Prag1997]. These points are also classified by biological sex and build of the sample subjects (Table 2-1). The landmark points are placed at the most distinguishable features of the face. The normal dataset includes information only for points on the front of the head; there is no data for the back of the skull. The locations of the landmark points are shown in Figure 2-3.


Figure 2-3 Location of the landmark points [Source: Cairns2000]

Although this data is the most widely used for reconstructions, it is not very reliable and has several shortcomings:

The number of landmark points is relatively small (only 21 in Rhine and Moore's set). There are large areas of the face that do not have a specific depth measure, and the values at those points are interpolated from the surrounding points. There is no defined method to do the interpolation, so it is done according to the discretion of the artist, and thus, the resulting face is very subjective and not reproducible.

The landmark depth information was obtained from a small number of samples, and it is not easy to gather more information using the older methods. More recently, other researchers have tried different sources to increase the number of samples, but the results obtained so far have been limited.

Most of the depth samples have been taken from dead bodies, and this does not provide an accurate measurement corresponding to a living person. The thickness of the tissues changes as soon as the body starts to decompose, and the tissues themselves deform when the body is lying down, instead of standing [Prag1997].
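The first shortcoming notes that depths between landmark points are interpolated at the artist's discretion, with no defined method. As an illustration of what a defined, reproducible rule could look like, the sketch below uses inverse distance weighting. This is an assumed stand-in chosen for its simplicity, not a method taken from the sources cited here; the function name, the `power` parameter and the sample coordinates and depths are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# A landmark is a 3D position on the skull and a tissue depth in mm.
Landmark = Tuple[Sequence[float], float]


def interpolate_depth(point: Sequence[float],
                      landmarks: List[Landmark],
                      power: float = 2.0) -> float:
    """Inverse-distance-weighted estimate of the tissue depth at `point`.

    Nearer landmarks contribute more; `power` controls how quickly the
    influence of a landmark falls off with distance (assumed value)."""
    if not landmarks:
        raise ValueError("at least one landmark is required")
    weighted_sum = 0.0
    weight_total = 0.0
    for position, depth in landmarks:
        distance = math.dist(point, position)
        if distance == 0.0:
            # Exactly on a landmark: return the measured value itself.
            return depth
        weight = 1.0 / distance ** power
        weighted_sum += weight * depth
        weight_total += weight
    return weighted_sum / weight_total


# Hypothetical landmarks: positions in mm, depths in mm.
sample_landmarks = [((0.0, 0.0, 0.0), 4.0), ((2.0, 0.0, 0.0), 8.0)]
midpoint_depth = interpolate_depth((1.0, 0.0, 0.0), sample_landmarks)
```

Because the same inputs always give the same output, a rule of this kind is reproducible, which the manual interpolation described above is not. Whether it gives anatomically plausible depths is a separate question that the sketch does not address.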


Table 2-1 Table of measurements for tissue depth. [Source: Cairns2000]

Recently, modern technologies have been used to obtain new and more accurate information. The first attempts used ultrasound imaging to take the measurements from live subjects. Computed Tomography (CT) has also been used to obtain even better results. The current project retrieves information from the analysis of MRI files.

These new techniques have also made it possible to obtain data for the skull of a living person, so that the technique can be tested by comparing the reproduction obtained with the face of the actual person. The results show that Facial Reconstruction can produce a very similar reproduction of the real face, although it is still not possible to make an exact match based only on the skull.


2.3 Previous Research on the Topic

Several researchers have worked on using computer technology to aid in the reconstruction of faces. Most of the research has used computer graphics to present reconstructions of faces based on the traditional set of landmark points. Some work has also been done on obtaining more data for the depth of the tissues, using modern technologies such as MRI and CT.

P. Vanezis et al. [Vanezis2000] have successfully used computer graphics to generate face models from skulls. They use a 3D scan of the skull, and then manually place the landmark points over the computer model using their own software package. The next step is to overlay a previously scanned face model on top of the skull and transform the face template, matching the landmark points in the face with those on the skull. The model generated can later be used along with police identification programs, such as CD-fit and E-fit, to add detail to the reconstructed face. This method has been successfully used to identify murder victims, and to reconstruct the faces of anthropological remains of mummified corpses.

At the University of British Columbia there have been two research projects on the application of computer graphics to generate and display a reconstructed face. Katrina Archer [Archer1997] uses a face modelled with hierarchical B-splines to fit over a scanned skull, using the traditional set of landmark points and then extrapolating some other points to make the fit correct. Her work made it clear that depth measurements are needed for the whole head, and not only the face; otherwise, the back of the skull remains exposed. B-splines are appropriate for making the necessary interpolation between the landmark points provided, and allow for several levels of adaptation to the shape of the skull. By allowing certain hierarchical levels of the curves to conform to the landmark points, it is possible to maintain the appearance of some features of the original face model. This was used to preserve the shape of the nose and lips from the facemask, while the rest of the face was altered to fit on top of the skull. The end result is not a completely accurate reconstruction, because it depends on the features of the pre-rendered mask, but the model obtained is very flexible. By editing the parameters or the control points of the B-splines, the face can be easily modified.

David Bullock [Bullock1999] takes a first step by interpolating the tissue depths between the known measurements to produce more landmark points, and later uses these interpolated values across the face to produce the reconstructions. He uses two different methods to produce a face on top of a digitised skull:


Isosurfaces are employed to "grow" the tissue on top of the skull. The surface of the skull emits points within the limits of the tissue depth at each spot, and then a modified version of the Marching Cubes algorithm is used to generate the polygons that contain the emitted points. The end result is incomplete because it does not generate a representation for the nose or the lips. Its advantage is the more automatic generation of a face.

The other method is an extension of the work previously done by Archer. It uses a face model based on hierarchical B-splines, fit over the skull using the interpolated landmark points.

At the University of Sheffield, Jonathan Ratnam [Ratnam1999] has previously worked on a system similar to the one proposed for this Thesis. The objective of his research was to obtain a new set of landmark points from the analysis of MRI files. He worked with an MRI dataset corresponding to a 3-year-old child, and was able to obtain measurements for tissue depth, and then reconstruct the face of the same child using computer graphics. He used 2 different techniques to obtain the information of tissue depths from MRI data:

One approach was to construct both the skull and the face as 3D models, and then trace rays through the head. The intersections of these rays with the meshes corresponding to the skull and the face would give the tissue depth at that point.

The other technique used was to acquire the tissue depth values directly from image analysis of the MRI slices. This process consisted of finding where the values in an MRI image changed from 0 to a higher value (at the air-face interface) and then again to a low value (at the face-bone interface).
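The second technique can be illustrated with a minimal sketch that scans one row of brightness values from outside the head inwards. This is not Ratnam's code: the `RowDepth` structure, the `scanRow` function and the single fixed `threshold` separating dark pixels (air, bone) from bright ones (soft tissue) are assumptions made only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Result of scanning one image row from the outside of the head inwards.
struct RowDepth {
    std::size_t skinIndex;  // first bright pixel: air-face interface
    std::size_t boneIndex;  // first dark pixel after the tissue: face-bone interface
    // Tissue thickness in pixels, measured along the row.
    std::size_t depth() const {
        assert(boneIndex >= skinIndex);
        return boneIndex - skinIndex;
    }
};

// Hypothetical helper. Pixels above `threshold` count as soft tissue,
// pixels at or below it count as air or bone.
// Returns false when the row contains no air-tissue-bone sequence.
bool scanRow(const std::vector<std::uint16_t>& row, std::uint16_t threshold,
             RowDepth& out) {
    std::size_t i = 0;
    while (i < row.size() && row[i] <= threshold) ++i;  // skip the air
    if (i == row.size()) return false;                  // only air in this row
    const std::size_t skin = i;
    while (i < row.size() && row[i] > threshold) ++i;   // cross the soft tissue
    if (i == row.size()) return false;                  // no bone was reached
    out.skinIndex = skin;
    out.boneIndex = i;
    return true;
}
```

The depth obtained this way is measured along the image row, so it is parallel to the slice rather than normal to the skull.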

The points obtained from the measurements were later used to generate a polygon mesh and render the face of the child. The model created was composed of a very large number of polygons, and some research was carried out to reduce the number of vertices and make the model more manageable. The results he obtained were not used afterwards, because samples obtained from the head of a child cannot be applied to the reconstruction of an adult. This is why the system produced was not tested on generating the faces of other subjects. Time constraints also prevented the system from being used on other MRI samples.

Some attempts at obtaining new tissue depth information have used Computed Tomography (CT) scans instead of MRI. The advantage of this technology is that bones are clearly identifiable on the scans, which makes it easier to locate the face and bone interfaces. Unfortunately, obtaining CT scans exposes live subjects to X-rays, which can be harmful, and this limits the availability of new samples [WWW1]. For this reason the technology has mostly been used for the reconstruction of mummies, since CT poses no danger to the remains.


A clay reconstruction was made of a mummy from the National Museum of Scotland, and then compared to a portrait found with the mummy, showing a very noticeable resemblance. The skull was first scanned using CT, and then a physical model of the skull was produced using stereolithography [Macleod2000].

A group of Italian scientists at Pisa have also worked on the reconstruction of a mummy, obtaining base information from a CT scan of the subject, and then applying computer graphics techniques to reconstruct a face. Textures, taken from photographs of a live subject of the same racial group as the mummy, were applied to get a more realistic portrait [Attardi1999].

2.4 Technology Evaluation

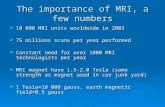

2.4.1 Magnetic Resonance Imaging (MRI)

MRI consists of sending radio waves through the human body while it is within a strong magnetic field. The absorption and emission of those waves by the different tissues in the body is registered. Magnetic resonance produces images of the protons in the body, which are mostly the hydrogen nuclei of water molecules [WWW1].

Figure 2-4 Sample MRI image of the head. [Source: Evison2000]

Because of the varying levels of water in each human organ, MRI scans can show different tissues. The images obtained represent higher amounts of water with brighter colours, while organs with less water appear darker. Void spaces show up entirely black. Because the bones in the head have a low content of water, they appear very dark on the scans.


Unlike traditional X-ray imaging, MRI is a volume imaging technique, meaning it can produce a series of images, each showing the scan of one slice of the body. In this way, a three-dimensional "image" of the patient's body can be obtained by joining the sequence of slices [Hornak2002].

2.4.2 Image Processing

Image processing techniques can be used to find the interface between bone and tissue in the MRI files. These are basically greyscale images where each pixel has an associated brightness level (BL) that corresponds to the kind of tissue shown in the image. Soft tissue is brighter, while bone appears almost black on MRI scans (Figure 2-4). Finding the sudden changes in the intensity of the pixels will show where the skin and bone interfaces are. This is achieved with edge detection methods.

Edges in an image are the pixels where there is a large variation in the brightness values represented. Edges are defined with two properties: direction and magnitude. All the pixels within an edge have approximately the same brightness values. The way brightness changes from pixel to pixel can be represented as a function, and most edge detection techniques are based on the derivatives of this function. The derivative of a function represents the gradient, or rate of change, of the function's values. By analysing the behaviour of the derivatives, it is possible to find where a sudden change in the brightness levels of the image has occurred.

Some edge detection algorithms rely on a first step in which Gaussian smoothing is carried out to remove noise from the image that might otherwise produce undesired results when the edges are enhanced. The Gaussian smoothing is chosen so that the edges will not be blurred during the process. Some of the algorithms to detect edges are [Sonka1999]:

Convolution based operators: These use convolution masks corresponding to the directions of the edge. The masks used are matrices of varying sizes, depending on the number of neighbouring pixels to be considered when looking for an edge. The number of masks to be used also depends on the number of neighbours. The Laplacian operator is independent of the direction, so it uses only one convolution mask.

Zero-crossing methods: These use the second derivative to find where the values of the pixels change from a low to a high range, and vice versa. These changes correspond to where the second derivative becomes 0. The second derivative is approximated by using a Difference of Gaussians (DoG) or Laplacian of Gaussian (LoG).

Canny edge detection: This is based on a set of rules for finding each edge only once and in the correct location. It makes use of the edge's direction to make a convolution with a Gaussian and find the maximum values of the gradient perpendicular to the edge.
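As an illustration of the first family, the sketch below applies the two 3x3 Sobel masks (one per direction) and combines their responses into a gradient magnitude. The Sobel operator is chosen here only as a familiar example of a convolution based operator; the text does not say which masks the project uses, and the `Image` container is a hypothetical type introduced for this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Greyscale image stored row by row; a hypothetical container for this sketch.
struct Image {
    std::size_t width;
    std::size_t height;
    std::vector<float> pixels;  // width * height brightness levels
    float at(std::size_t x, std::size_t y) const { return pixels[y * width + x]; }
};

// Gradient magnitude computed with the 3x3 Sobel masks.
// Border pixels have no complete neighbourhood and are left at 0.
Image sobelMagnitude(const Image& in) {
    assert(in.pixels.size() == in.width * in.height);
    Image out{in.width, in.height, std::vector<float>(in.pixels.size(), 0.0f)};
    if (in.width < 3 || in.height < 3) return out;
    for (std::size_t y = 1; y + 1 < in.height; ++y) {
        for (std::size_t x = 1; x + 1 < in.width; ++x) {
            // Horizontal mask: right column minus left column.
            const float gx =
                (in.at(x + 1, y - 1) + 2.0f * in.at(x + 1, y) + in.at(x + 1, y + 1)) -
                (in.at(x - 1, y - 1) + 2.0f * in.at(x - 1, y) + in.at(x - 1, y + 1));
            // Vertical mask: bottom row minus top row.
            const float gy =
                (in.at(x - 1, y + 1) + 2.0f * in.at(x, y + 1) + in.at(x + 1, y + 1)) -
                (in.at(x - 1, y - 1) + 2.0f * in.at(x, y - 1) + in.at(x + 1, y - 1));
            out.pixels[y * in.width + x] = std::sqrt(gx * gx + gy * gy);
        }
    }
    return out;
}
```

Large values in the result mark the pixels where brightness changes sharply, such as the transition from air to skin.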

2.4.3 3D Computer Graphics for Face Rendering

After the information necessary to create a reconstruction has been gathered, it is necessary to produce a representation of the face. Computer graphics can be used to do this in a versatile way, while integrating the larger number of landmark points that can be gained from the MRI files. There are several techniques that can be used to create and to display the faces.

Archer uses a pre-defined 3D face modelled with B-splines as a generic mask, which can be adjusted to fit on top of the scanned skull. This technique requires placing the landmark points on the skull model, and then mapping those points to the corresponding control points of the B-splines. The advantage of this method is that the curves automatically interpolate the values between the known tissue depths. The resulting face is smooth and can be modified by altering the control points to generate variations of the face.

Another technique is to add the tissue depth measurements to the coordinates of the points on the skull. This will produce new points that can be used as the vertices of a polygon mesh representing the face. The resulting mesh can be manipulated by directly editing the vertices, or using other techniques, such as Free Form Deformations (FFD). The benefit of using a polygon mesh is that most graphics hardware has routines to draw and shade polygons efficiently. Polygons can also be easily employed in a system that generates the whole face model automatically, without the need for a human operator to aid in the process [Watt2000].

A drawback of traditional landmark points when applied in conjunction with computer graphics is that the data available is only from the face, and there is no depth information about the rest of the head. When a face is created automatically on top of the landmark measurements, the back of the skull is normally left uncovered, because there is no information from which the algorithm can generate the skin. In order to make an appropriate reproduction of the whole head, it is necessary to obtain measurements from all around the head. This will be solved by the automatic analysis of scans of the whole head, increasing the number of landmark points to cover the entire skull.

A further step that can be taken to improve the likeness of the face obtained is to apply texture maps on top of the model generated. These textures should be taken from a person of the same group as the victim, at least when such information can be obtained from the evidence available. Textures can greatly aid in making the modelled face look more human and more recognizable to someone who might have known the subject [Attardi1999].
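The technique of adding tissue depths to the skull coordinates can be sketched as follows. The text does not state the direction in which the depth is added; this sketch assumes it is applied along the outward surface normal at the skull point, and the names `Vec3` and `offsetAlongNormal` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Move a skull point outwards by `depth` along the surface normal.
// The normal does not need to be of unit length, but it must not be zero.
Vec3 offsetAlongNormal(const Vec3& skull, const Vec3& normal, double depth) {
    const double len = std::sqrt(normal.x * normal.x +
                                 normal.y * normal.y +
                                 normal.z * normal.z);
    assert(len > 0.0);
    const double s = depth / len;  // scale that turns the normal into the offset
    return Vec3{skull.x + normal.x * s,
                skull.y + normal.y * s,
                skull.z + normal.z * s};
}
```

Each point produced in this way would become one vertex of the polygon mesh representing the face.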


2.5 Summary

The technique of facial reconstruction is already well developed, and has produced impressive results when applied to both criminal and historical reconstructions. It has some limitations, mainly the long time it takes to produce a reconstruction, and the fact that it is a very artistic process, in which there is a lot of room for subjectivity. One of the aspects that make facial reconstruction unreliable is that it is based on very limited information about the thickness of the skin of the face. The data normally used is not very detailed, so the person doing the reconstruction has to adapt the information according to his or her judgement.

To overcome these shortcomings, several scientists have done research on how to apply more reliable computer science methods to create faces objectively. Most of the research has sought to use computer graphics to automatically adapt a face on top of a digitised skull. The results obtained so far have been incomplete, but show that there is great potential in the use of computers to make facial reconstruction more reliable.

The technologies to be used in the current project are based on the task of obtaining new tissue depth data. The proposed source of information is MRI files, because they offer a very appropriate reference with which to improve the existing knowledge at a low cost. The techniques that can be used to obtain the data required have been described: image processing will aid in automating the extraction of information from the MRI scans, while computer graphics will provide quick feedback on the faces reconstructed.


3 Requirements and analysis

3.1 System Requirements

The general requirement for the system is to create a computer program capable of getting tissue depth information from MRI scans. The information gathered would be used to specify a new set of landmark points that can be applied to produce more accurate reconstructions.

The program must be able to open any number of files containing MRI data specified in a common format, extract all the slices from the scan, and then analyse each of the individual images. From them, the locations of the edges representing the skin and the skull must be obtained and stored as 3D coordinates. Using these points, a 3D model of the skull and the head must be produced. The user of the program will determine the actual location of the landmark points manually, using the 3D interface to view the information extracted from the MRI data. Once the user has marked a landmark point, the system will compute the distance between the two surfaces extracted.

The previous work by Ratnam can be used as a basis for how to process MRI images and how to construct a 3D model from this information. The system to be developed must fulfil the following list of requirements:

Read MRI files as input.

Automatic analysis of the files.

Extraction of the edges of relevance, using image processing.

Creation of a 3D display of the data obtained.

Allow user selection of points on the surface of the skull.

Compute the thickness of the tissue covering the skull at the point selected, in a normal direction.

3.2 Analysis of the Technologies to Use

3.2.1 Format of source data

MRI has already been chosen as the source of information, because of its characteristics. The advantages of using this technology are [WWW1]:

The technique used does not perturb the patient, and thus allows the data to be taken from live individuals. Because of this the measurements are more realistic than those taken from cadavers.


By using a non-intrusive technology to gather the data, it is possible to increase the number of samples available and to obtain better average measurements.

Using image processing, the acquisition of data can be made automatically, taking as many feature measurements as necessary. This allows the user to have a far larger number of landmark points than with manual measurement methods.

The most common format in which MRI data is stored is called Digital Imaging and Communications in Medicine (DICOM) and was proposed by the National Electrical Manufacturers Association. This format stores the information about the scan, and also information about the patient, such as name and age [WWW4]. Because of the lack of DICOM data available, another format of MRI data will also be used. This format encodes the entire group of slice images of a scan in a single file, but has the disadvantage of not carrying any information about the characteristics of the data, not even the number of slices that compose the sample or the size of the individual images.

Other alternatives to MRI that could be used to obtain tissue depth data are Ultrasound and Computed Tomography. Ultrasound imaging has been used before for the same purpose; it was the first method used to acquire measurements from live individuals. Unfortunately the image resolution obtained from this technology is inferior to what can be obtained from MRI [Prag1997]. CT can provide a much better resolution, particularly because the bones show up clearly on CT scans, making it easier to take measurements. The problem with this method is that the radiation used can be harmful to the subject, and thus it cannot be used freely to obtain new samples.

3.2.2 Methods for analysing the image information

The simplest technique that could be used to extract the desired information from the MRI files consists of reading them slice by slice and line by line, beginning from the front of the head, that is, the face. When reading the files this way, the initial values should be almost 0. When the skin is reached, the input values will jump up, and this must be registered as the air-skin interface. The measurement of the tissue thickness begins here and ends when a very low value is encountered, which should be the bone. At this point the measurement of the tissue depth is complete, and the value obtained is stored. The same process is repeated for the back of the head, this time looking for a low value first, followed by a higher one, and then a return to almost 0.

To facilitate the location of these important points in an image, where the values change from one area to another, it is possible to use edge detection algorithms. There are multiple techniques that can be used to enhance the differences found in an image and extract only the information required. Some of these methods include histogram equalization, edge detection and contrast enhancement.

The problem with extracting the tissue depth measures by direct analysis of the MRI files is that all of the distances will be parallel to the slices, and not normal to the skull, which is how the existing data is measured. This problem could be overcome by taking a large enough number of landmark points. The system will then apply this kind of data to reconstruct a face in a way similar to how the MRI files are read. The skull will be divided into slices, and the tissue thickness values will be added to each slice on top of the skull surface.

Ratnam also used another method to gather thickness data. By rendering both the surface of the face and the underlying bone he was able to trace rays from the centre of the skull outward in all directions. This technique provides more accurate measures, because the values taken are normal to the skeleton surface. He used an algorithm called Marching Cubes to extract the volume information for the head and the skull. This produces a very large number of triangles, since triangles are generated for every voxel that the surface crosses. The drawback of using a 3D model to take measurements is the much greater complexity added to the system, since it needs to render two complex surfaces with enough detail to preserve all the important information. Ray tracing techniques are used to measure the tissue thickness, but these are computationally expensive, since a large number of tests must be done against the primitives that compose the 3D model.

Another method commonly used for CG reconstructions is fitting a predefined model of a facemask on top of the skull. For this procedure to be successful, it requires an existing database of pre-rendered faces corresponding to different types of faces. Even with those masks available, it is still time consuming to make certain the mask correctly matches the underlying skull. The advantage of this kind of method is that it can cope with the limited number of landmark points by interpolation. The process requires tissue depths that have already been taken, so it is useful only for reconstructing a face over a skull, and not for extracting that kind of information.

The system developed will make use of image processing algorithms to locate the edges of the image, and from them obtain the 3D coordinates of vertices that will later be used to create a computer model of the head. Polygon meshes will be used to render the information extracted. This provides a versatile display that can show enough detail about the face extracted and a user-friendly interface for locating the landmark points, while the program remains within acceptable levels of performance. The actual measuring of the tissue depths will be done using ray tracing techniques, but limiting the polygons used for the intersection tests to those that may contain the nearest skin surface to a point selected on the skull. In general, only a very small number of the polygons generated will be close enough to the location selected on the skull.


3.2.3 Programming language to use

Ratnam made an attempt to use Java as the programming language for his project. His research showed that this choice was not the most adequate, due to issues of speed and memory management. The system was then developed using C++, obtaining better performance. The choice of the programming language is not of real importance to the end user, but the system is expected to work at an acceptable speed.

For the current program, both C and C++ have been selected. These languages allow for efficient management of memory, which is necessary to deal with the very large MRI files. There are also several tools already available in these languages that can be used for several of the stages in the processing of the information and the generation of computer graphics. The selection is also based on the portability of the code between distinct platforms; while not as simple as with Java, if the code is correctly written, it should be easy to transfer from one platform to another.

The graphics API to be used is OpenGL, as it is widely supported by the graphics cards of most modern computers, and it allows for quick development of 3D models with a number of effects. It also allows user interaction with the models created. An advantage of OpenGL is its platform independence, since it is only a series of libraries that can be invoked from another program. As such, it does not incorporate system specific functions for input, output, or window management.

3.3 Testing and Evaluation

For the evaluation of the image processing algorithms, a group of simple images will be produced, with characteristics similar to those found in the real MRI images. These samples will be employed to test the whole process, from the edge detection to the generation of a usable 3D model and the acquisition of distances between the layers.

To test the tolerance of the program to noise in the input information, it will be necessary to create noisy versions of the sample data, as well as of some of the real MRI images. Some tests will be carried out to determine how much noise a sample can contain while still being correctly processed, and what level of corruption makes an image unusable.

The tissue depth measurements taken can be compared to the traditional data set, to check the general range of the depths obtained. This can only be done for the measurements that correspond to the 21 landmark points, but it will provide a point of reference for the rest of the information collected. The measurements obtained will be considered correct if they are within an acceptable range of the known values for the corresponding group.
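A synthetic test image of the kind described could be generated as in the sketch below. The concentric-ring layout, the brightness values, the uniform noise model and the names `makePhantom` and `addNoise` are all assumptions made for illustration; the text does not specify how the sample images or the noise are produced.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Synthetic square slice made of concentric regions that stand in for
// air (0), soft tissue (200), bone (10) and brain (150). Values are illustrative.
std::vector<std::uint8_t> makePhantom(std::size_t size, double rSkin,
                                      double rBoneOuter, double rBoneInner) {
    assert(rSkin > rBoneOuter && rBoneOuter > rBoneInner && rBoneInner > 0.0);
    std::vector<std::uint8_t> img(size * size, 0);
    const double c = size / 2.0;  // centre of the head in pixel coordinates
    for (std::size_t y = 0; y < size; ++y) {
        for (std::size_t x = 0; x < size; ++x) {
            const double r = std::sqrt((x - c) * (x - c) + (y - c) * (y - c));
            std::uint8_t value = 0;                  // air
            if (r <= rBoneInner) value = 150;        // brain
            else if (r <= rBoneOuter) value = 10;    // bone
            else if (r <= rSkin) value = 200;        // soft tissue
            img[y * size + x] = value;
        }
    }
    return img;
}

// Add uniform noise in [-amplitude, +amplitude], clamped to the range 0..255.
// A fixed seed keeps the noisy test images reproducible.
std::vector<std::uint8_t> addNoise(const std::vector<std::uint8_t>& img,
                                   int amplitude, unsigned seed) {
    assert(amplitude >= 0);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> noise(-amplitude, amplitude);
    std::vector<std::uint8_t> out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) {
        int v = static_cast<int>(img[i]) + noise(rng);
        if (v < 0) v = 0;
        if (v > 255) v = 255;
        out[i] = static_cast<std::uint8_t>(v);
    }
    return out;
}
```

Raising `amplitude` step by step until the edge detector stops finding the two outer contours would give one way of estimating the noise tolerance mentioned above.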


4 Design

The system will consist of five main parts:

Reading of the input MRI data

Detection of edges in the MRI slice images

Selection of a set of representative points out of the edges found

Generation of a 3D model of the head and the skull

Interface to measure distances in the 3D model

The output of each phase should be taken as the input for the next one.

[Flow diagram: Input file, Data extraction, MRI image, Edge detection, Vertex identification, 3D model generation, User interface: Tissue depth measurement]

Figure 4-1 General system design

4.1 Data input

The requirement for the project was to take images encoded in the DICOM format as the input for the program. Each file generally contains a single slice of the scan. These files have a complex data structure, which contains information about the patient, as well as the actual data that describes each image. The relevant information must be extracted and stored in simpler structures before being processed.

Another common format for MRI data is a file with the extension .img, in which all the slices are stored together in binary format, one after the other, as a series of bitmaps, without a header or any other information. The files contain a single brightness value for each pixel, so only greyscale images are encoded this way. This format presents the problem of not containing any information about the size of each slice, or the number of slices contained in the file. The samples available for the project had a similar format, with images of 256 x 256 pixels and 2 bytes per pixel to store the brightness value.


The range of values used in each sample varied significantly, requiring a normalization of the image before being further processed. In order to analyse the information in IMG files, all the slices contained must be stored independently, to be passed as individual images to the next phases of the program.
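The extraction and normalization of one slice from such a headerless file might look like the following sketch, which works on a byte buffer already read into memory. The byte order is not stated in the text, so little-endian is assumed here, and min-max scaling to 0..255 is only one possible normalization. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Extract slice number `index` from a headerless buffer of 16-bit pixels.
// Assumption: each pixel is stored low byte first (little-endian).
std::vector<std::uint16_t> extractSlice(const std::vector<std::uint8_t>& raw,
                                        std::size_t width, std::size_t height,
                                        std::size_t index) {
    const std::size_t pixelsPerSlice = width * height;
    const std::size_t offset = index * pixelsPerSlice * 2;  // 2 bytes per pixel
    assert(offset + pixelsPerSlice * 2 <= raw.size());
    std::vector<std::uint16_t> slice(pixelsPerSlice);
    for (std::size_t i = 0; i < pixelsPerSlice; ++i) {
        const unsigned low = raw[offset + 2 * i];
        const unsigned high = raw[offset + 2 * i + 1];
        slice[i] = static_cast<std::uint16_t>(low | (high << 8));
    }
    return slice;
}

// Linearly rescale the brightness values of a slice to the range 0..255.
// A slice with a single brightness value is mapped to all zeros.
std::vector<std::uint8_t> normalise(const std::vector<std::uint16_t>& slice) {
    std::vector<std::uint8_t> out(slice.size(), 0);
    if (slice.empty()) return out;
    const auto mm = std::minmax_element(slice.begin(), slice.end());
    const std::uint32_t lo = *mm.first;
    const std::uint32_t hi = *mm.second;
    if (hi == lo) return out;
    for (std::size_t i = 0; i < slice.size(); ++i) {
        out[i] = static_cast<std::uint8_t>((slice[i] - lo) * 255u / (hi - lo));
    }
    return out;
}
```

For the sample files described above, `width` and `height` would both be 256, so each slice occupies 256 x 256 x 2 = 131072 bytes of the file.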

4.2 Edge Detection

Edge detection consists of image processing algorithms that find the locations within an image where the values of the pixels vary from one area of the image to another. This is normally used to detect different objects shown in an image. The amount of variation necessary for an edge to be found depends on the purpose of the image processing, and on the nature of the picture.

For this project, edge detection will be used to find the transitions from dark to light areas in MRI images. These images show both void areas and bones with very low values, while other tissues have various levels of grey. By finding the edges of highest contrast, it will be possible to identify the tissues useful for generating 3D models of the head and the skull.

Some other algorithms can be used to facilitate the location of the edges of interest to the project. Some of these techniques are well known and available in the majority of image manipulation programs. Most of them require the adjustment of parameters to vary the results of applying the transformations to an image. It will be necessary to implement these methods so that they are executed automatically on every one of the images processed, using parameters that produce good results in the majority of the samples.

4.3 Vertex Identification

After obtaining an image with the edges highlighted, another process will select only the relevant borders that represent the skin and the bone, and discard the remaining edges, which are not useful for the process. The program will focus on the two outermost edges found in each image, giving only the contours of the head and the skull inside.

The simplest way to locate the outermost edges is to scan an image from one side to the other, and mark where the first two edges are found, for the front of the head, and then where the last two are, representing the back of the head. This will give 4 edges in most parts of the input image. The only place where there should be only 2 edges is at the top of the head, where the edges curve to meet each other.

When the edges have been located, the coordinates where they were found can be transformed to 3D space, using the X and Y axes of the image and adding depth information from the number of the slice being processed as the Z-axis. These coordinates can be stored to represent vertices. The use of the slice number as the Z coordinate is not very accurate, because the sample data available does not contain information about the distance between slices. It was not possible to contact the source of the data in time for this writing. Currently the distance between slices is a parameter that the user can specify through a configuration file associated with each sample scan.

Each of the processing steps so far takes the output of the previous phase, performs its task, and passes the results on to the next one. Initially, each process generated a new image of the output obtained, giving a visible representation of the results. This method was not useful for a working project, as it produced a large quantity of images that were of no real use after the whole process had finished. The interchange of data between the tasks in the program was therefore modified to use internal data structures instead of images. The first few steps of image processing share only a pointer to the array where the information of each slice is stored, and modify the data directly. Later the points found are stored in linked lists, before being used to render the 3D model.
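The mapping from an edge pixel to a 3D vertex can be written in a few lines. This is a sketch under the assumption that image columns and rows are used directly as X and Y, and that the slice spacing read from the configuration file is expressed in the same pixel units; `Vertex` and `toVertex` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

struct Vertex { double x, y, z; };

// Convert an edge pixel found at (col, row) in slice number `slice`
// into a 3D vertex. `sliceSpacing` is the user-supplied distance
// between consecutive slices, in pixel units.
Vertex toVertex(std::size_t col, std::size_t row, std::size_t slice,
                double sliceSpacing) {
    assert(sliceSpacing > 0.0);
    return Vertex{static_cast<double>(col),
                  static_cast<double>(row),
                  static_cast<double>(slice) * sliceSpacing};
}
```

With a spacing of 1.5, for instance, an edge pixel in slice 4 is placed at Z = 6.0.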

4.4 3D Model generation

The output produced by the edge detection phase will be used to generate the polygon meshes corresponding to the skin and the bone of the head. The vertices found in the previous stage can now be used to create the 3D primitives with which to model the surfaces. The vertices found individually must be ordered in a way that allows them to be strung together to form a polygon mesh. The number of points affects both the detail of the image produced and the performance of the program. Having more vertices in the polygons means a smoother surface, but also makes the rendering more computationally intensive.

Before the actual rendering, the normal vectors of the polygons must be computed. These vectors are perpendicular to the plane of a polygon, and are used for lighting calculations, to correctly predict the direction in which light will be reflected when it hits the surface of the rendered objects [Wright2000].

Some effects commonly used in CG will also be applied to allow a better visualization of the surfaces. Lighting gives an impression of volume to polygons that would otherwise look flat. Transparency can be used to show the inner skull layer even when it is entirely hidden behind the skin. Hidden surface removal can be used to reduce the computational load of the models rendered. Finally, pre-defining the 3D models before displaying them can also increase the speed of the interface.
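For a triangular polygon, the normal vector can be obtained from the cross product of two of its edges, as in the sketch below. It assumes the vertices are listed counter-clockwise when seen from outside the surface, which is the usual OpenGL convention for front faces; the type and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Unit normal of the triangle (a, b, c), computed as (b - a) x (c - a).
// With counter-clockwise vertex order the normal points towards the viewer.
Vec3 triangleNormal(const Vec3& a, const Vec3& b, const Vec3& c) {
    const Vec3 u = {b.x - a.x, b.y - a.y, b.z - a.z};
    const Vec3 v = {c.x - a.x, c.y - a.y, c.z - a.z};
    const Vec3 n = {u.y * v.z - u.z * v.y,
                    u.z * v.x - u.x * v.z,
                    u.x * v.y - u.y * v.x};
    const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    assert(len > 0.0);  // a zero length means the triangle is degenerate
    return Vec3{n.x / len, n.y / len, n.z / len};
}
```

Reversing the order of two vertices flips the sign of the normal, so a consistent vertex order across the mesh matters for correct lighting.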


4.5 User Interface

After extracting the relevant information from the MRI files and creating the 3D models, the user must be able to interact with the generated head and select points on the surface of the skull to obtain the required tissue depth measurements. Computer graphics suits this task well, since it allows the user to manipulate the object in 3D space: the model can be rotated to view it from any angle, and scaled to zoom in and out. The mouse can be used to select individual points on the skull. To make these points accessible, the skin will be rendered as a transparent object. It will also be possible to hide the 3D model of either the skin or the skull, to give a better view of the other.

The user will be able to toggle some other features of the rendering, to provide different views of the scene and means of interacting with it. Lighting can be turned on and off. The surfaces can be shown as filled polygons or as a wire frame, which allows individual points to be selected more precisely.

To permit both the manipulation of the object and the selection of points to be done using the mouse, it is necessary to create a selection mode, in which the mouse no longer controls the rotation or scaling of the object, but can be used to pick points. Even when this mode of operation is enabled, it will still be possible to rotate and scale the head using the keyboard.

Once the user has selected the landmark points on the surface of the skull, a normal vector will be traced from each point, looking for the polygons on the skin surface that it intersects, and the distance to the nearest intersected skin triangle will be measured. The output of the program should be the distance found at this point.

Currently, the data samples available do not contain information about the real sizes of the images. This is required to translate the measurements made in 3D space to a real scale that can be of use for the intended purposes. The system developed will thus return the distances measured in voxels in the 3D space. It will be necessary to transform these measurements using other information about the scale of the MRI data.
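The measurement step described above can be sketched in C as a ray cast from the landmark along its normal, tested against every skin triangle. This is a minimal sketch and not the code of the system: the text does not say which intersection test is used, so the Möller-Trumbore test is assumed here, and the names `Vec3`, `Triangle`, `ray_triangle` and `tissue_depth` are hypothetical.

```c
#include <math.h>
#include <stddef.h>

typedef struct { double x, y, z; } Vec3;
typedef struct { Vec3 a, b, c; } Triangle;

static Vec3 sub(Vec3 u, Vec3 v) { Vec3 r = { u.x - v.x, u.y - v.y, u.z - v.z }; return r; }
static double dot(Vec3 u, Vec3 v) { return u.x * v.x + u.y * v.y + u.z * v.z; }
static Vec3 cross(Vec3 u, Vec3 v) {
    Vec3 r = { u.y * v.z - u.z * v.y, u.z * v.x - u.x * v.z, u.x * v.y - u.y * v.x };
    return r;
}

/* Moller-Trumbore test (assumed technique): returns the parameter t of the
   hit point origin + t * dir, or -1.0 when the ray misses the triangle. */
static double ray_triangle(Vec3 origin, Vec3 dir, Triangle tri)
{
    const double eps = 1e-9;
    Vec3 e1 = sub(tri.b, tri.a), e2 = sub(tri.c, tri.a);
    Vec3 p = cross(dir, e2);
    double det = dot(e1, p);
    if (fabs(det) < eps) return -1.0;      /* ray parallel to the triangle */
    double inv = 1.0 / det;
    Vec3 s = sub(origin, tri.a);
    double u = dot(s, p) * inv;            /* first barycentric coordinate */
    if (u < 0.0 || u > 1.0) return -1.0;
    Vec3 q = cross(s, e1);
    double v = dot(dir, q) * inv;          /* second barycentric coordinate */
    if (v < 0.0 || u + v > 1.0) return -1.0;
    double t = dot(e2, q) * inv;
    return (t > eps) ? t : -1.0;           /* ignore hits behind the origin */
}

/* Tissue depth in voxels: distance to the nearest skin triangle hit by the
   unit-length normal traced from the landmark, or -1.0 if nothing is hit. */
double tissue_depth(Vec3 landmark, Vec3 unit_normal,
                    const Triangle *skin, size_t count)
{
    double best = -1.0;
    for (size_t i = 0; i < count; i++) {
        double t = ray_triangle(landmark, unit_normal, skin[i]);
        if (t >= 0.0 && (best < 0.0 || t < best)) best = t;
    }
    return best;
}
```

Because the normal is assumed to have unit length, the returned value is a distance in voxel units. Once the physical size of a voxel is known, it could be converted to a real scale by multiplying by that size.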


Implementation Data acquisition

5 Implementation

There have been several changes in the implementation of the application, as the development process progressed, and new tools were constantly being considered and evaluated. Windows was originally chosen as the platform to use for the implementation, because of its widespread use. The drawback was that most of the tools employed were native to Linux, while only the MRI reader contributed by James Edge was developed for Windows using Visual C++. Because of the lack of availability of DICOM data, the importance of the reader was lessened, and the selection of OpenGL as the graphics API allowed the system to be developed under Linux, and then be very easily ported over to Windows.

The system is programmed as a series of functions distributed in separate files, which perform specific tasks, related to the extraction of individual images from the input files, image processing to locate the edges, sorting of the data and preparation to generate the graphical representation. The main function for the program calls upon other functions to progressively filter the information, and then to draw the 3D model out of the data obtained.

5.1 Data acquisition

Because the data available for testing came in two different formats, it was necessary to create alternate ways of extracting the relevant image information from each input file.

To read the files encoded in DICOM format, a program written by James Edge in Visual C++ was used. This program was already capable of reading a group of files containing the slices of a data set and creating a 3D visualization of them: the image information of each slice is used as a texture, and a stack of transparent planes is displayed, each having the texture of an MRI slice. This creates a pseudo 3D representation of the scanned head. The program was first modified to output a series of image files, encoded in PGM format, to be used as the input in the next stage. The use of several images is cumbersome, but the program can be modified to output a single file encoded in the IMG format, as well as the basic configuration file that describes the properties of the IMG file, such as the number of slices contained and the size of each image.

For the case of the data in IMG format, the program developed must first read a configuration file that contains information about the IMG file. With this it is possible to open the file, and read chunks of it, the size of a single slice at a time. For example, in the case of the sample files, to obtain a slice, it is


necessary to read 256 X 256 X 2 bytes from the input. Once each slice has been separated, it can be passed to the image processing algorithms. The actual code implementation of this is done using a function that opens the input IMG file and goes into a loop to read the data from each of the slices. The data extracted is stored in a dynamic array, and passed to different functions. The array for each individual image is passed to the image processing algorithms, which progressively filter the information, first preparing the image, then detecting the edges and finally finding the vertices to be used for rendering. Once this information has been obtained for each slice, it is stored in linked lists, and returned to the main method.
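The slice extraction described above can be sketched as follows. This is an illustrative sketch, not the function used in the project: the name `read_slice` and its parameters are hypothetical, the width and height are assumed to come from the configuration file, and the pixels are assumed to be 2-byte values stored in the byte order of the host machine.

```c
#include <stdio.h>
#include <stdlib.h>

/* Reads slice number `index` (0-based) from an open IMG file into a newly
   allocated array of width * height pixels. Returns NULL on failure; the
   caller frees the result. Assumes unsigned short is 2 bytes wide. */
unsigned short *read_slice(FILE *img, int width, int height, long index)
{
    size_t pixels = (size_t)width * (size_t)height;
    long offset = index * (long)(pixels * sizeof(unsigned short));
    unsigned short *slice = malloc(pixels * sizeof *slice);
    if (slice == NULL) return NULL;
    /* Jump to the start of the slice, then read exactly one slice. */
    if (fseek(img, offset, SEEK_SET) != 0 ||
        fread(slice, sizeof *slice, pixels, img) != pixels) {
        free(slice);
        return NULL;
    }
    return slice;
}
```

For the sample files, a call such as `read_slice(img, 256, 256, i)` would read the 256 X 256 X 2 bytes of slice `i`. A loop over all the slices listed in the configuration file would then pass each array to the image processing stage.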

[Diagram with the following elements: MRI images (DICOM format); DICOMViewer (Windows); IMG file (contains all slices); FacialMRI main program; MRI reader (split individual images); slice image; image processing (edge detection); vertices]

Figure 5-1 Extraction of slice data to be processed

5.2 Edge Detection

The image processing necessary to obtain the required edges in an MRI image of the head is done using an existing program called SUSAN. It was developed by Stephen M. Smith, and is freely available online for use in academic environments [Smith1995]. It implements algorithms to perform smoothing, edge detection and corner detection on an image. For each of these three modes of operation, the program can accept parameters to alter the behaviour of the algorithms used. For the purposes of this project, the edge detection algorithms are the most important, although the use of smoothing has also been explored to enhance the results of the edge detection.

The original SUSAN program uses images in PGM format as both input and output, where the resulting image can consist of the original image with the edges or corners highlighted, or a smoothed picture, or an image with only the


highlighted edges shown. This latter kind of output is the one that will be used for the purposes of the project.

[Diagram with the following elements: original image (PGM format); SUSAN edge detection; edge detected image (PGM format)]

Figure 5-2 Image processing using SUSAN

The program was modified to receive an array containing the data of the image. All the changes done by the processing are applied directly on the array, so that it can be passed to the next stage.

5.2.1 PGM image files

This is a very simple format, which is basically a bitmap holding the values for each individual pixel in the image. It also has a header that defines the properties of the image. The header can have any number of comment lines, beginning with the character #, but must also have at least three lines with this information:

An identifier for the encoding method
The size of the image
The maximum value that pixels can have

The identifier of the format is of the form P# where the # is a number representing [Bourke1997]:

P3    Colour image, written in ASCII
P6    Colour image, raw (binary) format
P2    Greyscale image, written in ASCII
P5    Greyscale image, raw (binary) format

In the line immediately following the header comes the data for the image. Colour images have 3 values for each pixel, representing the RGB values, while greyscale images consist of a single value per pixel. If the encoding of the image is ASCII, then the numerical value of the intensity is written in text format, with the values separated by white space. Binary images store each value as a single byte, one right after another. For this reason, images encoded in this format are limited to brightness values in the range of 0-255.

The SUSAN program only recognizes PGM files with the header P5, restricting the input to greyscale images with a maximum of 256 intensities. The lack of colour in the format is not a concern, as colour is not used in raw MRI images, but the limited range of values for a pixel means that the input must be converted, possibly losing some detail in the process.
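As an illustration of the format just described, the following C sketch writes a P5 image and parses a P5 header, skipping comment lines. It is not taken from SUSAN or from the program developed; the function names `write_pgm`, `next_token` and `read_pgm_header` are hypothetical, and the maximum value is fixed at 255 as an assumption that matches the restriction discussed above.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Writes a binary greyscale (P5) image with a maximum pixel value of 255.
   Returns 0 on success, -1 on a write error. */
int write_pgm(FILE *out, const unsigned char *pixels, int width, int height)
{
    size_t count = (size_t)width * (size_t)height;
    if (fprintf(out, "P5\n%d %d\n255\n", width, height) < 0) return -1;
    return fwrite(pixels, 1, count, out) == count ? 0 : -1;
}

/* Reads the next header token, skipping white space and '#' comment lines.
   Consumes the single white space character that ends the token. */
static int next_token(FILE *in, char *token, size_t size)
{
    int c = fgetc(in);
    size_t n = 0;
    while (c != EOF) {
        if (c == '#') {
            while (c != EOF && c != '\n') c = fgetc(in);   /* skip comment */
        } else if (c == ' ' || c == '\t' || c == '\n' || c == '\r') {
            c = fgetc(in);                                 /* skip space */
        } else {
            break;
        }
    }
    while (c != EOF && c != ' ' && c != '\t' && c != '\n' && c != '\r'
           && n + 1 < size) {
        token[n++] = (char)c;
        c = fgetc(in);
    }
    token[n] = '\0';
    return n > 0;
}

/* Parses a P5 header. On success returns 0 and leaves the stream positioned
   at the first pixel byte; returns -1 for any other kind of file. */
int read_pgm_header(FILE *in, int *width, int *height, int *maxval)
{
    char tok[16];
    if (!next_token(in, tok, sizeof tok) || strcmp(tok, "P5") != 0) return -1;
    if (!next_token(in, tok, sizeof tok)) return -1;
    *width = atoi(tok);
    if (!next_token(in, tok, sizeof tok)) return -1;
    *height = atoi(tok);
    if (!next_token(in, tok, sizeof tok)) return -1;
    *maxval = atoi(tok);
    return (*width > 0 && *height > 0 && *maxval > 0) ? 0 : -1;
}
```

After `read_pgm_header` succeeds, the pixel data can be read with a single `fread` of `width * height` bytes, since each pixel occupies one byte.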


A normalization function was implemented in the program developed, to adjust the brightness of each pixel to fit into the range between 0 and 255. The samples available encode the images with each pixel having 2 bytes to store its brightness value, while the images used by SUSAN can only have one byte per pixel. Thus, the input images are read as unsigned short, and then stored as unsigned char, after normalizing the values. A first pass over the image is done to find the greatest and lowest brightness levels in each slice, and the values found are used to create a factor:

factor = (float) (255) / (maxPixel - minPixel + 1);

Then, for each pixel, its new brightness level is computed by:

intensity = input[i] - minPixel;
data[i] = (unsigned char) (intensity * factor);

The sample files available had the problem of containing very different maximum and minimum values: one of them had values in the range 0 to 65535, while another had values between 2 and 4095. Performing the normalization over the images in these files has the advantage of converting the input to the same range, which can then be processed in a consistent way from this point on.
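Putting the two passes together, the normalization might look like the following sketch. The expressions for `factor`, `intensity` and `data[i]` are the ones given above; the function name `normalize_slice`, its signature and the handling of an empty slice are assumptions made for illustration.

```c
#include <stddef.h>

/* Rescales the 16-bit pixels of one slice to 8-bit values for SUSAN. */
void normalize_slice(const unsigned short *input, unsigned char *data,
                     size_t count)
{
    if (count == 0) return;

    /* First pass: find the lowest and greatest brightness in the slice. */
    unsigned short minPixel = input[0], maxPixel = input[0];
    for (size_t i = 1; i < count; i++) {
        if (input[i] < minPixel) minPixel = input[i];
        if (input[i] > maxPixel) maxPixel = input[i];
    }

    /* The + 1 keeps the divisor non-zero when every pixel is equal. */
    float factor = (float) (255) / (maxPixel - minPixel + 1);

    /* Second pass: shift each pixel to start at 0, then scale it. */
    for (size_t i = 0; i < count; i++) {
        int intensity = input[i] - minPixel;
        data[i] = (unsigned char) (intensity * factor);
    }
}
```

One consequence of the `+ 1` in the divisor is that the brightest pixel maps to a value slightly below 255. For a slice with values between 2 and 4095, the value 4095 becomes 254.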

5.2.2 SUSAN algorithms

This is a general description of the algorithms used to detect edges and to smooth images. Both of them have common functions for the pre-processing of the images.

Look-up-table (LUT): The LUT is the first thing created in both modes. It is an array containing all the possible differences in the intensity of a pixel. To store the LUT, an array of size 516 is created. In it, all the possible differences between two pixels in the range from 0 to 255 are stored, including the negative values. The creation of the LUT is affected by an input parameter, the Brightness Threshold (BT), which is the minimum difference between two pixels for them to be considered as belonging to different areas of the image. Its value defaults to 20, but can be specified as an argument to the program.

The value of the BT can be altered to make the algorithm more tolerant of noise in the image. A low threshold will find edges with greater ease, but is also very susceptible to any noise in the image. Using greater values of the threshold will make the program find fewer edges, and thus avoid the effects of noise, but can also prevent the program from finding the true edges of an image. When smoothing, the BT adjusts how much the image is smoothed. Here, a larger value of the parameter will produce a smoother, and thus blurrier, image.


The values stored in the LUT are based on the BT parameter. The value stored at each position k of the array is the result of the formula:

$$100 \, \exp\!\left(-\left(\frac{k}{BT}\right)^{n}\right)$$

where k is the index of the array, from -256 to 256, and n is either 2 or 6. This creates values from 0 to 100 that will be the brightness values assigned to pixels with a brightness difference corresponding to the one in the LUT.

Masks: The algorithms can use two kinds of masks to analyse the area surrounding a pixel. The default is a circular mask of 37 pixels, with the following shape:

  XXX
 XXXXX
XXXXXXX
XXX+XXX
XXXXXXX
 XXXXX
  XXX

The other type of mask is a square of 9 pixels, and is used when the program is called with a special parameter. It provides a much faster evaluation.

XXX
X+X
XXX

The tests carried out showed that the larger mask gave better results for this project, as it was able to consider a larger area around each pixel, and this was especially useful because of the noise found in most of the samples.

Edge Detection: The algorithm used runs over the whole image; at each pixel, the brightness difference between the pixel and each of the pixels in the mask is evaluated. This difference is used as the index for the LUT, and the value returned is added to those of the other pixels in the mask. The resulting sum is the new value assigned to the pixel. Because the LUT returns values close to 100 for similar pixels and close to 0 for very different ones, a small sum means that the pixel and many of those surrounding it have very large differences. The sum is therefore compared against a threshold, and when it falls below that threshold the pixel is considered to be part of an edge.

Smoothing: The image is first enlarged to make enough space for the mask to fit at the edges. To achieve this, the rows at the top and bottom of the image are repeated a number of times equal to half the size of the mask. The same is done with the columns at the sides. Once the image is enlarged, the same process as for edge detection is followed. The brightness difference values are computed for each pixel in the mask, and an average is taken from all the values in the mask. The final average is assigned as the new value for the current pixel.


5.2.3 Testing of the SUSAN algorithm

The usefulness of the SUSAN algorithms for the purpose required was evaluated using simplified test images and running the edge detection under various scenarios of noise in the data, and different thresholds. The base images used for the tests were produced using the GIMP graphics package on Linux. The first step was to create the images that the algorithm is supposed to obtain: completely black images with only the white borders for the edges that must be found (Figure 5-3, Figure 5-5). The space between the borders was then filled with white, to simulate the skin tissue as it appears in MRI images (Figure 5-4, Figure 5-6).

Figure 5-3 Squares base image

Figure 5-4 Squares sample image

Figure 5-5 Diamonds base image

Figure 5-6 Diamonds sample image

Figure 5-7 Stylised version of the human head

The most basic test image is a black image with a white square in it, and another black square inside the white one. The second is a simple black image with a hollow white diamond in the middle. A more elaborate test


image is a stylised version of an MRI slice of a human head, including the main features relevant to this project, such as the skin, bone, and other internal tissues (Figure 5-7).

Additional noisy images were then created from the base samples, adding or subtracting an arbitrary amount to the value of each pixel. The amount is generated randomly between 0 and a noise factor, which is the largest number that can be generated. The noise factor was varied in increments of 50, from the base case of 0, up to the maximum value for a pixel in the image. In the images tested, the maximum value is 255, so the highest noise factor used is 250, thus obtaining 6 levels of noise for the tests (Table 5-1). After a random number has been added to each pixel, the final value is clamped to remain within the limits of 0 and 255.

The SUSAN program was then run over these noisy data, using different values for the brightness threshold. The resulting edge detections were compared against the base images that have only the outer edges. The comparison is made pixel by pixel, counting the number of pixels whose values differ between the base image and the one obtained through processing.

Table 5-1 Sample test images with varying levels of noise

Noise Level: 000

Noise Level: 050

Noise Level: 100