1 Universal Adversarial Audio Perturbations

Transcript of 1 Universal Adversarial Audio Perturbations

1

Universal Adversarial Audio PerturbationsSajjad Abdoli, Luiz G. Hafemann, Jerome Rony, Ismail Ben Ayed, Patrick Cardinal and Alessandro L.

Koerich

Abstract—We demonstrate the existence of universal adversarial perturbations, which can fool a family of audio classificationarchitectures, for both targeted and untargeted attack scenarios. We propose two methods for finding such perturbations. The firstmethod is based on an iterative, greedy approach that is well-known in computer vision: it aggregates small perturbations to the inputso as to push it to the decision boundary. The second method, which is the main contribution of this work, is a novel penaltyformulation, which finds targeted and untargeted universal adversarial perturbations. Differently from the greedy approach, the penaltymethod minimizes an appropriate objective function on a batch of samples. Therefore, it produces more successful attacks when thenumber of training samples is limited. Moreover, we provide a proof that the proposed penalty method theoretically converges to asolution that corresponds to universal adversarial perturbations. We also demonstrate that it is possible to provide successful attacksusing the penalty method when only one sample from the target dataset is available for the attacker. Experimental results on attackingvarious 1D convolutional neural network architectures have shown attack success rates higher than 85.0% and 83.1% for targeted anduntargeted attacks, respectively using the proposed penalty method.

Index Terms—adversarial perturbation, deep learning, audio processing, audio classification.

F

1 INTRODUCTION

D EEP learning models have been achieving state-of-the-art performance in various problems, notably in

image recognition [1], natural language processing [2], [3]and speech processing [4], [5]. However, recent studieshave demonstrated that such deep models are vulnerableto adversarial attacks [6], [7], [8], [9], [10], [11]. Adversarialexamples are carefully perturbed input examples that canfool a machine learning model at test time [6], [12], posingsecurity and reliability concerns for such models. The threatof such attacks has been mainly addressed for computervision tasks [9], [13]. For instance, Moosavi-Dezfooli etal. [14] have shown the existence of universal adversarialperturbation, which, when added to an input image, causesthe input to be misclassified with high probability. For theseuniversal attacks, the generated vector is independent fromthe input examples.

End-to-end audio classification systems have been gain-ing more attention recently [15], [16], [17], [18]. In suchsystems, the input to the classifier is the audio wave-form. Moreover, there have been some studies that embedtraditional signal processing techniques into the layers ofconvolutional neural networks (CNNs) [19], [20], [21]. Forsuch audio classification systems, the effect of adversarialattacks is not widely addressed [22], [23]. Creating attacksto threaten audio classification systems is challenging, duemainly to the signal variability in the time domain [22].

In this paper, we demonstrate the existence of universaladversarial perturbations, which can fool a family of audioclassification architectures, for both targeted and untargetedattack scenarios. We propose two methods for finding such

• Authors are with Ecole de Technologie Superieure (ETS), Universite duQuebec, Montreal, QC, CanadaE-mail: [email protected], [email protected], [email protected], {ismail.benayed, patrick.cardinal, alessandro.koerich}@etsmtl.ca

perturbations. The first method is based on the greedy-approach principle proposed by Moosavi-Dezfooli et al. [14],which finds the minimum perturbation that sends examplesto the decision boundary. The second method, which isthe main contribution of this work, is a novel penaltyformulation, which finds targeted and untargeted univer-sal adversarial perturbations. Differently from the greedyapproach, the penalty method minimizes an appropriate ob-jective function on a batch of samples. Therefore, it producesmore successful attacks than the previous method when thenumber of training samples is limited. We also show thatusing this method, it is possible to provide successful attackswhen only one sample from the target dataset is available tothe attacker. Moreover, we provide a proof that the proposedpenalty method theoretically converges to a solution thatcorresponds to universal adversarial perturbations. Bothmethods are evaluated on a family of audio classifiers basedon deep models for environmental sound classification andspeech recognition. The experimental results have shownthat both proposed methods can attack deep models, whichare used as target models, with a high success rate.

This paper is organized as follows: Section 2 presents anoverview of adversarial attacks on deep learning models.Section 3 presents the proposed methods to craft univer-sal audio adversarial perturbations. Section 4 presents thedataset, the target models used to evaluate the proposedmethods as well as the experimental results on two bench-marking datasets. are presented in Section 4.1. The conclu-sion and perspective of future work is presented in the lastsection.

2 ADVERSARIAL MACHINE LEARNING

Research on adversarial attacks has attracted considerableattention recently, due to its impact on the reliability ofshallow and deep learning models for computer vision tasks[8]. For a given example x, an attack can find a small

arX

iv:1

908.

0317

3v5

[cs

.LG

] 1

7 N

ov 2

020

2

perturbation δ, often imperceptible to a human observer, sothat an example x = x + δ is misclassified by a machinelearning model [6], [24], [25]. The attacker’s goal may covera wide range of threats like privacy violation, availability vi-olation and integrity violation [12]. Moreover, the attacker’sgoal may be specific (targeted), with inputs misclassified asa specific class or generic (untargeted), where the attackersimply wants to have an example classified differently fromits true class [12]. Attacks generated by targeting a specificclassifier are often transferable to other classifiers (i.e. alsoinduce misclassification on them) [26], even if they havedifferent architectures and do not use the same trainingset [6]. It has been also shown that such attacks can beapplied in the physical world too. For instance, Kurakin etal. [27] showed that printed adversarial examples were stillmisclassified after being captured by a camera; Athalye etal. [28] presented a method to generate adversarial examplesthat are robust under translation and scale transformations,showing that such attacks induce misclassification evenwhen the examples correspond to different viewpoints.

Research on adversarial attacks on audio classificationsystems is quite recent. Some studies focused mainly onproviding inaudible and hidden targeted attacks on thesystems. In such attacks, new audio examples are synthe-sized, instead of adding perturbations to actual inputs [29],[30]. Other works focus on untargeted attacks on speechand music classification systems [31], [32]. Du et al. [33]proposed a method based on particle swarm optimizationfor targeted and untargeted attacks. They evaluated theirattacks on a range of applications such as speech commandrecognition, speaker recognition, sound event detection andmusic genre classification. Alzantot et al. [34] proposed asimilar approach that uses a genetic algorithm for craftingtargeted black-box attacks on a speech command classifica-tion system [35], which achieved 87% of success. Carlini etal. [22] proposed a targeted attack on the speech processingsystem DeepSpeech [15], which is a state-of-the-art speech-to-text transcription neural network. A penalty method isused in such attacks, which achieved 100% of success.

Most studies consider that the attacker can directly ma-nipulate the input of the classifiers. Crafting audio attacksthat can work on the physical world (i.e. played over-the-air) presents challenges such as being robust to backgroundnoise and reverberations. Yakura et al. [36] showed thatover-the-air attacks are possible, at least for a dataset ofshort phrases. Qin et al. [37] also reported successful ad-versarial attacks on automatic speech recognition systems,which remain effective after applying realistic simulatedenvironmental distortions.

Universal adversarial perturbations are effective typeof attacks [14] because the additive noise is independentof the input examples, but once added to any example,it may fool a deep model to misclassify such an exam-ple. Moosavi-Dezfooli et al. [14] proposed a greedy algo-rithm to provide such perturbations for untargeted attacksto images. The perturbation is generated by aggregatingatomic perturbation vectors, which send the data points tothe decision boundary of the classifier. Recently, Moosavi-Dezfooli et al. [38] provided a formal relationship betweenthe geometry of the decision boundary and robustness touniversal perturbations. They have also shown the strong

vulnerability of state-of-the-art deep models to universalperturbations. Metzen et al. [39] generalized this idea to pro-vide attacks against semantic image segmentation models.Recently, Behjati et al. [40] generalized this idea to attack atext classifier in both targeted and non-targeted scenarios. Ina different approach, Hayes et al. [41] proposed a generativemodel for providing such universal perturbations. Recently,Neekhara et al. [42] were also inspired by this idea togenerate universal adversarial perturbations which causemis-transcription of audio signals of automatic transcriptionsystems. They reported success rates up to 88.24% on thehold-out set of the pre-trained Mozilla DeepSpeech model[15]. Nonetheless, their method is designed only for untar-geted attacks. For audio classification systems, the impact ofsuch perturbations can be very strong if these perturbationscan be played over the air even without knowing what thetest examples would look like.

In this work we show the existence of UAPs for attackingseveral audio processing models in both targeted and alsountargeted scenarios. The previous studies on UAPs forattacking audio processing systems, however, have focusedonly on untargeted attacking scenarios. We show that theiterative method proposed by Moosavi-Dezfooli [14] canbe generalized for attacking audio models. As a part ofthis algorithm, we show that the decoupled direction andnorm (DDN) attack [43], which was originally proposedfor targeting image processing models, can also be usedin an iterative method for targeting audio models. Besides,we also propose a novel penalty optimization formulationfor generating UAPs and we address new challenges suchas generating perturbations when a single audio exampleis available to the attacker, as well as the transferabilityof UAPs in both targeted and untargeted scenarios. It isshown that the proposed methods generate UAPs, whichgeneralize well across data points and they are to someextent model-agnostic (doubly universal) where UAPs aregeneralizable across different models specially for the un-targeted attacking scenario.

3 UNIVERSAL ADVERSARIAL AUDIO PERTURBA-TIONS

In this section, we formalize the problem of crafting uni-versal audio adversarial perturbations and propose twomethods for finding such perturbations. The first methodis based on the greedy-approach principle proposed byMoosavi-Dezfooli et al. [14] which finds the minimum per-turbation that sends examples to the decision boundary ofthe classifier or inside the boundary of the target class foruntargeted and targeted perturbations, respectively. The sec-ond method, which is the main contribution of this work, isa penalty formulation, which finds a universal perturbationvector that minimizes an objective function.

Let µ be the distribution of audio samples in Rd andk(x) = arg maxy P (y|x, θ) be a classifier that predicts theclass of the audio sample x, where y is the predicted labelof x and θ denotes the parameters of the classifier. Ourgoal is to find a vector v that, once added to the audiosamples can fool the classifier for most of the samples. Thisvector is called universal as it is a fixed perturbation thatis independent of the audio samples and, therefore, it can

3

be added to any sample in order to fool a classifier. Theproblem can be defined such that k(x + v) 6= k(x) for auntargeted attack and, for a targeted attack, k(x + v) = yt,where yt denotes the target class. In this context, the uni-versal perturbation is a vector with a sufficiently small `pnorm, where p ∈ [1,∞), which satisfies two constraints [14]:‖v‖p ≤ ξ and Px∼µ(k(x + v) 6= k(x)) ≥ 1 − δ, where ξcontrols the magnitude of the perturbation and δ controlsthe desired fooling rate. For a targeted attack, the secondconstraint is defined as Px∼µ(k(x + v) = yt) ≥ 1− δ.

3.1 Iterative Greedy AlgorithmLet X = {x1, . . . ,xm} be a set of m audio files sampledfrom the distribution µ. The greedy algorithm proposed byMoosavi-Dezfooli et al. [14] gradually crafts adversarial per-turbations in an iterative manner. For untargeted attacks, ateach iteration, the algorithm finds the minimal perturbation∆vi that pushes an example xi to the decision boundary,and adds the current perturbation to the universal pertur-bation. In this study, a targeted version of the algorithm isalso proposed such that the universal perturbation addedto the example must push it toward the decision boundaryof the target class. In more details, at each iteration of thealgorithm, if the universal perturbation makes the modelmisclassify the example, the algorithm ignores it, otherwise,an extra ∆vi is found and aggregated to the universalperturbation by solving the minimization problem with thefollowing constraints for untargeted and targeted attacksrespectively:

∆vi ← arg minr‖r‖2

s.t. k (xi + v + r) 6= k (xi) ,

or k (xi + v + r) = yt.

(1)

In order to find ∆vi for each sample of the dataset, anyattack that provides perturbation that misclassifies the sam-ple, such as Carlini and Wanger `2 attack [8] or DDN attack[43], can be used. Moosavi-Dezfooli et al. [44] used Deepfoolto find such a vector.

In order to satisfy the first constraint (||v‖p ≤ ξ), theuniversal perturbation is projected on the `p ball of radius ξand centered at 0. The projection function Pp,ξ is formulatedas:

Pp,ξ(v) = arg minv′

‖v − v′‖2 s.t. ‖v′‖p ≤ ξ. (2)

The termination criteria for the algorithm is defined suchthat the Attack Success Rate (ASR) on the perturbed trainingset exceeds a threshold 1− δ. In this protocol, the algorithmstops for untargeted perturbations when:

ASR (X,v) :=1

m

m∑i=1

1{k (xi + v) 6= k (xi)} ≥ 1− δ, (3)

where 1{·} is the true-or-false indicator function. For atargeted attack, we replace inequality k (xi + v) 6= k(xi)by k (xi + v) = yt in Eq. (3). The problem with iterativeGreedy formulation is that the constraint is only definedon universal perturbation (‖v‖p ≤ ξ). Therefore, the sum-mation of the universal perturbation with the data pointsresults in an audio signal that is out of a specific range such

as [0, 1], for almost all audio samples, even by selecting asmall value for ξ. In order to solve this problem, we mayclip the value of each resulting data point to a valid range.

3.2 Penalty Method

The proposed penalty method minimizes an appropriateobjective function on a batch of samples from a datasetfor finding universal adversarial perturbations. In the caseof noise perception in audio systems, the level of noiseperception can be measured using a realistic metric suchas the sound pressure level (SPL). Therefore, the SPL is usedinstead of the `p norm. In this paper, such a measure isused in one of the objective functions of the optimizationproblem, where one of the goals is to minimize the SPL ofthe perturbation, which is measured in decibel (dB) [22]. Theproblem of crafting a perturbation in a targeted attack canbe reformulated as the following constrained optimizationproblem:

minimize SPL(v)

s.t. yt = arg maxy

P (y|xi + v, θ)

and 0 ≤ xi + v ≤ 1 ∀i

(4)

For untargeted attacks we use yl 6= arg maxy P (y|xi + v, θ),where yl is the legitimate class.

Different from the iterative Greedy formulation, in theproposed penalty method the second constraint is definedon the summation of the data points and universal pertur-bation (xi + v) to keep the perturbed example in a validrange. As the constraint of the DDN attack, which is usedin the iterative greedy algorithm, is that the data must bein range [0, 1], for a fair comparison between methods, weimpose the same constraint on the penalty method. This boxconstraint should be valid for all audio samples.

In Eq. (4), the pressure level of an audio waveform(noise) can be computed as:

SPL(v) = 20 log10 P (v), (5)

where P (v) is the root mean square (RMS) of the perturba-tion signal v of length N , which is given by:

P (v) =

√√√√ 1

N

N∑n=1

v2n, (6)

where vn denotes the n-th component of the array v.The optimization problem introduced in Eq. (4) can

be solved by a gradient-based algorithm, which howeverdoes not enforce the box constraint. Therefore, we need tointroduce a new parameter w, which is defined in Eq. (7) toensure that the box constraint is satisfied.

This variable change is inspired by the `2 attack ofCarlini and Wagner [8].

wi =1

2(tanh(x′i + v′) + 1), (7)

where x′i = arctanh((2xi − 1) ∗ (1 − ε)) and v′ = arctanh((2v− 1) ∗ (1− ε)) are the audio example xi and the pertur-bation vector v represented in the tanh space, respectively,and ε is a small constant that depends on the extreme valuesof the transformed signal that ensures that x′i and v′ does

4

not assume infinity values. For instance, ε=1e−7 is a suitablevalue for the datasets used in Section 4. The audio examplexi must be transformed to tanh space and then Eq. (7) canbe used to transform the perturbed data to the valid range of[0, 1]. Since−1 ≤ tanh(x′i+v′) ≤ 1 then 0 ≤ wi ≤ 1 and thesolution will be valid according to the box constraint. Referto Appendix B for details. As a result of this transformation,the produced perturbation vector is also in tanh space, andv can be written as:

v =tanh(v′) + 1− ε

2− 2ε. (8)

In order to solve the optimization problem defined inEq. (4), we rewrite variable v′ as follows:

v′ =1

2ln

(wi

1−wi

)− x′i. (9)

The details of expressing v′ as a function of wi are shown inAppendix A. Therefore, we propose a penalty method thatoptimizes the following objective function:

minwi

L(wi, t) = SPL

(12 ln

(wi

1−wi

)− x′i

)+ c.G(wi, t),

G(wi, t) = max{maxj 6=t{f(wi)j} − f(wi)t,−κ}

(10)

where t is the target class, f(wi)j is the output of the pre-softmax layer (logit) of a neural network for class j, c is apositive constant known as ”penalty coefficient” and κ con-trols the confidence level of sample misclassification. Thisformulation enables the attacker to control the confidencelevel of the attack. For untargeted attacks, we modify theobjective function of Eq. (10) as:

minwi

L(wi, yl) = SPL

(12 ln

(wi

1−wi

)− x′i

)+ c.G(wi, yl),

G(wi, yl) = max{f(wi)yl −maxj 6=yl{f(wi)j} ,−κ}

(11)

where yl is the legitimate label for the i-th sample of thebatch. G(wi, t) is the hinge loss penalty function, which fora targeted attack and κ = 0, must satisfy:

G(wi, t) = 0 if yt = arg maxy

P(y|wi, θ),

G(wi, t) > 0 if yt 6= arg maxy

P(y|wi, θ),(12)

The same properties of the penalty function are also validfor untargeted perturbations. This penalty function is con-vex and has subgradients therefore, a gradient-based opti-mization algorithm, such as the Adam algorithm [45] canbe used to minimize the finite-sum loss defined in Eqs. (10)and (11). Several other optimization algorithms like Ada-Grad [46], standard gradient descent, gradient descent withNesterov momentum [47] and RMSProp [48] have also beenevaluated but Adam converges in fewer iterations and itproduces relatively similar solutions. Algorithm 1 presentsthe pseudo-code of the proposed penalty method.

Theorem 1: Let{vk}

, k = 1, ...,∞ be the sequencegenerated by the proposed penalty method in Algorithm1 for k iterations. Let v be the limit point of

{vk}

. Then

any limit point of the sequence is a solution to the originaloptimization problem defined in Eq. (4)1.

Proof: According to Eqs. (8) and (9), vk can be definedas:

v′k

=1

2ln

(wki

1−wki

)− x′i,

vk =tanh(v′

k

) + 1− ε2− 2ε

(13)

Before proving Theorem 1, a useful Lemma is also presentedand proved.

Lemma 1: Let v∗ be the optimal value of the originalconstrained problem defined in Eq. (4). Then SPL (v∗) ≥L(wki , t)≥ SPL

(vk)∀k.

Proof of Lemma 1:SPL(v∗) = SPL(v∗) + c.G(w∗i , t) (∵ G(w∗i , t) = 0)

≥ SPL(vk) + c.G(wki , t) (∵ c > 0, G(wk

i , t) ≥ 0,

wki minimizes L(wk

i , t))

≥ SPL(vk)

∴ SPL(v∗)≥L(wki , t) ≥ SPL(vk)∀k.

Proof of Theorem 1. SPL is a monotonically increasingfunction and continuous. Also, G is a hinge function, whichis continuous. L is the summation of two continuous func-tions. Therefore, it is also a continuous function. The limitpoint of

{vk}

is defined as: v = limk→∞ vk and since SPL isa continuous function, SPL(v) = limk→∞ SPL(vk). We canconclude that:

L∗ = limk→∞ L(wki , t) ≤ SPL(v∗) (∵ Lemma 1)

L∗ = limk→∞ SPL(vk) + limk→∞ c.G(wki , t) ≤ SPL(v∗)

L∗ = SPL(v) + limk→∞ c.G(wki , t) ≤ SPL(v∗).

If vk is a feasible point for the constrained optimizationproblem defined in Eq. (4), then, from the definition of func-tion G(.), one can conclude that limk→∞ c.G(wk

i , t) = 0.Then:

L∗ = SPL(v) ≤ SPL(v∗)

∴ v is a solution of the problem defined in Eq. (4)

4 EXPERIMENTAL PROTOCOL AND RESULTS

The proposed UAP is evaluated on two audio tasks: envi-ronmental sound classification and speech command recog-nition. For environmental sound classification, we haveused the full audio recordings of UrbanSound8k dataset [49]downsampled to 16 kHz for training and evaluating themodels as well as for generating adversarial perturbations.This dataset consists of 7.3 hours of audio recordings splitinto 8,732 audio clips of up to slightly more than threeseconds. Therefore, each audio sample is represented by a50,999-dimensional array. The audio clips were categorizedinto 10 classes. The dataset was split into training (80%),validation (10%) and test (10%) set. For generating pertur-bations, 1,000 samples of the training set were randomly

1. Theorem 1 applies to the context of convex optimization. Theneural network is defined as a functional constraint on the optimizationproblem defined in Eq. (4). Since neural networks are not convex, afeasible solution to the optimization problem may not be unique and itis not guaranteed to be a global optimum. Moreover, the Theorem 1 isproved based on the assumption defined in Eq. (12) i.e. κ=0

5

Algorithm 1: Penalty method for universal adver-sarial audio perturbations.

Input: Data points X = {x1, . . . ,xm} withcorresponding legitimate labels Y , desiredfooling rate on perturbed samples δ, andtarget class t (for targeted attacks)

Output: Universal perturbation signal v′

1 initialize v′ ← 0,2 while ASR (X,v′) ≤ 1− δ do3 Sample a mini-batch of size S from (X,Y )4 g← 05 for i← 1 to S do6 Transform the audio signal to tanh space:

x′i = arctanh ((2xi − 1) ∗ (1− ε)),7 Compute the transformation of the perturbed

signal for each sample i from mini-batch:wi = 1

2 (tanh(x′i + v′) + 1),Compute the gradient of the objectivefunction, i.e., Eq. (10) or Eq. (11), w.r.t. wi:

8 if targeted attack: then9 g← g + ∂L(wi,t)

∂wi

else10 g← g + ∂L(wi,yi)

∂wi

11 Compute update ∆v′ using g according toAdam update rule [45]

12 apply update: v′ ← v′ + ∆v′

return v

selected, and for penalty-based method a mini-batch size of100 samples is used. The perturbations were evaluated onthe whole test set (874 samples).

For speech command recognition, we have used theSpeech Command dataset, which consists of 61.83 hoursof audio sampled at 16 kHz [50], and categorized into 32classes. The training set consists of 17.8 hours of speechcommand recordings split into 64,271 audio clips of onesecond. The test set consists of 158,537 audio samples corre-sponding to 44.03 hours of speech commands. This datasetwas used as the benchmark dataset for TensorFlow SpeechRecognition (TFSR) challenge in 20172. This challenge wasbased on the principles of open science so, data, source code,and evaluation methods of over 1,300 participant teamsare publicly available for commercial and non-commercialusage. So, as it is shown in this study, it is quite straightfor-ward for the adversary to attack the model.

Signal-to-noise Ratio (SNR) is used as a metric to mea-sure the level of noise with respect to the original signal.This metric, which is also measured in dB, is used for mea-suring the level of the perturbation of the signal after addingthe universal perturbation. This measure is also used inprevious works for evaluating the quality of the generatedadversarial audio attacks [32], [33], and it is defined as:

SNR(x,v) = 20 log10

P (x)

P (v), (14)

2. https://www.kaggle.com/c/tensorflow-speech-recognition-challenge

where P (.) is the power of the signal defined in Eq. (6).A high SNR indicates that a low level of noise is added tothe audio sample by the universal adversarial perturbation.Additionally, the relative loudness of perturbation withrespect to audio sample, measured in dB, is also applied:

ldBx(v) = ldB(v)− ldB(x) (15)

where ldB(x) = maxn(20 log10(xn)) and xn denotes then-th component of the array x. This measure is similar to`∞ norm in image domain. Smaller values indicate quieterdistortions. This measure is also applied in recent studiesfor quality assessment of adversarial attacks against audioprocessing models [22], [42], [53].

We have chosen a family of diverse end-to-end archi-tectures as our target models. This selection is based onchoosing architectures which might learn representationsdirectly from the audio signal. We briefly describe thearchitecture of each model as follows. 1D CNN Rand, 1DCNN Gamma, ENVnet-V2, SincNet and SincNet+VGG19are just used for environmental sound classification whileSpchCMD model is used for speech command recognition.A detailed description of the architectures can be found insupplementary material.

1D CNN Rand [52]: This model consists of five one-dimensional convolutional layers (CLs). The output of CLsis used as input to two fully connected (FC) layers followedby an output layer with softmax activation function. Theweights of all of the layers are initialized randomly. Thismodel was proposed by Abdoli et al. [52] for environmentalsound classification.

1D CNN Gamma [52]: This model is similar to 1D CNNRand except that it employs a Gammatone filter-bank in itsfirst layer. Furthermore, this layer is kept frozen during thetraining process. Gammatone filters are used to decomposethe input signal to appropriate frequency bands.

ENVnet-V2 [51]: The architecture for sound recognitionwas slightly modified to make it compatible with the inputsize of the downsampled audio samples of UrbanSound8kdataset. This architecture uses the raw audio signal as input,and it extracts short-time frequency features by using twoone-dimensional CLs followed by a pooling layer (PL). Itthen swaps axes and convolves in time and in frequencydomain the features using five two-dimensional CLs. TwoFC layers and an output layer with softmax activationfunction complete the network.

SincNet [19]: The end-to-end architecture for sound pro-cessing extracts meaningful features from the audio signal atits first layer. In this model, several sinc functions are used asband-pass filters and only low and high cutoff frequenciesare learned from audio. After that, two one-dimensional CLsare applied. Two FC layers followed by an output layer withsoftmax activation are used for classification.

SincNet+VGG19 [19]: This model uses sinc filters toextract features from the raw audio signal as in SincNet [19].After an one-dimensional maxpooling layer, the output isstacked along time axis to form a 2D representation. Thistime-frequency representation is used as the input to aVGG19 network [54] followed by a FC layer and an outputlayer with softmax activation for classification. This time-frequency representation resembles a spectrogram represen-tation of the audio signal.

6

0 20 40 60 80Confidence value

50.0

60.0

70.0

80.0

Mea

n AS

R (%

)

UntargetedTargeted

(a)

0 20 40 60 80Confidence value

18.018.519.019.520.020.521.0

Mea

n SN

R (d

B)

UntargetedTargeted

(b)

0 20 40 60 80Confidence value

14

13

12

11

10

Mea

n l d

B X(V

)

UntargetedTargeted

(c)

0 20 40 60 80Confidence value

75.0

80.0

85.0

90.0M

ean

ASR

(%)

UntargetedTargeted

(d)

0 20 40 60 80Confidence value

12

14

16

18

20

22

24

Mea

n SN

R (d

B)

UntargetedTargeted

(e)

0 20 40 60 80Confidence value

16

14

12

10

8

6

Mea

n l d

B X(V

)

UntargetedTargeted

(f)

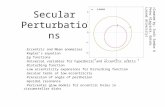

Fig. 1. Effect of different confidence values on the mean values of ASR, SNR and ldBx (v) for targeted and untargeted attacks. Top row (a, b, c):ENVnet-V2 [51] used as target model. Bottom row (d, e, f): 1D CNN Gamma [52] used as target model.

SpchCMD: This architecture was proposed by the win-ner of the TFSR challenge. The model is based on a CNN,which uses one-dimensional CLs and several depth-wiseCLs for extracting useful information from several chunksof raw audio. The model also uses an attention layer anda global average PL. According to the challenge rules,the model must handle silence signal and also unknownsamples from other proposed classes in Speech Commanddataset [50]. Therefore, the softmax layer has 32 outputs.

For the iterative method, several parameters must bechosen. In order to find the minimal perturbation ∆vi,we used the DDN `2 attack [43]. This attack is designedto efficiently find small perturbations that fool the model.The difference with DeepFool [44] is that it can be used forboth untargeted and targeted attacks, extending the iterativemethod to the targeted scenario. DDN was used with abudget of 50 steps and an initial norm of 0.2. Results arereported for p=∞ and we set ξ to 0.2 and 0.12 for untargetedand targeted attack scenarios, respectively. These valueswere chosen to craft perturbations in which the norm ismuch lower than the norm of the audio samples in thedataset.

For evaluating the penalty method we set the penaltycoefficient c to 0.2 and 0.15 for untargeted and targetedattack scenarios, respectively. The confidence value κ is setto 40 and 10 for crafting untargeted and targeted perturba-tions, respectively. For both methods, based on our initialexperiments, we found that these values are appropriate toproduce fine quality perturbed samples within a reasonablenumber of iterations. Fig. 1 shows the effect of differentconfidence values on mean ASR, mean SNR and meanldBx(v) for targeted and untargeted attacks on the ENVnet-V2 [51] and 1D CNN Gamma [52] models for the test set.For this experiment, 1,000 and 500 training samples for un-targeted and targeted attack scenarios are used, respectively.For targeted attacks, the target class is ”Gun shot”. Fig. 1shows that the ASR increases as confidence value increases.However, the SNR also decreases in the same way. Foruntargeted attacks, it has a more detrimental effect on themean ldBx(v) than targeted scenario. For both iterative and

penalty methods, we set the desired fooling rate on per-turbed training samples to δ=0.1. Both algorithms terminateexecution whether they achieve the desired fooling ratio, orthey reach 100 iterations. Both algorithms have been trainedand tested using a TITAN Xp GPU.

4.1 ResultsTable 1 shows the results of the iterative and penaltymethods against the five environmental sound classifica-tion models considered in this study. We evaluate bothtargeted and untargeted attacks in terms of mean ASR onthe training and test sets, as well as mean SNR and meanldBx(v) of the perturbed samples of the test set. For craftingthe perturbation vector 1,000 randomly chosen samples ofthe training set is used. For both untargeted and targetedattacks, the penalty method produces the highest ASR forall target models. Both methods produce relatively similarmean SNR on the test set. For targeted scenario, the penaltymethod produces better results in terms of mean ldBx(v) forattacking SincNet and SincNet+VGG19 models. The itera-tive method, however, works slightly better for attackingENVnet-V2 model. For the rest of the models, the differenceis negligible. The projection operation on `∞ ball used inthe iterative method is like clipping the perturbation vector.Since the maximum amplitude of the perturbation vectorsurpasses the limit (ξ= 0.12) while producing the perturba-tion, the projection operation causes the method to achievethe same mean ldBx(v) for all methods in this attacking sce-nario. For the untargeted scenario, the penalty method alsoproduces quieter perturbations in terms of mean ldBx(v) forattacking 1D CNN Gamma, SincNet and SincNet+VGG19models. The crafted universal perturbations produced fromthe training set by the penalty method generalize better thanthose produced by the iterative method for both attackingscenarios. We also observe a relatively low difference inASR between the training and test sets. The detailed resultsof the targeted attack scenario for each model is reported

7

TABLE 1Mean values of ASR, SNR and ldBx (v) on training and test sets for untargeted and targeted perturbations. Higher ASRs are in boldface.

Targeted Attack Untargeted Attack

Training Set Test Set Training Set Test Set

Method Model ASR ASR SNR (dB) ldBx (v) ASR ASR SNR (dB) ldBx (v)

Iterative

1D CNN Rand 0.926 0.672 25.321 -18.416 0.911 0.412 25.244 -14.2581D CNN Gamma 0.945 0.795 23.587 -18.416 0.904 0.737 22.922 -13.979ENVnet-V2 0.916 0.767 22.734 -18.416 0.910 0.669 24.960 -13.979SincNet 1.000 0.899 28.668 -21.044 0.915 0.886 24.025 -18.959SincNet+VGG19 0.985 0.872 26.203 -18.416 0.924 0.838 26.362 -14.007

Penalty

1D CNN Rand 0.917 0.854 23.468 -16.762 0.900 0.876 20.350 -13.3711D CNN Gamma 0.913 0.888 22.835 -17.375 0.901 0.858 20.551 -18.133ENVnet-V2 0.922 0.877 21.832 -14.198 0.900 0.831 18.727 -10.490SincNet 0.962 0.971 30.411 -31.916 0.900 0.919 29.972 -27.494SincNet+VGG19 0.916 0.898 26.736 -21.059 0.902 0.865 23.555 -17.759

TABLE 2Mean values of ASR, SNR and ldBx (v) on training and test sets for untargeted and targeted perturbations for targeting speech recognition model

(SpchCMD). Higher ASRs are in boldface.

Targeted Attack Untargeted Attack

Training Set Test Set Training Set Test Set

Method ASR ASR SNR (dB) ldBx (v) ASR ASR SNR (dB) ldBx (v) Model Acc.

Iterative 0.902 0.855 27.437 -18.924 0.901 0.834 28.524 -18.418 0.192

Penalty 0.903 0.850 26.728 -24.575 0.910 0.875 26.716 -21.462 0.191

in supplementary material. For a better assessment of theperturbations produced by both proposed methods, severalrandomly chosen examples of perturbed audio samples arepresented in supplementary material.

Table 2 shows the results achieved by iterative andpenalty methods for targeting the SpchCMD model. In orderto find the universal perturbation vector, 3,000 samples fromthe training set were randomly selected. All samples ofthe test set were used to evaluate the effectiveness of theperturbation vector. For targeting this model, the pertur-bation generated by the penalty method is also projectedaround the `2 ball according to Eq. (2) with radius ξ=6.Based on the preliminary experiments for this task, thisprojection produces slightly better results in terms of meanSNR and mean ldBx(v). For the targeted attack scenario,both methods produce similar results in terms of ASR andSNR however, penalty method generates higher-quality per-turbation vectors in terms of mean ldBx(v). For untargetedattacks, the penalty method produces better results in termsof mean ASR and mean ldBx(v) and both methods producesimilar perturbations in terms of SNR. Roughly speaking,SNRs higher than 20 are considered as acceptable ones. Asmentioned by Yang et al. [53], perturbed audio sampleswhich have ldBx(v) ranging from -15 dB to -45 dB aretolerable to human ears. From Tables 1 and 2, the meanSNR and ldBx(v) of the perturbed samples are mostly in thisrange. Neekhara et al. [42] also reported relatively similarresults (between -29.82 dB and -41.86 dB) for attacking aspeech-to-text model in an untargeted scenario. The modelpredictions after adding the perturbations were submittedto the evaluation system of the TFSR challenge to evaluatethe impact of the perturbations on the model accuracy.Table 2 shows that the accuracy of the winner speech recog-

nition model (Model Acc.) has dropped to near of randomguessing for the perturbations produced by both proposedalgorithms. The success rates reported in Tables 1 and 2 arealso statistically significant. Refer to supplementary materialfor details.

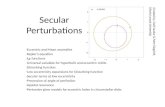

We now consider the influence of the number of trainingdata points on the quality of the universal perturbations.Fig. 2 shows the ASR and mean SNR achieved on thetest set with different number of data points, consideringtwo target models. The untargeted attack is evaluated onSincNet and the targeted attack is evaluated on 1D CNNGamma model. For targeted attacks, the target class is ”Gunshot”. For both targeted and untargeted scenarios, penaltymethod produces better ASR when the perturbations arecrafted with a lower number of data points. For the un-targeted scenario, iterative method produces perturbationswith a slightly better mean SNR than those produced bythe penalty method. However, this difference is perceptuallynegligible. When the number of data points are limited,the penalty method also produces better results in termsof mean ldBx(v). However, the iterative method producesslightly better results in terms of mean ldBx(v) when moredata points are available (e.g. more than 50 samples). For thetargeted attack scenario, when the number of data pointsare limited (e.g. lower than 100), the iterative method alsoproduces perturbations with a slightly better mean SNR.However, when the number of data points increases, thepenalty method produces better perturbations in terms ofSNR than those produced by the iterative method. For sucha scenario, the penalty method also produces quieter per-turbations in terms of mean ldBx(v). The Greedy algorithm

8

101 102 103

# data points

20.0

40.0

60.0

80.0

Mea

n AS

R (%

)

PenaltyIterative

(a)

101 102 103

# data points

25.0

27.5

30.0

32.5

35.0

37.5

Mea

n SN

R (d

B)

PenaltyIterative

(b)

101 102 103

# data points

24

22

20

18

16

Mea

n l d

B X(V

)

PenaltyIterative

(c)

101 102 103

# data points

60.0

70.0

80.0

90.0M

ean

ASR

(%)

PenaltyIterative

(d)

101 102 103

# data points

25

30

35

40

Mea

n SN

R (d

B)

PenaltyIterative

(e)

101 102 103

# data points

35

30

25

20

Mea

n l d

B X(V

)

PenaltyIterative

(f)

Fig. 2. Effect of the number of data points on the mean values of ASR, SNR and ldBx (v) for the test set. Top row (a, b, c): Targeted attack on the1D CNN Gamma model [52]. Bottom row (d, e, f): Untargeted attack on the SincNet model [19].

TABLE 3Results of the untargeted attack in terms of mean values of ASR, SNR and ldBx (v) on the test set. The perturbation is generated by a singlerandomly selected sample of each class. The proposed penalty method is used to generate attacks against the 1D CNN Gamma model [52].

Available Class

AI CA CH DO DR EN GU JA SI ST Mean

ASR 0.641 0.722 0.730 0.732 0.720 0.561 0.688 0.796 0.677 0.713 0.698SNR 17.718 18.317 19.597 18.813 16.699 19.039 17.908 20.046 18.014 18.743 18.489ldBx (v) -15.130 -16.574 -16.322 -16.052 -15.048 -16.963 -15.672 -15.510 -15.088 -16.086 -15.844

used in the iterative method is designed to generate pertur-bations with the least possible power level. At each iteration,if the perturbation vector misclassifies the example, it will beignored for the next iterations. Therefore, it is impossible forthe attacker to obtain higher ASRs at the expense of havinga universal perturbation with slightly higher SPL, especiallywhen the number of samples is limited. However, in thepenalty method, the algorithm is able to generate moresuccessful universal perturbations at the expense of havinga universal perturbation with a negligible higher powerlevel as long as the gradient-based algorithm can minimizethe objective functions defined in Eqs. (10) and (11). More-over, for all iterations of the penalty method, the algorithmexploits all available data to minimize the objective functionfor generating the universal perturbation.

Another advantage of the proposed penalty method isthat it is also able to generate perturbations when a singleaudio example is available to the attacker. For such an aim,a single audio sample of each class is randomly selectedfrom the training set, and the objective functions definedin Eqs. (10) and (11) are minimized iteratively using Al-gorithm 1 for targeted and untargeted attacks, respectively.For this experiment, we set the penalty coefficient c=0.2 andthe confidence value κ=90 for both untargeted and targetedattack scenarios. The algorithm is executed for 19 iterationsand the perturbation produced is used to perturb all audiosamples of the test set, which are then used to fool the 1DCNN Gamma model.

Table 3 shows the results of the untargeted attack interms of ASR and SNR on the perturbed samples of the test

set. Fig. 3 shows the results of the targeted attack in termsof SNR, ASR and mean ldBx(v), considering all classes. Inthis case, the perturbation is also generated from a singlerandomly selected audio sample of each class in order toattack the model for a specific target class. Mean ASR of0.698 and 0.602 are achieved for untargeted and targetedattack scenarios, respectively. Moreover, mean SNR of 18.489dB and 19.690 dB are also achieved for untargeted andtargeted attack scenarios, respectively. Mean ldBx(v) of -15.844 dB and 15.571 dB are also achieved for untargetedand targeted attack scenarios, respectively. Similar resultswere also obtained for all other target models.

Finally, Table 4 shows the transferability of perturbed audiosamples of the test set of UrbanSound8k dataset generatedby one model to other models. Generating transferableadversarial perturbations among the proposed models is achallenging task [23], [55]. Abdullah et al. [23] have shownthat transferability is challenging for most of the currentaudio attacks. This problem is still more challenging togradient-based optimization attacks. Liu et al. [56] intro-duced an ensemble-based approach that seems to be apromising research direction to produce more transferableaudio examples.

5 CONCLUSION

In this study, we proposed an iterative method and a penaltymethod for generating targeted and untargeted universaladversarial audio perturbations. Both methods were used

9

AI CA CH DO DR EN GU JA SI ST AVGTarget Class

AI

CA

CH

DO

DR

EN

GU

JA

SI

ST

AVG

Avai

labl

e Cl

ass

0.563 0.473 0.554 0.673 0.799 0.722 0.514 0.888 0.637 0.546 0.637

0.629 0.582 0.53 0.643 0.833 0.746 0.497 0.86 0.578 0.608 0.651

0.646 0.549 0.479 0.559 0.836 0.763 0.207 0.836 0.523 0.589 0.599

0.64 0.519 0.564 0.698 0.847 0.751 0.465 0.894 0.606 0.643 0.663

0.645 0.28 0.506 0.667 0.789 0.745 0.509 0.867 0.606 0.617 0.623

0.608 0.557 0.497 0.683 0.83 0.715 0.57 0.894 0.522 0.638 0.651

0.612 0.338 0.529 0.588 0.827 0.722 0.457 0.824 0.486 0.562 0.594

0.58 0.649 0.347 0.55 0.848 0.751 0.67 0.834 0.592 0.571 0.639

0.554 0.389 0.439 0.553 0.809 0.73 0.392 0.839 0.406 0.514 0.562

0.669 0.339 0.529 0.696 0.84 0.81 0.39 0.902 0.656 0.611 0.644

0.615 0.468 0.497 0.631 0.826 0.745 0.467 0.864 0.561 0.59 0.626

ASR

(a)

AI CA CH DO DR EN GU JA SI ST AVGTarget Class

AI

CA

CH

DO

DR

EN

GU

JA

SI

ST

AVG

Avai

labl

e Cl

ass

20.43 20.48 20.33 20.00 19.64 19.99 20.52 19.62 19.93 20.24 20.12

19.89 19.11 20.11 19.49 19.37 20.07 20.15 19.97 20.19 20.10 19.84

19.51 19.56 20.09 19.48 19.32 19.46 20.39 19.39 19.59 19.38 19.62

20.11 20.38 20.71 20.34 19.87 20.39 20.64 20.00 20.54 20.30 20.33

19.82 20.16 20.05 19.36 19.43 19.66 20.31 19.11 19.67 19.68 19.72

19.66 19.97 20.03 19.58 19.51 20.15 20.31 19.13 20.07 19.67 19.81

19.71 19.68 19.97 19.23 18.96 19.06 19.60 19.39 19.99 19.28 19.49

19.42 19.31 19.41 19.15 19.12 19.05 19.82 19.14 19.11 19.18 19.27

18.98 19.71 19.20 18.77 18.70 18.68 20.47 18.66 19.04 19.07 19.13

19.49 20.33 19.69 19.33 19.18 19.16 20.43 18.91 19.43 19.71 19.57

19.70 19.87 19.96 19.47 19.31 19.57 20.26 19.33 19.76 19.66 19.69

SNR

(b)

AI CA CH DO DR EN GU JA SI ST AVGTarget Class

AI

CA

CH

DO

DR

EN

GU

JA

SI

ST

AVG

Avai

labl

e Cl

ass

-16.822-15.002-17.225-15.621 -15.5 -15.632-14.959-15.423-15.235-16.388-15.781

-15.11 -15.268-15.893-15.331-15.044-15.013-14.859-15.065-15.253-15.228-15.206

-14.827-14.785-17.521-15.755-15.506-15.184-15.492-14.933-14.941-15.786-15.737

-15.738-15.243-16.971-16.745-15.641-15.445 -14.96 -15.169-15.328-15.083-15.632

-14.955-15.608-16.384-15.531-16.644 -15.28 -14.955-15.903-14.672 -15.18 -15.511

-15.676-14.938-17.259-15.275-15.528-16.418-14.738-15.362-15.288-15.524-15.601

-14.977-14.912-16.437-15.935 -15.32 -15.104-14.982-15.475 -14.85 -15.479-15.347

-14.953-14.615-17.138-16.013-15.258-16.011-14.824-17.579-14.978-15.999-15.737

-15.235-15.073 -17.09 -15.982 -15.45 -14.696-14.918-14.782-16.328 -15.41 -15.496

-15.51 -15.706-16.745-15.357 -15.76 -15.257 -15.0 -15.074-15.028 -17.19 -15.663

-15.38 -15.115-16.866-15.755-15.565-15.404-14.969-15.476 -15.19 -15.727-15.571

Mean dB

(c)

Fig. 3. Targeted mean values of ASR (a), SNR (b) and ldBx (v) on the test set. Proposed penalty method is used for crafting the perturbation. Theperturbation is generated by a single randomly selected sample of each class in order to attack the 1D CNN Gamma model [52] for a specific targetclass.

TABLE 4Transferability of adversarial samples generated by both iterative and penalty methods between pairs of the models for untargeted and targeted

attacking scenarios. The cell (i, j) indicates the ASR of the adversarial sample generated for model i (row) evaluated over model j (column)

.Panel (A): Untargeted attack

Method Model 1D CNN Rand 1D CNN Gamma ENVnet-V2 SincNet SincNet+VGG19

Iterative

1D CNN Rand N/A 0.371 0.175 0.300 0.2001D CNN Gamma 0.340 N/A 0.245 0.627 0.530ENVnet-V2 0.269 0.339 N/A 0.304 0.310SincNet 0.164 0.207 0.152 N/A 0.237SincNet+VGG19 0.176 0.191 0.134 0.228 N/A

Penalty

1D CNN Rand N/A 0.558 0.342 0.675 0.3881D CNN Gamma 0.423 N/A 0.295 0.698 0.672ENVnet-V2 0.390 0.503 N/A 0.490 0.479SincNet 0.142 0.170 0.103 N/A 0.185SincNet+VGG19 0.253 0.192 0.194 0.340 N/A

Panel (B): Targeted attack

Method Model 1D CNN Rand 1D CNN Gamma ENVnet-V2 SincNet SincNet+VGG19

Iterative

1D CNN Rand N/A 0.352 0.186 0.534 0.2321D CNN Gamma 0.285 N/A 0.210 0.476 0.423ENVnet-V2 0.292 0.340 N/A 0.327 0.356SincNet 0.126 0.131 0.105 N/A 0.215SincNet+VGG19 0.185 0.149 0.172 0.237 N/A

Penalty

1D CNN Rand N/A 0.119 0.192 0.130 0.1091D CNN Gamma 0.130 N/A 0.117 0.251 0.134ENVnet-V2 0.127 0.153 N/A 0.146 0.159SincNet 0.103 0.106 0.103 N/A 0.129SincNet+VGG19 0.102 0.113 0.099 0.142 N/A

for attacking a diverse family of end-to-end audio classifiersbased on deep models and the experimental results haveshown that both methods can easily fool all models with ahigh success rate. It is also proved that the proposed penaltymethod converges to an optimal solution.

Although the perturbations produced by both methodscan target the models with high success rate, in listeningtests humans can detect the additive noise but the ad-versarial audios can be recognized as their original labels.Developing effective universal adversarial audio examplesusing the principle of psychoacoustics and auditory mask-

ing [23], [37] to reduce the level of noise introduced by theadversarial perturbation is our current goal. Moreover, itis also an interesting direction for designing appropriateaudio intelligibility metrics to asses the quality of perturbedsamples.

Different from image-based universal adversarial pertur-bations [14], the universal audio perturbations crafted in thisstudy do not perform well in the physical world (playedover-the-air). Combining the methods proposed in this pa-per with recent studies on generating robust adversarialattacks on several transformations on the audio signal [36],

10

[37] may be a promising way to craft robust physical attacksagainst audio systems. Moreover, proposing an ensemble-based method for crafting more transferable attacks is alsoa promising direction for future work [56].

Finally, proposing a defensive mechanism [53], [57], [58],[59] against such universal perturbations is also an impor-tant issue. Using methods like adversarial training [60], [61]against such attacks is also considered as one of our futureresearch directions. Moreover, using the proposed methodsfor targeting sequence-to-sequence models (e.g. speech-to-text) is another interesting research direction.

APPENDIX ASOLVING wi FOR v′

wi =1

2(tanh(x′i + v′) + 1)

Substituting x′i + v′ by bi:

wi =1

2(tanh(bi) + 1)

By using the definition of tanh function:

tanh(bi) =−e−bi + ebi

e−bi + ebi,

we have:

2wi − 1 =−e−bi + ebi

e−bi + ebi.

Substituting ebi by ui:

2wi − 1 =−u−1i + ui

u−1i + ui

⇒ 2wi − 1 =−1 + u2

i

1 + u2i

⇒ 2wi

(1 + u2

i

)−(1 + u2

i

)= −1 + u2

i

⇒ 2wi + 2wiu2i − u2

i = u2i

⇒ 2wi = 2u2i − 2wiu

2i

⇒ wi = u2i (1−wi)

⇒ wi

1−wi= u2

i

ui has two solutions:

⇒ ui = ±√

wi

1−wi

Considering ebi = ui, for the first solution we have:

ebi =

√wi

1−wi

⇒ ebi =

(wi

1−wi

) 12

⇒ ln(ebi

)= ln

(wi

1−wi

) 12

⇒ bi =1

2ln

(wi

1−wi

).

By substituting back bi by x′i + v′, we have:

⇒ x′i + v′ =1

2ln

(wi

1−wi

)⇒ v′ =

1

2ln

(wi

1−wi

)− x′i.

For the second solution we have:

bi =1

2ln

(− wi

1−wi

)However, since 0 ≤ wi ≤ 1 and the natural logarithm of anegative number is undefined, the second solution for ui isinvalid.

APPENDIX BSOLVING v′ FOR v

v′ = arctanh ((2v − 1) ∗ (1− ε)) .

Substituting (2v − 1) ∗ (1− ε) by s:

v′ = arctanh (s)

⇒ tanh(v′) = tanh(arctanh(s))

⇒ tanh(v′) = s.

By substituting back s by (2v − 1) ∗ (1− ε) we have:

tanh(v′) = (2v − 1) ∗ (1− ε)⇒ tanh(v′) = 2v − 2vε− 1 + ε

⇒ tanh(v′) = v(2− 2ε)− 1 + ε

tanh(v′) + 1− ε2− 2ε

= v

APPENDIX CSTATISTICAL TEST

Since the ASRs reported in this paper are the proportionof the successfully attacked samples by the two methods(iterative and penalty), the test of hypothesis concerning twoproportions can be applied here [62]. Compare the ASRsobtained by two proposed methods (iterative and penalty)presented in Table 1 and Table 2 and let pl and ph be theASR of the method with lower ASR and the ASR of themethod with higher ASR, respectively. The statistical test[62] is given by :

Z =pl − ph√

2p(1− p)/m, p =

(Xl + Xh)

2m.

Where Xl and Xh are the number of successfully attackedsamples by the method with lower ASR and by the methodwith higher ASR, respectively. Parameter m is also thenumber of samples in the test set. The intention is to provethe ASR of the two methods i.e., we want to establish that:pl < ph so,

• H0 : pl = ph (Null hypothesis)• Ha : pl < ph (Alternative hypothesis).

The null hypothesis is rejected if Z < −zα, where zα isobtained from a standard normal distribution that is relatedto level of significance, α. If the condition is true we canreject H0 and accept Ha. Table 5 shows pl and ph for all

11

of the target models for each attacking scenario as well asthe corresponding Z values. The ASR of the method whichproduces higher ASR is considered as Xh and vice versa.From the standard normal distribution function [62], weknow that z0.057 = 1.58. Since all of the Z values in Table 5are below -1.58 (Z < −z0.057), we can reject the null hypoth-esis at least with 94.3% significance level (1-α) for all of thetarget models. In other words, the method corresponding toASR of ph is more effective with 94.3% significance level.For most of the models, the attacking method with higherASR, is more effective with much higher significance level.We can conclude that results are statistically significant.

TABLE 5Statistical test of the two attacking methods. pl and ph for all of the

target models for each attacking scenario as well as the correspondingZ values. pl and ph are derived from ASRs reported in Table 1 and

Table 2

Attacking sc. Model pl ph Z

Targeted

1D CNN Rand 0.672 0.854 -8.9461D CNN Gamma 0.795 0.888 -5.323ENVnet-V2 0.767 0.877 -6.011SincNet 0.899 0.971 -6.105SincNet+VGG19 0.872 0.898 -1.703SpchCMD 0.850 0.855 -3.969

Untargeted

1D CNN Rand 0.412 0.876 -20.2571D CNN Gamma 0.737 0.858 -6.294ENVnet-V2 0.669 0.831 -7.820SincNet 0.886 0.919 -2.325SincNet+VGG19 0.838 0.865 -1.587SpchCMD 0.834 0.875 -32.737

APPENDIX DDETAILED TARGETED ATTACK RESULTS

Tables 6 to 11 show the detailed mean vlaues of ASR, SNRand ldBx(v) on the target models in the targeted attackscenario for training and test sets. For Tables 6 to 10 themeasures are reported for each specific target class of Urban-Sound8k [49] and for the Table 11, the measures are reportedfor each specific target class of speech commands dataset[50]. The target classes of Urbansound8K dataset [49] are:Air conditioner (AI), Car horn (CA), Children playing (CH),Dog bark (DO), Drilling (DR), Engine (EN) idling, Gun shot(GU), Jackhammer (JA), Siren (SI), Street music (ST).

APPENDIX ETARGET MODELS

In this study six types of models are targeted. For trainingall models, categorical crossentropy is used as loss function.Adadelta [63] is used for optimizing the parameters of

the models proposed for environmental sound classifica-tion. For training the speech command classification modelRMSprop is also used as the optimization method. In thissection, we present the complete description of the models.

E.1 1D CNN RandTable 12 shows the configuration of 1D CNN Rand [52]. Thismodel consists of five one-dimensional CLs. The numberof kernels of each CL is 16, 32, 64, 128 and 256. The sizeof the feature maps of each CL is 64, 32, 16, 8 and 4.The first, second and fifth CLs are followed by a one-dimensional max-pooling layer of size of eight, eight andfour, respectively. The output of the second pooling layer isused as input to two FC layers on which a drop-out withprobability of 0.5 is applied for both layers [64]. Relu is usedas the activation function for all of the layers. The numberof neurons of the FC layers are 128 and 64. In order toreduce the over-fitting, batch normalization is applied afterthe activation function of each convolution layer [65]. Theoutput of the last FC layer is used as the input to a softmaxlayer with ten neurons for classification.

E.2 1D CNN GammaThis model is similar to 1D CNN Rand except that agammatone filter-bank is used for initialization of the filtersof the first layer of this model [52]. Table 13 shows theconfiguration of this model. The gammatone filters are keptfrozen and they are not trained during the backpropagationprocess. Sixty-four filters are used to decompose the inputsignal into appropriate frequency bands. This filter-bankcovers the frequency range between 100 Hz to 8 kHz. Afterthis layer, batch normalization is also applied [65].

E.3 ENVnet-V2Table 14 shows the architecture of ENVnet-V2 [51]. Thismodel extracts short-time frequency features from audiowaveforms by using two one-dimensional CLs with 32 and64 filters, respectively followed by a one-dimensional max-pooling layer. The model then swaps axes and convolvesin time and frequency domain features using two two-dimensional CLs each with 32 filters. After the CLs, atwo-dimensional max-pooling layer is used. After that, twoother two-dimensional CLs followed by a max-pooling layerare used and finally another two-dimensional CL with 128filters is used. After using two FC layers with 4,096 neurons,a softmax layer is applied for classification. Drop-out withprobability of 0.5 is applied to FC layers [64]. Relu is usedas the activation function for all of the layers.

E.4 SincNetTable 15 shows the architecture of SincNet [19]. In thismodel, 80 sinc functions are used as band-pass filters fordecomposing the audio signal into appropriate frequencybands. After that, two one-dimensional CLs with 80 and60 filters are applied. Layer normalization [66] is used aftereach CL. After each CL, max-pooling is used. Two FC layersfollowed by a softmax layer is used for classification. Drop-out with probability of 0.5 is applied to FC layers [64]. Batchnormalization [65] is used after FC layers. In this model, allhidden layers use leaky-ReLU [67] non-linearity.

12

TABLE 6Mean values of ASR, SNR and ldBx (v) for targeting each label of UrbanSound8k [49] dataset. The target model is 1D CNN Rand. Higher ASRs

are in boldface.

Target ClassesMethod AI CA CH DO DR EN GU JA SI ST

Iterative

ASR training set 0.962 0.900 0.924 0.920 0.941 0.937 0.919 0.907 0.936 0.909ASR test set 0.636 0.741 0.602 0.666 0.716 0.656 0.808 0.662 0.640 0.593SNR (dB) test set 25.396 24.234 25.751 25.256 26.494 25.715 24.689 25.399 25.623 24.651ldBx (v) -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416

Penalty

ASR training set 0.907 0.917 0.916 0.919 0.906 0.932 0.929 0.921 0.906 0.917ASR test set 0.846 0.872 0.807 0.832 0.860 0.890 0.905 0.871 0.822 0.834SNR (dB) test set 24.170 22.492 23.239 22.532 24.371 23.647 24.688 23.445 23.172 22.920ldBx (v) -18.848 -15.553 -15.421 -14.980 -19.406 -18.046 -16.627 -16.752 -16.394 -15.590

TABLE 7Mean values of ASR, SNR and ldBx (v) for targeting each label of UrbanSound8k [49] dataset. The target model is 1D CNN Gamma. Higher ASRs

are in boldface.

Target ClassesMethod AI CA CH DO DR EN GU JA SI ST

Iterative

ASR training set 0.905 0.938 0.952 0.941 0.968 0.936 0.959 0.974 0.941 0.934ASR test set 0.745 0.874 0.744 0.810 0.815 0.746 0.887 0.795 0.772 0.761SNR (dB) test set 21.766 23.091 23.384 24.829 25.339 22.620 24.734 24.530 22.519 23.058ldBx (v) -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416

Penalty

ASR training set 0.916 0.928 0.923 0.912 0.909 0.902 0.926 0.910 0.903 0.900ASR test set 0.871 0.920 0.895 0.891 0.879 0.886 0.900 0.899 0.883 0.855SNR (dB) test set 21.351 21.730 22.392 22.648 24.257 22.141 23.380 24.663 21.401 22.902ldBx (v) -15.560 -15.113 -16.543 -16.950 -21.505 -17.312 -15.828 -21.380 -16.329 -17.229

TABLE 8Mean values of ASR, SNR and ldBx (v) for targeting each label of UrbanSound8k [49] dataset. The target model is ENVnet-V2. Higher ASRs are in

boldface.

Target ClassesMethod AI CA CH DO DR EN GU JA SI ST

Iterative

ASR training set 0.939 0.928 0.921 0.925 0.882 0.923 0.902 0.929 0.901 0.906ASR test set 0.797 0.835 0.697 0.767 0.764 0.763 0.804 0.744 0.747 0.754SNR (dB) test set 22.852 22.932 22.371 23.183 22.702 23.387 20.868 23.355 23.049 22.637ldBx (v) -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416

Penalty

ASR training set 0.926 0.935 0.916 0.928 0.923 0.904 0.929 0.904 0.936 0.919ASR test set 0.873 0.902 0.860 0.873 0.867 0.888 0.895 0.879 0.866 0.863SNR (dB) test set 22.143 21.208 22.241 21.601 22.251 21.917 20.798 22.367 21.971 21.818ldBx (v) -13.571 -14.281 -13.874 -12.977 -15.444 -14.541 -12.176 -15.025 -15.190 -14.896

E.5 SincNet+VGG19Table 16 shows the specification of this architecture. Thismodel uses 227 Sinc filters to extract features from theraw audio signal as it is introduced in SincNet [19]. Afterapplying one-dimensional max-pooling layer of size 218with stride of one, and layer normalization [66], the outputis stacked along time axis to form a 2D representation. Thistime-frequency representation is used as input to a VGG19[54] network followed by a FC layer and softmax layer forclassification. The parameters of the VGG19 are the sameas described in [54] and they are not changed in this study.The output of the VGG19 is used as input of a softmax layerwith ten neurons for classification.

E.6 SpchCMDTable 17 shows the architecture of SpchCMD model. Themodel receives the raw audio input of size of 16,000. Themodel generates 40 patches of overlapped audio chunks of

size of 800. After that, a one-dimensional convolution layerwith 64 filters is used. Eleven depth-wise one-dimensionalconvolution layers with appropriate number of featuremaps are then used to extract suitable information fromthe representations from the last layer. Relu is used as theactivation function for all convolution layers. Bach normal-ization is also used after each convolution layers. After anattention layer and also a global average pooling layer aFC and softmax layer is used to classify the input samplesinto appropriate classes. This model is trained based on theavailable recipe from the Github page of the winner of thechallenge 3. A batch consists of 384 sample from the datasetis used where up to 40% of the batch are samples from thetest set which are annotated based on pseudo labeling. Anensemble of the three best performed models during theinitial experiments on the test is used for the labeling where,the three models predict the same label for the sample. Up

3. https://github.com/see--/speech recognition

13

TABLE 9Mean values of ASR, SNR and ldBx (v) for targeting each label of UrbanSound8k [49] dataset. The target model is SincNet. Higher ASRs are in

boldface.

Target ClassesMethod AI CA CH DO DR EN GU JA SI ST

Iterative

ASR training set 1.000 0.998 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000ASR test set 0.754 0.955 0.931 0.854 0.916 0.919 0.959 0.878 0.905 0.920SNR (dB) test set 28.852 25.245 29.940 30.968 29.004 28.910 26.639 26.966 30.356 29.800Mean ldBx (v) -20.1006 -18.416 -23.405 -24.369 -19.411 -20.230 -18.416 -18.416 -24.250 -23.427

Penalty

ASR training set 0.935 0.974 0.964 0.948 0.985 0.994 0.924 0.970 0.966 0.961ASR test set 0.941 0.990 0.974 0.957 0.987 0.994 0.943 0.975 0.978 0.975SNR (dB) test set 33.329 25.554 32.919 32.652 28.420 28.579 28.437 28.946 32.663 32.616Mean ldBx (v) -35.161 -25.740 -35.174 -35.117 -29.397 -29.583 -29.214 -29.408 -35.149 -35.219

TABLE 10Mean values of ASR, SNR and ldBx (v) for targeting each label of UrbanSound8k [49] dataset. The target model is SincNet+VGG. Higher ASRs

are in boldface.

Target ClassesMethod AI CA CH DO DR EN GU JA SI ST

IterativeASR training set 0.990 0.992 0.993 0.994 0.995 0.972 0.925 0.996 0.999 0.994ASR test set 0.876 0.918 0.855 0.887 0.863 0.831 0.899 0.852 0.864 0.879SNR (dB) test set 25.571 26.434 27.734 25.674 28.890 24.276 22.930 26.621 27.292 26.607ldBx (v) -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416 -18.416

PenaltyASR training set 0.902 0.908 0.903 0.922 0.921 0.903 0.920 0.922 0.924 0.935ASR test set 0.899 0.889 0.898 0.897 0.897 0.881 0.882 0.907 0.919 0.914SNR (dB) test set 26.417 27.191 28.784 27.085 28.448 24.626 22.411 27.330 27.276 27.787ldBx (v) -20.024 -21.601 -23.594 -21.293 -23.944 -18.213 -14.776 -22.494 -22.045 -22.607

to 50% percent of the samples are also augmented by the useof time shifting with the minimum and maximum range of(-2000, 0) moreover, up to 15% of the samples are also silentsignals.

APPENDIX FAUDIO EXAMPLES

Several randomly chosen examples of perturbed audio sam-ples of Urbansound8k dataset [49] and Speech commands[50] datasets are also presented. The audio samples areperturbed based on two presented methods in this study.Targeted and untargeted perturbations are considered. Ta-ble 18 shows a list of the samples of audio samples ofUrbansound8k dataset [49] and Table 19 also shows thelist of audio samples of Speech Commands [50] dataset .Methodology of crafting the samples, target models, SNRof the perturbed samples, ldBx(v) of the perturbed samples,detected class of the sample by each model as well as thetrue class of the samples are also presented.

ACKNOWLEDGMENTS

We thank Dr. Rachel Bouserhal for her insightful feedback.This work was funded by the Natural Sciences and Engi-neering Research Council of Canada (NSERC). This workwas also supported by the NVIDIA GPU Grant Program.

REFERENCES

[1] Z. Zhao, P. Zheng, S. Xu, and X. Wu, “Object detection with deeplearning: A review,” IEEE Trans Neural Netw and Learn Syst, vol. 30,no. 11, pp. 3212–3232, 2019.

[2] M. Yuan, B. Van Durme, and J. L. Ying, “Multilingual anchoring:Interactive topic modeling and alignment across languages,” inAdv in Neural Inf Proc Syst, 2018, pp. 8653–8663.

[3] Z. Yang, Z. Hu, C. Dyer, E. P. Xing, and T. Berg-Kirkpatrick,“Unsupervised text style transfer using language models as dis-criminators,” in Adv in Neural Inf Proc Syst, 2018, pp. 7287–7298.

[4] Y. Jia, Y. Zhang, R. Weiss, Q. Wang, J. Shen, F. Ren, P. Nguyen,R. Pang, I. L. Moreno, Y. Wu et al., “Transfer learning from speakerverification to multispeaker text-to-speech synthesis,” in Adv inNeural Inf Proc Syst, 2018, pp. 4480–4490.

[5] Y.-A. Chung, W.-H. Weng, S. Tong, and J. Glass, “Unsupervisedcross-modal alignment of speech and text embedding spaces,” inAdv in Neural Inf Proc Syst, 2018, pp. 7354–7364.

[6] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Good-fellow, and R. Fergus, “Intriguing properties of neural networks,”in 2nd Intl Conf Learn Repres, 2014.

[7] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and Har-nessing Adversarial Examples,” in Intl Conf Learn Repres, 2015.

[8] N. Carlini and D. Wagner, “Towards evaluating the robustness ofneural networks,” in IEEE Symp Secur Privacy, 2017, pp. 39–57.

[9] N. Akhtar and A. Mian, “Threat of adversarial attacks on deeplearning in computer vision: A survey,” IEEE Access, vol. 6, pp.14 410–14 430, 2018.

[10] K. Grosse, T. A. Trost, M. Mosbach, M. Backes, and D. Klakow,“Adversarial initialization – when your network performs the wayi want,” arXiv preprint 1902.03020, 2019.

[11] K. M. Koerich, M. Esmaeilpour, S. Abdoli, A. S. Britto Jr., andA. L. Koerich, “Cross-representation transferability of adversarialattacks: From spectrograms to audio waveforms,” in Intl J Conf onNeural Netw, 2020, pp. 1–7.

[12] B. Biggio and F. Roli, “Wild patterns: Ten years after the rise ofadversarial machine learning,” Patt Recog, vol. 84, pp. 317–331,2018.

[13] T. Orekondy, B. Schiele, and M. Fritz, “Knockoff nets: Stealingfunctionality of black-box models,” in IEEE Conf Comp Vis PattRecog, 2019, pp. 4954–4963.

14

TAB

LE11

Mea

nva

lues

ofA

SR

,SN

Ran

dl d

Bx(v

)fo

rtar

getin

gea

chla

belo

fspe

ech

com

man

dsda

tase

t[50

]dat

aset

.The

targ

etm

odel

isS

pchC

MD

.Hig

herA

SR

sar

ein

bold

face

.

Targ

etC

lass

esM

etho

dsi

lenc

eun

know

nsh

eila

nine

stop

bed

four

six

dow

nbi

rdm

arvi

nca

tof

fri

ght

seve

nei

ght

upth

ree

happ

ygo

zero

onw

owdo

gye

sfiv

eon

etr

eeho

use

two

left

no

Iter

ativ

e

ASR

trai

ning

set

0.90

30.

902

0.90

30.

901

0.90

80.

902

0.90

00.

902

0.90

40.

906

0.90

10.

902

0.90

10.

902

0.90

30.

902

0.90

20.

900

0.90

00.

901

0.90

00.

903

0.90

00.

903

0.90

50.

904

0.90

40.

901

0.90

50.

904

0.90

00.

900

ASR

test

set

0.87

50.

893

0.85

00.

868

0.85

90.

857

0.87

10.

829

0.84

50.

861

0.84

10.

840

0.84

30.

863

0.83

80.

872

0.84

90.

871

0.83

50.

857

0.86

40.

863

0.85

50.

857

0.85

40.

840

0.87

00.

838

0.86

00.

840

0.85

60.

835

SNR

(dB)

test

set

21.5

5927

.747

31.2

4026

.656

32.1

1625

.345

26.7

2629

.381

30.7

9525

.127

26.9

8428

.068

25.6

4027

.637

29.0

7325

.217

25.7

7527

.901

27.4

0428

.173

29.6

3027

.074

26.0

4326

.052

28.2

6726

.428

27.7

8928

.529

26.8

0028

.596

26.7

8226

.203

l dB x

(v)

-18.

416

-18.

416

-20.

546

-18.

416

-22.

206

-18.

416

-18.

416

-19.

350

-22.

887

-18.

416

-18.

416

-18.

529

-18.

416

-18.

418

-20.

292

-18.

416

-18.

416

-19.

338

-18.

416

-18.

416

-19.

459

-18.

416

-18.

416

-18.

416

-18.

421

-18.

416

-18.

417

-18.

450

-18.

416

-18.

840

-18.

416

-18.

416

Pena

lty

ASR

trai

ning

set

0.90

00.

901

0.90

20.

905

0.90

10.

906

0.90

30.

901

0.90

40.

900

0.90

10.

901

0.90

10.

909

0.90

10.

900

0.90

10.

913

0.90

00.

907

0.91

40.

901

0.90

30.

902

0.90

70.

901

0.90

30.

903

0.90

20.

901

0.90

30.

902

ASR

test

set

0.85

90.

910

0.84

90.

867

0.85

80.

835

0.83

80.

856

0.86

40.

821

0.86

10.

830

0.83

20.

837

0.85

40.

883

0.82

90.

867

0.85

50.

848

0.83

70.

857

0.82

30.

825

0.84

30.

850

0.85

70.

849

0.86

00.

849

0.85

10.

842

SNR

(dB)

test

set

26.7

0726

.693

26.7

1626

.763

26.7

1426

.706

26.7

2226

.728

26.7

4126

.744

26.7

2926

.716

26.7

6526

.720

26.7

1326

.758

26.7

2926

.750

26.7

2626

.738

26.7

2126

.761

26.7

1226

.727

26.7

2726

.735

26.7

1626

.722

26.7

2826

.722

26.7

3026

.769

l dB x

(v)

-19.

767

-19.

913

-24.

018

-20.

482

-22.

100

-20.

514

-22.

881

-21.

749

-21.

484

-20.

034

-20.

996

-23.

751

-20.

333

-23.

642

-20.

663

-20.

971

-17.

679

-22.

893

-19.

322

-22.

805

-23.

252

-19.

994

-22.

219

-21.

555

-22.

725

-20.

931

-23.

301

-23.

257

-20.

861

-20.

510

-20.

723

-22.

056

15

TABLE 121D CNN Rand architecture.

Layer Ksize Stride # of filters Data shape

InputLayer - - - (50,999, 1)Conv1D 64 2 16 (25,468, 16)MaxPooling1D 8 8 16 (3,183, 16)Conv1D 32 2 32 (1,576, 32)MaxPooling1D 8 8 32 (197, 32)Conv1D 16 2 64 (91, 64)Conv1D 8 2 128 (42, 128)Conv1D 4 2 256 (20, 256)MaxPooling1D 4 4 128 (5, 256)FC - - 128 (128)FC - - 64 (64)FC - - 10 (10)

TABLE 131D CNN Gamma architecture

Layer Ksize Stride # of filters Data shape

InputLayer - - - (50,999, 1)Conv1D 512 1 64 (50,488, 64)MaxPooling1D 8 8 64 (6,311, 64)Conv1D 32 2 32 (3,140, 32)MaxPooling1D 8 8 32 (392, 32)Conv1D 16 2 64 (189, 64)Conv1D 8 2 128 (91, 128)Conv1D 4 2 256 (44, 256)MaxPooling1D 4 4 128 (11, 256)FC - - 128 (128)FC - - 64 (64)FC - - 10 (10)

TABLE 14ENVnet-V2 architecture

Layer Ksize Stride # of filters Data shape

InputLayer - - - (50,999, 1)Conv1D 64 2 32 (25,468, 32)Conv1D 16 2 64 (12,727, 64)MaxPooling1D 64 64 64 (198, 64)swapaxes - - - (64, 198, 1)Conv2D (8,8) (1,1) 32 (57, 191, 32)Conv2D (8,8) (1,1) 32 (50, 184, 32)MaxPooling2D (5,3) (5,3) 32 (10, 61, 32)Conv2D (1,4) (1,1) 64 (10, 58, 64)Conv2D (1,4) (1,1) 64 (10, 55, 64)MaxPooling2D (1,2) (1,2) 64 (10, 27, 64)Conv2D (1,2) (1,1) 128 (10, 26, 128)FC - - 4,096 (4,096)FC - - 4,096 (4,096)FC - - 10 (10)

TABLE 15SincNet architecture

Layer Ksize Stride # of filters Data shape

InputLayer - - - (50,999, 1)SincConv1D 251 1 80 (50,749, 80)MaxPooling1D 3 1 80 (16,916, 80)Conv1D 5 1 60 (16,912, 60)MaxPooling1D 3 1 60 (5,637, 60)Conv1D 5 1 60 (5,633, 60)FC - - 128 (128)FC - - 64 (64)FC - - 10 (10)

TABLE 16SincNet+VGG19 architecture

Layer Ksize Stride # of filters Data shape

InputLayer - - - (50,999, 1)SincConv1D 251 1 227 (50,749, 1)MaxPooling1D 218 1 227 (232, 1)Reshape - - - (232, 227, 1)VGG19 [54] - - - (4096)FC - - 10 (10)

TABLE 17SpchCMD architecture

Layer Ksize Stride # of filters Data shape

InputLayer - - - (16,000)Time slice stack 40 20 - (800, 40)Conv1D 3 2 64 (399, 64)DeptwiseConv1D 3 1 128 (397, 128)DeptwiseConv1D 3 1 192 (199, 192)DeptwiseConv1D 3 1 192 (197, 192)DeptwiseConv1D 3 1 256 (99, 256)DeptwiseConv1D 3 1 256 (97, 256)DeptwiseConv1D 3 1 320 (49, 320)DeptwiseConv1D 3 1 320 (47, 320)DeptwiseConv1D 3 1 384 (24, 384)DeptwiseConv1D 3 1 384 (22, 384)DeptwiseConv1D 3 1 448 (11, 448)DeptwiseConv1D 3 1 448 (9, 448)AttentionLayer - - 448 (9, 448)GolobalAvgPooling - - 448 (448)FC - - 32 (32)

[14] S.-M. Moosavi-Dezfooli, A. Fawzi, O. Fawzi, and P. Frossard,“Universal adversarial perturbations,” in IEEE Conf Comp Vis PattRecog, 2017, pp. 1765–1773.

[15] A. Hannun, C. Case, J. Casper, B. Catanzaro, G. Diamos, E. Elsen,R. Prenger, S. Satheesh, S. Sengupta, A. Coates et al., “Deepspeech: Scaling up end-to-end speech recognition,” arXiv preprint1412.5567, 2014.

[16] Y. Hoshen, R. J. Weiss, and K. W. Wilson, “Speech acoustic mod-eling from raw multichannel waveforms,” in IEEE Intl Conf onAcoust Speech Signal Process, 2015, pp. 4624–4628.

[17] A. van den Oord, S. Dieleman, H. Zen, K. Simonyan, O. Vinyals,A. Graves, N. Kalchbrenner, A. W. Senior, and K. Kavukcuoglu,“Wavenet: A generative model for raw audio,” in 9th ISCA SpeechSynth Workshop, 2016, p. 125.

[18] T. N. Sainath, R. J. Weiss, A. Senior, K. W. Wilson, and O. Vinyals,“Learning the speech front-end with raw waveform CLDNNs,” in16th Annual Conf Intl Speech Comm Assoc, 2015, pp. 1–5.

[19] M. Ravanelli and Y. Bengio, “Speaker recognition from raw wave-form with SincNet,” in IEEE Spoken Lang Techn Works, 2018, pp.1021–1028.

[20] N. Zeghidour, N. Usunier, I. Kokkinos, T. Schaiz, G. Synnaeve,and E. Dupoux, “Learning filterbanks from raw speech for phonerecognition,” in IEEE Intl Conf on Acoust Speech Signal Process, 2018,pp. 5509–5513.

[21] N. Zeghidour, N. Usunier, G. Synnaeve, R. Collobert, andE. Dupoux, “End-to-end speech recognition from the raw wave-form,” in 19th Annual Conf Intl Speech Comm Assoc, 2018, pp. 781–785.

[22] N. Carlini and D. Wagner, “Audio adversarial examples: Targetedattacks on speech-to-text,” in IEEE Secur Privacy Worksh, 2018, pp.1–7.

[23] H. Abdullah, K. Warren, V. Bindschaedler, N. Papernot, andP. Traynor, “The faults in our asrs: An overview of attacks againstautomatic speech recognition and speaker identification systems,”arXiv preprint 2007.06622, 2020.