1

Debriefing, Recommendations

CSSE 376, Software Quality Assurance

Rose-Hulman Institute of Technology

May 3, 2007

3

Post-test Questionnaire

Purpose: collect preference information from participant

May also be used to collect background information

4

Likert Scales

Overall, I found the widget easy to use.

a. strongly agree

b. agree

c. neither agree nor disagree

d. disagree

e. strongly disagree
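Before Likert answers can be summed or averaged, each choice has to be turned into a number. The following is a minimal sketch of one possible coding scheme; the 1 to 5 values, the `LIKERT_SCORES` table, the `code_likert` helper, and the sample answers are all assumptions made for illustration, not part of the course material.

```python
# Assumed coding scheme: 5 = most favorable, 1 = least favorable.
LIKERT_SCORES = {
    "strongly agree": 5,
    "agree": 4,
    "neither agree nor disagree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def code_likert(responses):
    """Convert a list of Likert answers (text) to numeric scores."""
    return [LIKERT_SCORES[r.strip().lower()] for r in responses]


# Made-up answers from four participants, for illustration only.
sample = ["agree", "Strongly agree", "disagree", "agree"]
scores = code_likert(sample)
```

For negatively worded statements, the mapping would need to be reversed so that a higher number always means a more favorable opinion.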

5

Semantic Differentials

Circle the number closest to your feelings about the product:

Simple ..3..2..1..0..1..2..3.. Complex

Easy to use ..3..2..1..0..1..2..3.. Hard to use

Familiar ..3..2..1..0..1..2..3.. Unfamiliar

Reliable ..3..2..1..0..1..2..3.. Unreliable
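Because the same digits appear on both sides of the zero, a circled number only makes sense together with the side it was circled on. One way to record this, sketched below, is a signed value from -3 to +3. The sign convention (left-hand adjective negative, right-hand adjective positive), the `signed_score` helper, and the sample data are assumptions for illustration.

```python
from statistics import mean


def signed_score(side, magnitude):
    """Convert a circled number to a signed value in the range -3..3.

    side is "left", "right", or "center"; magnitude is the circled digit.
    Assumed convention: left adjective negative, right adjective positive.
    """
    if magnitude not in (0, 1, 2, 3):
        raise ValueError("magnitude must be 0, 1, 2, or 3")
    if side == "center" or magnitude == 0:
        return 0
    if side == "left":
        return -magnitude
    if side == "right":
        return magnitude
    raise ValueError("side must be 'left', 'right', or 'center'")


# Made-up answers for the Simple/Complex pair from four participants.
simple_complex = [("left", 3), ("left", 1), ("right", 2), ("center", 0)]
signed = [signed_score(side, m) for side, m in simple_complex]
average = mean(signed)  # a negative average leans toward "Simple"
```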

6

Free-form Questions

I found the following aspects of the product particularly easy to use

________________________________

________________________________

________________________________

8

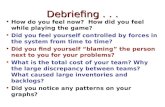

Debriefing

Purpose: find out why the participant behaved the way they did.

Method: interview

May focus on specific behaviors observed during the test.

9

Debriefing Guidelines

1. Review participant's behaviors and post-test answers.

2. Let participant say whatever is on their mind.

3. Start with high-level issues and move on to specific issues.

4. Focus on understanding problems, not on problem-solving.

10

Debriefing Techniques

What did you remember?
• When you finished inserting an appointment, did you notice any change in the information display?

Devil's advocate
• Gee, other people we've brought in have responded in quite the opposite way.

12

Performance Findings

Mean time to complete

Median time to complete

Mean number of errors

Median number of errors

Percentage of participants performing successfully
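The five measures listed above can be computed for a single task with Python's standard `statistics` module. This is only a sketch: the `results` tuples (seconds, error count, success flag) are invented sample data, and the `summary` layout is an assumption.

```python
from statistics import mean, median

# Hypothetical results for one task, one tuple per participant:
# (seconds to complete, number of errors, completed successfully?)
results = [(95, 1, True), (140, 3, False), (80, 0, True), (120, 2, True)]

times = [t for t, _, _ in results]
errors = [e for _, e, _ in results]
successes = sum(1 for _, _, ok in results if ok)

summary = {
    "mean_time": mean(times),
    "median_time": median(times),
    "mean_errors": mean(errors),
    "median_errors": median(errors),
    "success_pct": 100 * successes / len(results),
}
```

Reporting the median next to the mean helps when one unusually slow participant would otherwise dominate the average.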

13

Preference Findings

Limited-choice questions
• sum each answer
• compute averages to compare questions

Free-form questions
• group similar answers
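For limited-choice questions, the two steps (count how often each answer was chosen, then average per question) might look like the sketch below. The question labels and the numerically coded answers are invented, and a 1 to 5 coding is assumed.

```python
from collections import Counter
from statistics import mean

# Hypothetical coded answers (assumed scale: 1 = worst, 5 = best).
answers = {
    "easy to use": [4, 5, 2, 4],
    "easy to learn": [3, 3, 2, 4],
}

# Step 1: count how many participants chose each answer.
tallies = {question: Counter(a) for question, a in answers.items()}

# Step 2: average each question so questions can be compared.
averages = {question: mean(a) for question, a in answers.items()}
```

Free-form answers still have to be read and grouped by hand; once each answer has been given a group label, the same `Counter` approach can tally the groups.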

15

Analysis

Focus on problematic tasks
• 70% of participants failed to complete task successfully

Conduct a source of error analysis
• look for multiple causes
• look at multiple participants

Prioritize problems by criticality
• Criticality = Severity + Probability
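The criticality formula above lends itself to a simple ranking. In the sketch below the problem names and ratings are invented, and both severity and probability are assumed to be rated on a 1 to 4 scale; the slides do not state the scale.

```python
# Hypothetical problems; severity and probability use an assumed 1-4 scale.
problems = [
    {"name": "unclear save button", "severity": 4, "probability": 2},
    {"name": "hidden search field", "severity": 3, "probability": 4},
    {"name": "typo in help text", "severity": 1, "probability": 1},
]

# Criticality = Severity + Probability, as defined on the slide.
for problem in problems:
    problem["criticality"] = problem["severity"] + problem["probability"]

# Most critical problems first.
ranked = sorted(problems, key=lambda p: p["criticality"], reverse=True)
ranked_names = [p["name"] for p in ranked]
```

With these sample ratings, the frequently encountered search problem outranks the more severe but rarer save-button problem.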

16

Recommendations (1/2)

Get some perspective
• wait until a couple days after testing
• collect thoughts from group of testers
• get buy-in from developers

Focus on highest impact areas