

constructing accurate beliefs in spoken dialog systems

Dan Bohus, Alexander I. Rudnicky

Computer Science Department, Carnegie Mellon University

1 abstract

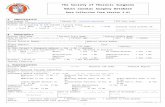

RoomLine

phone-based, mixed-initiative system for conference room reservations

access to live schedules for 13 rooms in 2 buildings; size, location, a/v equipment

We propose a data-driven approach for constructing more accurate beliefs over concept values in spoken dialog systems by integrating information across multiple turns in the conversation. The approach bridges existing work in confidence annotation and correction detection and provides a unified framework for belief updating. It significantly outperforms heuristic rules currently used in most spoken dialog systems.

3 user response analysis

a k-hypotheses + other

4 models. results

5 conclusion

2 problem

3 dataset

b estimated impact on task success

c using information from n-best lists

user study with the RoomLine spoken dialog system

46 participants (first-time users)

10 scenario-based interactions each

449 dialogs, 8278 turns

corpus transcribed and annotated

responses to explicit confirmations

| | Yes | No | Other |
|---|---|---|---|
| Correct (1159) | 94% [93%] | 0% [0%] | 5% [7%] |
| Incorrect (279) | 1% [6%] | 72% [57%] | 27% [37%] |

responses to implicit confirmations

| | Yes | No | Other |
|---|---|---|---|
| Correct (554) | 30% [0%] | 7% [0%] | 63% [100%] |
| Incorrect (229) | 6% [0%] | 33% [15%] | 61% [85%] |

how do users respond to correct and incorrect confirmations?

explicit confirmation

| | User corrects | User does not correct |
|---|---|---|
| Correct | 0 | 1159 |
| Incorrect | 250 | 29 |

implicit confirmation

| | User corrects | User does not correct |
|---|---|---|
| Correct | 2 | 552 |
| Incorrect | 111 | 118 |
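The counts in the two tables above can be turned into correction rates with a few lines of arithmetic. The sketch below is only an illustration: the dictionary layout and the `correction_rate` helper are hypothetical names, and the numbers are copied from the tables.

```python
# Counts copied from the tables above: (user corrects, user does not correct).
counts = {
    "explicit": {"correct": (0, 1159), "incorrect": (250, 29)},
    "implicit": {"correct": (2, 552), "incorrect": (111, 118)},
}


def correction_rate(corrects: int, does_not_correct: int) -> float:
    """Fraction of confirmations after which the user corrected the system."""
    return corrects / (corrects + does_not_correct)


# Correction rate for every (confirmation type, system value status) cell.
rates = {
    kind: {status: correction_rate(*pair) for status, pair in rows.items()}
    for kind, rows in counts.items()
}

for kind, by_status in rates.items():
    for status, rate in by_status.items():
        print(f"{kind:8s} {status:9s} {rate:.1%}")
```

Read this way, users correct roughly 90% of incorrect explicit confirmations (250 of 279) but only about 48% of incorrect implicit confirmations (111 of 229).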

users interact strategically

| | ~ correct later | correct later |
|---|---|---|
| ~critical | 55 | 2 |
| critical | 14 | 47 |
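A quick check on the table above, as a sketch under two assumptions: "~" is read as "not", and the four cells are read as a breakdown of one set of cases. The four cells add up to 118, the same number as the incorrect implicit confirmations that users did not correct; treating the two as the same set is an inference from the totals, not something the poster states. The variable names are hypothetical.

```python
# Cells copied from the table above: (does not correct later, corrects later).
later = {"~critical": (55, 2), "critical": (14, 47)}

# Total number of cases covered by the table.
total = sum(no + yes for no, yes in later.values())

# Share of cases in each row that the user corrects later.
later_rate = {row: yes / (no + yes) for row, (no, yes) in later.items()}

print(total)  # 118
for row, rate in later_rate.items():
    print(f"{row:10s} {rate:.1%}")
```

Under that reading, users come back to correct most of the critical errors (47 of 61) and almost none of the non-critical ones (2 of 57).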

how often users correct the system?

[figure: bar charts for explicit confirmation, implicit confirmation, and unplanned implicit confirmation; vertical axis in percent; bar values in extraction order: 31.15, 8.41, 3.57, 2.71 | 30.40, 23.37, 16.15, 15.33 | 15.40, 14.36, 12.64, 10.37]

proposed a data-driven approach for constructing more accurate beliefs in task-oriented spoken dialog systems

bridge insights from detection of misunderstandings and corrections into a unified belief updating framework

model significantly outperforms heuristics currently used in most spoken dialog systems

features

initial: initial confidence score of top hypothesis, # of initial hypotheses, concept type (bool / non-bool), concept identity;

system action: indicators describing other system actions in conjunction with current confirmation;

user response

acoustic / prosodic: acoustic and language scores, duration, pitch (min, max, mean, range, std.dev, min and max slope, plus normalized versions), voiced-to-unvoiced ratio, speech rate, initial pause;

lexical: number of words, lexical terms highly correlated with corrections (MI);

grammatical: number of slots (new, repeated), parse fragmentation, parse gaps;

dialog: dialog state, turn number, expectation match, new value for concept, timeout, barge-in.

evaluation

initial (error rate in system beliefs before the update)

heuristic (error rate in system beliefs after the update, using the heuristic update rules)

proposed model (error rate of the proposed logistic model tree)

oracle (oracle error rate)

model

$\mathrm{Conf}_{upd}(th_C) \leftarrow M(\mathrm{Conf}_{init}(th_C), SA(C), R)$

logistic model tree [one for each system action]

1-level deep, root splits on answer-type (YES / NO / other)

leaves contain stepwise logistic regression models

sample efficient, feature selection

good probability outputs (minimize cross entropy between model predictions and reality)
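The shape of such a model can be sketched as follows. This is a minimal illustration, not the authors' implementation: it covers a single system action, uses plain gradient-descent logistic regression in the leaves instead of stepwise regression (so it does no feature selection), and the class name, the single feature (initial confidence), and the toy data are all invented for the example.

```python
import math
from collections import defaultdict


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(rows, labels, steps=2000, lr=0.1):
    """Fit weights (bias first) by batch gradient descent on cross entropy."""
    w = [0.0] * (len(rows[0]) + 1)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            err = sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))) - y
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad)]
    return w


class OneLevelLogisticTree:
    """Root splits on answer type; each leaf holds its own logistic model."""

    def fit(self, answer_types, rows, labels):
        by_leaf = defaultdict(lambda: ([], []))
        for a, x, y in zip(answer_types, rows, labels):
            by_leaf[a][0].append(x)
            by_leaf[a][1].append(y)
        self.leaves = {a: fit_logistic(xs, ys) for a, (xs, ys) in by_leaf.items()}
        return self

    def predict_proba(self, answer_type, x):
        """Updated confidence that the top hypothesis is correct."""
        w = self.leaves[answer_type]
        return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))


# Invented toy data: (answer type, [initial confidence], top hypothesis correct?)
toy = [
    ("YES", [0.3], 1), ("YES", [0.5], 1), ("YES", [0.8], 1), ("YES", [0.4], 0),
    ("NO", [0.3], 0), ("NO", [0.6], 0), ("NO", [0.9], 0), ("NO", [0.8], 1),
    ("OTHER", [0.2], 0), ("OTHER", [0.4], 0), ("OTHER", [0.7], 1), ("OTHER", [0.9], 1),
]
tree = OneLevelLogisticTree().fit(*zip(*toy))
print(tree.predict_proba("YES", [0.6]), tree.predict_proba("NO", [0.6]))
```

With the same initial confidence, the leaf for a YES answer yields a higher updated confidence than the leaf for a NO answer, which is the behaviour the root split is meant to capture.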

[figure: bar charts of error rates in system beliefs, including a panel for unexpected updates; series: initial, heuristic, proposed model (basic feature set), proposed model (basic + priors), oracle; bar values in extraction order: 16.17%, 5.52%, 30.83%, 6.06%, 7.86% | 26.16%, 17.56%, 30.46%, 21.45%, 22.69% | 15.15%, 10.72%, 15.49%, 14.02%, 12.95% | 9.49%, 6.08% | 98.14%, 9.64%, 45.03%, 19.23%, 80.00%, 25.66%]

As a prerequisite for increased robustness and better decision making, dialog systems must be able to accurately assess the reliability of the information they use. Typically, recognition confidence scores provide an initial assessment of the reliability of the information obtained from the user. Ideally, a system should leverage information available in subsequent turns to update and improve the accuracy of its beliefs.

belief updating problem: given an initial belief over a concept $\mathrm{Belief}_t(C)$, a system action $SA(C)$ and a user response $R$, compute the updated belief $\mathrm{Belief}_{t+1}(C)$

explicit confirmation (correct value)

S: starting at what time do you need the room?
U: [STARTING AT TEN A M / 0.45]
starting at ten a.m.
start-time = {10:00 / 0.45}
S: did you say you wanted the room starting at ten a.m.?
U: [GUEST UNTIL ONE / 0.89]
yes until noon
start-time = {?}

implicit confirmation (correct value)

S: for when do you need the room?
U: [NEXT THURSDAY / 0.75]
next Thursday
date = {2004-08-26 / 0.75}
S: a room for Thursday, August 26th … starting at what time do you need the room?
U: [FIVE TO SEVEN P_M / 0.58]
five to seven p.m.
date = {?}

implicit confirmation (incorrect value)

S: how may I help you?
U: [THREE TO RESERVE A ROOM / 0.65]
I’d like to reserve a room
start-time = {15:00 / 0.65}
S: starting at three p.m. … for which day do you need the conference room?
U: [CAN YOU DETAILS TIME / NONUNDER.(0.0)]
I need a different time
start-time = {?}

belief representation: k hypotheses + other; multinomial generalized linear model

system actions: all actions

explicit confirmation

implicit confirmation

unplanned impl. confirmation

request [system asks for the value for a concept]

unexpected update [system received a value for a concept without asking for it, e.g. as a result of a misrecognition, or of the user over-answering or attempting a topic shift]

features: added prior information on concepts; priors constructed manually

belief representation

most accurately: probability distribution over the set of possible values

but: system is not likely to “hear” more than 3 or 4 conflicting values

in our data, the maximum number of hypotheses for a concept accumulated through interaction was 3; the system heard more than 1 hypothesis for a concept in only 6.9% of cases

compressed belief representation: k hypotheses + other

for now, k = 1: top hypothesis + other [see current and future work for extensions]

for now, only updates after system confirmation actions
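One way to picture the compressed representation is as a small dictionary holding the k most likely values plus a single "other" bucket. The sketch below is an assumption-laden illustration: the function name `compress_belief`, the dictionary layout, and the choice to give "other" all remaining probability mass are hypothetical, not taken from the poster.

```python
from typing import Dict


def compress_belief(belief: Dict[str, float], k: int = 1) -> Dict[str, float]:
    """Keep the k most probable values and lump all remaining mass into 'other'.

    Assumes no concept value is itself literally named 'other'.
    """
    ranked = sorted(belief.items(), key=lambda kv: kv[1], reverse=True)
    compressed = dict(ranked[:k])
    # Whatever mass is not held by the kept hypotheses goes to 'other'.
    compressed["other"] = max(0.0, 1.0 - sum(compressed.values()))
    return compressed


# A single recognized value with confidence 0.45, as in start-time = {10:00 / 0.45}.
single = compress_belief({"10:00": 0.45})

# Three conflicting values heard over the interaction (invented numbers), k = 1.
conflicting = compress_belief({"10:00": 0.5, "13:00": 0.3, "12:00": 0.1}, k=1)

print(single)
print(conflicting)
```

With k = 1 the belief reduces to one number, the confidence of the top hypothesis, which is what the confirmation-based update operates on.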

compressed belief updating problem: given an initial confidence score for the top hypothesis h for a concept C, $\mathrm{Conf}_{init}(th_C)$, construct an updated confidence score for the hypothesis h, $\mathrm{Conf}_{upd}(th_C)$, in light of the system confirmation action $SA(C)$ and the follow-up user response $R$

how does the accuracy of the belief updating model affect task success?

relate the accuracy of the belief updates to overall task success through a logistic regression model

accuracy of belief updates: measured as AVG-LIK of the correct hypothesis

word-error-rate acts as a confounding factor

model: P(Task Success = 1) ← α + β·WER + γ·AVG-LIK

fitted model using 443 data-points (dialog sessions)

β, γ capture the impact of WER and AVG-LIK on overall task success
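Since the model is a logistic regression, the linear term α + β·WER + γ·AVG-LIK is passed through the logistic function to obtain a probability. The sketch below shows that computation only; the poster does not give the fitted coefficients, so `ALPHA`, `BETA` and `GAMMA` are made-up placeholders whose signs merely assume that higher WER hurts and higher AVG-LIK helps. WER is taken in percent (0 to 100), matching the axis of the accompanying plot.

```python
import math


def p_task_success(wer: float, avg_lik: float,
                   alpha: float, beta: float, gamma: float) -> float:
    """Logistic regression: P(Task Success = 1) given WER and AVG-LIK."""
    z = alpha + beta * wer + gamma * avg_lik
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients for illustration only; NOT the fitted values.
ALPHA, BETA, GAMMA = 0.0, -0.05, 3.0

# Same word-error-rate, two different belief-update accuracies.
low = p_task_success(wer=30.0, avg_lik=0.5, alpha=ALPHA, beta=BETA, gamma=GAMMA)
high = p_task_success(wer=30.0, avg_lik=0.9, alpha=ALPHA, beta=BETA, gamma=GAMMA)
print(f"avg.lik. = 0.5: {low:.3f}   avg.lik. = 0.9: {high:.3f}")
```

Holding WER fixed and varying AVG-LIK in this way is how one would read off the estimated effect of more accurate belief updates on task success.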

[figure: probability of task success (0 to 1) as a function of word-error-rate (0 to 100), in two panels, natives and non-natives; curves for avg.lik. = 0.5, 0.6, 0.7, 0.8, 0.9; the average word-error rate, the current heuristic, and the proposed model are marked in each panel]

currently: using only the top hypothesis from the recognizer

next: extract more information from n-best list or lattices